The following tutorials are created to highlight specific features in Wallaroo.

Wallaroo Features Tutorials

- 1: Hot Swap Models Tutorial

- 2: Inference URL Tutorials

- 2.1: Wallaroo SDK Inferencing with Pipeline Inference URL Tutorial

- 2.2: Wallaroo MLOps API Inferencing with Pipeline Inference URL Tutorial

- 3: Large Language Model with GPU Pipeline Deployment in Wallaroo Demonstration

- 4: Onnx Deployment with Multi Input-Output Tutorial

- 5: Wallaroo Assays Model Insights Tutorial

- 6: Computer Vision Pipeline Logs MLOps API Tutorial

- 7: Pipeline Logs MLOps API Tutorial

- 8: Pipeline Logs Tutorial

- 9: Statsmodel Forecast with Wallaroo Features

- 9.1: Statsmodel Forecast with Wallaroo Features: Deploy and Test Infer

- 9.2: Statsmodel Forecast with Wallaroo Features: Parallel Inference

- 9.3: Statsmodel Forecast with Wallaroo Features: Data Connection

- 9.4: Statsmodel Forecast with Wallaroo Features: ML Workload Orchestration

- 10: Tags Tutorial

1 - Hot Swap Models Tutorial

This tutorial and the assets can be downloaded as part of the Wallaroo Tutorials repository.

Model Hot Swap Tutorial

One of the biggest challenges facing organizations once they have trained a model is deploying it: gathering the resources, configuring MLOps, and preparing systems to run inferences.

The next biggest challenge? Replacing the model while keeping the existing production systems running.

This tutorial demonstrates how Wallaroo model hot swap can update a pipeline step with a new model using a single command. This lets organizations keep their production systems running while changing an ML model. The change takes only milliseconds, and any inference requests received during that time are processed after the hot swap completes.

This example and sample data comes from the Machine Learning Group’s demonstration on Credit Card Fraud detection.

This tutorial provides the following:

- Models:

- rf_model.onnx: The champion model that has been used in this environment for some time.

- gbr_model.onnx and xgb_model.onnx: Rival models that will be swapped in to replace the champion model.

- Data:

- xtest-1.df.json and xtest-1k.df.json: DataFrame JSON inference inputs with 1 input and 1,000 inputs.

- xtest-1.arrow and xtest-1k.arrow: Apache Arrow inference inputs with 1 input and 1,000 inputs.

Reference

For more information about Wallaroo and related features, see the Wallaroo Documentation Site.

Prerequisites

- A deployed Wallaroo instance

- The following Python libraries installed:

Steps

This tutorial performs the following steps:

- Connect to a Wallaroo instance.

- Create a workspace and pipeline.

- Upload the three models to the workspace.

- Deploy the pipeline with the rf_model.onnx model as a pipeline step.

- Perform sample inferences.

- Hot swap and replace the existing model with the gbr_model.onnx model while keeping the pipeline deployed.

- Conduct additional inferences to demonstrate the model hot swap was successful.

- Hot swap again with xgb_model.onnx, and perform more sample inferences.

- Undeploy the pipeline and return the resources back to the Wallaroo instance.

Load the Libraries

Load the Python libraries used to connect and interact with the Wallaroo instance.

import wallaroo

from wallaroo.object import EntityNotFoundError

# to display dataframe tables

from IPython.display import display

# used to display dataframe information without truncating

import pandas as pd

pd.set_option('display.max_colwidth', None)

import pyarrow as pa

Connect to the Wallaroo Instance

The first step is to connect to Wallaroo through the Wallaroo client. The Python library is included in the Wallaroo install and available through the JupyterHub interface provided with your Wallaroo environment.

This is accomplished using the wallaroo.Client() command, which provides a URL to grant the SDK permission to your specific Wallaroo environment. When displayed, enter the URL into a browser and confirm permissions. Store the connection into a variable that can be referenced later.

If logging into the Wallaroo instance through the internal JupyterHub service, use wl = wallaroo.Client(). For more information on Wallaroo Client settings, see the Client Connection guide.

# Login through local Wallaroo instance

wl = wallaroo.Client()

Set the Variables

The following variables are used in the later steps for creating the workspace, pipeline, and uploading the models. Modify them according to your organization’s requirements.

For this tutorial, we'll use the SDK below to create our workspace, assign it as our current workspace, and create our pipeline. We'll also set the model and pipeline names in one spot, so they are easy to change to whatever best fits your organization's standards.

To allow this tutorial to be run multiple times or by multiple users in the same Wallaroo instance, a random 4-character prefix is added to the workspace, pipeline, and model names.

import string
import random

# make a random 4 character prefix
prefix = ''.join(random.choice(string.ascii_lowercase) for i in range(4))

workspace_name = f'{prefix}hotswapworkspace'
pipeline_name = f'{prefix}hotswappipeline'
original_model_name = f'{prefix}housingmodelcontrol'
original_model_file_name = './models/rf_model.onnx'
replacement_model_name01 = f'{prefix}gbrhousingchallenger'
replacement_model_file_name01 = './models/gbr_model.onnx'
replacement_model_name02 = f'{prefix}xgbhousingchallenger'
replacement_model_file_name02 = './models/xgb_model.onnx'

def get_workspace(name):
    # return the existing workspace with this name, or create it
    workspace = None
    for ws in wl.list_workspaces():
        if ws.name() == name:
            workspace = ws
    if workspace is None:
        workspace = wl.create_workspace(name)
    return workspace

def get_pipeline(name):
    # return the existing pipeline with this name, or build a new one
    try:
        pipeline = wl.pipelines_by_name(name)[0]
    except EntityNotFoundError:
        pipeline = wl.build_pipeline(name)
    return pipeline
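The prefix and naming logic above can be wrapped in a small helper so that every resource name shares one prefix. This is a minimal sketch: the helper name `make_resource_names` and its optional `rng` parameter (used to make the output reproducible) are hypothetical additions, not part of the Wallaroo SDK.

```python
import random
import string


def make_resource_names(rng=None, length=4):
    """Hypothetical helper: build prefixed names for the hot swap tutorial."""
    if rng is None:
        rng = random.Random()
    # random lowercase prefix, as in the tutorial code
    prefix = ''.join(rng.choice(string.ascii_lowercase) for _ in range(length))
    return {
        "workspace_name": f"{prefix}hotswapworkspace",
        "pipeline_name": f"{prefix}hotswappipeline",
        "original_model_name": f"{prefix}housingmodelcontrol",
        "replacement_model_name01": f"{prefix}gbrhousingchallenger",
        "replacement_model_name02": f"{prefix}xgbhousingchallenger",
    }


# Seeding the generator gives the same names on every run, which can help when
# re-running the notebook; omit rng for a fresh random prefix.
names = make_resource_names(random.Random(42))
print(names["workspace_name"])
```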

Create the Workspace

We will create a workspace based on the variable names set above and set it as the current workspace. New pipelines will be created in this workspace, and uploaded models will be stored in it for this session.

Once set, the pipeline will be created.

workspace = get_workspace(workspace_name)

wl.set_current_workspace(workspace)

pipeline = get_pipeline(pipeline_name)

pipeline

| name | puaehotswappipeline |

|---|---|

| created | 2023-10-31 19:51:30.785109+00:00 |

| last_updated | 2023-10-31 19:51:30.785109+00:00 |

| deployed | (none) |

| arch | None |

| tags | |

| versions | 0f1ccb35-b6c4-4bf6-8467-2e1722c57eb8 |

| steps | |

| published | False |

Upload Models

We can now upload all three models. Only one model at a time will be added as a pipeline step, and inference requests submitted to the pipeline are processed by that model.

original_model = (wl.upload_model(original_model_name,
                                  original_model_file_name,
                                  framework=wallaroo.framework.Framework.ONNX)
                  .configure(tensor_fields=["tensor"])
                  )

replacement_model01 = (wl.upload_model(replacement_model_name01,
                                       replacement_model_file_name01,
                                       framework=wallaroo.framework.Framework.ONNX)
                       .configure(tensor_fields=["tensor"])
                       )

replacement_model02 = (wl.upload_model(replacement_model_name02,
                                       replacement_model_file_name02,
                                       framework=wallaroo.framework.Framework.ONNX)
                       .configure(tensor_fields=["tensor"])
                       )

wl.list_models()

| Name | # of Versions | Owner ID | Last Updated | Created At |

|---|---|---|---|---|

| puaexgbhousingchallenger | 1 | "" | 2023-10-31 19:51:36.363832+00:00 | 2023-10-31 19:51:36.363832+00:00 |

| puaegbrhousingchallenger | 1 | "" | 2023-10-31 19:51:34.715224+00:00 | 2023-10-31 19:51:34.715224+00:00 |

| puaehousingmodelcontrol | 1 | "" | 2023-10-31 19:51:33.070235+00:00 | 2023-10-31 19:51:33.070235+00:00 |
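The three upload calls above repeat the same pattern, so they can also be written as a loop. The sketch below assumes only the calls already used in this tutorial (`upload_model(name, path, framework=...)` followed by `.configure(tensor_fields=["tensor"])`). The helper name `upload_models` is hypothetical, and the framework value is passed in so the function can be exercised without a Wallaroo instance.

```python
def upload_models(client, model_files, framework, tensor_fields=("tensor",)):
    """Hypothetical helper: upload each (name -> file path) pair and configure it.

    `client` is expected to behave like the Wallaroo client `wl` used above.
    """
    uploaded = {}
    for name, path in model_files.items():
        model = client.upload_model(name, path, framework=framework)
        # same tensor field configuration as the individual calls above
        uploaded[name] = model.configure(tensor_fields=list(tensor_fields))
    return uploaded


# Usage in this tutorial (requires a Wallaroo connection):
# models = upload_models(
#     wl,
#     {
#         original_model_name: original_model_file_name,
#         replacement_model_name01: replacement_model_file_name01,
#         replacement_model_name02: replacement_model_file_name02,
#     },
#     framework=wallaroo.framework.Framework.ONNX,
# )
```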

Add Model to Pipeline Step

With the models uploaded, we will add the original model as a pipeline step, then deploy the pipeline so it is available for performing inferences.

pipeline.add_model_step(original_model)

pipeline

| name | puaehotswappipeline |

|---|---|

| created | 2023-10-31 19:51:30.785109+00:00 |

| last_updated | 2023-10-31 19:51:30.785109+00:00 |

| deployed | (none) |

| arch | None |

| tags | |

| versions | 0f1ccb35-b6c4-4bf6-8467-2e1722c57eb8 |

| steps | |

| published | False |

pipeline.deploy()

| name | puaehotswappipeline |

|---|---|

| created | 2023-10-31 19:51:30.785109+00:00 |

| last_updated | 2023-10-31 19:51:39.783255+00:00 |

| deployed | True |

| arch | None |

| tags | |

| versions | b1e80203-be2b-4a78-9e24-3e56d5d10d21, 0f1ccb35-b6c4-4bf6-8467-2e1722c57eb8 |

| steps | puaehousingmodelcontrol |

| published | False |

pipeline.status()

{'status': 'Running',

'details': [],

'engines': [{'ip': '10.244.3.81',

'name': 'engine-696fcc57d7-7m9t7',

'status': 'Running',

'reason': None,

'details': [],

'pipeline_statuses': {'pipelines': [{'id': 'puaehotswappipeline',

'status': 'Running'}]},

'model_statuses': {'models': [{'name': 'puaehousingmodelcontrol',

'version': 'bc9e5117-5ab0-4da8-971c-06854137243f',

'sha': 'e22a0831aafd9917f3cc87a15ed267797f80e2afa12ad7d8810ca58f173b8cc6',

'status': 'Running'}]}}],

'engine_lbs': [{'ip': '10.244.4.102',

'name': 'engine-lb-584f54c899-c9z75',

'status': 'Running',

'reason': None,

'details': []}],

'sidekicks': []}

Verify the Model

The pipeline is deployed with our model. The following verifies that the model is operating correctly by submitting two sample houses, a typical house and a large house, and displaying the model's prediction in out.variable.

normal_input = pd.DataFrame.from_records({"tensor": [[4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0, 47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0]]})

result = pipeline.infer(normal_input)

display(result)

| | time | in.tensor | out.variable | check_failures |

|---|---|---|---|---|

| 0 | 2023-10-31 19:51:52.769 | [4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0, 47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0] | [718013.7] | 0 |

large_house_input = pd.DataFrame.from_records({'tensor': [[4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0, 47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0]]})

large_house_result = pipeline.infer(large_house_input)

display(large_house_result)

| | time | in.tensor | out.variable | check_failures |

|---|---|---|---|---|

| 0 | 2023-10-31 19:51:53.171 | [4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0, 47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0] | [1514079.4] | 0 |
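Both inputs above are single-row DataFrames with one `tensor` column holding the feature list. A small helper can build such inputs from plain lists, including several rows at once. This is a sketch: `make_tensor_input` is a hypothetical name, and submitting a multi-row DataFrame in a single `pipeline.infer` call is assumed to work for this model rather than shown in this tutorial.

```python
import pandas as pd


def make_tensor_input(rows):
    """Hypothetical helper: one DataFrame row per feature list, in a 'tensor' column."""
    # each element of rows is the list of feature values for one inference
    return pd.DataFrame({"tensor": [list(map(float, row)) for row in rows]})


normal_house = [4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0,
                47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0]
large_house = [4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0,
               47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0]

both_houses = make_tensor_input([normal_house, large_house])
print(both_houses.shape)  # (2, 1)
# With the deployed pipeline: pipeline.infer(both_houses)
```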

Replace the Model

The pipeline is currently deployed and is able to handle inferences. The model will now be replaced without having to undeploy the pipeline. This is done using the pipeline method replace_with_model_step(index, model). Steps start at 0, so the method called below will replace step 0 in our pipeline with the replacement model.

As an exercise, this deployment can be performed while inferences are actively being submitted to the pipeline to show how quickly the swap takes place.
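The swap-then-infer sequence used in this tutorial can be expressed as a loop over candidate models. The sketch below relies only on the pipeline methods shown in this tutorial (`replace_with_model_step(index, model)`, `deploy()`, and `infer()`) and on the `out.variable` output column. The helper name `swap_and_infer` is hypothetical; it always replaces step 0 and keeps the pipeline deployed between models.

```python
def swap_and_infer(pipeline, models, input_df, output_column="out.variable"):
    """Hypothetical helper: hot swap step 0 with each model and record its first output."""
    outputs = []
    for model in models:
        # replace the model in step 0 while the pipeline stays deployed
        pipeline.replace_with_model_step(0, model).deploy()
        result = pipeline.infer(input_df)
        outputs.append(result.loc[0, output_column])
    return outputs


# Usage (requires the deployed pipeline and uploaded models from this tutorial):
# swap_and_infer(pipeline, [replacement_model01, replacement_model02], normal_input)
```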

pipeline.replace_with_model_step(0, replacement_model01).deploy()

| name | puaehotswappipeline |

|---|---|

| created | 2023-10-31 19:51:30.785109+00:00 |

| last_updated | 2023-10-31 19:51:53.854214+00:00 |

| deployed | True |

| arch | None |

| tags | |

| versions | 90a41e8d-2b33-445c-a8af-411840377674, b1e80203-be2b-4a78-9e24-3e56d5d10d21, 0f1ccb35-b6c4-4bf6-8467-2e1722c57eb8 |

| steps | puaehousingmodelcontrol |

| published | False |

Verify the Swap

To verify the swap, we’ll submit the same inferences and display the result. Note that out.variable has a different output than with the original model.

normal_input = pd.DataFrame.from_records({"tensor": [[4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0, 47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0]]})

result02 = pipeline.infer(normal_input)

display(result02)

| | time | in.tensor | out.variable | check_failures |

|---|---|---|---|---|

| 0 | 2023-10-31 19:51:58.130 | [4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0, 47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0] | [704901.9] | 0 |

large_house_input = pd.DataFrame.from_records({'tensor': [[4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0, 47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0]]})

large_house_result02 = pipeline.infer(large_house_input)

display(large_house_result02)

| | time | in.tensor | out.variable | check_failures |

|---|---|---|---|---|

| 0 | 2023-10-31 19:51:58.541 | [4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0, 47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0] | [1981238.0] | 0 |

Replace the Model Again

Let’s do one more hot swap, this time with our replacement_model02, then get some test inferences.

pipeline.replace_with_model_step(0, replacement_model02).deploy()

| name | puaehotswappipeline |

|---|---|

| created | 2023-10-31 19:51:30.785109+00:00 |

| last_updated | 2023-10-31 19:51:59.247708+00:00 |

| deployed | True |

| arch | None |

| tags | |

| versions | ecf69506-b73a-455d-83de-b81a5c815b36, 90a41e8d-2b33-445c-a8af-411840377674, b1e80203-be2b-4a78-9e24-3e56d5d10d21, 0f1ccb35-b6c4-4bf6-8467-2e1722c57eb8 |

| steps | puaehousingmodelcontrol |

| published | False |

normal_input = pd.DataFrame.from_records({"tensor": [[4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0, 47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0]]})

result03 = pipeline.infer(normal_input)

display(result03)

| | time | in.tensor | out.variable | check_failures |

|---|---|---|---|---|

| 0 | 2023-10-31 19:52:03.710 | [4.0, 2.5, 2900.0, 5505.0, 2.0, 0.0, 0.0, 3.0, 8.0, 2900.0, 0.0, 47.6063, -122.02, 2970.0, 5251.0, 12.0, 0.0, 0.0] | [659806.0] | 0 |

large_house_input = pd.DataFrame.from_records({'tensor': [[4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0, 47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0]]})

large_house_result03 = pipeline.infer(large_house_input)

display(large_house_result03)

| | time | in.tensor | out.variable | check_failures |

|---|---|---|---|---|

| 0 | 2023-10-31 19:52:04.085 | [4.0, 3.0, 3710.0, 20000.0, 2.0, 0.0, 2.0, 5.0, 10.0, 2760.0, 950.0, 47.6696, -122.261, 3970.0, 20000.0, 79.0, 0.0, 0.0] | [2176827.0] | 0 |

Compare Outputs

We’ll display the outputs of our inferences through the different models for comparison.

display([original_model_name, result.loc[0, "out.variable"]])

display([replacement_model_name01, result02.loc[0, "out.variable"]])

display([replacement_model_name02, result03.loc[0, "out.variable"]])

['puaehousingmodelcontrol', [718013.7]]

['puaegbrhousingchallenger', [704901.9]]

['puaexgbhousingchallenger', [659806.0]]

display([original_model_name, large_house_result.loc[0, "out.variable"]])

display([replacement_model_name01, large_house_result02.loc[0, "out.variable"]])

display([replacement_model_name02, large_house_result03.loc[0, "out.variable"]])

['puaehousingmodelcontrol', [1514079.4]]

['puaegbrhousingchallenger', [1981238.0]]

['puaexgbhousingchallenger', [2176827.0]]
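To put the hot swap results side by side, the outputs displayed above can be collected into a small table along with each challenger's percentage difference from the control model. The numbers below are copied from this tutorial's run; `compare_to_control` is a hypothetical helper.

```python
import pandas as pd


def compare_to_control(outputs, control_name):
    """Hypothetical helper: tabulate predictions and % difference from the control model."""
    df = pd.DataFrame(
        [(name, value[0]) for name, value in outputs.items()],
        columns=["model", "prediction"],
    )
    control = df.loc[df["model"] == control_name, "prediction"].iloc[0]
    df["pct_vs_control"] = (df["prediction"] - control) / control * 100
    return df


# Predictions for the large house input, as displayed above
large_house_outputs = {
    "puaehousingmodelcontrol": [1514079.4],
    "puaegbrhousingchallenger": [1981238.0],
    "puaexgbhousingchallenger": [2176827.0],
}
print(compare_to_control(large_house_outputs, "puaehousingmodelcontrol"))
```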

Undeploy the Pipeline

With the tutorial complete, the pipeline is undeployed to return the resources back to the Wallaroo instance.

pipeline.undeploy()

| name | puaehotswappipeline |

|---|---|

| created | 2023-10-31 19:51:30.785109+00:00 |

| last_updated | 2023-10-31 19:51:59.247708+00:00 |

| deployed | False |

| arch | None |

| tags | |

| versions | ecf69506-b73a-455d-83de-b81a5c815b36, 90a41e8d-2b33-445c-a8af-411840377674, b1e80203-be2b-4a78-9e24-3e56d5d10d21, 0f1ccb35-b6c4-4bf6-8467-2e1722c57eb8 |

| steps | puaehousingmodelcontrol |

| published | False |
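Because a deployed pipeline holds cluster resources until `undeploy()` is called, it can help to pair the two calls so the undeploy also runs if an inference raises an error. This is a minimal sketch using only the `deploy()` and `undeploy()` methods from this tutorial; the context manager name `deployed` is hypothetical.

```python
from contextlib import contextmanager


@contextmanager
def deployed(pipeline):
    """Hypothetical helper: deploy on entry and always undeploy on exit."""
    pipeline.deploy()
    try:
        yield pipeline
    finally:
        # return the resources to the Wallaroo instance even if an inference fails
        pipeline.undeploy()


# Usage (requires the pipeline from this tutorial):
# with deployed(pipeline) as p:
#     display(p.infer(normal_input))
```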

2 - Inference URL Tutorials

Wallaroo provides multiple methods of performing inferences through a deployed pipeline.

2.1 - Wallaroo SDK Inferencing with Pipeline Inference URL Tutorial

This tutorial and the assets can be downloaded as part of the Wallaroo Tutorials repository.

Wallaroo SDK Inference Tutorial

Wallaroo provides the ability to perform inferences through deployed pipelines via the Wallaroo SDK and the Wallaroo MLOps API. This tutorial demonstrates performing inferences using the Wallaroo SDK.

This tutorial provides the following:

- ccfraud.onnx: A pre-trained credit card fraud detection model.

- data/cc_data_1k.arrow, data/cc_data_10k.arrow: Sample testing data in Apache Arrow format with 1,000 and 10,000 records respectively.

- wallaroo-model-endpoints-sdk.py: A code-only version of this tutorial as a Python script.

This tutorial and sample data comes from the Machine Learning Group’s demonstration on Credit Card Fraud detection.

Prerequisites

The following is required for this tutorial:

- A deployed Wallaroo instance with Model Endpoints Enabled

- The following Python libraries:

Tutorial Goals

This demonstration provides a quick tutorial on performing inferences with the Wallaroo SDK through the Pipeline infer and infer_from_file methods. The following steps will be performed:

- Connect to a Wallaroo instance using environment variables. This bypasses the browser link confirmation for a seamless login. For more information, see the Wallaroo SDK Essentials Guide: Client Connection.

- Create a workspace for our models and pipelines.

- Upload the ccfraud model.

- Create a pipeline and add the ccfraud model as a pipeline step.

- Run a sample inference through the SDK Pipeline infer method.

- Run a batch inference through the SDK Pipeline infer_from_file method.

- Run a DataFrame and Arrow based inference through the pipeline Inference URL.

Open a Connection to Wallaroo

The first step is to connect to Wallaroo through the Wallaroo client. This example will store the user’s credentials into the file ./creds.json which contains the following:

{

"username": "{Connecting User's Username}",

"password": "{Connecting User's Password}",

"email": "{Connecting User's Email Address}"

}

Replace the username, password, and email fields with the user account connecting to the Wallaroo instance. This allows a seamless connection to the Wallaroo instance and bypasses the standard browser based confirmation link. For more information, see the Wallaroo SDK Essentials Guide: Client Connection.
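The credentials file can also be written from Python. The sketch below writes the three fields shown above to a JSON file and points the `WALLAROO_SDK_CREDENTIALS` environment variable at it, as the connection code in this tutorial does. The helper name `write_credentials` is hypothetical, the placeholder values must be replaced with a real account, and a file containing a password should be kept out of version control.

```python
import json
import os


def write_credentials(path, username, password, email):
    """Hypothetical helper: write a creds.json file and point the SDK at it."""
    creds = {"username": username, "password": password, "email": email}
    with open(path, "w") as f:
        json.dump(creds, f, indent=4)
    # the tutorial sets this variable before calling wallaroo.Client(auth_type="user_password")
    os.environ["WALLAROO_SDK_CREDENTIALS"] = path
    return creds


# write_credentials('./creds.json', "{Connecting User's Username}",
#                   "{Connecting User's Password}", "{Connecting User's Email Address}")
```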

If running this example within the internal Wallaroo JupyterHub service, use the wallaroo.Client(auth_type="user_password") method. If connecting externally via the Wallaroo SDK, use the following to specify the URL of the Wallaroo instance as defined in the Wallaroo DNS Integration Guide, replacing wallarooPrefix. and wallarooSuffix with your Wallaroo instance’s DNS prefix and suffix.

Note that the trailing . is part of the prefix. If there is no prefix, set wallarooPrefix = "".
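As a sketch of the prefix and suffix rule above, the helper below assembles endpoint URLs from wallarooPrefix and wallarooSuffix. The host names `api` and `keycloak` are assumptions based on other Wallaroo examples, not taken from this tutorial; confirm them against the Wallaroo DNS Integration Guide before use. The helper name `wallaroo_urls` is hypothetical.

```python
def wallaroo_urls(wallaroo_prefix, wallaroo_suffix):
    """Hypothetical helper: build endpoint URLs from a DNS prefix and suffix.

    ASSUMPTION: the 'api' and 'keycloak' host names follow other Wallaroo
    examples; verify them against your instance's DNS configuration.
    """
    # the prefix already ends with '.', or is '' when there is no prefix
    return {
        "api_endpoint": f"https://{wallaroo_prefix}api.{wallaroo_suffix}",
        "auth_endpoint": f"https://{wallaroo_prefix}keycloak.{wallaroo_suffix}",
    }


print(wallaroo_urls("", "example.com")["api_endpoint"])  # https://api.example.com
```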

import wallaroo
from wallaroo.object import EntityNotFoundError
import pandas as pd
import os
import pyarrow as pa
import requests
from IPython.display import display

# used to display dataframe information without truncating
pd.set_option('display.max_colwidth', None)

# Used to create unique workspace and pipeline names
import string
import random

# make a random 4 character suffix to prevent workspace and pipeline name clobbering
suffix = ''.join(random.choice(string.ascii_lowercase) for i in range(4))

# Retrieve the login credentials.
os.environ["WALLAROO_SDK_CREDENTIALS"] = './creds.json'

# Client connection from local Wallaroo instance
wl = wallaroo.Client(auth_type="user_password")

Create the Workspace

We will create a workspace named sdkinferenceexampleworkspace (with the random suffix appended) and set it as the current workspace. We'll also create our pipeline in advance as sdkinferenceexamplepipeline, also with the suffix appended.

The model to be uploaded and used for inference will be labeled as ccfraud.

workspace_name = f'sdkinferenceexampleworkspace{suffix}'
pipeline_name = f'sdkinferenceexamplepipeline{suffix}'
model_name = f'ccfraud{suffix}'
model_file_name = './ccfraud.onnx'

def get_workspace(name):
    # return the existing workspace with this name, or create it
    workspace = None
    for ws in wl.list_workspaces():
        if ws.name() == name:
            workspace = ws
    if workspace is None:
        workspace = wl.create_workspace(name)
    return workspace

def get_pipeline(name):
    # return the existing pipeline with this name, or build a new one
    try:
        pipeline = wl.pipelines_by_name(name)[0]
    except EntityNotFoundError:
        pipeline = wl.build_pipeline(name)
    return pipeline

workspace = get_workspace(workspace_name)

wl.set_current_workspace(workspace)

{'name': 'sdkinferenceexampleworkspacesrsw', 'id': 47, 'archived': False, 'created_by': 'fec5b97a-934b-487f-b95b-ade7f3b81f9c', 'created_at': '2023-05-19T15:14:02.432103+00:00', 'models': [], 'pipelines': []}

Build Pipeline

In a production environment, the pipeline would already be set up with the model and pipeline steps. We would then select it and use it to perform our inferences.

For this example we will create the pipeline and add the ccfraud model as a pipeline step and deploy it. Deploying a pipeline allocates resources from the Kubernetes cluster hosting the Wallaroo instance and prepares it for performing inferences.

If this process was already completed, this step can be commented out and skipped; continue with the next step, Select Pipeline.

Then we will list the pipelines and select the one we will be using for the inference demonstrations.

# Create or select the current pipeline
ccfraudpipeline = get_pipeline(pipeline_name)

# Upload the ccfraud model, then add it as the pipeline step and deploy
ccfraud_model = (wl.upload_model(model_name,
                                 model_file_name,
                                 framework=wallaroo.framework.Framework.ONNX)
                 .configure(tensor_fields=["tensor"])
                 )

ccfraudpipeline.add_model_step(ccfraud_model).deploy()

| name | sdkinferenceexamplepipelinesrsw |

|---|---|

| created | 2023-05-19 15:14:03.916503+00:00 |

| last_updated | 2023-05-19 15:14:05.162541+00:00 |

| deployed | True |

| tags | |

| versions | 81840bdb-a1bc-48b9-8df0-4c7a196fa79a, 49cfc2cc-16fb-4dfa-8d1b-579fa86dab07 |

| steps | ccfraudsrsw |

Select Pipeline

This step assumes that the pipeline is prepared with ccfraud as the current step. The method pipelines_by_name(name) returns an array of pipelines with names matching the pipeline_name field. This example assumes only one pipeline is assigned the name sdkinferenceexamplepipeline.

# List the pipelines by name in the current workspace - just the first several to save space.

display(wl.list_pipelines()[:5])

# Set the `pipeline` variable to our sample pipeline.

pipeline = wl.pipelines_by_name(pipeline_name)[0]

display(pipeline)

[{'name': 'sdkinferenceexamplepipelinesrsw', 'create_time': datetime.datetime(2023, 5, 19, 15, 14, 3, 916503, tzinfo=tzutc()), 'definition': '[]'},

{'name': 'ccshadoweonn', 'create_time': datetime.datetime(2023, 5, 19, 15, 13, 48, 963815, tzinfo=tzutc()), 'definition': '[]'},

{'name': 'ccshadowgozg', 'create_time': datetime.datetime(2023, 5, 19, 15, 8, 23, 58929, tzinfo=tzutc()), 'definition': '[]'}]

| name | sdkinferenceexamplepipelinesrsw |

|---|---|

| created | 2023-05-19 15:14:03.916503+00:00 |

| last_updated | 2023-05-19 15:14:05.162541+00:00 |

| deployed | True |

| tags | |

| versions | 81840bdb-a1bc-48b9-8df0-4c7a196fa79a, 49cfc2cc-16fb-4dfa-8d1b-579fa86dab07 |

| steps | ccfraudsrsw |
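The selection above takes the first element returned by `pipelines_by_name`, which assumes exactly one match. A more defensive variant, sketched below, fails loudly if there are zero or several matches. The helper `select_single_pipeline` is hypothetical, and it assumes `pipelines_by_name` returns a list; in the tutorial's `get_pipeline` function, a missing pipeline instead surfaces as `EntityNotFoundError`.

```python
def select_single_pipeline(client, name):
    """Hypothetical helper: return the only pipeline named `name`, or raise."""
    matches = client.pipelines_by_name(name)
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one pipeline named {name!r}, found {len(matches)}"
        )
    return matches[0]


# Usage with the tutorial's client:
# pipeline = select_single_pipeline(wl, pipeline_name)
```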

Inferences via SDK

Once a pipeline has been deployed, an inference can be run. This will submit data to the pipeline, where it is processed through each of the pipeline’s steps with the output of the previous step providing the input for the next step. The final step will then output the result of all of the pipeline’s steps.
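The step chaining described above can be illustrated without a Wallaroo instance. In this plain-Python sketch, each step is an ordinary function standing in for a model; the names `run_pipeline`, `scale`, and `total` are hypothetical, and real pipeline steps run inside the Wallaroo engine rather than as Python functions.

```python
from functools import reduce


def run_pipeline(steps, data):
    """Feed data through each step in order; each output becomes the next input."""
    return reduce(lambda value, step: step(value), steps, data)


def scale(values):
    # stand-in for a first model step
    return [v * 2 for v in values]


def total(values):
    # stand-in for a final model step
    return [sum(values)]


print(run_pipeline([scale, total], [1.0, 2.0, 3.0]))  # [12.0]
```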

- Inputs can be submitted as one of the following:

- pandas.DataFrame. The return value will be a pandas.DataFrame.

- Apache Arrow. The return value will be an Apache Arrow table.

- Custom JSON. The return value will be a Wallaroo InferenceResult object.

Inferences are performed through the Wallaroo SDK via the Pipeline infer and infer_from_file methods.

infer Method

Now that the pipeline is deployed we’ll perform an inference using the Pipeline infer method, and submit a pandas DataFrame as our input data. This will return a pandas DataFrame as the inference output.

For more information, see the Wallaroo SDK Essentials Guide: Inferencing: Run Inference through Local Variable.

smoke_test = pd.DataFrame.from_records([
    {
        "tensor": [
            1.0678324729, 0.2177810266, -1.7115145262, 0.682285721,
            1.0138553067, -0.4335000013, 0.7395859437, -0.2882839595,
            -0.447262688, 0.5146124988, 0.3791316964, 0.5190619748,
            -0.4904593222, 1.1656456469, -0.9776307444, -0.6322198963,
            -0.6891477694, 0.1783317857, 0.1397992467, -0.3554220649,
            0.4394217877, 1.4588397512, -0.3886829615, 0.4353492889,
            1.7420053483, -0.4434654615, -0.1515747891, -0.2668451725,
            -1.4549617756
        ]
    }
])

result = pipeline.infer(smoke_test)

display(result)

| | time | in.tensor | out.dense_1 | check_failures |

|---|---|---|---|---|

| 0 | 2023-05-19 15:14:22.066 | [1.0678324729, 0.2177810266, -1.7115145262, 0.682285721, 1.0138553067, -0.4335000013, 0.7395859437, -0.2882839595, -0.447262688, 0.5146124988, 0.3791316964, 0.5190619748, -0.4904593222, 1.1656456469, -0.9776307444, -0.6322198963, -0.6891477694, 0.1783317857, 0.1397992467, -0.3554220649, 0.4394217877, 1.4588397512, -0.3886829615, 0.4353492889, 1.7420053483, -0.4434654615, -0.1515747891, -0.2668451725, -1.4549617756] | [0.0014974177] | 0 |

infer_from_file Method

This example uses the Pipeline method infer_from_file to submit 10,000 records as a batch using an Apache Arrow table. The method returns an Apache Arrow table. For more information, see the Wallaroo SDK Essentials Guide: Inferencing: Run Inference From A File.

The results are then converted into a pandas.DataFrame and filtered to show only the transactions likely to be credit card fraud.

result = pipeline.infer_from_file('./data/cc_data_10k.arrow')

display(result)

pyarrow.Table

time: timestamp[ms]

in.tensor: list<item: float> not null

child 0, item: float

out.dense_1: list<inner: float not null> not null

child 0, inner: float not null

check_failures: int8

----

time: [[2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,...,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851,2023-05-19 15:14:22.851]]

in.tensor: [[[-1.0603298,2.3544967,-3.5638788,5.138735,-1.2308457,...,0.038412016,1.0993439,1.2603409,-0.14662448,-1.4463212],[-1.0603298,2.3544967,-3.5638788,5.138735,-1.2308457,...,0.038412016,1.0993439,1.2603409,-0.14662448,-1.4463212],...,[-2.1694233,-3.1647356,1.2038506,-0.2649221,0.0899006,...,1.8174038,-0.19327773,0.94089776,0.825025,1.6242892],[-0.12405868,0.73698884,1.0311689,0.59917533,0.11831961,...,-0.36567155,-0.87004745,0.41288367,0.49470216,-0.6710689]]]

out.dense_1: [[[0.99300325],[0.99300325],...,[0.00024175644],[0.0010648072]]]

check_failures: [[0,0,0,0,0,...,0,0,0,0,0]]

# use pyarrow to convert results to a pandas DataFrame and display only the results with > 0.75
outputs = result.to_pandas()
# display(outputs)
fraud_filter = [elt[0] > 0.75 for elt in outputs['out.dense_1']]
outputs = outputs.loc[fraud_filter]
display(outputs)

| | time | in.tensor | out.dense_1 | check_failures |

|---|---|---|---|---|

| 0 | 2023-05-19 15:14:22.851 | [-1.0603298, 2.3544967, -3.5638788, 5.138735, -1.2308457, -0.76878244, -3.5881228, 1.8880838, -3.2789674, -3.9563255, 4.099344, -5.653918, -0.8775733, -9.131571, -0.6093538, -3.7480276, -5.0309124, -0.8748149, 1.9870535, 0.7005486, 0.9204423, -0.10414918, 0.32295644, -0.74181414, 0.038412016, 1.0993439, 1.2603409, -0.14662448, -1.4463212] | [0.99300325] | 0 |

| 1 | 2023-05-19 15:14:22.851 | [-1.0603298, 2.3544967, -3.5638788, 5.138735, -1.2308457, -0.76878244, -3.5881228, 1.8880838, -3.2789674, -3.9563255, 4.099344, -5.653918, -0.8775733, -9.131571, -0.6093538, -3.7480276, -5.0309124, -0.8748149, 1.9870535, 0.7005486, 0.9204423, -0.10414918, 0.32295644, -0.74181414, 0.038412016, 1.0993439, 1.2603409, -0.14662448, -1.4463212] | [0.99300325] | 0 |

| 2 | 2023-05-19 15:14:22.851 | [-1.0603298, 2.3544967, -3.5638788, 5.138735, -1.2308457, -0.76878244, -3.5881228, 1.8880838, -3.2789674, -3.9563255, 4.099344, -5.653918, -0.8775733, -9.131571, -0.6093538, -3.7480276, -5.0309124, -0.8748149, 1.9870535, 0.7005486, 0.9204423, -0.10414918, 0.32295644, -0.74181414, 0.038412016, 1.0993439, 1.2603409, -0.14662448, -1.4463212] | [0.99300325] | 0 |

| 3 | 2023-05-19 15:14:22.851 | [-1.0603298, 2.3544967, -3.5638788, 5.138735, -1.2308457, -0.76878244, -3.5881228, 1.8880838, -3.2789674, -3.9563255, 4.099344, -5.653918, -0.8775733, -9.131571, -0.6093538, -3.7480276, -5.0309124, -0.8748149, 1.9870535, 0.7005486, 0.9204423, -0.10414918, 0.32295644, -0.74181414, 0.038412016, 1.0993439, 1.2603409, -0.14662448, -1.4463212] | [0.99300325] | 0 |

| 161 | 2023-05-19 15:14:22.851 | [-9.716793, 9.174981, -14.450761, 8.653825, -11.039951, 0.6602411, -22.825525, -9.919395, -8.064324, -16.737926, 4.852197, -12.563343, -1.0762653, -7.524591, -3.2938414, -9.62102, -15.6501045, -7.089741, 1.7687134, 5.044906, -11.365625, 4.5987034, 4.4777045, 0.31702697, -2.2731977, 0.07944675, -10.052058, -2.024108, -1.0611985] | [1.0] | 0 |

| 941 | 2023-05-19 15:14:22.851 | [-0.50492376, 1.9348029, -3.4217603, 2.2165704, -0.6545315, -1.9004827, -1.6786858, 0.5380051, -2.7229102, -5.265194, 3.504164, -5.4661765, 0.68954825, -8.725291, 2.0267954, -5.4717045, -4.9123807, -1.6131229, 3.8021576, 1.3881834, 1.0676425, 0.28200775, -0.30759808, -0.48498034, 0.9507336, 1.5118006, 1.6385275, 1.072455, 0.7959132] | [0.9873102] | 0 |

| 1445 | 2023-05-19 15:14:22.851 | [-7.615594, 4.659706, -12.057331, 7.975307, -5.1068773, -1.6116138, -12.146941, -0.5952333, -6.4605103, -12.535655, 10.017626, -14.839381, 0.34900802, -14.953928, -0.3901092, -9.342014, -14.285043, -5.758632, 0.7512068, 1.4632998, -3.3777077, 0.9950705, -0.5855211, -1.6528498, 1.9089833, 1.6860862, 5.5044003, -3.703297, -1.4715525] | [1.0] | 0 |

| 2092 | 2023-05-19 15:14:22.851 | [-14.115489, 9.905631, -18.67885, 4.602589, -15.404288, -3.7169847, -15.887272, 15.616176, -3.2883947, -7.0224414, 4.086536, -5.7809114, 1.2251061, -5.4301147, -0.14021407, -6.0200763, -12.957546, -5.545689, 0.86074656, 2.2463796, 2.492611, -2.9649208, -2.265674, 0.27490455, 3.9263225, -0.43438172, 3.1642237, 1.2085277, 0.8223642] | [0.99999] | 0 |

| 2220 | 2023-05-19 15:14:22.851 | [-0.1098309, 2.5842443, -3.5887418, 4.63558, 1.1825614, -1.2139517, -0.7632139, 0.6071841, -3.7244265, -3.501917, 4.3637576, -4.612757, -0.44275254, -10.346612, 0.66243565, -0.33048683, 1.5961986, 2.5439718, 0.8787973, 0.7406088, 0.34268215, -0.68495077, -0.48357907, -1.9404846, -0.059520483, 1.1553137, 0.9918434, 0.7067319, -1.6016251] | [0.91080534] | 0 |

| 4135 | 2023-05-19 15:14:22.851 | [-0.547029, 2.2944348, -4.149202, 2.8648357, -0.31232587, -1.5427867, -2.1489344, 0.9471863, -2.663241, -4.2572775, 2.1116028, -6.2264414, -1.1307784, -6.9296007, 1.0049651, -5.876498, -5.6855297, -1.5800936, 3.567338, 0.5962099, 1.6361043, 1.8584082, -0.08202618, 0.46620172, -2.234368, -0.18116793, 1.744976, 2.1414309, -1.6081295] | [0.98877275] | 0 |

| 4236 | 2023-05-19 15:14:22.851 | [-3.135635, -1.483817, -3.0833669, 1.6626456, -0.59695035, -0.30199608, -3.316563, 1.869609, -1.8006078, -4.5662026, 2.8778172, -4.0887237, -0.43401834, -3.5816982, 0.45171788, -5.725131, -8.982029, -4.0279546, 0.89264476, 0.24721873, 1.8289508, 1.6895254, -2.5555577, -2.4714024, -0.4500012, 0.23333028, 2.2119386, -2.041805, 1.1568314] | [0.95601666] | 0 |

| 5658 | 2023-05-19 15:14:22.851 | [-5.4078765, 3.9039962, -8.98522, 5.128742, -7.373224, -2.946234, -11.033238, 5.914019, -5.669241, -12.041053, 6.950792, -12.488795, 1.2236942, -14.178565, 1.6514667, -12.47019, -22.350504, -8.928755, 4.54775, -0.11478994, 3.130207, -0.70128506, -0.40275285, 0.7511918, -0.1856308, 0.92282087, 0.146656, -1.3761806, 0.42997098] | [1.0] | 0 |

| 6768 | 2023-05-19 15:14:22.851 | [-16.900557, 11.7940855, -21.349983, 4.746453, -17.54182, -3.415758, -19.897173, 13.8569145, -3.570626, -7.388376, 3.0761156, -4.0583425, 1.2901028, -2.7997534, -0.4298746, -4.777225, -11.371295, -5.2725616, 0.0964799, 4.2148075, -0.8343371, -2.3663573, -1.6571938, 0.2110055, 4.438088, -0.49057993, 2.342008, 1.4479793, -1.4715525] | [0.9999745] | 0 |

| 6780 | 2023-05-19 15:14:22.851 | [-0.74893713, 1.3893062, -3.7477517, 2.4144504, -0.11061429, -1.0737498, -3.1504633, 1.2081385, -1.332872, -4.604276, 4.438548, -7.687688, 1.1683422, -5.3296027, -0.19838685, -5.294243, -5.4928794, -1.3254275, 4.387228, 0.68643385, 0.87228596, -0.1154091, -0.8364338, -0.61202216, 0.10518055, 2.2618086, 1.1435078, -0.32623357, -1.6081295] | [0.9852645] | 0 |

| 7133 | 2023-05-19 15:14:22.851 | [-7.5131927, 6.507386, -12.439463, 5.7453, -9.513038, -1.4236209, -17.402607, -3.0903268, -5.378041, -15.169325, 5.7585907, -13.448207, -0.45244268, -8.495097, -2.2323692, -11.429063, -19.578058, -8.367617, 1.8869618, 2.1813896, -4.799091, 2.4388566, 2.9503248, 0.6293566, -2.6906652, -2.1116931, -6.4196434, -1.4523355, -1.4715525] | [1.0] | 0 |

| 7566 | 2023-05-19 15:14:22.851 | [-2.1804514, 1.0243497, -4.3890443, 3.4924, -3.7609894, 0.023624033, -2.7677023, 1.1786921, -2.9450424, -6.8823, 6.1294384, -9.564066, -1.6273017, -10.940607, 0.3062539, -8.854589, -15.382658, -5.419305, 3.2210033, -0.7381137, 0.9632334, 0.6612066, 2.1337948, -0.90536207, 0.7498649, -0.019404415, 5.5950212, 0.26602694, 1.7534728] | [0.9999705] | 0 |

| 7911 | 2023-05-19 15:14:22.851 | [-1.594454, 1.8545462, -2.6311765, 2.759316, -2.6988854, -0.08155677, -3.8566258, -0.04912437, -1.9640644, -4.2058415, 3.391933, -6.471933, -0.9877536, -6.188904, 1.2249585, -8.652863, -11.170872, -6.134417, 2.5400054, -0.29327056, 3.591464, 0.3057127, -0.052313827, 0.06196331, -0.82863224, -0.2595842, 1.0207018, 0.019899422, 1.0935433] | [0.9980203] | 0 |

| 8921 | 2023-05-19 15:14:22.851 | [-0.21756083, 1.786712, -3.4240367, 2.7769134, -1.420116, -2.1018193, -3.4615245, 0.7367844, -2.3844852, -6.3140697, 4.382665, -8.348951, -1.6409378, -10.611383, 1.1813216, -6.251184, -10.577264, -3.5184007, 0.7997489, 0.97915924, 1.081642, -0.7852368, -0.4761941, -0.10635195, 2.066527, -0.4103488, 2.8288178, 1.9340333, -1.4715525] | [0.99950194] | 0 |

| 9244 | 2023-05-19 15:14:22.851 | [-3.314442, 2.4431305, -6.1724143, 3.6737356, -3.81542, -1.5950849, -4.8292923, 2.9850774, -4.22416, -7.5519834, 6.1932964, -8.59886, 0.25443414, -11.834097, -0.39583337, -6.015362, -13.532762, -4.226845, 1.1153877, 0.17989528, 1.3166595, -0.64433384, 0.2305495, -0.5776498, 0.7609739, 2.2197483, 4.01189, -1.2347667, 1.2847253] | [0.9999876] | 0 |

| 10176 | 2023-05-19 15:14:22.851 | [-5.0815525, 3.9294617, -8.4077635, 6.373701, -7.391173, -2.1574461, -10.345097, 5.5896044, -6.3736906, -11.330594, 6.618754, -12.93748, 1.1884484, -13.9628935, 1.0340953, -12.278127, -23.333889, -8.886669, 3.5720036, -0.3243157, 3.4229393, 0.493529, 0.08469851, 0.791218, 0.30968663, 0.6811129, 0.39306796, -1.5204874, 0.9061435] | [1.0] | 0 |

Inferences via HTTP POST

Each pipeline has its own Inference URL that allows HTTP/S POST submissions of inference requests. Full details are available from the Inferencing via the Wallaroo MLOps API guide.

This example will demonstrate performing inferences with a DataFrame input and an Apache Arrow input.

Request JWT Token

There are two ways to retrieve the JWT token used to authenticate to the Wallaroo MLOps API.

- Wallaroo SDK. This method requires a Wallaroo based user.

- API Client Secret. This is the recommended method as it is user independent. It allows any valid user to make an inference request.

This tutorial uses the Wallaroo Client method wl.auth.auth_header() from the Wallaroo SDK, extracting the Authorization header from the response.

Reference: MLOps API Retrieve Token Through Wallaroo SDK

headers = wl.auth.auth_header()

display(headers)

{'Authorization': 'Bearer eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJhSFpPS1RacGhxT1JQVkw4Y19JV25qUDNMU29iSnNZNXBtNE5EQTA1NVZNIn0.eyJleHAiOjE2ODQ1MDkzMDgsImlhdCI6MTY4NDUwOTI0OCwiYXV0aF90aW1lIjoxNjg0NTA4ODUwLCJqdGkiOiJkZmU3ZTIyMS02ODMyLTRiOGItYjJiMS1hYzFkYWY2YjVmMWYiLCJpc3MiOiJodHRwczovL3NwYXJrbHktYXBwbGUtMzAyNi5rZXljbG9hay53YWxsYXJvby5jb21tdW5pdHkvYXV0aC9yZWFsbXMvbWFzdGVyIiwiYXVkIjpbIm1hc3Rlci1yZWFsbSIsImFjY291bnQiXSwic3ViIjoiZmVjNWI5N2EtOTM0Yi00ODdmLWI5NWItYWRlN2YzYjgxZjljIiwidHlwIjoiQmVhcmVyIiwiYXpwIjoic2RrLWNsaWVudCIsInNlc3Npb25fc3RhdGUiOiI5ZTQ0YjNmNC0xYzg2LTRiZmQtOGE3My0yYjc0MjY5ZmNiNGMiLCJhY3IiOiIwIiwicmVhbG1fYWNjZXNzIjp7InJvbGVzIjpbImNyZWF0ZS1yZWFsbSIsImRlZmF1bHQtcm9sZXMtbWFzdGVyIiwib2ZmbGluZV9hY2Nlc3MiLCJhZG1pbiIsInVtYV9hdXRob3JpemF0aW9uIl19LCJyZXNvdXJjZV9hY2Nlc3MiOnsibWFzdGVyLXJlYWxtIjp7InJvbGVzIjpbInZpZXctaWRlbnRpdHktcHJvdmlkZXJzIiwidmlldy1yZWFsbSIsIm1hbmFnZS1pZGVudGl0eS1wcm92aWRlcnMiLCJpbXBlcnNvbmF0aW9uIiwiY3JlYXRlLWNsaWVudCIsIm1hbmFnZS11c2VycyIsInF1ZXJ5LXJlYWxtcyIsInZpZXctYXV0aG9yaXphdGlvbiIsInF1ZXJ5LWNsaWVudHMiLCJxdWVyeS11c2VycyIsIm1hbmFnZS1ldmVudHMiLCJtYW5hZ2UtcmVhbG0iLCJ2aWV3LWV2ZW50cyIsInZpZXctdXNlcnMiLCJ2aWV3LWNsaWVudHMiLCJtYW5hZ2UtYXV0aG9yaXphdGlvbiIsIm1hbmFnZS1jbGllbnRzIiwicXVlcnktZ3JvdXBzIl19LCJhY2NvdW50Ijp7InJvbGVzIjpbIm1hbmFnZS1hY2NvdW50IiwibWFuYWdlLWFjY291bnQtbGlua3MiLCJ2aWV3LXByb2ZpbGUiXX19LCJzY29wZSI6ImVtYWlsIHByb2ZpbGUiLCJzaWQiOiI5ZTQ0YjNmNC0xYzg2LTRiZmQtOGE3My0yYjc0MjY5ZmNiNGMiLCJlbWFpbF92ZXJpZmllZCI6dHJ1ZSwiaHR0cHM6Ly9oYXN1cmEuaW8vand0L2NsYWltcyI6eyJ4LWhhc3VyYS11c2VyLWlkIjoiZmVjNWI5N2EtOTM0Yi00ODdmLWI5NWItYWRlN2YzYjgxZjljIiwieC1oYXN1cmEtZGVmYXVsdC1yb2xlIjoidXNlciIsIngtaGFzdXJhLWFsbG93ZWQtcm9sZXMiOlsidXNlciJdLCJ4LWhhc3VyYS11c2VyLWdyb3VwcyI6Int9In0sInByZWZlcnJlZF91c2VybmFtZSI6ImpvaG4uaHVtbWVsQHdhbGxhcm9vLmFpIiwiZW1haWwiOiJqb2huLmh1bW1lbEB3YWxsYXJvby5haSJ9.YksrXBWIxMHz2Mh0dhM8GVvFUQJH5sCVTfA5qYiMIquME5vROVjqlm72k2FwdHQmRdwbwKGU1fGfuw6ijAfvVvd50lMdhYrT6TInhdaXX6UZ0pqsuuXyC1HxaTfC5JA7yOQo7SGQ3rjVvsSo_tHhf08HW6gmg2FO9Sdsbo3y2cPEqG7xR_vbB
93s_lmQHjN6T8lAdq_io2jkDFUlKtAapAQ3Z5d68-Na5behVqtGeYRb6UKJTUoH-dso7zRwZ1RcqX5_3kT2xEL-dfkAndkvzRCfjOz-OJQEjo2j9iJFWpVaNjsUA45FCUhSNfuG1-zYtAOWcSmq8DyxAt6hY-fgaA'}
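The Authorization value is a standard JSON Web Token (JWT): three base64url segments separated by dots, the middle one being a JSON payload. The following is a minimal standard-library sketch for decoding that payload to inspect the standard iat (issued at) and exp (expires) claims. The helper names jwt_claims and seconds_valid are hypothetical and not part of the Wallaroo SDK, and the sketch does not verify the signature. Decoding the tokens shown in these tutorials gives exp - iat = 60, meaning each is valid for about a minute. That is consistent with every cell retrieving a fresh header before it sends a request.

```python
import base64
import json


def jwt_claims(auth_header: str) -> dict:
    """Decode the payload of a 'Bearer <JWT>' value WITHOUT verifying it.

    For inspection only: the signature is not checked, so the result
    must not be trusted for any security decision.
    """
    # Drop the "Bearer " scheme if present; keep the bare token otherwise
    token = auth_header.split(" ", 1)[-1].strip()
    payload_b64 = token.split(".")[1]
    # base64url segments omit "=" padding; restore it before decoding
    payload_b64 += "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(payload_b64))


def seconds_valid(claims: dict) -> int:
    """Token lifetime in seconds, from the standard iat and exp claims."""
    return claims["exp"] - claims["iat"]


# Usage with the header retrieved above:
# claims = jwt_claims(headers["Authorization"])
# seconds_valid(claims)
```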

Retrieve the Pipeline Inference URL

The Pipeline Inference URL is retrieved via the Wallaroo SDK with the Pipeline ._deployment._url() method.

- IMPORTANT NOTE: The _deployment._url() method returns an internal URL when Python commands are run from within the Wallaroo instance, for example from the Wallaroo JupyterHub service. When connecting through an external connection, _deployment._url() returns an external URL.

- External URL connections require that authentication be included in the HTTP request, and that Model Endpoints be enabled in the Wallaroo configuration options.

deploy_url = pipeline._deployment._url()

print(deploy_url)

https://sparkly-apple-3026.api.wallaroo.community/v1/api/pipelines/infer/sdkinferenceexamplepipelinesrsw-28/sdkinferenceexamplepipelinesrsw
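The returned URL appears to follow the pattern .../v1/api/pipelines/infer/{pipeline_name}-{deployment_id}/{pipeline_name}. This layout is inferred from the example URLs in these tutorials and is not taken from a documented contract, so treat the following as a sketch. It shows a hypothetical helper, parse_inference_url, that splits such a URL into its parts. You could use it, for example, to log which deployment a request was sent to.

```python
from urllib.parse import urlparse


def parse_inference_url(url: str) -> dict:
    """Split a URL of the observed form
    https://HOST/v1/api/pipelines/infer/{pipeline}-{deployment_id}/{pipeline}.

    The layout is inferred from the example URLs in these tutorials.
    """
    parsed = urlparse(url)
    parts = parsed.path.strip("/").split("/")
    i = parts.index("infer")
    deployment, pipeline = parts[i + 1], parts[i + 2]
    # rpartition splits on the LAST "-", so hyphenated pipeline names still parse
    deployment_name, _, deployment_id = deployment.rpartition("-")
    return {
        "host": parsed.netloc,
        "pipeline": pipeline,
        "deployment_name": deployment_name,
        "deployment_id": int(deployment_id),
    }
```

The {deployment_name}-{deployment_id} segment uses the same name format that the MLOps API /v1/api/status/get_deployment request takes.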

HTTP Inference with DataFrame Input

The following example performs an HTTP inference request with a DataFrame input. The request is made first with the Python requests library, then with curl.
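When Content-Type is set to application/json; format=pandas-records, the request body is a JSON array of row objects keyed by column name. This is the shape data.to_json(orient="records") produces in the request below, and the "in" field of the curl response echoes it back. The following is a minimal standard-library sketch of such a body. The variable names are illustrative, and the tensor is cut down to three of the 29 values, so it is not a valid input for the ccfraud model.

```python
import json

# A pandas-records body: a JSON array with one object per DataFrame row,
# keyed by column name. Illustration only: the tensor is truncated.
rows = [{"tensor": [1.0678324729, 0.2177810266, -1.7115145262]}]
body = json.dumps(rows, separators=(",", ":"))

# Headers telling the pipeline how to read the body and how to format the reply
content_headers = {
    "Content-Type": "application/json; format=pandas-records",
    "Accept": "application/json; format=pandas-records",
}
print(body)
```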

# get authorization header

headers = wl.auth.auth_header()

## Inference through external URL using dataframe

# retrieve the json data to submit

data = pd.DataFrame.from_records([

{

"tensor":[

1.0678324729,

0.2177810266,

-1.7115145262,

0.682285721,

1.0138553067,

-0.4335000013,

0.7395859437,

-0.2882839595,

-0.447262688,

0.5146124988,

0.3791316964,

0.5190619748,

-0.4904593222,

1.1656456469,

-0.9776307444,

-0.6322198963,

-0.6891477694,

0.1783317857,

0.1397992467,

-0.3554220649,

0.4394217877,

1.4588397512,

-0.3886829615,

0.4353492889,

1.7420053483,

-0.4434654615,

-0.1515747891,

-0.2668451725,

-1.4549617756

]

}

])

# set the content type for pandas records

headers['Content-Type']= 'application/json; format=pandas-records'

# set accept as pandas-records

headers['Accept']='application/json; format=pandas-records'

# submit the request via POST, import as pandas DataFrame

response = pd.DataFrame.from_records(

requests.post(

deploy_url,

data=data.to_json(orient="records"),

headers=headers)

.json()

)

display(response.loc[:,["time", "out"]])

| | time | out |

|---|---|---|

| 0 | 1684509263640 | {'dense_1': [0.0014974177]} |

!curl -X POST {deploy_url} -H "Authorization: {headers['Authorization']}" -H "Content-Type:{headers['Content-Type']}" -H "Accept:{headers['Accept']}" --data '{data.to_json(orient="records")}'

[{"time":1684509264292,"in":{"tensor":[1.0678324729,0.2177810266,-1.7115145262,0.682285721,1.0138553067,-0.4335000013,0.7395859437,-0.2882839595,-0.447262688,0.5146124988,0.3791316964,0.5190619748,-0.4904593222,1.1656456469,-0.9776307444,-0.6322198963,-0.6891477694,0.1783317857,0.1397992467,-0.3554220649,0.4394217877,1.4588397512,-0.3886829615,0.4353492889,1.7420053483,-0.4434654615,-0.1515747891,-0.2668451725,-1.4549617756]},"out":{"dense_1":[0.0014974177]},"check_failures":[],"metadata":{"last_model":"{\"model_name\":\"ccfraudsrsw\",\"model_sha\":\"bc85ce596945f876256f41515c7501c399fd97ebcb9ab3dd41bf03f8937b4507\"}","pipeline_version":"81840bdb-a1bc-48b9-8df0-4c7a196fa79a","elapsed":[62451,212744]}}]
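The curl response above is a JSON array with one record per input row. In this example, each record carries time, in, out, check_failures, and metadata fields. The following sketch uses a hypothetical helper, output_values, that flattens one named output (dense_1 for this model) across all records using only the standard library.

```python
import json


def output_values(response_text: str, output_name: str = "dense_1") -> list:
    """Flatten one named output tensor across all records of a
    pandas-records JSON response."""
    records = json.loads(response_text)
    return [value for record in records for value in record["out"][output_name]]


# Trimmed copy of the curl response shown above ("in" and "metadata" omitted)
sample = '[{"time":1684509264292,"out":{"dense_1":[0.0014974177]},"check_failures":[]}]'
print(output_values(sample))
```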

HTTP Inference with Arrow Input

The following example performs an HTTP inference request with an Apache Arrow input. The request is made first with the Python requests library, then with curl.

Only the first 5 rows are displayed to save space.

# get authorization header

headers = wl.auth.auth_header()

# Submit arrow file

dataFile="./data/cc_data_10k.arrow"

with open(dataFile, 'rb') as f:

    data = f.read()

# set the content type for Arrow table

headers['Content-Type']= "application/vnd.apache.arrow.file"

# set accept as Apache Arrow

headers['Accept']="application/vnd.apache.arrow.file"

response = requests.post(

deploy_url,

headers=headers,

data=data,

verify=True

)

# Arrow table is retrieved

with pa.ipc.open_file(response.content) as reader:

    arrow_table = reader.read_all()

# convert to a pandas DataFrame and display the first 5 rows

display(arrow_table.to_pandas().head(5).loc[:,["time", "out"]])

| | time | out |

|---|---|---|

| 0 | 1684509265142 | {'dense_1': [0.99300325]} |

| 1 | 1684509265142 | {'dense_1': [0.99300325]} |

| 2 | 1684509265142 | {'dense_1': [0.99300325]} |

| 3 | 1684509265142 | {'dense_1': [0.99300325]} |

| 4 | 1684509265142 | {'dense_1': [0.0010916889]} |

!curl -X POST {deploy_url} -H "Authorization: {headers['Authorization']}" -H "Content-Type:{headers['Content-Type']}" -H "Accept:{headers['Accept']}" --data-binary @{dataFile} > curl_response.arrow

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4200k 100 3037k 100 1162k 1980k 757k 0:00:01 0:00:01 --:--:-- 2766k

Undeploy Pipeline

When testing is finished, undeploy the pipeline to return its Kubernetes resources for other tasks.

pipeline.undeploy()

| name | sdkinferenceexamplepipelinesrsw |

|---|---|

| created | 2023-05-19 15:14:03.916503+00:00 |

| last_updated | 2023-05-19 15:14:05.162541+00:00 |

| deployed | False |

| tags | |

| versions | 81840bdb-a1bc-48b9-8df0-4c7a196fa79a, 49cfc2cc-16fb-4dfa-8d1b-579fa86dab07 |

| steps | ccfraudsrsw |

2.2 - Wallaroo MLOps API Inferencing with Pipeline Inference URL Tutorial

This tutorial and the assets can be downloaded as part of the Wallaroo Tutorials repository.

Wallaroo API Inference Tutorial

Wallaroo provides the ability to perform inferences through deployed pipelines via the Wallaroo SDK and the Wallaroo MLOps API. This tutorial demonstrates performing inferences using the Wallaroo MLOps API.

This tutorial provides the following:

- ccfraud.onnx: A pre-trained credit card fraud detection model.

- data/cc_data_1k.arrow, data/cc_data_10k.arrow: Sample testing data in Apache Arrow format with 1,000 and 10,000 records respectively.

- wallaroo-model-endpoints-api.py: A code-only version of this tutorial as a Python script.

This tutorial and sample data comes from the Machine Learning Group’s demonstration on Credit Card Fraud detection.

Prerequisites

The following is required for this tutorial:

- A deployed Wallaroo instance with Model Endpoints enabled.

- The following Python libraries:

Tutorial Goals

This demonstration provides a quick tutorial on performing inferences with the Wallaroo MLOps API through a deployed pipeline’s Inference URL. The following steps will be performed:

- Connect to a Wallaroo instance using the Wallaroo SDK and environment variables. This bypasses the browser link confirmation for a seamless login, and provides a simple method of retrieving the JWT token used for Wallaroo MLOps API calls. For more information, see the Wallaroo SDK Essentials Guide: Client Connection and the Wallaroo MLOps API Essentials Guide.

- Create a workspace for our models and pipelines.

- Upload the ccfraud model.

- Create a pipeline and add the ccfraud model as a pipeline step.

- Run sample inferences with pandas DataFrame inputs and Apache Arrow inputs.

Retrieve Token

There are two methods of retrieving the JWT token used to authenticate to the Wallaroo instance’s API service:

- Wallaroo SDK. This method requires a Wallaroo based user.

- API Client Secret. This is the recommended method as it is user independent. It allows any valid user to make an inference request.

This tutorial uses the Wallaroo SDK method for convenience, with environment variables for a seamless login without browser validation. For more information, see the Wallaroo SDK Essentials Guide: Client Connection.

API Request Methods

All Wallaroo API endpoints follow the format:

https://$URLPREFIX.api.$URLSUFFIX/v1/api$COMMAND

Where $COMMAND is the specific endpoint. For example, the command to list the workspaces in the Wallaroo instance would use the above format with these settings:

- $URLPREFIX: smooth-moose-1617

- $URLSUFFIX: example.wallaroo.ai

- $COMMAND: /workspaces/list

This would create the following API endpoint:

https://smooth-moose-1617.api.example.wallaroo.ai/v1/api/workspaces/list
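The format above can be expressed as a small function. This is a sketch with a hypothetical name, api_endpoint. It takes the prefix without a trailing dot and treats an empty prefix as "no prefix". The connection code later in this tutorial instead stores the dot inside its wallarooPrefix variable.

```python
def api_endpoint(prefix: str, suffix: str, command: str) -> str:
    """Build https://$URLPREFIX.api.$URLSUFFIX/v1/api$COMMAND.

    prefix is given WITHOUT a trailing dot; an empty prefix yields
    https://api.$URLSUFFIX/... for instances that have no prefix.
    """
    host = f"{prefix}.api.{suffix}" if prefix else f"api.{suffix}"
    return f"https://{host}/v1/api{command}"


print(api_endpoint("smooth-moose-1617", "example.wallaroo.ai", "/workspaces/list"))
```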

Connect to Wallaroo

For this example, a connection to the Wallaroo SDK is used. This will be used to retrieve the JWT token for the MLOps API calls.

This example stores the user’s credentials in the file ./creds.json, which contains the following:

{

"username": "{Connecting User's Username}",

"password": "{Connecting User's Password}",

"email": "{Connecting User's Email Address}"

}

Replace the username, password, and email fields with the user account connecting to the Wallaroo instance. This allows a seamless connection to the Wallaroo instance and bypasses the standard browser based confirmation link. For more information, see the Wallaroo SDK Essentials Guide: Client Connection.

Update wallarooPrefix = "YOUR PREFIX." and wallarooSuffix = "YOUR SUFFIX" to match the Wallaroo instance used for this demonstration. Note that the . is part of the prefix. If there is no prefix, set wallarooPrefix = "".

import wallaroo

from wallaroo.object import EntityNotFoundError

import pandas as pd

import os

import base64

import pyarrow as pa

import requests

from requests.auth import HTTPBasicAuth

# Used to create unique workspace and pipeline names

import string

import random

# make a random 4 character string to keep workspace, model, and pipeline names unique

suffix= ''.join(random.choice(string.ascii_lowercase) for i in range(4))

display(suffix)

import json

# used to display dataframe information without truncating

from IPython.display import display

pd.set_option('display.max_colwidth', None)

'atwc'

# Retrieve the login credentials stored in ./creds.json

os.environ["WALLAROO_SDK_CREDENTIALS"] = './creds.json'

# Update these to match the Wallaroo instance. The . is part of the prefix;
# if there is no prefix, use wallarooPrefix = ""

wallarooPrefix = "YOUR PREFIX."

wallarooSuffix = "YOUR SUFFIX"

# The values used when this tutorial was run

wallarooPrefix = ""

wallarooSuffix = "autoscale-uat-ee.wallaroo.dev"

# wl = wallaroo.Client(auth_type="user_password")

# Client connection from local Wallaroo instance

wl = wallaroo.Client(api_endpoint=f"https://{wallarooPrefix}api.{wallarooSuffix}",

auth_endpoint=f"https://{wallarooPrefix}keycloak.{wallarooSuffix}",

auth_type="user_password")

APIURL=f"https://{wallarooPrefix}api.{wallarooSuffix}"

APIURL

'https://api.autoscale-uat-ee.wallaroo.dev'

Retrieve the JWT Token

As mentioned earlier, there are multiple methods of authenticating to the Wallaroo instance for MLOps API calls. This tutorial uses the Wallaroo Client method wl.auth.auth_header() from the Wallaroo SDK, extracting the token from the response.

Reference: MLOps API Retrieve Token Through Wallaroo SDK

# Retrieve the token

headers = wl.auth.auth_header()

display(headers)

{'Authorization': 'Bearer eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJEWkc4UE4tOHJ0TVdPdlVGc0V0RWpacXNqbkNjU0tJY3Zyak85X3FxcXc0In0.eyJleHAiOjE2ODg3NTE2NjQsImlhdCI6MTY4ODc1MTYwNCwianRpIjoiNGNmNmFjMzQtMTVjMy00MzU0LWI0ZTYtMGYxOWIzNjg3YmI2IiwiaXNzIjoiaHR0cHM6Ly9rZXljbG9hay5hdXRvc2NhbGUtdWF0LWVlLndhbGxhcm9vLmRldi9hdXRoL3JlYWxtcy9tYXN0ZXIiLCJhdWQiOlsibWFzdGVyLXJlYWxtIiwiYWNjb3VudCJdLCJzdWIiOiJkOWE3MmJkOS0yYTFjLTQ0ZGQtOTg5Zi0zYzdjMTUxMzA4ODUiLCJ0eXAiOiJCZWFyZXIiLCJhenAiOiJzZGstY2xpZW50Iiwic2Vzc2lvbl9zdGF0ZSI6Ijk0MjkxNTAwLWE5MDgtNGU2Ny1hMzBiLTA4MTczMzNlNzYwOCIsImFjciI6IjEiLCJyZWFsbV9hY2Nlc3MiOnsicm9sZXMiOlsiZGVmYXVsdC1yb2xlcy1tYXN0ZXIiLCJvZmZsaW5lX2FjY2VzcyIsInVtYV9hdXRob3JpemF0aW9uIl19LCJyZXNvdXJjZV9hY2Nlc3MiOnsibWFzdGVyLXJlYWxtIjp7InJvbGVzIjpbIm1hbmFnZS11c2VycyIsInZpZXctdXNlcnMiLCJxdWVyeS1ncm91cHMiLCJxdWVyeS11c2VycyJdfSwiYWNjb3VudCI6eyJyb2xlcyI6WyJtYW5hZ2UtYWNjb3VudCIsIm1hbmFnZS1hY2NvdW50LWxpbmtzIiwidmlldy1wcm9maWxlIl19fSwic2NvcGUiOiJwcm9maWxlIGVtYWlsIiwic2lkIjoiOTQyOTE1MDAtYTkwOC00ZTY3LWEzMGItMDgxNzMzM2U3NjA4IiwiZW1haWxfdmVyaWZpZWQiOmZhbHNlLCJodHRwczovL2hhc3VyYS5pby9qd3QvY2xhaW1zIjp7IngtaGFzdXJhLXVzZXItaWQiOiJkOWE3MmJkOS0yYTFjLTQ0ZGQtOTg5Zi0zYzdjMTUxMzA4ODUiLCJ4LWhhc3VyYS1kZWZhdWx0LXJvbGUiOiJ1c2VyIiwieC1oYXN1cmEtYWxsb3dlZC1yb2xlcyI6WyJ1c2VyIl0sIngtaGFzdXJhLXVzZXItZ3JvdXBzIjoie30ifSwibmFtZSI6IkpvaG4gSGFuc2FyaWNrIiwicHJlZmVycmVkX3VzZXJuYW1lIjoiam9obi5odW1tZWxAd2FsbGFyb28uYWkiLCJnaXZlbl9uYW1lIjoiSm9obiIsImZhbWlseV9uYW1lIjoiSGFuc2FyaWNrIiwiZW1haWwiOiJqb2huLmh1bW1lbEB3YWxsYXJvby5haSJ9.QE5WJ6NI5bQob0p2M7KsVXxrAiUUxnsIjZPuHIx7_6kTsDt4zarcCu2b5X6s6wg0EZQDX22oANWUAXnkWRTQd_E6zE7DkKF7H5kodtyu90ewiFM8ULx2iOWy2GkafQTdiuW90-BGDIjAcOiQtOkdHNaNHqJ9go2Lsom1t_b4-FOhh8bAGhMM3aDS0w-Y8dGKClxW_xFSTmOjNLaPxbFs5NCib-_QAsR_PiyfSFNJ_kjIV8f2mdzeyOauj0YOE-w5nXjhbrDvhS1kJ3n_8C2J2eOnEg85OGd3m6VKVzoR7oPzoZH15Jtl8shKTDS6BEUWpzZNfjYjwZdy1KTenCbzAQ'}

Create Workspace

In a production environment, the Wallaroo workspace that contains the pipeline and models would already have been created and deployed. We will quickly recreate those steps using the MLOps API. If the workspace and pipeline have already been created through the Wallaroo SDK Inference Tutorial, then we can skip directly to Deploy Pipeline.

Workspaces are created through the MLOps API with the /v1/api/workspaces/create command. This requires the workspace name be provided, and that the workspace not already exist in the Wallaroo instance.

Reference: MLOps API Create Workspace

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

# Create workspace

apiRequest = f"{APIURL}/v1/api/workspaces/create"

workspace_name = f"apiinferenceexampleworkspace{suffix}"

data = {

"workspace_name": workspace_name

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

display(response)

# Stored for future examples

workspaceId = response['workspace_id']

{'workspace_id': 374}

Upload Model

The model is uploaded using the /v1/api/models/upload_and_convert command. This uploads an ML model to a Wallaroo workspace via POST with Content-Type: multipart/form-data and takes the following parameters:

- Parameters

- name - (REQUIRED string): Name of the model

- visibility - (OPTIONAL string): The visibility of the model as either public or private.

- workspace_id - (REQUIRED int): The numerical id of the workspace to upload the model to. Stored earlier as workspaceId.

Directly afterward, the /models/list_versions command is used to retrieve the model details needed for later steps.

Reference: Wallaroo MLOps API Essentials Guide: Model Management: Upload Model to Workspace

## upload model

# Retrieve the token

headers = wl.auth.auth_header()

apiRequest = f"{APIURL}/v1/api/models/upload_and_convert"

framework='onnx'

model_name = f"{suffix}ccfraud"

data = {

"name": model_name,

"visibility": "public",

"workspace_id": workspaceId,

"conversion": {

"framework": framework,

"python_version": "3.8",

"requirements": []

}

}

files = {

"metadata": (None, json.dumps(data), "application/json"),

'file': (model_name, open('./ccfraud.onnx', 'rb'), "application/octet-stream")

}

response = requests.post(apiRequest, files=files, headers=headers).json()

display(response)

modelId=response['insert_models']['returning'][0]['models'][0]['id']

{'insert_models': {'returning': [{'models': [{'id': 176}]}]}}

# Get the model details

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

apiRequest = f"{APIURL}/v1/api/models/get_by_id"

data = {

"id": modelId

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

display(response)

{'msg': 'The provided model id was not found.', 'code': 400}

# Get the model details

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

apiRequest = f"{APIURL}/v1/api/models/list_versions"

data = {

"model_id": model_name,

"models_pk_id" : modelId

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

display(response)

[{'sha': 'bc85ce596945f876256f41515c7501c399fd97ebcb9ab3dd41bf03f8937b4507',

'models_pk_id': 175,

'model_version': 'fa4c2f8c-769e-4ee1-9a91-fe029a4beffc',

'owner_id': '""',

'model_id': 'vsnaccfraud',

'id': 176,

'file_name': 'vsnaccfraud',

'image_path': 'proxy.replicated.com/proxy/wallaroo/ghcr.io/wallaroolabs/mlflow-deploy:v2023.3.0-main-3481',

'status': 'ready'},

{'sha': 'bc85ce596945f876256f41515c7501c399fd97ebcb9ab3dd41bf03f8937b4507',

'models_pk_id': 175,

'model_version': '701be439-8702-4896-88b5-644bb5cb4d61',

'owner_id': '""',

'model_id': 'vsnaccfraud',

'id': 175,

'file_name': 'vsnaccfraud',

'image_path': 'proxy.replicated.com/proxy/wallaroo/ghcr.io/wallaroolabs/mlflow-deploy:v2023.3.0-main-3481',

'status': 'ready'}]

model_version = response[0]['model_version']

display(model_version)

model_sha = response[0]['sha']

display(model_sha)

'fa4c2f8c-769e-4ee1-9a91-fe029a4beffc'

'bc85ce596945f876256f41515c7501c399fd97ebcb9ab3dd41bf03f8937b4507'
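The list_versions response above holds two entries. response[0] happens to be the entry whose id (176) matches the modelId returned by the upload, and it is also the version that the deployment status later reports as running. This output suggests that the upload's returned id matches the id field of the new version entry. Assuming that holds, a more robust sketch selects the entry by id instead of by list position. version_for_id is a hypothetical helper.

```python
def version_for_id(versions: list, model_id: int) -> tuple:
    """Return (model_version, sha) from the list_versions entry whose "id"
    equals model_id, instead of relying on the order of the list."""
    for entry in versions:
        if entry["id"] == model_id:
            return entry["model_version"], entry["sha"]
    raise LookupError(f"no model version with id {model_id}")


# Usage in this tutorial: model_version, model_sha = version_for_id(response, modelId)
```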

Create Pipeline

Create Pipeline in a Workspace with the /v1/api/pipelines/create command. This creates a new pipeline in the specified workspace.

- Parameters

- pipeline_id - (REQUIRED string): Name of the new pipeline.

- workspace_id - (REQUIRED int): Numerical id of the workspace for the new pipeline. Stored earlier as workspaceId.

- definition - (REQUIRED string): Pipeline definitions, can be {} for none.

For our example, we are setting the pipeline steps through the definition field. This will direct inference requests to the model before output.

Reference: Wallaroo MLOps API Essentials Guide: Pipeline Management: Create Pipeline in a Workspace

# Create pipeline

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

apiRequest = f"{APIURL}/v1/api/pipelines/create"

pipeline_name=f"{suffix}apiinferenceexamplepipeline"

data = {

"pipeline_id": pipeline_name,

"workspace_id": workspaceId,

"definition": {'steps': [{'ModelInference': {'models': [{'name': f'{model_name}', 'version': model_version, 'sha': model_sha}]}}]}

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

pipeline_id = response['pipeline_pk_id']

pipeline_variant_id=response['pipeline_variant_pk_id']

pipeline_variant_version=response['pipeline_variant_version']
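The definition field in the request above nests each model reference three levels deep. The following sketch builds the same single-step structure and checks that each reference carries the name, version, and sha keys used in this example. Requiring exactly these keys is an assumption drawn from this example, not from the API reference, and model_inference_definition is a hypothetical name.

```python
def model_inference_definition(models: list) -> dict:
    """Wrap model references in the single-step definition shape used in
    the request above: {"steps": [{"ModelInference": {"models": [...]}}]}."""
    for model in models:
        # The example passes name, version, and sha for each model
        missing = {"name", "version", "sha"} - set(model)
        if missing:
            raise ValueError(f"model reference missing {sorted(missing)}")
    return {"steps": [{"ModelInference": {"models": models}}]}


# Usage in this tutorial:
# model_inference_definition([{"name": model_name, "version": model_version, "sha": model_sha}])
```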

Deploy Pipeline

With the pipeline created and the model uploaded into the workspace, the pipeline can be deployed. This will allocate resources from the Kubernetes cluster hosting the Wallaroo instance and prepare the pipeline to process inference requests.

Pipelines are deployed through the MLOps API command /v1/api/pipelines/deploy which takes the following parameters:

- Parameters

- deploy_id (REQUIRED string): The name for the pipeline deployment.

- engine_config (OPTIONAL string): Additional configuration options for the pipeline.

- pipeline_version_pk_id (REQUIRED int): Pipeline version id. Captured earlier as pipeline_variant_id.

- model_configs (OPTIONAL Array int): Ids of model configs to apply.

- model_ids (OPTIONAL Array int): Ids of models to apply to the pipeline. If passed in, model_configs will be created automatically.

- models (OPTIONAL Array models): If the model ids are not available as a pipeline step, the models’ data can be passed to it through this method. The options below are only required if models are provided as a parameter.

  - name (REQUIRED string): Name of the uploaded model that is in the same workspace as the pipeline. Captured earlier as the model_name variable.

  - version (REQUIRED string): Version of the model to use.

  - sha (REQUIRED string): SHA value of the model.

- pipeline_id (REQUIRED int): Numerical value of the pipeline to deploy.

- Returns

- id (int): The deployment id.

Reference: Wallaroo MLOps API Essentials Guide: Pipeline Management: Deploy a Pipeline

# Deploy Pipeline

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

apiRequest = f"{APIURL}/v1/api/pipelines/deploy"

exampleModelDeployId=pipeline_name

data = {

"deploy_id": exampleModelDeployId,

"pipeline_version_pk_id": pipeline_variant_id,

"model_ids": [

modelId

],

"pipeline_id": pipeline_id

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

display(response)

exampleModelDeploymentId=response['id']

# wait 45 seconds for the pipeline to complete deployment

import time

time.sleep(45)

{'id': 260}

Get Deployment Status

This returns the deployment status. We wait until the deployment reports the status Running, as in the output below.

- Parameters

- name - (REQUIRED string): The deployment in the format {deployment_name}-{deployment_id}.

Example: The status of the deployed model pipeline will be displayed.

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

# Get model pipeline deployment

api_request = f"{APIURL}/v1/api/status/get_deployment"

data = {

"name": f"{pipeline_name}-{exampleModelDeploymentId}"

}

response = requests.post(api_request, json=data, headers=headers, verify=True).json()

response

{'status': 'Running',

'details': [],

'engines': [{'ip': '10.244.17.3',

'name': 'engine-f77b5c44b-4j2n5',

'status': 'Running',

'reason': None,

'details': [],

'pipeline_statuses': {'pipelines': [{'id': 'vsnaapiinferenceexamplepipeline',

'status': 'Running'}]},

'model_statuses': {'models': [{'name': 'vsnaccfraud',

'version': 'fa4c2f8c-769e-4ee1-9a91-fe029a4beffc',

'sha': 'bc85ce596945f876256f41515c7501c399fd97ebcb9ab3dd41bf03f8937b4507',

'status': 'Running'}]}}],

'engine_lbs': [{'ip': '10.244.17.4',

'name': 'engine-lb-584f54c899-q877m',

'status': 'Running',

'reason': None,

'details': []}],

'sidekicks': []}
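The deploy step above waits a fixed 45 seconds before checking the status. As an alternative sketch that is not part of the tutorial, the hypothetical wait_for_status helper below polls until the status reaches Running, the value shown in the response above, or gives up after a timeout. The helper takes any zero-argument function that returns the status dictionary, so it does not depend on how the request is made.

```python
import time
from typing import Callable


def wait_for_status(
    get_status: Callable[[], dict],
    target: str = "Running",
    timeout: float = 300.0,
    interval: float = 5.0,
) -> dict:
    """Call get_status() until its "status" equals target.

    Returns the final status dictionary, or raises TimeoutError once
    timeout seconds have passed without reaching target.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status.get("status") == target:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"last status was {status.get('status')!r}")
        time.sleep(interval)
```

In this tutorial, that function would refresh the header with wl.auth.auth_header(), set the Content-Type, and post to /v1/api/status/get_deployment as the cell above does.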

Get External Inference URL

The API command /admin/get_pipeline_external_url retrieves the external inference URL for a specific pipeline in a workspace.

- Parameters

- workspace_id (REQUIRED integer): The workspace integer id.

- pipeline_name (REQUIRED string): The name of the pipeline.

In this example, the workspace id stored earlier as workspaceId and the pipeline name stored as pipeline_name are used for the /admin/get_pipeline_external_url request.

The External Inference URL will be stored as a variable for the next step.

Reference: Wallaroo MLOps API Essentials Guide: Pipeline Management: Get External Inference URL

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

## Retrieve the pipeline's External Inference URL

apiRequest = f"{APIURL}/v1/api/admin/get_pipeline_external_url"

data = {

"workspace_id": workspaceId,

"pipeline_name": pipeline_name

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

deployurl = response['url']

deployurl

'https://api.autoscale-uat-ee.wallaroo.dev/v1/api/pipelines/infer/vsnaapiinferenceexamplepipeline-260/vsnaapiinferenceexamplepipeline'

Perform Inference Through External URL

The inference can now be performed through the External Inference URL. This URL will accept the same inference data file that is used with the Wallaroo SDK, or with an Internal Inference URL as used in the Internal Pipeline Inference URL Tutorial.

For this example, the deployurl retrieved in the Get External Inference URL step is used to submit a single inference request with a pandas-records input, followed by a request with an Apache Arrow input.

Reference: Wallaroo MLOps API Essentials Guide: Pipeline Management: Perform Inference Through External URL

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json; format=pandas-records'

## Inference through external URL using dataframe

# retrieve the json data to submit

data = [

{

"tensor":[

1.0678324729,

0.2177810266,

-1.7115145262,

0.682285721,

1.0138553067,

-0.4335000013,

0.7395859437,

-0.2882839595,

-0.447262688,

0.5146124988,

0.3791316964,

0.5190619748,

-0.4904593222,

1.1656456469,

-0.9776307444,

-0.6322198963,

-0.6891477694,

0.1783317857,

0.1397992467,

-0.3554220649,

0.4394217877,

1.4588397512,

-0.3886829615,

0.4353492889,

1.7420053483,

-0.4434654615,

-0.1515747891,

-0.2668451725,

-1.4549617756

]

}

]

# submit the request via POST, import as pandas DataFrame

response = pd.DataFrame.from_records(

requests.post(

deployurl,

json=data,

headers=headers)

.json()

)

display(response.loc[:,["time", "out"]])

| | time | out |

|---|---|---|

| 0 | 1688750664105 | {'dense_1': [0.0014974177]} |

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/vnd.apache.arrow.file'

# set accept as apache arrow table

headers['Accept']="application/vnd.apache.arrow.file"

# Submit arrow file

dataFile="./data/cc_data_10k.arrow"

with open(dataFile, 'rb') as f:

    data = f.read()

response = requests.post(

deployurl,

headers=headers,

data=data,

verify=True

)

# Arrow table is retrieved

with pa.ipc.open_file(response.content) as reader:

    arrow_table = reader.read_all()

# convert to a pandas DataFrame and display the first 5 rows

display(arrow_table.to_pandas().head(5).loc[:,["time", "out"]])

| | time | out |

|---|---|---|

| 0 | 1688750664889 | {'dense_1': [0.99300325]} |

| 1 | 1688750664889 | {'dense_1': [0.99300325]} |

| 2 | 1688750664889 | {'dense_1': [0.99300325]} |

| 3 | 1688750664889 | {'dense_1': [0.99300325]} |

| 4 | 1688750664889 | {'dense_1': [0.0010916889]} |

Undeploy the Pipeline

With the tutorial complete, we’ll undeploy the pipeline with /v1/api/pipelines/undeploy and return the resources to the Wallaroo instance.

Reference: Wallaroo MLOps API Essentials Guide: Pipeline Management: Undeploy a Pipeline

# Retrieve the token

headers = wl.auth.auth_header()

# set Content-Type type

headers['Content-Type']='application/json'

apiRequest = f"{APIURL}/v1/api/pipelines/undeploy"

data = {

"pipeline_id": pipeline_id,

"deployment_id":exampleModelDeploymentId

}

response = requests.post(apiRequest, json=data, headers=headers, verify=True).json()

display(response)

None

Wallaroo supports the ability to perform inferences through the SDK and through the API for each deployed pipeline. For more information on how to use Wallaroo, see the Wallaroo Documentation Site for full details.

3 - Large Language Model with GPU Pipeline Deployment in Wallaroo Demonstration

This tutorial and the assets can be downloaded as part of the Wallaroo Tutorials repository.

Large Language Model with GPU Pipeline Deployment in Wallaroo Demonstration

Wallaroo supports the use of GPUs for model deployment and inferences. This demonstration uses a Hugging Face Large Language Model (LLM), stored in a registry service, that creates summaries of larger text strings.

Tutorial Goals

For this demonstration, a cluster with GPU resources will be hosting the Wallaroo instance.

- The containerized model hf-bart-summarizer3 will be registered to a Wallaroo workspace.

- The model will be added as a step to a Wallaroo pipeline.

- When the pipeline is deployed, the deployment configuration will specify the allocation of a GPU to the pipeline.

- A sample text is submitted as an inference input to be summarized, and the results and elapsed time are displayed.

Prerequisites

The following is required for this tutorial:

- A Wallaroo Enterprise instance, version 2023.2.1 or greater, installed into a GPU-enabled Kubernetes cluster as described in the Wallaroo Create GPU Nodepools Kubernetes Clusters guide.

- The Wallaroo SDK version 2023.2.1 or greater.

References

- Wallaroo SDK Essentials Guide: Pipeline Deployment Configuration

- Wallaroo SDK Reference wallaroo.deployment_config

Tutorial Steps

Import Libraries

The first step is to import the libraries we’ll be using. These are included by default in the Wallaroo instance’s JupyterHub service.

import json
import os
import pickle
import wallaroo
from wallaroo.pipeline import Pipeline
from wallaroo.deployment_config import DeploymentConfigBuilder
from wallaroo.framework import Framework
import pyarrow as pa
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.cluster import KMeans

Connect to the Wallaroo Instance through the User Interface

The next step is to connect to Wallaroo through the Wallaroo client. The Python library is included in the Wallaroo install and available through the JupyterHub interface provided with your Wallaroo environment.

This is accomplished using the wallaroo.Client() command, which provides a URL to grant the SDK permission to your specific Wallaroo environment. When displayed, enter the URL into a browser and confirm permissions. Store the connection into a variable that can be referenced later.

If logging into the Wallaroo instance through the internal JupyterHub service, use wl = wallaroo.Client(). For more information on Wallaroo Client settings, see the Client Connection guide.

wl = wallaroo.Client()

Register MLFlow Model in Wallaroo

MLFlow containerized models require that the input and output schemas be defined in Apache Arrow format. In this example, both the input field (inputs) and the output field (summary_text) are strings.

Once complete, the MLFlow containerized model is registered to the Wallaroo workspace.
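Because the input schema declares a single string field named `inputs`, every inference record sent to this model should carry that field as text. The following standard-library sketch checks records against that expectation before they are submitted. The `record_errors` helper and `INPUT_FIELDS` mapping are hypothetical and only mirror the `input_schema` defined in this step; Wallaroo performs its own schema handling.

```python
# Field names and Python types mirroring input_schema: one string field.
INPUT_FIELDS = {"inputs": str}


def record_errors(records, fields=INPUT_FIELDS):
    """Return a list of problems found; an empty list means all records match."""
    errors = []
    for i, record in enumerate(records):
        for name, expected in fields.items():
            if name not in record:
                errors.append(f"record {i}: missing field {name!r}")
            elif not isinstance(record[name], expected):
                errors.append(
                    f"record {i}: {name!r} must be {expected.__name__}, "
                    f"got {type(record[name]).__name__}"
                )
    return errors


print(record_errors([{"inputs": "LinkedIn is a business platform."}]))
print(record_errors([{"inputs": 42}, {"text": "wrong field name"}]))
```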

input_schema = pa.schema([
    pa.field('inputs', pa.string())
])

output_schema = pa.schema([
    pa.field('summary_text', pa.string()),
])

model = wl.register_model_image(
    name="hf-bart-summarizer3",
    image="ghcr.io/wallaroolabs/doc-samples/gpu-hf-summ-official2:1.30"
).configure("mlflow", input_schema=input_schema, output_schema=output_schema)

model

| Name | hf-bart-summarizer3 |
|---|---|
| Version | d511a20c-9612-4112-9368-2d79ae764dec |
| File Name | none |
| SHA | 360dcd343a593e87639106757bad58a7d960899c915bbc9787e7601073bc1121 |
| Status | ready |
| Image Path | proxy.replicated.com/proxy/wallaroo/ghcr.io/wallaroolabs/gpu-hf-summ-official2:1.30 |
| Updated At | 2023-11-Jul 19:23:57 |

Pipeline Deployment With GPU

The registered model will be added to our sample pipeline as a pipeline step. When the pipeline is deployed, a specific resource configuration is applied that allocates a GPU to our MLFlow containerized model.

MLFlow models are run in the Containerized Runtime in the pipeline. As such, the DeploymentConfigBuilder method .sidekick_gpus(model: wallaroo.model.Model, core_count: int) is used to allocate 1 GPU to our model.

The pipeline is then deployed with our deployment configuration, and a GPU from the cluster is allocated for use by this model.

pipeline_name = "test-gpu7"
pipeline = wl.build_pipeline(pipeline_name)
pipeline.add_model_step(model)

deployment_config = DeploymentConfigBuilder() \
    .cpus(0.25).memory('1Gi').gpus(0) \
    .sidekick_gpus(model, 1) \
    .sidekick_env(model, {"GUNICORN_CMD_ARGS": "--timeout=180 --workers=1"}) \
    .image("ghcr.io/wallaroolabs/doc-samples/gpu-hf-summ-official2:1.30") \
    .build()

deployment_config

{'engine': {'cpu': 0.25,
  'resources': {'limits': {'cpu': 0.25, 'memory': '1Gi', 'nvidia.com/gpu': 0},
   'requests': {'cpu': 0.25, 'memory': '1Gi', 'nvidia.com/gpu': 0}},
  'gpu': 0,
  'image': 'proxy.replicated.com/proxy/wallaroo/ghcr.io/wallaroolabs/fitzroy-mini-cuda:v2023.3.0-josh-fitzroy-gpu-3374'},
 'enginelb': {},
 'engineAux': {'images': {'hf-bart-summarizer3-28': {'resources': {'limits': {'nvidia.com/gpu': 1},
     'requests': {'nvidia.com/gpu': 1}},
    'env': [{'name': 'GUNICORN_CMD_ARGS',
      'value': '--timeout=180 --workers=1'}]}}},
 'node_selector': {}}
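The printed configuration shows where the GPU actually lands: the engine requests `nvidia.com/gpu: 0`, while the model's sidekick entry under `engineAux.images` requests 1. A quick way to confirm this before deploying is to walk the dictionary and collect each component's GPU limit. The sketch below uses a trimmed copy of the output above; `gpu_limits` is a hypothetical helper, and the dictionary layout is taken only from this tutorial's output, not from a documented schema.

```python
# Trimmed copy of the deployment_config output shown above.
deployment_config = {
    "engine": {
        "cpu": 0.25,
        "gpu": 0,
        "resources": {
            "limits": {"cpu": 0.25, "memory": "1Gi", "nvidia.com/gpu": 0},
            "requests": {"cpu": 0.25, "memory": "1Gi", "nvidia.com/gpu": 0},
        },
    },
    "enginelb": {},
    "engineAux": {"images": {"hf-bart-summarizer3-28": {
        "resources": {"limits": {"nvidia.com/gpu": 1},
                      "requests": {"nvidia.com/gpu": 1}},
    }}},
    "node_selector": {},
}


def _gpu_limit(spec: dict) -> int:
    """Return the nvidia.com/gpu limit in a component spec, or 0 if absent."""
    return spec.get("resources", {}).get("limits", {}).get("nvidia.com/gpu", 0)


def gpu_limits(config: dict) -> dict:
    """Map the engine and each sidekick image to its GPU limit."""
    limits = {"engine": _gpu_limit(config.get("engine", {}))}
    for name, spec in config.get("engineAux", {}).get("images", {}).items():
        limits[name] = _gpu_limit(spec)
    return limits


print(gpu_limits(deployment_config))
# {'engine': 0, 'hf-bart-summarizer3-28': 1}
```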

pipeline.deploy(deployment_config=deployment_config)

pipeline.status()

Waiting for deployment - this will take up to 90s ................ ok

{'status': 'Running',
 'details': [],
 'engines': [{'ip': '10.244.38.26',
   'name': 'engine-7457c88db4-42ww6',
   'status': 'Running',
   'reason': None,
   'details': [],
   'pipeline_statuses': {'pipelines': [{'id': 'test-gpu7',
      'status': 'Running'}]},
   'model_statuses': {'models': [{'name': 'hf-bart-summarizer3',
      'version': 'd511a20c-9612-4112-9368-2d79ae764dec',
      'sha': '360dcd343a593e87639106757bad58a7d960899c915bbc9787e7601073bc1121',
      'status': 'Running'}]}}],
 'engine_lbs': [{'ip': '10.244.0.113',
   'name': 'engine-lb-584f54c899-ht5cd',
   'status': 'Running',
   'reason': None,
   'details': []}],
 'sidekicks': [{'ip': '10.244.41.21',
   'name': 'engine-sidekick-hf-bart-summarizer3-28-f5f8d6567-zzh62',
   'status': 'Running',
   'reason': None,
   'details': [],
   'statuses': '\n'}]}

pipeline.status()

{'status': 'Running',
 'details': [],
 'engines': [{'ip': '10.244.38.26',
   'name': 'engine-7457c88db4-42ww6',
   'status': 'Running',
   'reason': None,
   'details': [],
   'pipeline_statuses': {'pipelines': [{'id': 'test-gpu7',
      'status': 'Running'}]},
   'model_statuses': {'models': [{'name': 'hf-bart-summarizer3',
      'version': 'd511a20c-9612-4112-9368-2d79ae764dec',
      'sha': '360dcd343a593e87639106757bad58a7d960899c915bbc9787e7601073bc1121',
      'status': 'Running'}]}}],
 'engine_lbs': [{'ip': '10.244.0.113',
   'name': 'engine-lb-584f54c899-ht5cd',
   'status': 'Running',
   'reason': None,
   'details': []}],
 'sidekicks': [{'ip': '10.244.41.21',
   'name': 'engine-sidekick-hf-bart-summarizer3-28-f5f8d6567-zzh62',
   'status': 'Running',
   'reason': None,
   'details': [],
   'statuses': '\n'}]}
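With a GPU-backed sidekick, the engine, load balancer, and sidekick pods can become ready at different times, so it can help to check every pod rather than only the top-level `status`. The sketch below reads the `engines`, `engine_lbs`, and `sidekicks` lists seen in the `pipeline.status()` output above. Both helpers are hypothetical, and the status dictionary is a trimmed copy of that output.

```python
# Trimmed copy of the pipeline.status() output shown above.
status = {
    "status": "Running",
    "engines": [{"name": "engine-7457c88db4-42ww6", "status": "Running"}],
    "engine_lbs": [{"name": "engine-lb-584f54c899-ht5cd", "status": "Running"}],
    "sidekicks": [{"name": "engine-sidekick-hf-bart-summarizer3-28-f5f8d6567-zzh62",
                   "status": "Running"}],
}


def pods_not_running(status: dict) -> list:
    """Return the names of engine, load balancer, and sidekick pods not 'Running'."""
    pending = []
    for group in ("engines", "engine_lbs", "sidekicks"):
        for pod in status.get(group, []):
            if pod.get("status") != "Running":
                pending.append(pod.get("name"))
    return pending


def deployment_ready(status: dict) -> bool:
    """True when the pipeline and every listed pod report 'Running'."""
    return status.get("status") == "Running" and not pods_not_running(status)


print(deployment_ready(status), pods_not_running(status))
```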

Sample Text Inference

A sample inference is performed 10 times using a description of LinkedIn as the input text, and the time to complete each request is displayed. In this example, each summarization request takes roughly 2.5 seconds.
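The timing loop in this step measures each request with `time.time()`. A minimal sketch of the same measurement pattern, assuming any zero-argument callable can stand in for the inference call, is shown below. It uses `time.perf_counter()`, which Python provides specifically for measuring short intervals, and reports both the per-call mean and the total. `time_calls` is a hypothetical helper, and `time.sleep` is only a stand-in for `pipeline.infer_from_file(...)`.

```python
import time
from statistics import mean


def time_calls(fn, n: int = 10) -> list:
    """Call fn() n times and return the duration of each call in seconds."""
    durations = []
    for _ in range(n):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


# Stand-in for: pipeline.infer_from_file('test_data.json', timeout=120)
durations = time_calls(lambda: time.sleep(0.01), n=3)
print(f"mean {mean(durations):.3f}s per call, total {sum(durations):.3f}s")
```

Keeping each call's duration in a list, rather than only printing it, makes it easy to compare the first request (which may include warm-up) against later ones.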

input_data = {
    "inputs": ["LinkedIn (/lɪŋktˈɪn/) is a business and employment-focused social media platform that works through websites and mobile apps. It launched on May 5, 2003. It is now owned by Microsoft. The platform is primarily used for professional networking and career development, and allows jobseekers to post their CVs and employers to post jobs. From 2015 most of the company's revenue came from selling access to information about its members to recruiters and sales professionals. Since December 2016, it has been a wholly owned subsidiary of Microsoft. As of March 2023, LinkedIn has more than 900 million registered members from over 200 countries and territories. LinkedIn allows members (both workers and employers) to create profiles and connect with each other in an online social network which may represent real-world professional relationships. Members can invite anyone (whether an existing member or not) to become a connection. LinkedIn can also be used to organize offline events, join groups, write articles, publish job postings, post photos and videos, and more."]
}

dataframe = pd.DataFrame(input_data)
dataframe.to_json('test_data.json', orient='records')
dataframe

| | inputs |
|---|---|
| 0 | LinkedIn (/lɪŋktˈɪn/) is a business and employ... |
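`DataFrame.to_json(..., orient='records')` writes the DataFrame as a JSON list with one object per row, so `test_data.json` holds a list containing a single `{"inputs": "..."}` object. The standard-library sketch below writes and reads back a file with the same record layout, which can be useful for checking an input file without pandas. The `write_records` helper and the temporary path are hypothetical, and the exact bytes may differ from pandas' output (for example, in whitespace).

```python
import json
import os
import tempfile


def write_records(path: str, texts: list) -> None:
    """Write [{"inputs": text}, ...], one JSON object per input row."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump([{"inputs": t} for t in texts], f)


# Hypothetical temporary location instead of ./test_data.json
path = os.path.join(tempfile.mkdtemp(), "test_data.json")
write_records(path, ["LinkedIn is a business and employment-focused social media platform."])

with open(path, encoding="utf-8") as f:
    records = json.load(f)
print(records)
```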

import time

start = time.time()
end = time.time()
end - start

2.765655517578125e-05

start = time.time()
elapsed_time = 0

for i in range(10):
    s = time.time()
    res = pipeline.infer_from_file('test_data.json', timeout=120)
    print(res)
    e = time.time()
    # Time taken by this inference request, in seconds
    el = e - s
    print(el)

# Total time for all 10 requests
end = time.time()
elapsed_time += end - start
print('Execution time:', elapsed_time, 'seconds')

time in.inputs \
0 2023-07-11 19:27:50.806 LinkedIn (/lɪŋktˈɪn/) is a business and employ...
out.summary_text check_failures
0 LinkedIn is a business and employment-focused ... 0
2.616016387939453

time in.inputs \
0 2023-07-11 19:27:53.421 LinkedIn (/lɪŋktˈɪn/) is a business and employ...
out.summary_text check_failures
0 LinkedIn is a business and employment-focused ... 0
2.478372097015381

time in.inputs \
0 2023-07-11 19:27:55.901 LinkedIn (/lɪŋktˈɪn/) is a business and employ...
out.summary_text check_failures
0 LinkedIn is a business and employment-focused ... 0
2.453855514526367

time in.inputs \
0 2023-07-11 19:27:58.365 LinkedIn (/lɪŋktˈɪn/) is a business and employ...
out.summary_text check_failures

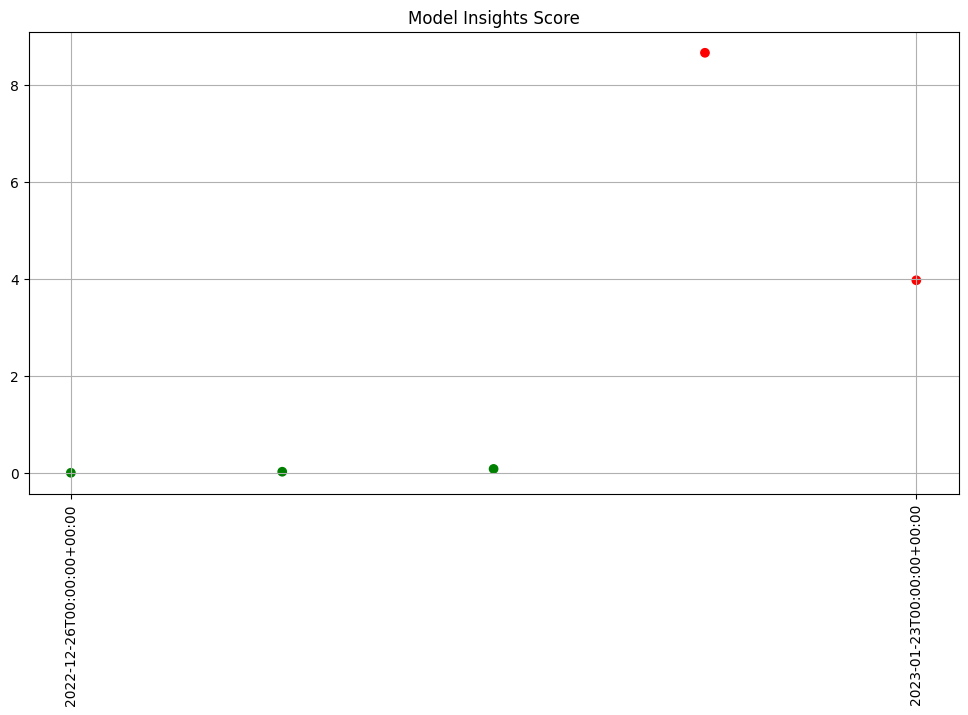

0 LinkedIn is a business and employment-focused ... 0