Welcome to Wallaroo! Whether you’re using our free Community Edition or the Enterprise Edition, these Quick Start Guides are made to help learn how to deploy your ML Models with Wallaroo. Each of these guides includes a Jupyter Notebook with the documentation, code, and sample data that you can download and run wherever you have Wallaroo installed.

Wallaroo Feature Tutorials

- 1: Setting Up Wallaroo for Sample Pipelines and Models

- 2: Wallaroo ML Model Upload and Registrations

- 2.1: Model Registry Service with Wallaroo Tutorials

- 2.2: Wallaroo SDK Upload Tutorials: Arbitrary Python

- 2.2.1: Wallaroo SDK Upload Arbitrary Python Tutorial: Generate VGG16 Model

- 2.2.2: Wallaroo SDK Upload Arbitrary Python Tutorial: Deploy VGG16 Model

- 2.3: Wallaroo SDK Upload Tutorials: Hugging Face

- 2.3.1: Wallaroo API Upload Tutorial: Hugging Face Zero Shot Classification

- 2.3.2: Wallaroo SDK Upload Tutorial: Hugging Face Zero Shot Classification

- 2.4: Wallaroo SDK Upload Tutorials: Python Step Shape

- 2.5: Wallaroo SDK Upload Tutorials: Pytorch

- 2.5.1: Wallaroo SDK Upload Tutorial: Pytorch Single IO

- 2.5.2: Wallaroo SDK Upload Tutorial: Pytorch Multiple IO

- 2.6: Wallaroo SDK Upload Tutorials: SKLearn

- 2.6.1: Wallaroo SDK Upload Tutorial: SKLearn Clustering Kmeans

- 2.6.2: Wallaroo SDK Upload Tutorial: SKLearn Clustering SVM

- 2.6.3: Wallaroo SDK Upload Tutorial: SKLearn Linear Regression

- 2.6.4: Wallaroo SDK Upload Tutorial: SKLearn Logistic Regression

- 2.6.5: Wallaroo SDK Upload Tutorial: SKLearn SVM PCA

- 2.7: Wallaroo SDK Upload Tutorials: Tensorflow

- 2.8: Wallaroo SDK Upload Tutorials: Tensorflow Keras

- 2.9: Wallaroo SDK Upload Tutorials: XGBoost

- 2.9.1: Wallaroo SDK Upload Tutorial: RF Regressor Tutorial

- 2.9.2: Wallaroo SDK Upload Tutorial: XGBoost Classification

- 2.9.3: Wallaroo SDK Upload Tutorial: XGBoost Regressor

- 2.9.4: Wallaroo SDK Upload Tutorial: XGBoost RF Classification

- 2.10: Model Conversion Tutorials

- 2.10.1: PyTorch to ONNX Outside Wallaroo

- 2.10.2: XGBoost Convert to ONNX

- 3: Wallaroo Observability

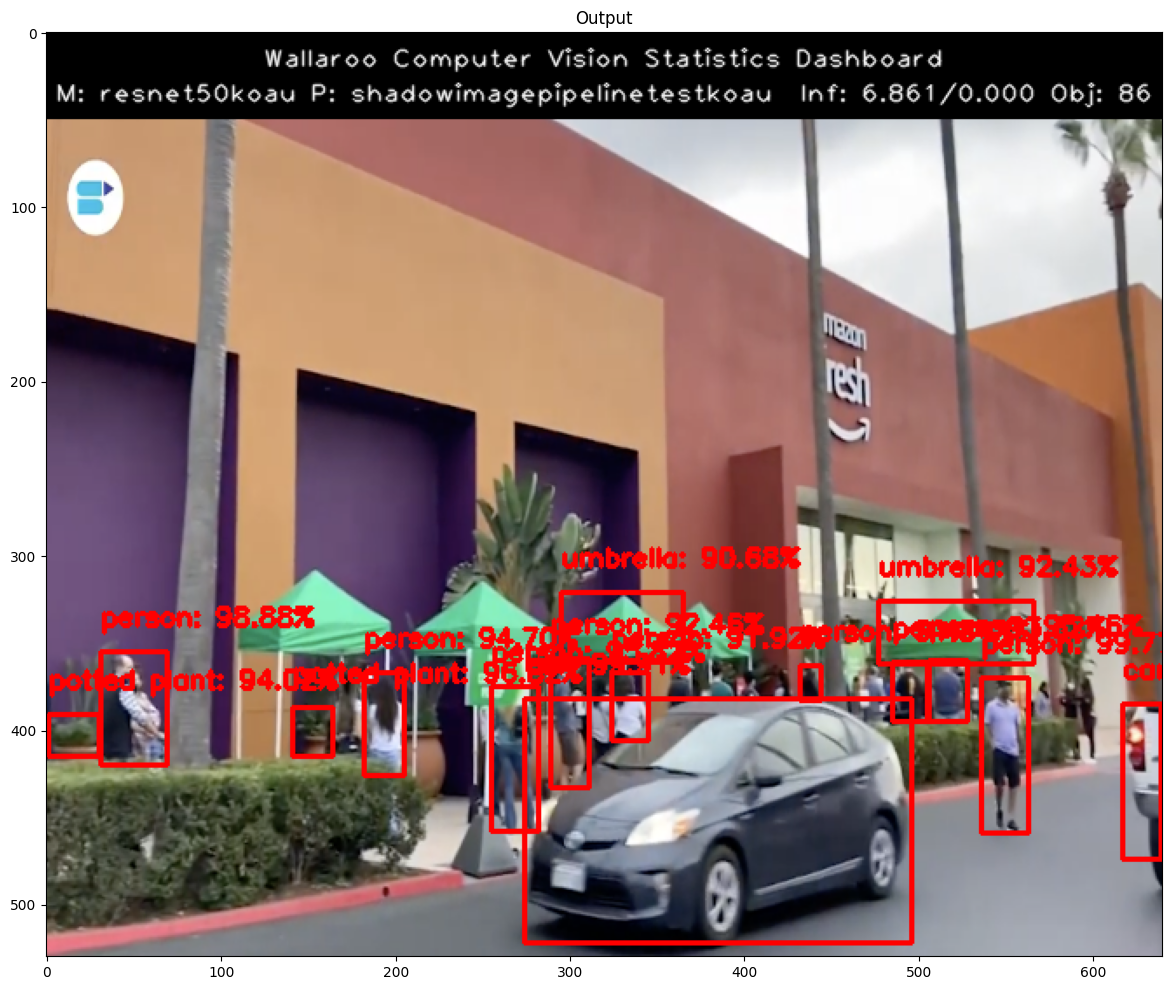

- 3.1: Wallaroo Edge Computer Vision Observability

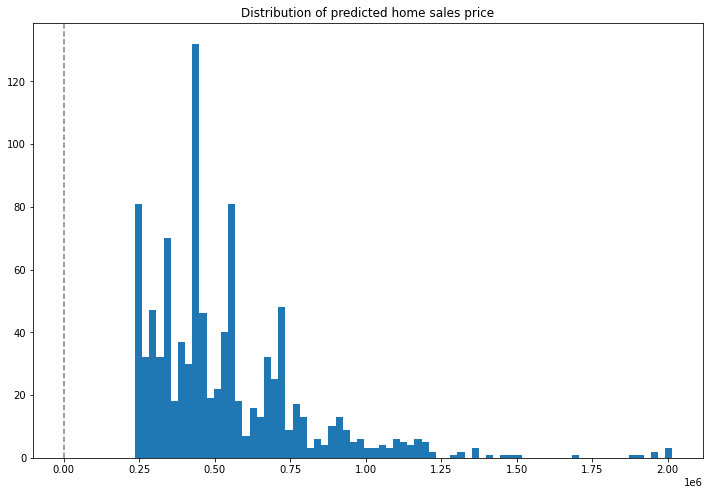

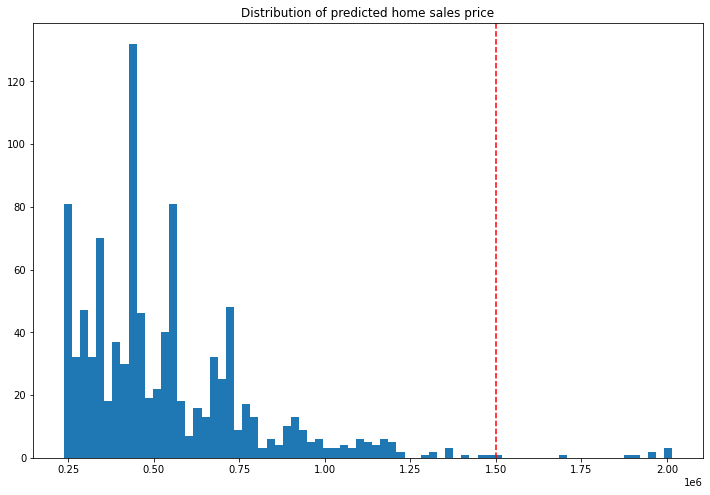

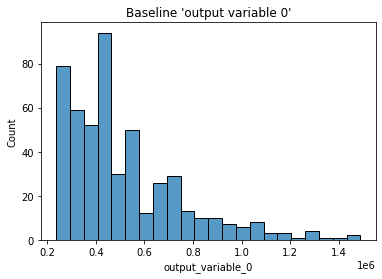

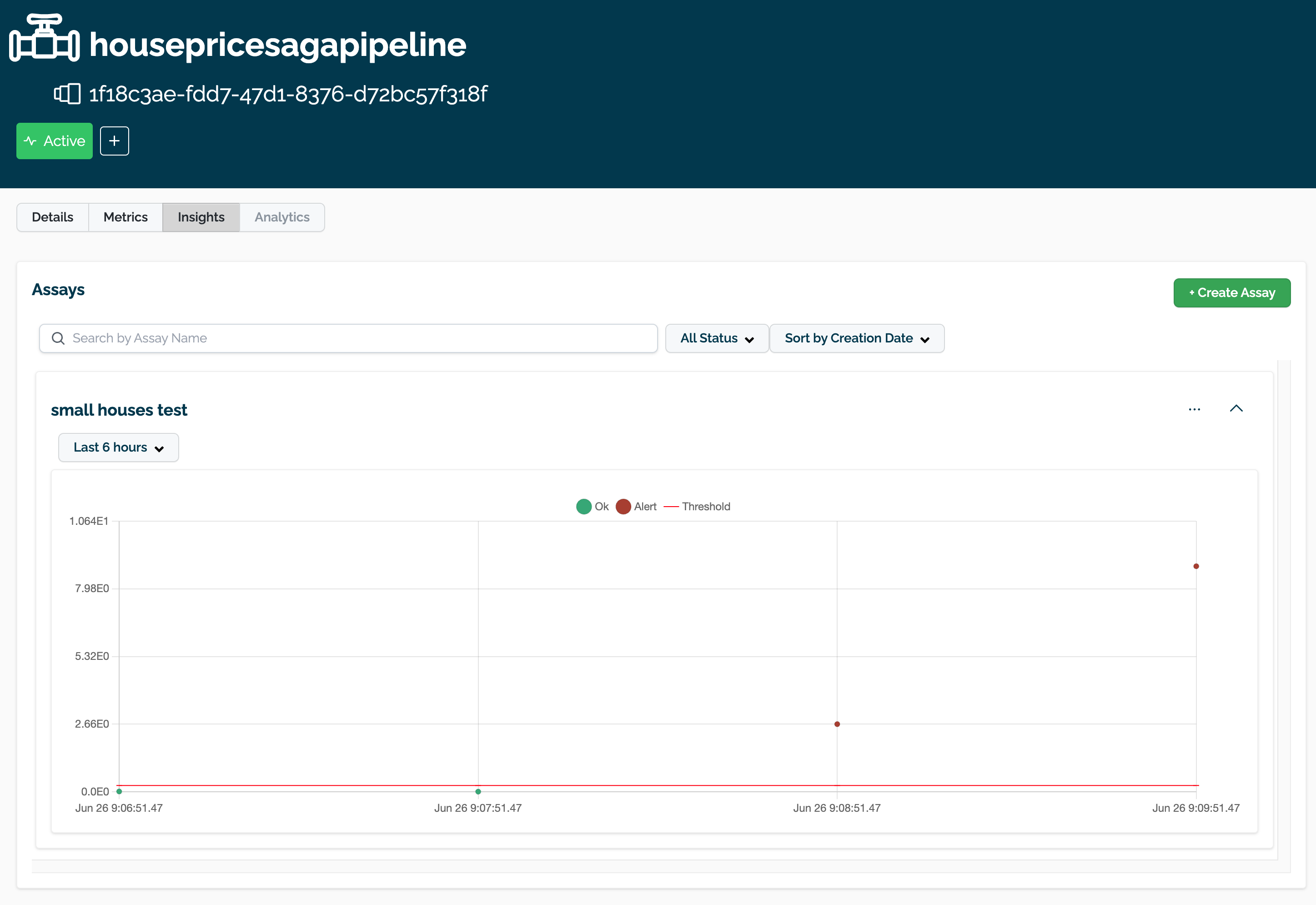

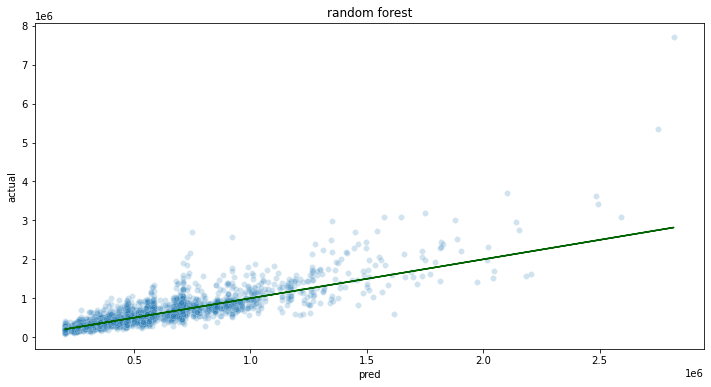

- 3.2: Wallaroo Model Observability: Anomaly Detection with CCFraud

- 3.3: Wallaroo Model Observability: Anomaly Detection with House Price Prediction

- 3.4: Wallaroo Edge Observability with Classification Financial Models

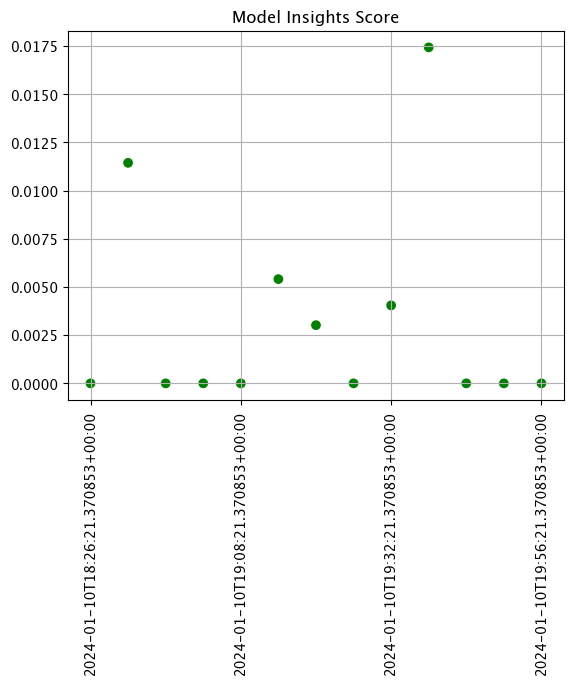

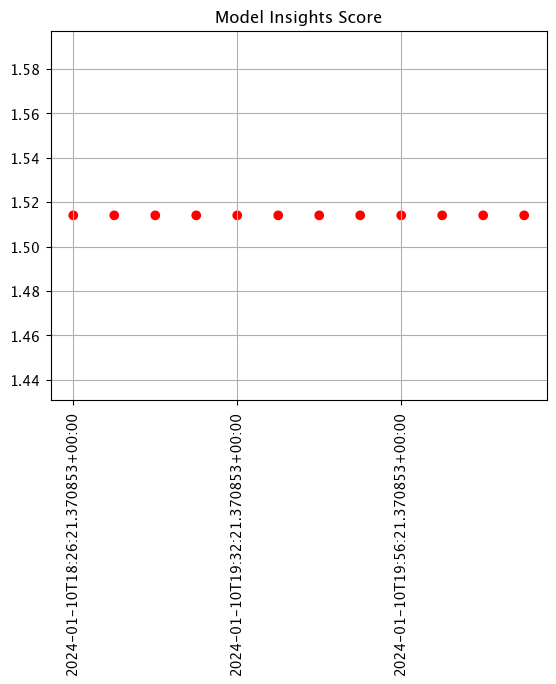

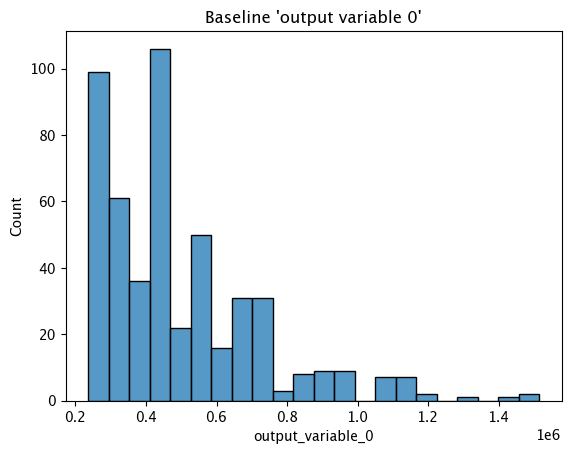

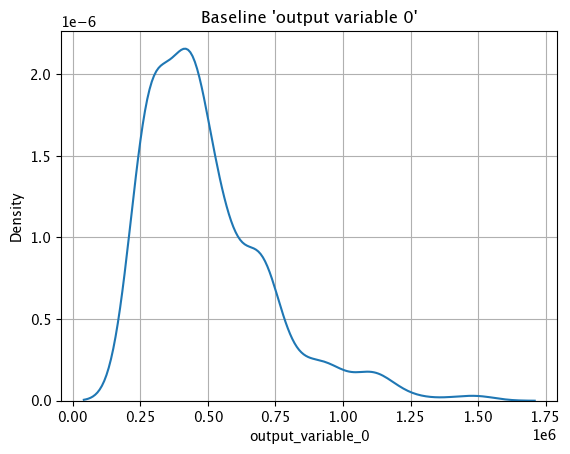

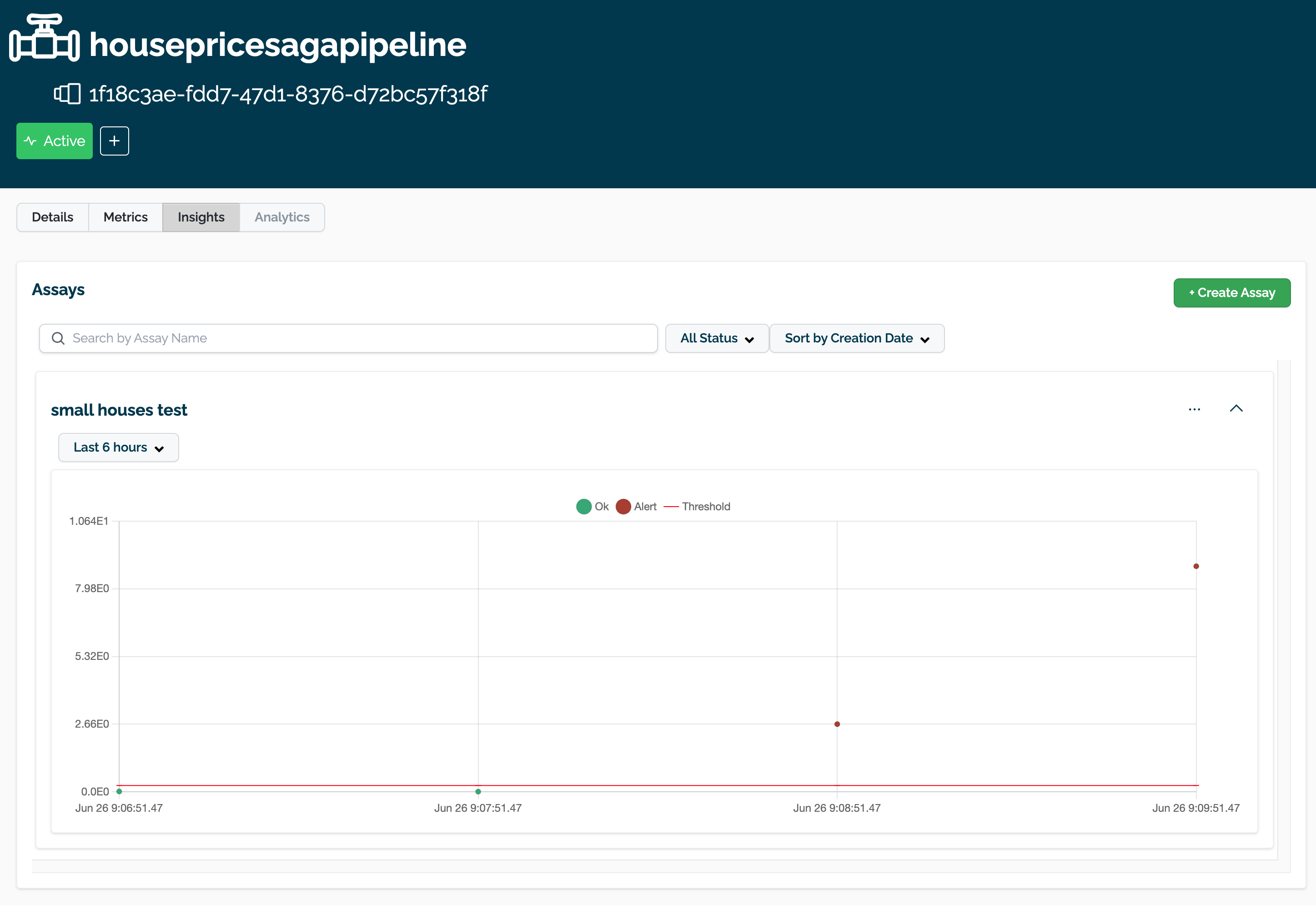

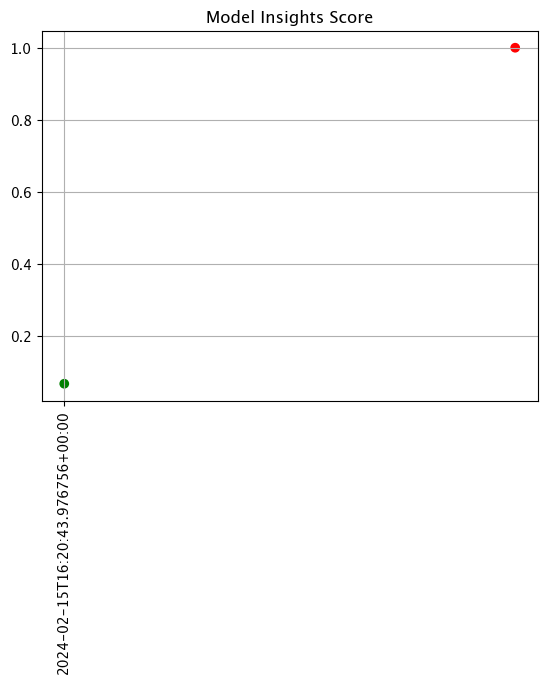

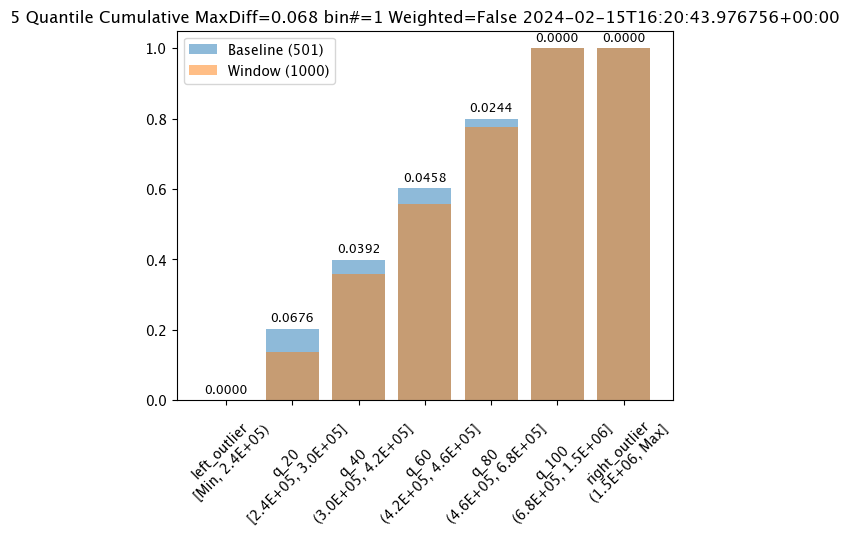

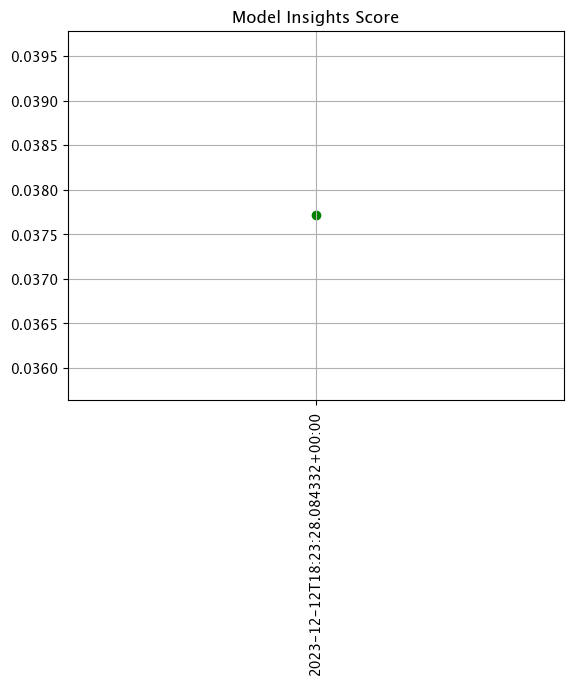

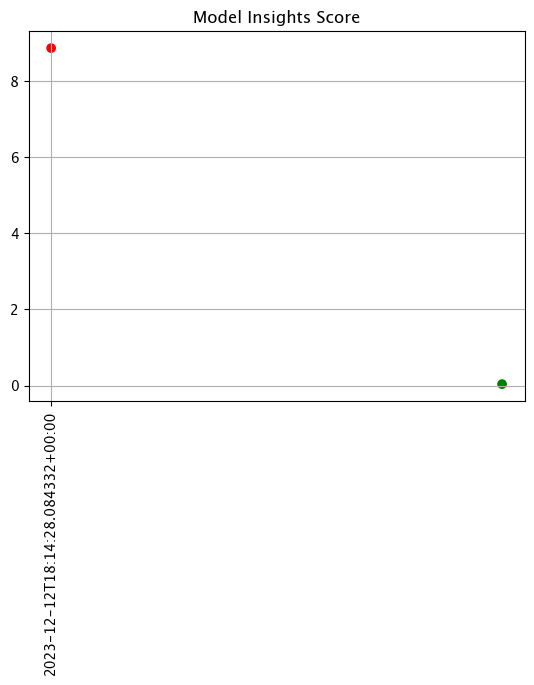

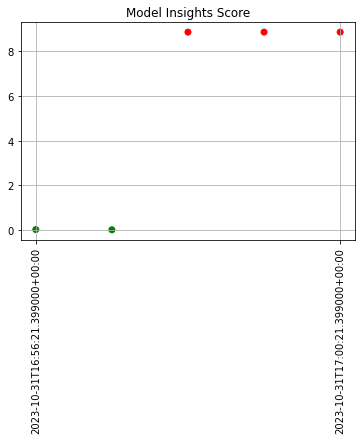

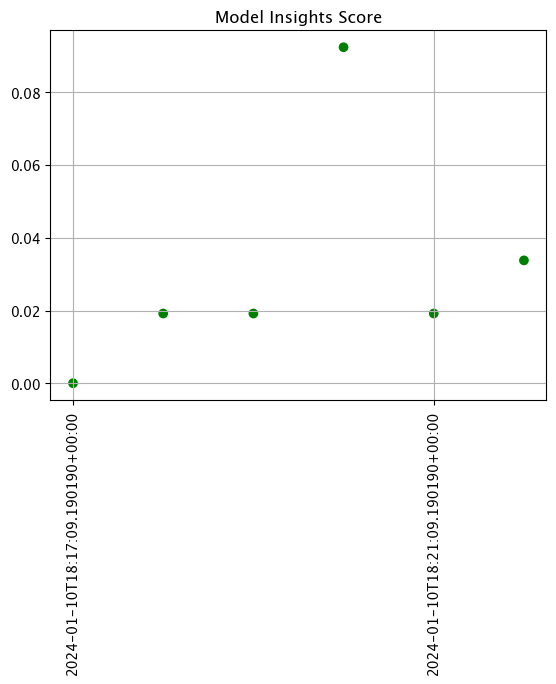

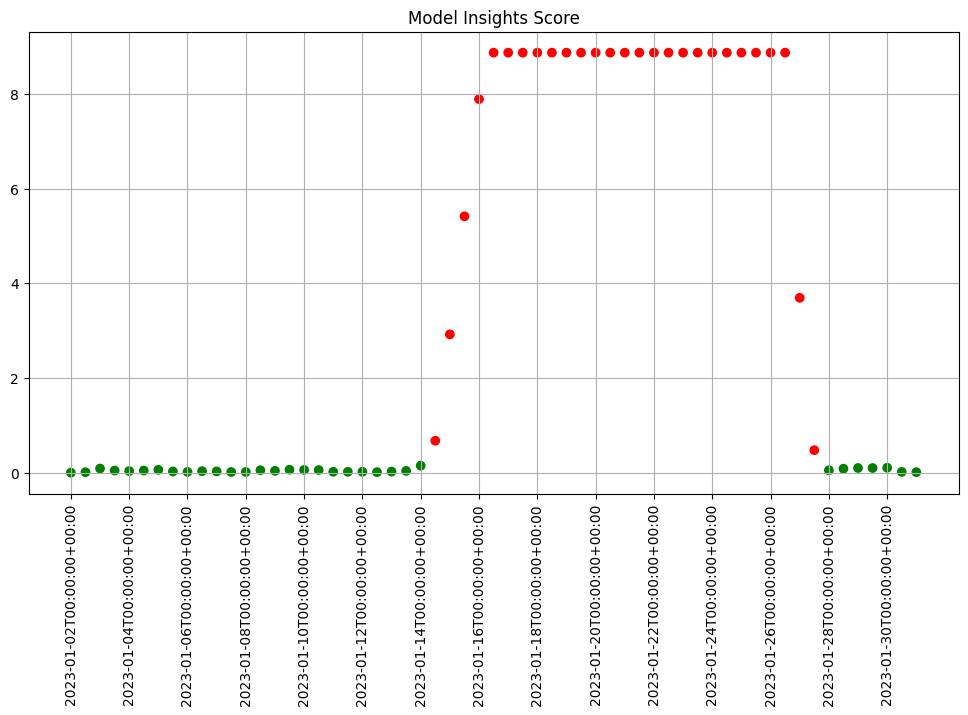

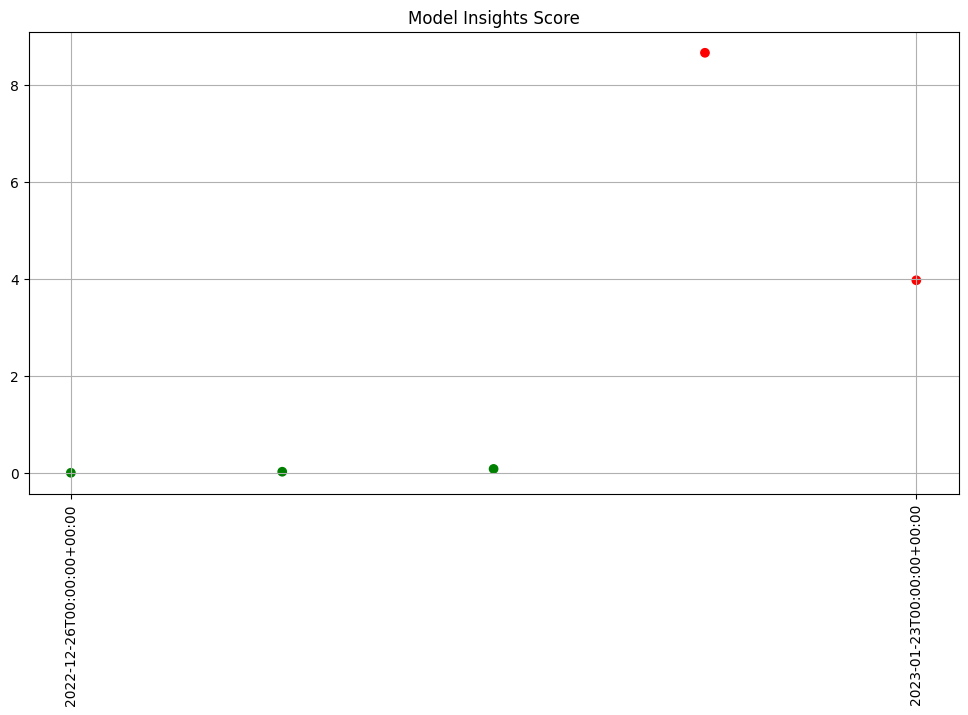

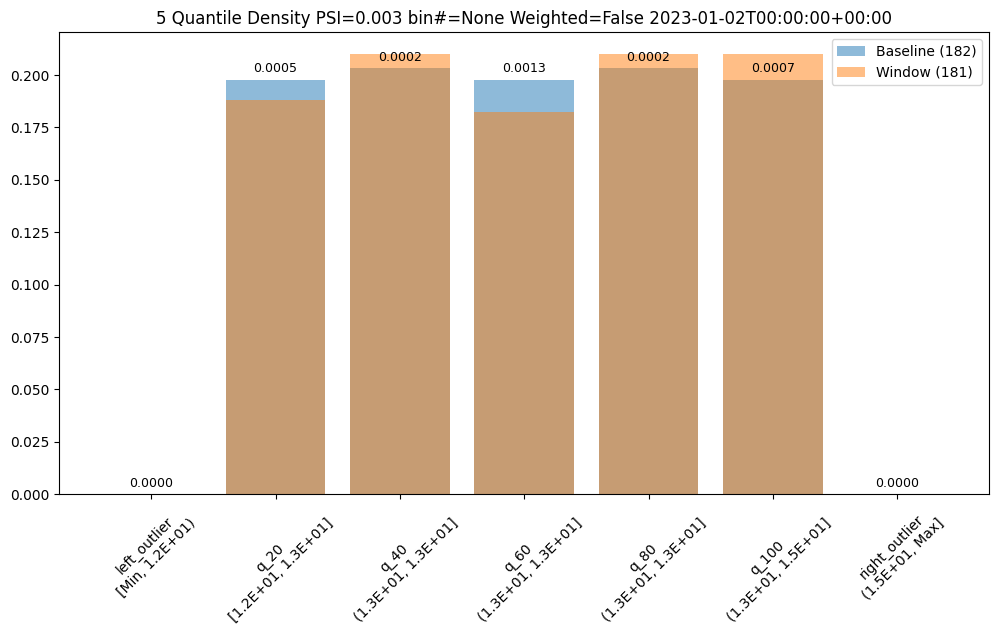

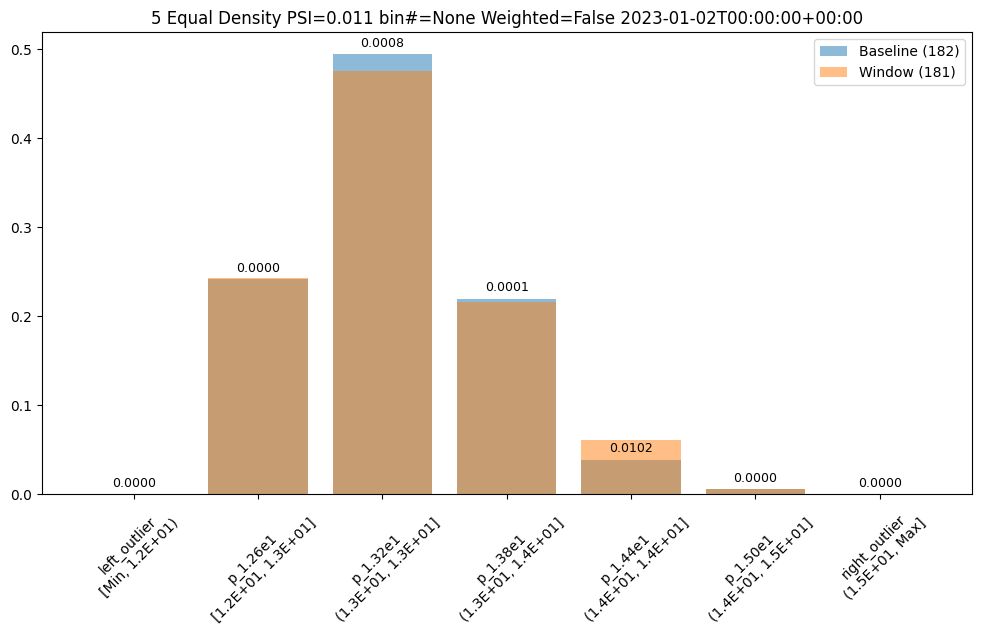

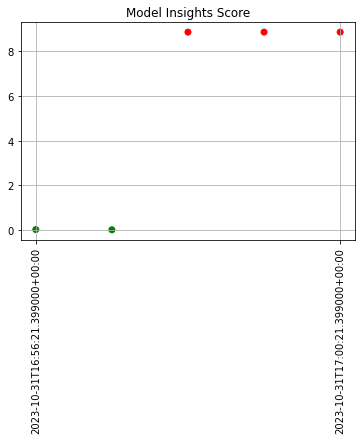

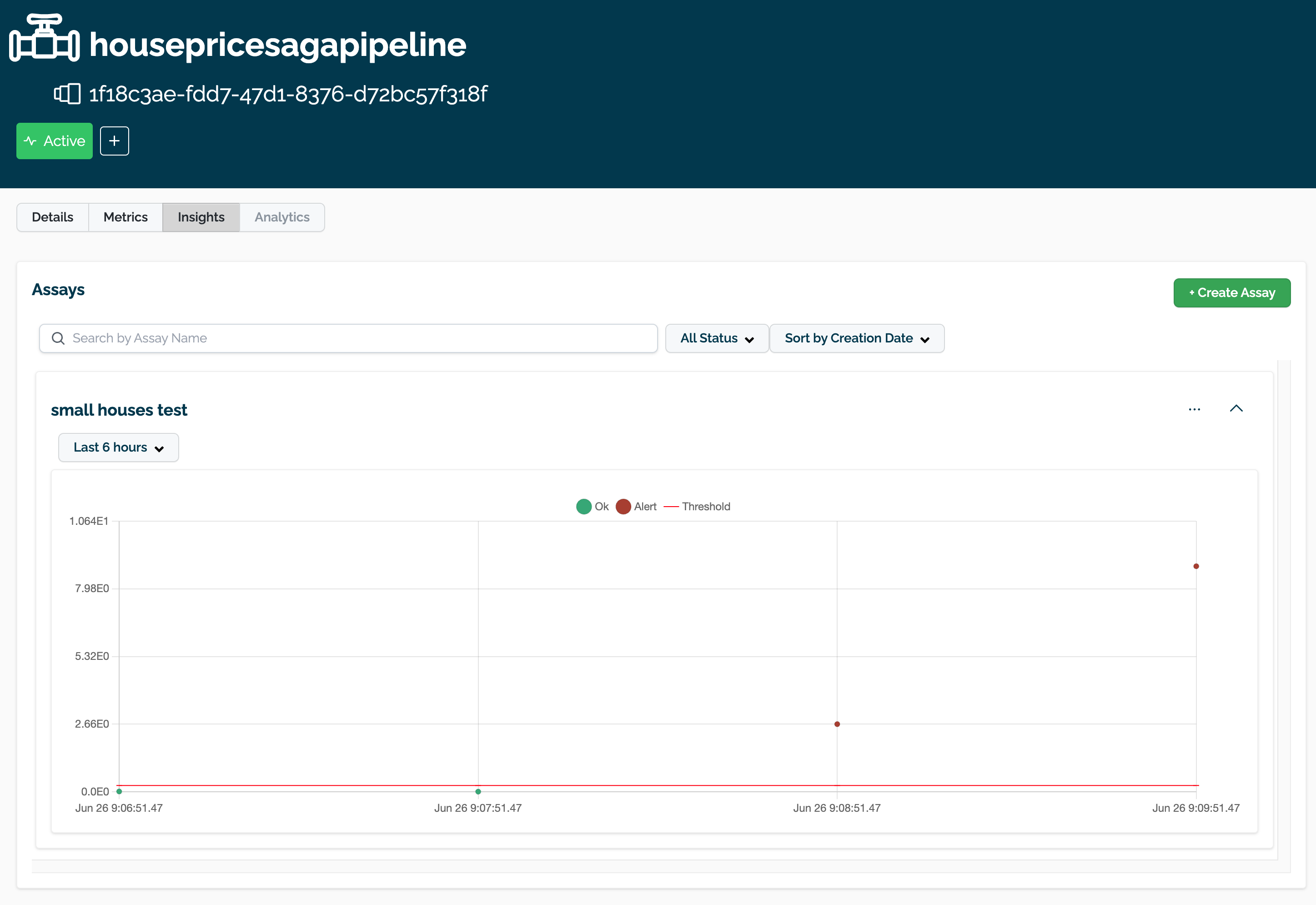

- 3.5: Wallaroo Assays Model Insights Tutorial

- 3.6: Computer Vision Pipeline Logs MLOps API Tutorial

- 3.7: Wallaroo Edge Observability with Classification Financial Models through API

- 3.8: Pipeline Logs MLOps API Tutorial

- 3.9: Pipeline Logs Tutorial

- 3.10: Wallaroo Edge Observability with Wallaroo Assays

- 3.11: House Price Testing Life Cycle

- 4: Model Cookbooks

- 4.1: Aloha Quick Tutorial

- 4.2: Computer Vision Tutorials

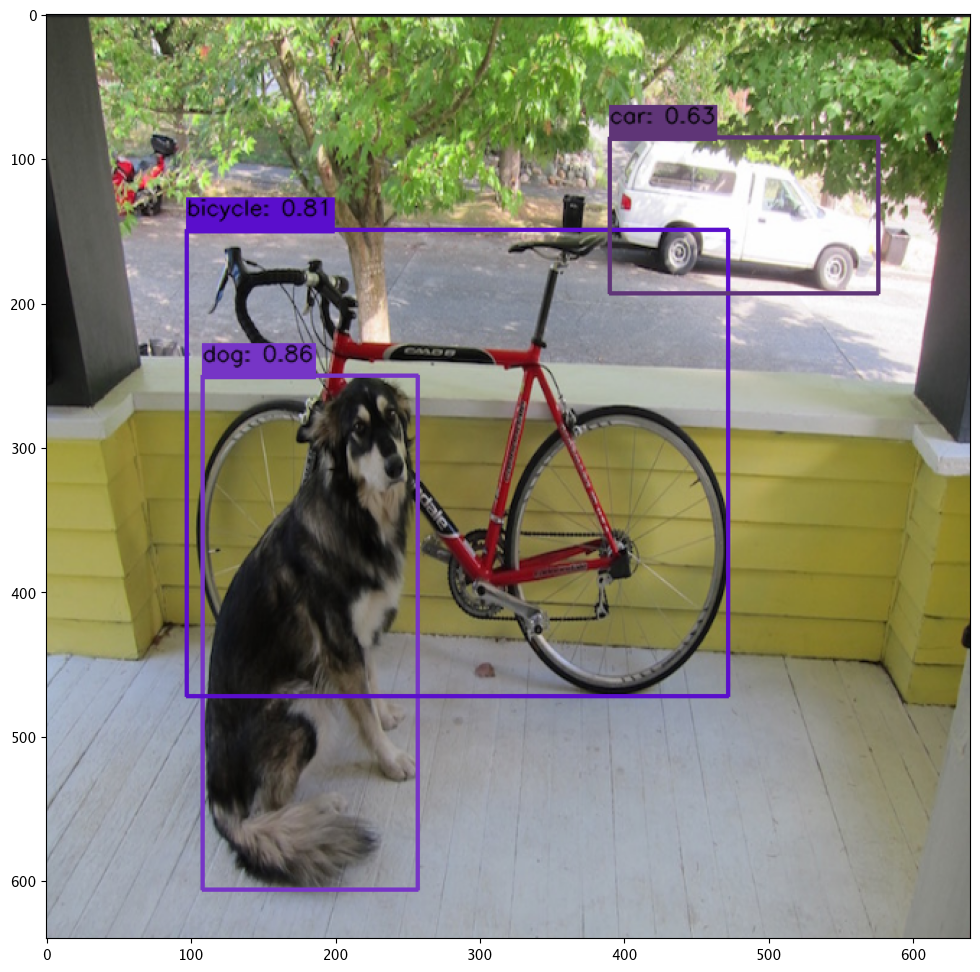

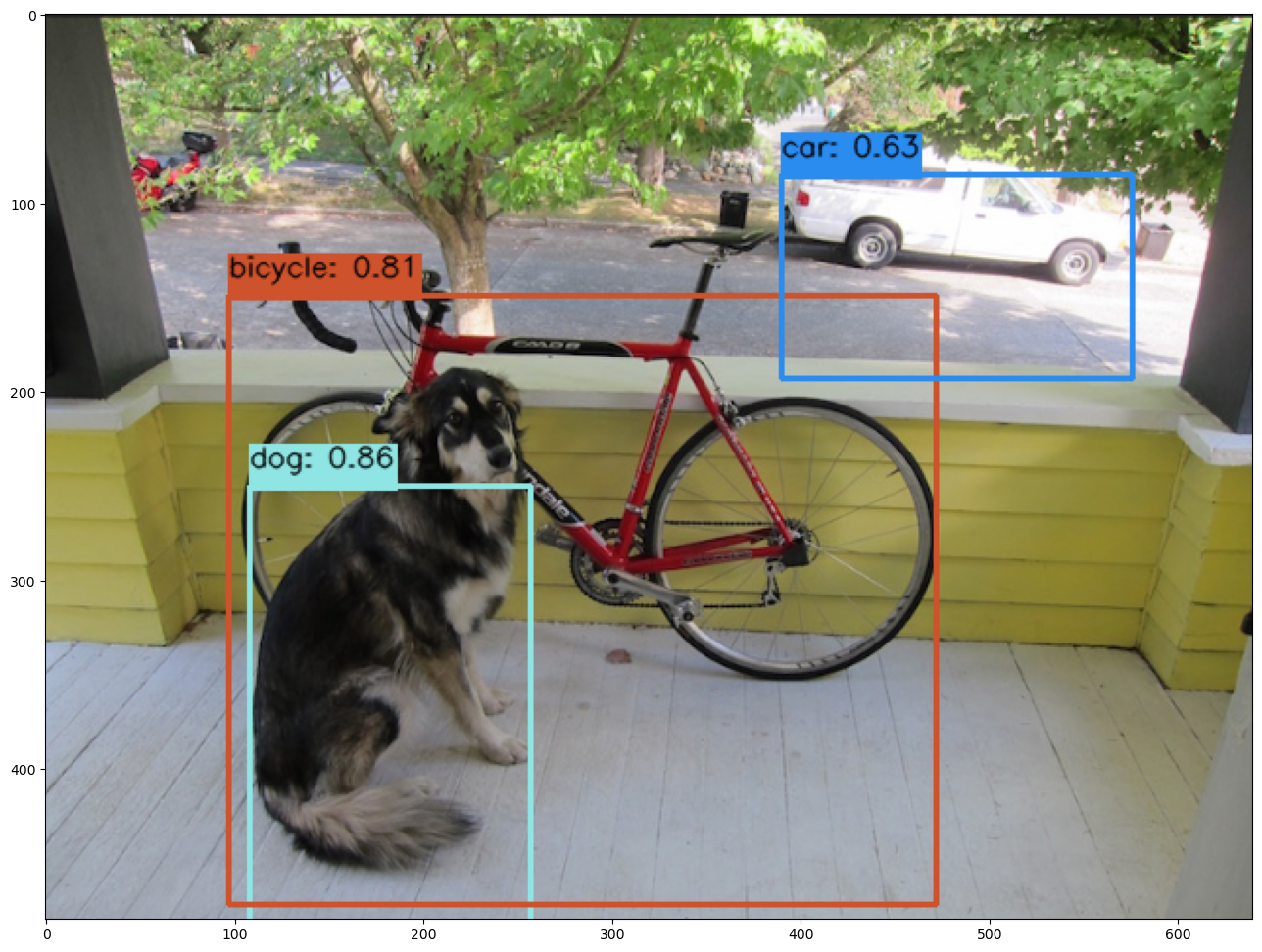

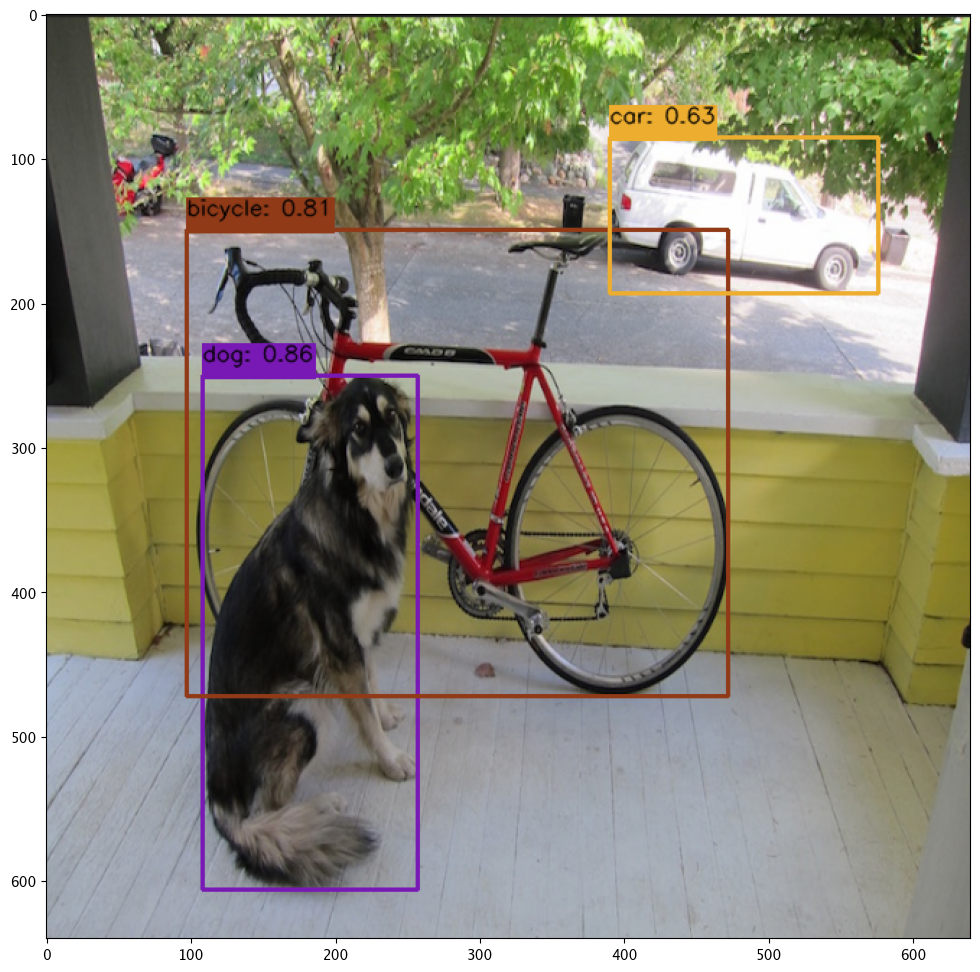

- 4.2.1: Step 01: Detecting Objects Using mobilenet

- 4.2.2: Step 02: Detecting Objects Using resnet50

- 4.2.3: Step 03: mobilenet and resnet50 Shadow Deploy

- 4.3: Computer Vision: Yolov8n Demonstration

- 4.4: Computer Vision: Image Detection for Health Care

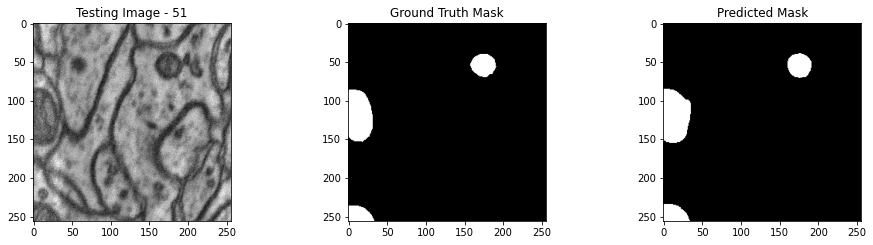

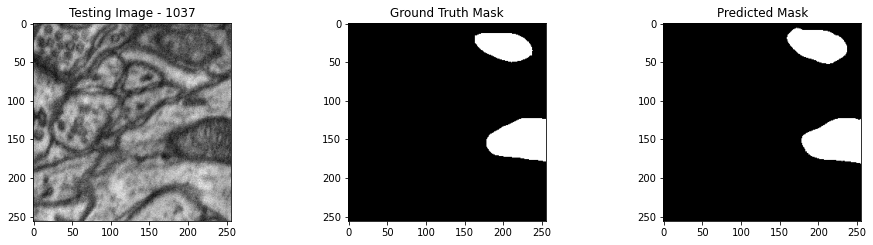

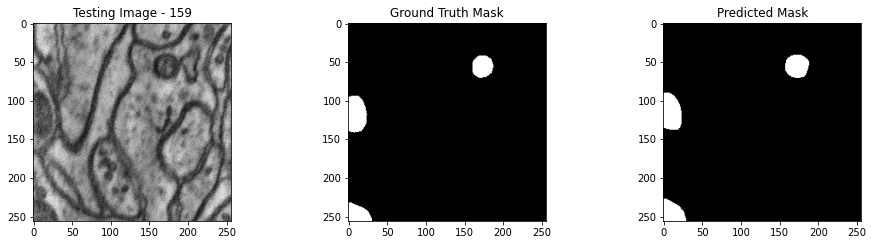

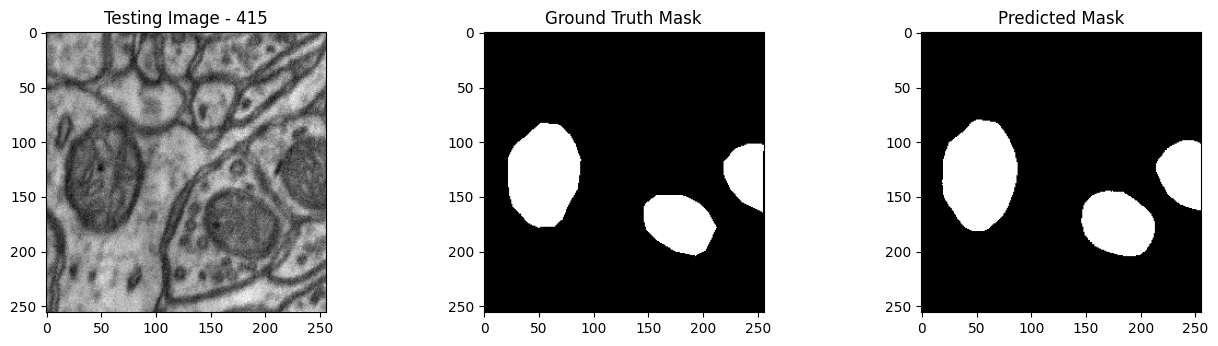

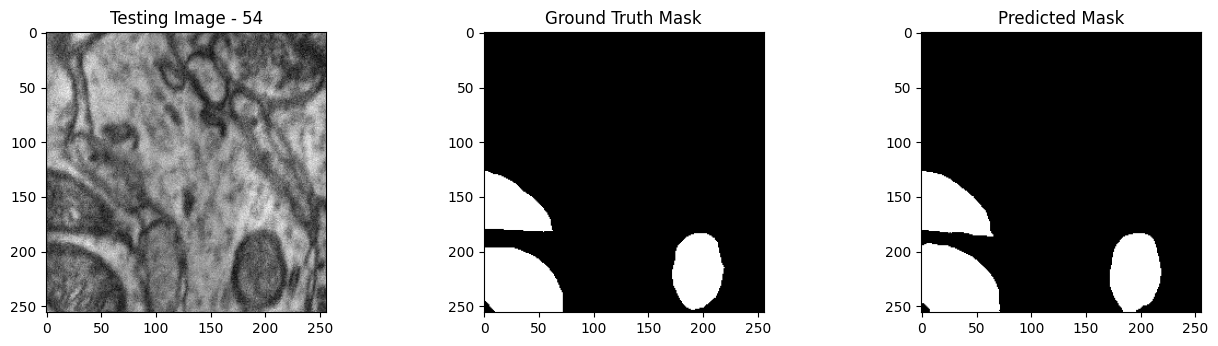

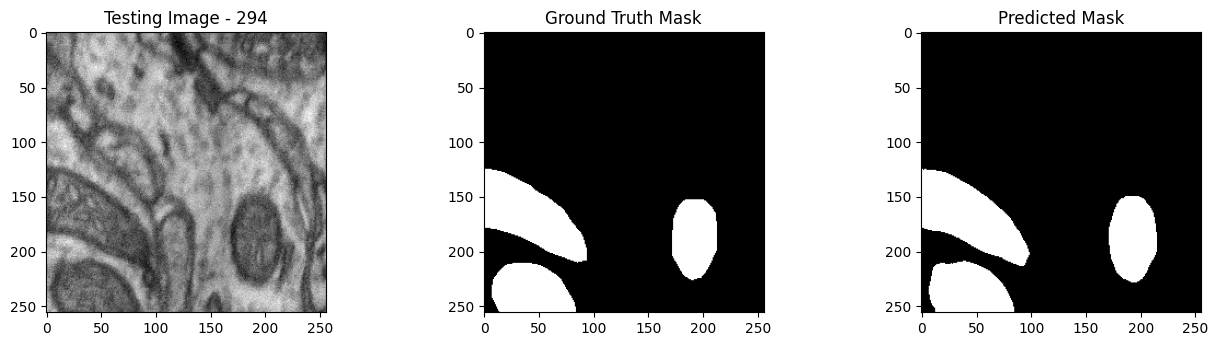

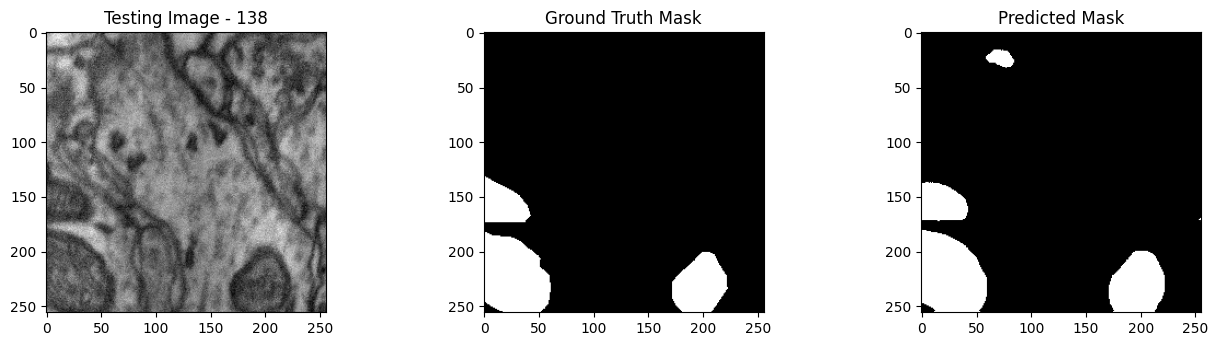

- 4.4.1: Computer Vision: Image Detection for Health Care

- 4.4.2: Computer Vision: Image Detection for Health Care

- 4.5: CLIP ViT-B/32 Transformer Demonstration with Wallaroo

- 4.6: Containerized MLFlow Tutorial

- 4.7: Custom Inference Computer Vision Upload, Auto Packaging, and Deploy Tutorial

- 4.8: Demand Curve Quick Start Guide

- 4.9: IMDB Tutorial

- 4.10: whisper-large-v2 Demonstration with Wallaroo

- 5: Wallaroo Edge Deployment Tutorials

- 5.1: Wallaroo Edge Computer Vision For Health Care Imaging Demonstration: Preparation

- 5.2: Wallaroo Edge Classification Financial Services Deployment via MLOps API Demonstration

- 5.3: Wallaroo Edge Classification Financial Services Deployment Demonstration

- 5.4: Wallaroo Edge Hugging Face LLM Summarization Deployment Demonstration

- 5.5: Wallaroo Edge Computer Vision Deployment Demonstration

- 5.6: Wallaroo Edge Computer Vision Yolov8n Demonstration

- 5.7: Wallaroo Edge Computer Vision Observability

- 5.8: Wallaroo Edge Arbitrary Python Deployment Demonstration

- 5.9: Wallaroo Edge Observability with Classification Financial Models

- 5.10: Wallaroo Edge Observability with Classification Financial Models through API

- 5.11: Wallaroo Edge Observability with Wallaroo Assays

- 5.12: Wallaroo Edge Deployment for U-Net Computer Vision for Brain Segmentation

- 6: Wallaroo Features Tutorials

- 6.1: Hot Swap Models Tutorial

- 6.2: Inference URL Tutorials

- 6.2.1: Wallaroo SDK Inferencing with Pipeline Inference URL Tutorial

- 6.2.2: Wallaroo MLOps API Inferencing with Pipeline Inference URL Tutorial

- 6.3: Large Language Model with GPU Pipeline Deployment in Wallaroo Demonstration

- 6.4: Onnx Deployment with Multi Input-Output Tutorial

- 6.5: Wallaroo Assays Model Insights Tutorial

- 6.6: Computer Vision Pipeline Logs MLOps API Tutorial

- 6.7: Pipeline Logs MLOps API Tutorial

- 6.8: Pipeline Logs Tutorial

- 6.9: Statsmodel Forecast with Wallaroo Features

- 6.9.1: Statsmodel Forecast with Wallaroo Features: Deploy and Test Infer

- 6.9.2: Statsmodel Forecast with Wallaroo Features: Parallel Inference

- 6.9.3: Statsmodel Forecast with Wallaroo Features: Data Connection

- 6.9.4: Statsmodel Forecast with Wallaroo Features: ML Workload Orchestration

- 6.10: Tags Tutorial

- 7: Model Validation and Testing in Wallaroo

- 7.1: A/B Testing Tutorial

- 7.2: Anomaly Testing Tutorial

- 7.3: House Price Testing Life Cycle

- 7.4: Shadow Deployment Tutorial

- 8: Using Jupyter Notebooks in Production

- 8.1: Data Exploration And Model Selection

- 8.2: From Jupyter to Production

- 8.3: Deploy the Model in Wallaroo

- 8.4: Regular Batch Inference

- 9: ML Workload Orchestration Tutorials

- 9.1: Wallaroo ML Workload Orchestration API Tutorial

- 9.2: Wallaroo Connection API with Google BigQuery Tutorial

- 9.3: Wallaroo ML Workload Orchestration Simple Tutorial

- 9.4: Wallaroo ML Workload Orchestration Google BigQuery with House Price Model Tutorial

- 9.5: Wallaroo ML Workload Orchestration Google BigQuery with Statsmodel Forecast Tutorial

- 9.6: Wallaroo ML Workload Orchestration Comprehensive Tutorial

- 10: Wallaroo Pipeline Architecture

- 10.1: ARM BYOP (Bring Your Own Predict) VGG16 Demonstration

- 10.2: ARM Classification Cybersecurity Demonstration

- 10.3: ARM Financial Services Cybersecurity Demonstration

- 10.4: ARM Computer Vision Retail Demonstration

- 10.5: ARM Computer Vision Retail Yolov8 Demonstration

- 10.6: ARM LLM Summarization Demonstration

- 11: Wallaroo Tools Tutorials

1 - Setting Up Wallaroo for Sample Pipelines and Models

Notice

This tutorial and the assets can be downloaded as part of the Wallaroo Tutorials repository.Welcome to Wallaroo! We’re excited to help you get your ML models deployed as quickly as possible. These Quick Start Guides are meant to show simple but thorough steps in creating new pipelines and models. For full guides on using Wallaroo, see the Wallaroo SDK and the Wallaroo Operations Guide.

This first guide is just how to get Wallaroo ready for your first models and pipelines.

Prerequisites

This guide assumes that you’ve installed Wallaroo in a cluster cloud Kubernetes cluster.

Basic Wallaroo Configuration

The following will install the Wallaroo Quick Start samples into your Wallaroo Jupyter Hub environment.

From the Wallaroo Quick Start Samples repository, visit the Releases page. As of the 2023.1 release, each tutorial can be downloaded as a separate .zip file instead of downloading the entire release.

Download the release that matches your version of Wallaroo, Either a

.zipor.tar.gzfile can be downloaded. The example below uses the.zipfile.Access your Jupyter Hub from your Wallaroo environment.

Either drag and drop or use the Upload feature to upload the quick start guide .zip file.

From the launcher, select Terminal.

Unzip the files with

unzip {ZIP FILE NAME}. For example, if the most recent release isWallaroo_Tutorials.zip, the command would be:unzip Wallaroo_Tutorials.zipExit the terminal shell when complete.

The quick start samples along with models and data will be ready for use in the unzipped folder.

2 - Wallaroo ML Model Upload and Registrations

Tutorials on uploading and registering ML models of different frameworks into Wallaroo.

Additional References

2.1 - Model Registry Service with Wallaroo Tutorials

The following tutorials demonstrate how to add ML Models from a model registry service into a Wallaroo instance.

Artifact Requirements

Models are uploaded to the Wallaroo instance as the specific artifact - the “file” or other data that represents the file itself. This must comply with the Wallaroo model requirements framework and version or it will not be deployed. Note that for models that fall outside of the supported model types, they can be registered to a Wallaroo workspace as MLFlow 1.30.0 containerized models.

Supported Models

The following frameworks are supported. Frameworks fall under either Native or Containerized runtimes in the Wallaroo engine. For more details, see the specific framework what runtime a specific model framework runs in.

IMPORTANT NOTE

Verify that the input types match the specified inputs, especially for Containerized Wallaroo Runtimes. For example, if the input is listed as apyarrow.float32(), submitting a pyarrow.float64() may cause an error.| Runtime Display | Model Runtime Space | Pipeline Configuration |

|---|---|---|

tensorflow | Native | Native Runtime Configuration Methods |

onnx | Native | Native Runtime Configuration Methods |

python | Native | Native Runtime Configuration Methods |

mlflow | Containerized | Containerized Runtime Deployment |

flight | Containerized | Containerized Runtime Deployment |

Please note the following.

IMPORTANT NOTICE: FRAMEWORK VERSIONS

The supported frameworks include the specific version of the model framework supported by Wallaroo. It is highly recommended to verify that models uploaded to Wallaroo meet the library and version requirements to ensure proper functioning.Wallaroo ONNX Requirements

Wallaroo natively supports Open Neural Network Exchange (ONNX) models into the Wallaroo engine.

| Parameter | Description |

|---|---|

| Web Site | https://onnx.ai/ |

| Supported Libraries | See table below. |

| Framework | Framework.ONNX aka onnx |

| Runtime | Native aka onnx |

The following ONNX versions models are supported:

| Wallaroo Version | ONNX Version | ONNX IR Version | ONNX OPset Version | ONNX ML Opset Version |

|---|---|---|---|---|

| 2023.4.0 (October 2023) | 1.12.1 | 8 | 17 | 3 |

| 2023.2.1 (July 2023) | 1.12.1 | 8 | 17 | 3 |

For the most recent release of Wallaroo 2023.4.0, the following native runtimes are supported:

- If converting another ML Model to ONNX (PyTorch, XGBoost, etc) using the onnxconverter-common library, the supported

DEFAULT_OPSET_NUMBERis 17.

Using different versions or settings outside of these specifications may result in inference issues and other unexpected behavior.

ONNX models always run in the Wallaroo Native Runtime space.

Data Schemas

ONNX models deployed to Wallaroo have the following data requirements.

- Equal rows constraint: The number of input rows and output rows must match.

- All inputs are tensors: The inputs are tensor arrays with the same shape.

- Data Type Consistency: Data types within each tensor are of the same type.

Equal Rows Constraint

Inference performed through ONNX models are assumed to be in batch format, where each input row corresponds to an output row. This is reflected in the in fields returned for an inference. In the following example, each input row for an inference is related directly to the inference output.

df = pd.read_json('./data/cc_data_1k.df.json')

display(df.head())

result = ccfraud_pipeline.infer(df.head())

display(result)

INPUT

| tensor | |

|---|---|

| 0 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 1 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 2 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 3 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 4 | [0.5817662108, 0.09788155100000001, 0.1546819424, 0.4754101949, -0.19788623060000002, -0.45043448540000003, 0.016654044700000002, -0.0256070551, 0.0920561602, -0.2783917153, 0.059329944100000004, -0.0196585416, -0.4225083157, -0.12175388770000001, 1.5473094894000001, 0.2391622864, 0.3553974881, -0.7685165301, -0.7000849355000001, -0.1190043285, -0.3450517133, -1.1065114108, 0.2523411195, 0.0209441826, 0.2199267436, 0.2540689265, -0.0450225094, 0.10867738980000001, 0.2547179311] |

OUTPUT

| time | in.tensor | out.dense_1 | check_failures | |

|---|---|---|---|---|

| 0 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 1 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 2 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 3 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 4 | 2023-11-17 20:34:17.005 | [0.5817662108, 0.097881551, 0.1546819424, 0.4754101949, -0.1978862306, -0.4504344854, 0.0166540447, -0.0256070551, 0.0920561602, -0.2783917153, 0.0593299441, -0.0196585416, -0.4225083157, -0.1217538877, 1.5473094894, 0.2391622864, 0.3553974881, -0.7685165301, -0.7000849355, -0.1190043285, -0.3450517133, -1.1065114108, 0.2523411195, 0.0209441826, 0.2199267436, 0.2540689265, -0.0450225094, 0.1086773898, 0.2547179311] | [0.0010916889] | 0 |

All Inputs Are Tensors

All inputs into an ONNX model must be tensors. This requires that the shape of each element is the same. For example, the following is a proper input:

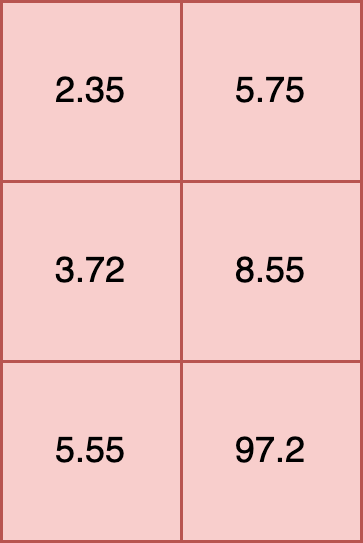

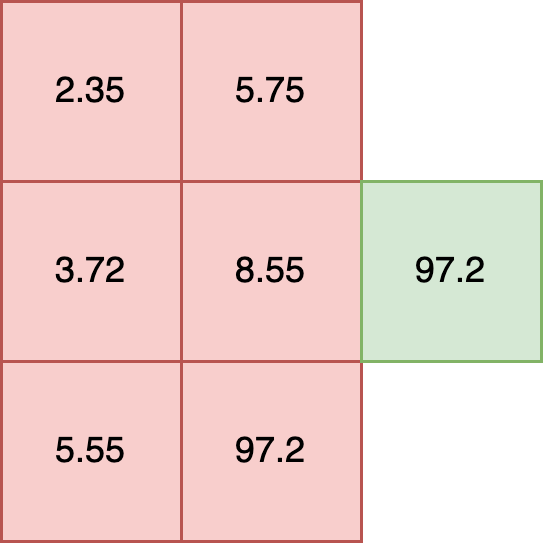

t [

[2.35, 5.75],

[3.72, 8.55],

[5.55, 97.2]

]

Another example is a 2,2,3 tensor, where the shape of each element is (3,), and each element has 2 rows.

t = [

[2.35, 5.75, 19.2],

[3.72, 8.55, 10.5]

],

[

[5.55, 7.2, 15.7],

[9.6, 8.2, 2.3]

]

In this example each element has a shape of (2,). Tensors with elements of different shapes, known as ragged tensors, are not supported. For example:

t = [

[2.35, 5.75],

[3.72, 8.55, 10.5],

[5.55, 97.2]

])

**INVALID SHAPE**

For models that require ragged tensor or other shapes, see other data formatting options such as Bring Your Own Predict models.

Data Type Consistency

All inputs into an ONNX model must have the same internal data type. For example, the following is valid because all of the data types within each element are float32.

t = [

[2.35, 5.75],

[3.72, 8.55],

[5.55, 97.2]

]

The following is invalid, as it mixes floats and strings in each element:

t = [

[2.35, "Bob"],

[3.72, "Nancy"],

[5.55, "Wani"]

]

The following inputs are valid, as each data type is consistent within the elements.

df = pd.DataFrame({

"t": [

[2.35, 5.75, 19.2],

[5.55, 7.2, 15.7],

],

"s": [

["Bob", "Nancy", "Wani"],

["Jason", "Rita", "Phoebe"]

]

})

df

| t | s | |

|---|---|---|

| 0 | [2.35, 5.75, 19.2] | [Bob, Nancy, Wani] |

| 1 | [5.55, 7.2, 15.7] | [Jason, Rita, Phoebe] |

| Parameter | Description |

|---|---|

| Web Site | https://www.tensorflow.org/ |

| Supported Libraries | tensorflow==2.9.3 |

| Framework | Framework.TENSORFLOW aka tensorflow |

| Runtime | Native aka tensorflow |

| Supported File Types | SavedModel format as .zip file |

IMPORTANT NOTE

These requirements are <strong>not</strong> for Tensorflow Keras models, only for non-Keras Tensorflow models in the SavedModel format. For Tensorflow Keras deployment in Wallaroo, see the Tensorflow Keras requirements.

TensorFlow File Format

TensorFlow models are .zip file of the SavedModel format. For example, the Aloha sample TensorFlow model is stored in the directory alohacnnlstm:

├── saved_model.pb

└── variables

├── variables.data-00000-of-00002

├── variables.data-00001-of-00002

└── variables.index

This is compressed into the .zip file alohacnnlstm.zip with the following command:

zip -r alohacnnlstm.zip alohacnnlstm/

ML models that meet the Tensorflow and SavedModel format will run as Wallaroo Native runtimes by default.

See the SavedModel guide for full details.

| Parameter | Description |

|---|---|

| Web Site | https://www.python.org/ |

| Supported Libraries | python==3.8 |

| Framework | Framework.PYTHON aka python |

| Runtime | Native aka python |

Python models uploaded to Wallaroo are executed as a native runtime.

Note that Python models - aka “Python steps” - are standalone python scripts that use the python libraries natively supported by the Wallaroo platform. These are used for either simple model deployment (such as ARIMA Statsmodels), or data formatting such as the postprocessing steps. A Wallaroo Python model will be composed of one Python script that matches the Wallaroo requirements.

This is contrasted with Arbitrary Python models, also known as Bring Your Own Predict (BYOP) allow for custom model deployments with supporting scripts and artifacts. These are used with pre-trained models (PyTorch, Tensorflow, etc) along with whatever supporting artifacts they require. Supporting artifacts can include other Python modules, model files, etc. These are zipped with all scripts, artifacts, and a requirements.txt file that indicates what other Python models need to be imported that are outside of the typical Wallaroo platform.

Python Models Requirements

Python models uploaded to Wallaroo are Python scripts that must include the wallaroo_json method as the entry point for the Wallaroo engine to use it as a Pipeline step.

This method receives the results of the previous Pipeline step, and its return value will be used in the next Pipeline step.

If the Python model is the first step in the pipeline, then it will be receiving the inference request data (for example: a preprocessing step). If it is the last step in the pipeline, then it will be the data returned from the inference request.

In the example below, the Python model is used as a post processing step for another ML model. The Python model expects to receive data from a ML Model who’s output is a DataFrame with the column dense_2. It then extracts the values of that column as a list, selects the first element, and returns a DataFrame with that element as the value of the column output.

def wallaroo_json(data: pd.DataFrame):

print(data)

return [{"output": [data["dense_2"].to_list()[0][0]]}]

In line with other Wallaroo inference results, the outputs of a Python step that returns a pandas DataFrame or Arrow Table will be listed in the out. metadata, with all inference outputs listed as out.{variable 1}, out.{variable 2}, etc. In the example above, this results the output field as the out.output field in the Wallaroo inference result.

| time | in.tensor | out.output | check_failures | |

|---|---|---|---|---|

| 0 | 2023-06-20 20:23:28.395 | [0.6878518042, 0.1760734021, -0.869514083, 0.3.. | [12.886651039123535] | 0 |

| Parameter | Description |

|---|---|

| Web Site | https://huggingface.co/models |

| Supported Libraries |

|

| Frameworks | The following Hugging Face pipelines are supported by Wallaroo.

|

| Runtime | Containerized flight |

During the model upload process, the Wallaroo instance will attempt to convert the model to a Native Wallaroo Runtime. If unsuccessful based , it will create a Wallaroo Containerized Runtime for the model. See the model deployment section for details on how to configure pipeline resources based on the model’s runtime.

Hugging Face Schemas

Input and output schemas for each Hugging Face pipeline are defined below. Note that adding additional inputs not specified below will raise errors, except for the following:

Framework.HUGGING_FACE_IMAGE_TO_TEXTFramework.HUGGING_FACE_TEXT_CLASSIFICATIONFramework.HUGGING_FACE_SUMMARIZATIONFramework.HUGGING_FACE_TRANSLATION

Additional inputs added to these Hugging Face pipelines will be added as key/pair value arguments to the model’s generate method. If the argument is not required, then the model will default to the values coded in the original Hugging Face model’s source code.

See the Hugging Face Pipeline documentation for more details on each pipeline and framework.

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_FEATURE_EXTRACTION |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string())

])

output_schema = pa.schema([

pa.field('output', pa.list_(

pa.list_(

pa.float64(),

list_size=128

),

))

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_IMAGE_CLASSIFICATION |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('top_k', pa.int64()),

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64(), list_size=2)),

pa.field('label', pa.list_(pa.string(), list_size=2)),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_IMAGE_SEGMENTATION |

Schemas:

input_schema = pa.schema([

pa.field('inputs',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('threshold', pa.float64()),

pa.field('mask_threshold', pa.float64()),

pa.field('overlap_mask_area_threshold', pa.float64()),

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64())),

pa.field('label', pa.list_(pa.string())),

pa.field('mask',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=100

),

list_size=100

),

)),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_IMAGE_TO_TEXT |

Any parameter that is not part of the required inputs list will be forwarded to the model as a key/pair value to the underlying models generate method. If the additional input is not supported by the model, an error will be returned.

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.list_( #required

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

# pa.field('max_new_tokens', pa.int64()), # optional

])

output_schema = pa.schema([

pa.field('generated_text', pa.list_(pa.string())),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_OBJECT_DETECTION |

Schemas:

input_schema = pa.schema([

pa.field('inputs',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('threshold', pa.float64()),

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64())),

pa.field('label', pa.list_(pa.string())),

pa.field('box',

pa.list_( # dynamic output, i.e. dynamic number of boxes per input image, each sublist contains the 4 box coordinates

pa.list_(

pa.int64(),

list_size=4

),

),

),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_QUESTION_ANSWERING |

Schemas:

input_schema = pa.schema([

pa.field('question', pa.string()),

pa.field('context', pa.string()),

pa.field('top_k', pa.int64()),

pa.field('doc_stride', pa.int64()),

pa.field('max_answer_len', pa.int64()),

pa.field('max_seq_len', pa.int64()),

pa.field('max_question_len', pa.int64()),

pa.field('handle_impossible_answer', pa.bool_()),

pa.field('align_to_words', pa.bool_()),

])

output_schema = pa.schema([

pa.field('score', pa.float64()),

pa.field('start', pa.int64()),

pa.field('end', pa.int64()),

pa.field('answer', pa.string()),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_STABLE_DIFFUSION_TEXT_2_IMG |

Schemas:

input_schema = pa.schema([

pa.field('prompt', pa.string()),

pa.field('height', pa.int64()),

pa.field('width', pa.int64()),

pa.field('num_inference_steps', pa.int64()), # optional

pa.field('guidance_scale', pa.float64()), # optional

pa.field('negative_prompt', pa.string()), # optional

pa.field('num_images_per_prompt', pa.string()), # optional

pa.field('eta', pa.float64()) # optional

])

output_schema = pa.schema([

pa.field('images', pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=128

),

list_size=128

)),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_SUMMARIZATION |

Any parameter that is not part of the required inputs list will be forwarded to the model as a key/pair value to the underlying models generate method. If the additional input is not supported by the model, an error will be returned.

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()),

pa.field('return_text', pa.bool_()),

pa.field('return_tensors', pa.bool_()),

pa.field('clean_up_tokenization_spaces', pa.bool_()),

# pa.field('extra_field', pa.int64()), # every extra field you specify will be forwarded as a key/value pair

])

output_schema = pa.schema([

pa.field('summary_text', pa.string()),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_TEXT_CLASSIFICATION |

Schemas

input_schema = pa.schema([

pa.field('inputs', pa.string()), # required

pa.field('top_k', pa.int64()), # optional

pa.field('function_to_apply', pa.string()), # optional

])

output_schema = pa.schema([

pa.field('label', pa.list_(pa.string(), list_size=2)), # list with a number of items same as top_k, list_size can be skipped but may lead in worse performance

pa.field('score', pa.list_(pa.float64(), list_size=2)), # list with a number of items same as top_k, list_size can be skipped but may lead in worse performance

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_TRANSLATION |

Any parameter that is not part of the required inputs list will be forwarded to the model as a key/pair value to the underlying models generate method. If the additional input is not supported by the model, an error will be returned.

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()), # required

pa.field('return_tensors', pa.bool_()), # optional

pa.field('return_text', pa.bool_()), # optional

pa.field('clean_up_tokenization_spaces', pa.bool_()), # optional

pa.field('src_lang', pa.string()), # optional

pa.field('tgt_lang', pa.string()), # optional

# pa.field('extra_field', pa.int64()), # every extra field you specify will be forwarded as a key/value pair

])

output_schema = pa.schema([

pa.field('translation_text', pa.string()),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_ZERO_SHOT_CLASSIFICATION |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()), # required

pa.field('candidate_labels', pa.list_(pa.string(), list_size=2)), # required

pa.field('hypothesis_template', pa.string()), # optional

pa.field('multi_label', pa.bool_()), # optional

])

output_schema = pa.schema([

pa.field('sequence', pa.string()),

pa.field('scores', pa.list_(pa.float64(), list_size=2)), # same as number of candidate labels, list_size can be skipped by may result in slightly worse performance

pa.field('labels', pa.list_(pa.string(), list_size=2)), # same as number of candidate labels, list_size can be skipped by may result in slightly worse performance

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_ZERO_SHOT_IMAGE_CLASSIFICATION |

Schemas:

input_schema = pa.schema([

pa.field('inputs', # required

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('candidate_labels', pa.list_(pa.string(), list_size=2)), # required

pa.field('hypothesis_template', pa.string()), # optional

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64(), list_size=2)), # same as number of candidate labels

pa.field('label', pa.list_(pa.string(), list_size=2)), # same as number of candidate labels

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_ZERO_SHOT_OBJECT_DETECTION |

Schemas:

input_schema = pa.schema([

pa.field('images',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=640

),

list_size=480

)),

pa.field('candidate_labels', pa.list_(pa.string(), list_size=3)),

pa.field('threshold', pa.float64()),

# pa.field('top_k', pa.int64()), # we want the model to return exactly the number of predictions, we shouldn't specify this

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64())), # variable output, depending on detected objects

pa.field('label', pa.list_(pa.string())), # variable output, depending on detected objects

pa.field('box',

pa.list_( # dynamic output, i.e. dynamic number of boxes per input image, each sublist contains the 4 box coordinates

pa.list_(

pa.int64(),

list_size=4

),

),

),

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_SENTIMENT_ANALYSIS | Hugging Face Sentiment Analysis |

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_TEXT_GENERATION |

Any parameter that is not part of the required inputs list will be forwarded to the model as a key/pair value to the underlying models generate method. If the additional input is not supported by the model, an error will be returned.

input_schema = pa.schema([

pa.field('inputs', pa.string()),

pa.field('return_tensors', pa.bool_()), # optional

pa.field('return_text', pa.bool_()), # optional

pa.field('return_full_text', pa.bool_()), # optional

pa.field('clean_up_tokenization_spaces', pa.bool_()), # optional

pa.field('prefix', pa.string()), # optional

pa.field('handle_long_generation', pa.string()), # optional

# pa.field('extra_field', pa.int64()), # every extra field you specify will be forwarded as a key/value pair

])

output_schema = pa.schema([

pa.field('generated_text', pa.list_(pa.string(), list_size=1))

])

| Wallaroo Framework | Reference |

|---|---|

Framework.HUGGING_FACE_AUTOMATIC_SPEECH_RECOGNITION |

Sample input and output schema.

input_schema = pa.schema([

pa.field('inputs', pa.list_(pa.float32())), # required: the audio stored in numpy arrays of shape (num_samples,) and data type `float32`

pa.field('return_timestamps', pa.string()) # optional: return start & end times for each predicted chunk

])

output_schema = pa.schema([

pa.field('text', pa.string()), # required: the output text corresponding to the audio input

pa.field('chunks', pa.list_(pa.struct([('text', pa.string()), ('timestamp', pa.list_(pa.float32()))]))), # required (if `return_timestamps` is set), start & end times for each predicted chunk

])

| Parameter | Description |

|---|---|

| Web Site | https://pytorch.org/ |

| Supported Libraries |

|

| Framework | Framework.PYTORCH aka pytorch |

| Supported File Types | pt ot pth in TorchScript format |

| Runtime | onnx/flight |

- IMPORTANT NOTE: The PyTorch model must be in TorchScript format. scripting (i.e.

torch.jit.script()is always recommended over tracing (i.e.torch.jit.trace()). From the PyTorch documentation: “Scripting preserves dynamic control flow and is valid for inputs of different sizes.” For more details, see TorchScript-based ONNX Exporter: Tracing vs Scripting.

During the model upload process, the Wallaroo instance will attempt to convert the model to a Native Wallaroo Runtime. If unsuccessful based , it will create a Wallaroo Containerized Runtime for the model. See the model deployment section for details on how to configure pipeline resources based on the model’s runtime.

- IMPORTANT CONFIGURATION NOTE: For PyTorch input schemas, the floats must be

pyarrow.float32()for the PyTorch model to be converted to the Native Wallaroo Runtime during the upload process.

Sci-kit Learn aka SKLearn.

| Parameter | Description |

|---|---|

| Web Site | https://scikit-learn.org/stable/index.html |

| Supported Libraries |

|

| Framework | Framework.SKLEARN aka sklearn |

| Runtime | onnx / flight |

During the model upload process, the Wallaroo instance will attempt to convert the model to a Native Wallaroo Runtime. If unsuccessful based , it will create a Wallaroo Containerized Runtime for the model. See the model deployment section for details on how to configure pipeline resources based on the model’s runtime.

SKLearn Schema Inputs

SKLearn schema follows a different format than other models. To prevent inputs from being out of order, the inputs should be submitted in a single row in the order the model is trained to accept, with all of the data types being the same. For example, the following DataFrame has 4 columns, each column a float.

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

For submission to an SKLearn model, the data input schema will be a single array with 4 float values.

input_schema = pa.schema([

pa.field('inputs', pa.list_(pa.float64(), list_size=4))

])

When submitting as an inference, the DataFrame is converted to rows with the column data expressed as a single array. The data must be in the same order as the model expects, which is why the data is submitted as a single array rather than JSON labeled columns: this insures that the data is submitted in the exact order as the model is trained to accept.

Original DataFrame:

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

Converted DataFrame:

| inputs | |

|---|---|

| 0 | [5.1, 3.5, 1.4, 0.2] |

| 1 | [4.9, 3.0, 1.4, 0.2] |

SKLearn Schema Outputs

Outputs for SKLearn that are meant to be predictions or probabilities when output by the model are labeled in the output schema for the model when uploaded to Wallaroo. For example, a model that outputs either 1 or 0 as its output would have the output schema as follows:

output_schema = pa.schema([

pa.field('predictions', pa.int32())

])

When used in Wallaroo, the inference result is contained in the out metadata as out.predictions.

pipeline.infer(dataframe)

| time | in.inputs | out.predictions | check_failures | |

|---|---|---|---|---|

| 0 | 2023-07-05 15:11:29.776 | [5.1, 3.5, 1.4, 0.2] | 0 | 0 |

| 1 | 2023-07-05 15:11:29.776 | [4.9, 3.0, 1.4, 0.2] | 0 | 0 |

| Parameter | Description |

|---|---|

| Web Site | https://www.tensorflow.org/api_docs/python/tf/keras/Model |

| Supported Libraries |

|

| Framework | Framework.KERAS aka keras |

| Supported File Types | SavedModel format as .zip file and HDF5 format |

| Runtime | onnx/flight |

During the model upload process, the Wallaroo instance will attempt to convert the model to a Native Wallaroo Runtime. If unsuccessful based , it will create a Wallaroo Containerized Runtime for the model. See the model deployment section for details on how to configure pipeline resources based on the model’s runtime.

TensorFlow Keras SavedModel Format

TensorFlow Keras SavedModel models are .zip file of the SavedModel format. For example, the Aloha sample TensorFlow model is stored in the directory alohacnnlstm:

├── saved_model.pb

└── variables

├── variables.data-00000-of-00002

├── variables.data-00001-of-00002

└── variables.index

This is compressed into the .zip file alohacnnlstm.zip with the following command:

zip -r alohacnnlstm.zip alohacnnlstm/

See the SavedModel guide for full details.

TensorFlow Keras H5 Format

Wallaroo supports the H5 for Tensorflow Keras models.

| Parameter | Description |

|---|---|

| Web Site | https://xgboost.ai/ |

| Supported Libraries |

|

| Framework | Framework.XGBOOST aka xgboost |

| Supported File Types | pickle (XGB files are not supported.) |

| Runtime | onnx / flight |

During the model upload process, the Wallaroo instance will attempt to convert the model to a Native Wallaroo Runtime. If unsuccessful based , it will create a Wallaroo Containerized Runtime for the model. See the model deployment section for details on how to configure pipeline resources based on the model’s runtime.

XGBoost Schema Inputs

XGBoost schema follows a different format than other models. To prevent inputs from being out of order, the inputs should be submitted in a single row in the order the model is trained to accept, with all of the data types being the same. If a model is originally trained to accept inputs of different data types, it will need to be retrained to only accept one data type for each column - typically pa.float64() is a good choice.

For example, the following DataFrame has 4 columns, each column a float.

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

For submission to an XGBoost model, the data input schema will be a single array with 4 float values.

input_schema = pa.schema([

pa.field('inputs', pa.list_(pa.float64(), list_size=4))

])

When submitting as an inference, the DataFrame is converted to rows with the column data expressed as a single array. The data must be in the same order as the model expects, which is why the data is submitted as a single array rather than JSON labeled columns: this insures that the data is submitted in the exact order as the model is trained to accept.

Original DataFrame:

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

Converted DataFrame:

| inputs | |

|---|---|

| 0 | [5.1, 3.5, 1.4, 0.2] |

| 1 | [4.9, 3.0, 1.4, 0.2] |

XGBoost Schema Outputs

Outputs for XGBoost are labeled based on the trained model outputs. For this example, the output is simply a single output listed as output. In the Wallaroo inference result, it is grouped with the metadata out as out.output.

output_schema = pa.schema([

pa.field('output', pa.int32())

])

pipeline.infer(dataframe)

| time | in.inputs | out.output | check_failures | |

|---|---|---|---|---|

| 0 | 2023-07-05 15:11:29.776 | [5.1, 3.5, 1.4, 0.2] | 0 | 0 |

| 1 | 2023-07-05 15:11:29.776 | [4.9, 3.0, 1.4, 0.2] | 0 | 0 |

| Parameter | Description |

|---|---|

| Web Site | https://www.python.org/ |

| Supported Libraries | python==3.8 |

| Framework | Framework.CUSTOM aka custom |

| Runtime | Containerized aka flight |

Arbitrary Python models, also known as Bring Your Own Predict (BYOP) allow for custom model deployments with supporting scripts and artifacts. These are used with pre-trained models (PyTorch, Tensorflow, etc) along with whatever supporting artifacts they require. Supporting artifacts can include other Python modules, model files, etc. These are zipped with all scripts, artifacts, and a requirements.txt file that indicates what other Python models need to be imported that are outside of the typical Wallaroo platform.

Contrast this with Wallaroo Python models - aka “Python steps”. These are standalone python scripts that use the python libraries natively supported by the Wallaroo platform. These are used for either simple model deployment (such as ARIMA Statsmodels), or data formatting such as the postprocessing steps. A Wallaroo Python model will be composed of one Python script that matches the Wallaroo requirements.

Arbitrary Python File Requirements

Arbitrary Python (BYOP) models are uploaded to Wallaroo via a ZIP file with the following components:

| Artifact | Type | Description |

|---|---|---|

Python scripts aka .py files with classes that extend mac.inference.Inference and mac.inference.creation.InferenceBuilder | Python Script | Extend the classes mac.inference.Inference and mac.inference.creation.InferenceBuilder. These are included with the Wallaroo SDK. Further details are in Arbitrary Python Script Requirements. Note that there is no specified naming requirements for the classes that extend mac.inference.Inference and mac.inference.creation.InferenceBuilder - any qualified class name is sufficient as long as these two classes are extended as defined below. |

requirements.txt | Python requirements file | This sets the Python libraries used for the arbitrary python model. These libraries should be targeted for Python 3.8 compliance. These requirements and the versions of libraries should be exactly the same between creating the model and deploying it in Wallaroo. This insures that the script and methods will function exactly the same as during the model creation process. |

| Other artifacts | Files | Other models, files, and other artifacts used in support of this model. |

For example, the if the arbitrary python model will be known as vgg_clustering, the contents may be in the following structure, with vgg_clustering as the storage directory:

vgg_clustering\

feature_extractor.h5

kmeans.pkl

custom_inference.py

requirements.txt

Note the inclusion of the custom_inference.py file. This file name is not required - any Python script or scripts that extend the classes listed above are sufficient. This Python script could have been named vgg_custom_model.py or any other name as long as it includes the extension of the classes listed above.

The sample arbitrary python model file is created with the command zip -r vgg_clustering.zip vgg_clustering/.

Wallaroo Arbitrary Python uses the Wallaroo SDK mac module, included in the Wallaroo SDK 2023.2.1 and above. See the Wallaroo SDK Install Guides for instructions on installing the Wallaroo SDK.

Arbitrary Python Script Requirements

The entry point of the arbitrary python model is any python script that extends the following classes. These are included with the Wallaroo SDK. The required methods that must be overridden are specified in each section below.

mac.inference.Inferenceinterface serves model inferences based on submitted input some input. Its purpose is to serve inferences for any supported arbitrary model framework (e.g.scikit,kerasetc.).classDiagram class Inference { <<Abstract>> +model Optional[Any] +expected_model_types()* Set +predict(input_data: InferenceData)* InferenceData -raise_error_if_model_is_not_assigned() None -raise_error_if_model_is_wrong_type() None }mac.inference.creation.InferenceBuilderbuilds a concreteInference, i.e. instantiates anInferenceobject, loads the appropriate model and assigns the model to to the Inference object.classDiagram class InferenceBuilder { +create(config InferenceConfig) * Inference -inference()* Any }

mac.inference.Inference

mac.inference.Inference Objects

| Object | Type | Description |

|---|---|---|

model (Required) | [Any] | One or more objects that match the expected_model_types. This can be a ML Model (for inference use), a string (for data conversion), etc. See Arbitrary Python Examples for examples. |

mac.inference.Inference Methods

| Method | Returns | Description |

|---|---|---|

expected_model_types (Required) | Set | Returns a Set of models expected for the inference as defined by the developer. Typically this is a set of one. Wallaroo checks the expected model types to verify that the model submitted through the InferenceBuilder method matches what this Inference class expects. |

_predict (input_data: mac.types.InferenceData) (Required) | mac.types.InferenceData | The entry point for the Wallaroo inference with the following input and output parameters that are defined when the model is updated.

InferenceDataValidationError exception is raised when the input data does not match mac.types.InferenceData. |

raise_error_if_model_is_not_assigned | N/A | Error when a model is not set to Inference. |

raise_error_if_model_is_wrong_type | N/A | Error when the model does not match the expected_model_types. |

IMPORTANT NOTE

Verify that the inputs and outputs match the InferenceData input and output types: a Dictionary of numpy arrays defined by the input_schema and output_schema parameters when uploading the model to the Wallaroo instance. The following code is an example of a Dictionary of numpy arrays.

preds = self.model.predict(data)

preds = preds.numpy()

rows, _ = preds.shape

preds = preds.reshape((rows,))

return {"prediction": preds} # a Dictionary of numpy arrays.

The example, the expected_model_types can be defined for the KMeans model.

from sklearn.cluster import KMeans

class SampleClass(mac.inference.Inference):

@property

def expected_model_types(self) -> Set[Any]:

return {KMeans}

mac.inference.creation.InferenceBuilder

InferenceBuilder builds a concrete Inference, i.e. instantiates an Inference object, loads the appropriate model and assigns the model to the Inference.

classDiagram

class InferenceBuilder {

+create(config InferenceConfig) * Inference

-inference()* Any

}Each model that is included requires its own InferenceBuilder. InferenceBuilder loads one model, then submits it to the Inference class when created. The Inference class checks this class against its expected_model_types() Set.

mac.inference.creation.InferenceBuilder Methods

| Method | Returns | Description |

|---|---|---|

create(config mac.config.inference.CustomInferenceConfig) (Required) | The custom Inference instance. | Creates an Inference subclass, then assigns a model and attributes. The CustomInferenceConfig is used to retrieve the config.model_path, which is a pathlib.Path object pointing to the folder where the model artifacts are saved. Every artifact loaded must be relative to config.model_path. This is set when the arbitrary python .zip file is uploaded and the environment for running it in Wallaroo is set. For example: loading the artifact vgg_clustering\feature_extractor.h5 would be set with config.model_path \ feature_extractor.h5. The model loaded must match an existing module. For our example, this is from sklearn.cluster import KMeans, and this must match the Inference expected_model_types. |

inference | custom Inference instance. | Returns the instantiated custom Inference object created from the create method. |

Arbitrary Python Runtime

Arbitrary Python always run in the containerized model runtime.

| Parameter | Description |

|---|---|

| Web Site | https://mlflow.org |

| Supported Libraries | mlflow==1.3.0 |

| Runtime | Containerized aka mlflow |

For models that do not fall under the supported model frameworks, organizations can use containerized MLFlow ML Models.

This guide details how to add ML Models from a model registry service into Wallaroo.

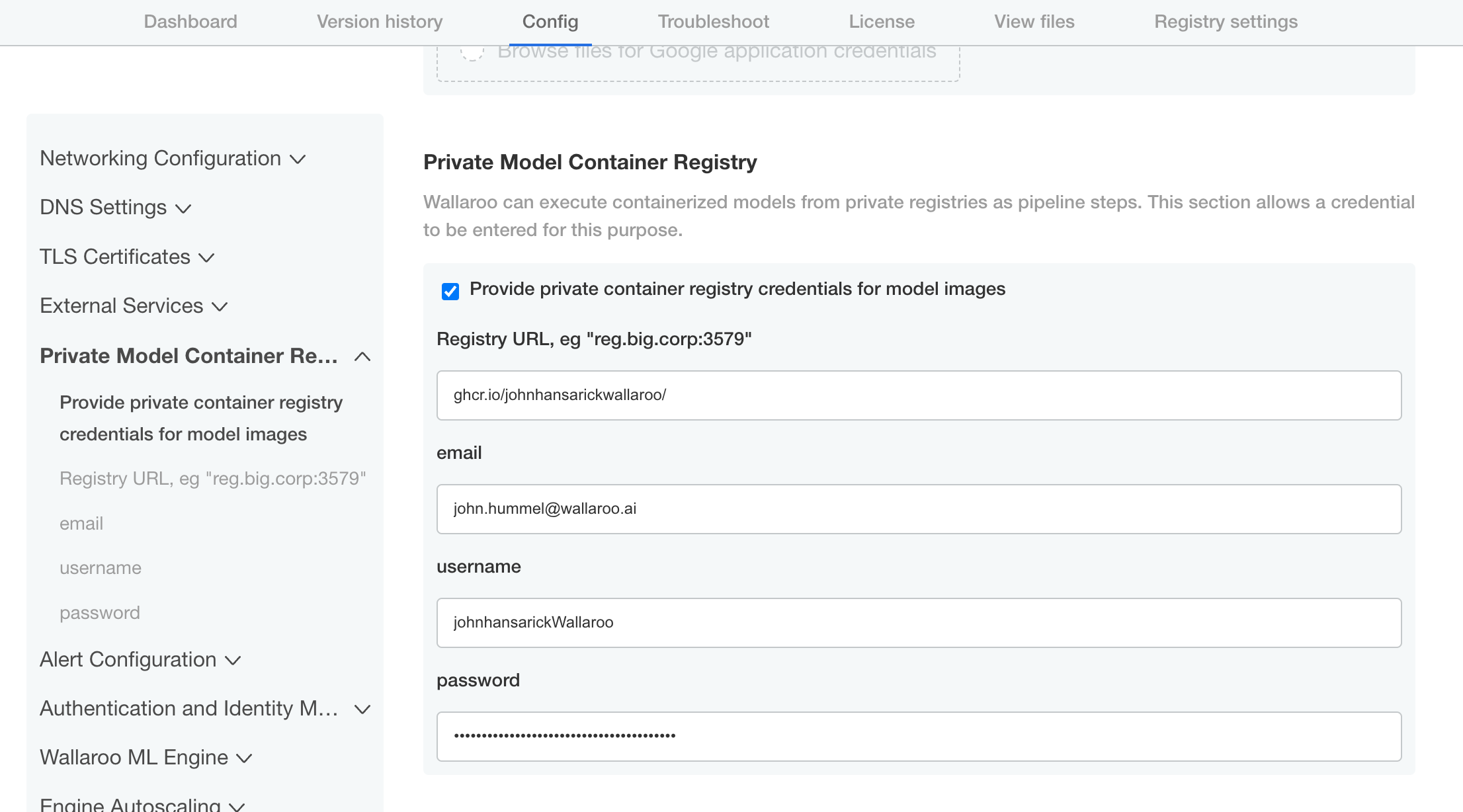

Wallaroo supports both public and private containerized model registries. See the Wallaroo Private Containerized Model Container Registry Guide for details on how to configure a Wallaroo instance with a private model registry.

Wallaroo users can register their trained MLFlow ML Models from a containerized model container registry into their Wallaroo instance and perform inferences with it through a Wallaroo pipeline.

As of this time, Wallaroo only supports MLFlow 1.30.0 containerized models. For information on how to containerize an MLFlow model, see the MLFlow Documentation.

Wallaroo supports both public and private containerized model registries. See the Wallaroo Private Containerized Model Container Registry Guide for details on how to configure a Wallaroo instance with a private model registry.

List Wallaroo Frameworks

Wallaroo frameworks are listed from the Wallaroo.Framework class. The following demonstrates listing all available supported frameworks.

from wallaroo.framework import Framework

[e.value for e in Framework]

['onnx',

'tensorflow',

'python',

'keras',

'sklearn',

'pytorch',

'xgboost',

'hugging-face-feature-extraction',

'hugging-face-image-classification',

'hugging-face-image-segmentation',

'hugging-face-image-to-text',

'hugging-face-object-detection',

'hugging-face-question-answering',

'hugging-face-stable-diffusion-text-2-img',

'hugging-face-summarization',

'hugging-face-text-classification',

'hugging-face-translation',

'hugging-face-zero-shot-classification',

'hugging-face-zero-shot-image-classification',

'hugging-face-zero-shot-object-detection',

'hugging-face-sentiment-analysis',

'hugging-face-text-generation']

2.1.1 - Model Registry Service with Wallaroo SDK Demonstration

This tutorial can be downloaded as part of the Wallaroo Tutorials repository.

MLFLow Registry Model Upload Demonstration

Wallaroo users can register their trained machine learning models from a model registry into their Wallaroo instance and perform inferences with it through a Wallaroo pipeline.

This guide details how to add ML Models from a model registry service into a Wallaroo instance.

Artifact Requirements

Models are uploaded to the Wallaroo instance as the specific artifact - the “file” or other data that represents the file itself. This must comply with the Wallaroo model requirements framework and version or it will not be deployed.

This tutorial will:

- Create a Wallaroo workspace and pipeline.

- Show how to connect a Wallaroo Registry that connects to a Model Registry Service.

- Use the registry connection details to upload a sample model to Wallaroo.

- Perform a sample inference.

Prerequisites

- A Wallaroo version 2023.2.1 or above instance.

- A Model (aka Artifact) Registry Service

References

Tutorial Steps

Import Libraries

We’ll start with importing the libraries we need for the tutorial.

import os

import wallaroo

Connect to the Wallaroo Instance through the User Interface

The next step is to connect to Wallaroo through the Wallaroo client. The Python library is included in the Wallaroo install and available through the Jupyter Hub interface provided with your Wallaroo environment.

This is accomplished using the wallaroo.Client() command, which provides a URL to grant the SDK permission to your specific Wallaroo environment. When displayed, enter the URL into a browser and confirm permissions. Store the connection into a variable that can be referenced later.

If logging into the Wallaroo instance through the internal JupyterHub service, use wl = wallaroo.Client(). For more information on Wallaroo Client settings, see the Client Connection guide.

wl=wallaroo.Client()

Connect to Model Registry

The Wallaroo Registry stores the URL and authentication token to the Model Registry service, with the assigned name. Note that in this demonstration all URLs and token are examples.

registry = wl.create_model_registry(name="JeffRegistry45",

token="dapi67c8c0b04606f730e78b7ae5e3221015-3",

url="https://sample.registry.service.azuredatabricks.net")

registry

| Field | Value |

|---|---|

| Name | JeffRegistry45 |

| URL | https://sample.registry.service.azuredatabricks.net |

| Workspaces | john.hummel@wallaroo.ai - Default Workspace |

| Created At | 2023-17-Jul 19:54:49 |

| Updated At | 2023-17-Jul 19:54:49 |

List Model Registries

Registries associated with a workspace are listed with the Wallaroo.Client.list_model_registries() method.

# List all registries in this workspace

registries = wl.list_model_registries()

registries

| name | registry url | created at | updated at |

|---|---|---|---|

| JeffRegistry45 | https://sample.registry.service.azuredatabricks.net | 2023-17-Jul 17:56:52 | 2023-17-Jul 17:56:52 |

| JeffRegistry45 | https://sample.registry.service.azuredatabricks.net | 2023-17-Jul 19:54:49 | 2023-17-Jul 19:54:49 |

Create Workspace

For this demonstration, we will create a random Wallaroo workspace, then attach our registry to the workspace so it is accessible by other workspace users.

Add Registry to Workspace

Registries are assigned to a Wallaroo workspace with the Wallaroo.registry.add_registry_to_workspace method. This allows members of the workspace to access the registry connection. A registry can be associated with one or more workspaces.

Add Registry to Workspace Parameters

| Parameter | Type | Description |

|---|---|---|

name | string (Required) | The numerical identifier of the workspace. |

# Make a random new workspace

import math

import random

num = math.floor(random.random()* 1000)

workspace_id = wl.create_workspace(f"test{num}").id()

registry.add_registry_to_workspace(workspace_id=workspace_id)

| Field | Value |

|---|---|

| Name | JeffRegistry45 |

| URL | https://sample.registry.service.azuredatabricks.net |

| Workspaces | test68, john.hummel@wallaroo.ai - Default Workspace |

| Created At | 2023-17-Jul 19:54:49 |

| Updated At | 2023-17-Jul 19:54:49 |

Remove Registry from Workspace

Registries are removed from a Wallaroo workspace with the Registry remove_registry_from_workspace method.

Remove Registry from Workspace Parameters

| Parameter | Type | Description |

|---|---|---|

workspace_id | Integer (Required) | The numerical identifier of the workspace. |

registry.remove_registry_from_workspace(workspace_id=workspace_id)

| Field | Value |

|---|---|

| Name | JeffRegistry45 |

| URL | https://sample.registry.service.azuredatabricks.net |

| Workspaces | john.hummel@wallaroo.ai - Default Workspace |

| Created At | 2023-17-Jul 19:54:49 |

| Updated At | 2023-17-Jul 19:54:49 |

List Models in a Registry

A List of models available to the Wallaroo instance through the MLFlow Registry is performed with the Wallaroo.Registry.list_models() method.

registry_models = registry.list_models()

registry_models

| Name | Registry User | Versions | Created At | Updated At |

| logreg1 | gib.bhojraj@wallaroo.ai | 1 | 2023-06-Jul 14:36:54 | 2023-06-Jul 14:36:56 |

| sidekick-test | gib.bhojraj@wallaroo.ai | 1 | 2023-11-Jul 14:42:14 | 2023-11-Jul 14:42:14 |

| testmodel | gib.bhojraj@wallaroo.ai | 1 | 2023-16-Jun 12:38:42 | 2023-06-Jul 15:03:41 |

| testmodel2 | gib.bhojraj@wallaroo.ai | 1 | 2023-16-Jun 12:41:04 | 2023-29-Jun 18:08:33 |

| verified-working | gib.bhojraj@wallaroo.ai | 1 | 2023-11-Jul 16:18:03 | 2023-11-Jul 16:57:54 |

| wine_quality | gib.bhojraj@wallaroo.ai | 2 | 2023-16-Jun 13:05:53 | 2023-16-Jun 13:09:57 |

Select Model from Registry

Registry models are selected from the Wallaroo.Registry.list_models() method, then specifying the model to use.

single_registry_model = registry_models[4]

single_registry_model

| Name | verified-working |

| Registry User | gib.bhojraj@wallaroo.ai |

| Versions | 1 |

| Created At | 2023-11-Jul 16:18:03 |

| Updated At | 2023-11-Jul 16:57:54 |

List Model Versions

The Registry Model attribute versions shows the complete list of versions for the particular model.

single_registry_model.versions()

| Name | Version | Description |

| verified-working | 3 | None |

List Model Version Artifacts

Artifacts belonging to a MLFlow registry model are listed with the Model Version list_artifacts() method. This returns all artifacts for the model.

single_registry_model.versions()[1].list_artifacts()

| File Name | File Size | Full Path |

|---|---|---|

| MLmodel | 559B | https://sample.registry.service.azuredatabricks.net/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9168792a16cb40a88de6959ef31e42a2/models/√erified-working/MLmodel |

| conda.yaml | 182B | https://sample.registry.service.azuredatabricks.net/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9168792a16cb40a88de6959ef31e42a2/models/√erified-working/conda.yaml |

| model.pkl | 829B | https://sample.registry.service.azuredatabricks.net/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9168792a16cb40a88de6959ef31e42a2/models/√erified-working/model.pkl |

| python_env.yaml | 122B | https://sample.registry.service.azuredatabricks.net/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9168792a16cb40a88de6959ef31e42a2/models/√erified-working/python_env.yaml |

| requirements.txt | 73B | https://sample.registry.service.azuredatabricks.net/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9168792a16cb40a88de6959ef31e42a2/models/√erified-working/requirements.txt |

Configure Data Schemas

To upload a ML Model to Wallaroo, the input and output schemas must be defined in pyarrow.lib.Schema format.

from wallaroo.framework import Framework

import pyarrow as pa

input_schema = pa.schema([

pa.field('inputs', pa.list_(pa.float64(), list_size=4))

])

output_schema = pa.schema([

pa.field('predictions', pa.int32()),

pa.field('probabilities', pa.list_(pa.float64(), list_size=3))

])

Upload a Model from a Registry

Models uploaded to the Wallaroo workspace are uploaded from a MLFlow Registry with the Wallaroo.Registry.upload method.

Upload a Model from a Registry Parameters

| Parameter | Type | Description |

|---|---|---|

name | string (Required) | The name to assign the model once uploaded. Model names are unique within a workspace. Models assigned the same name as an existing model will be uploaded as a new model version. |

path | string (Required) | The full path to the model artifact in the registry. |

framework | string (Required) | The Wallaroo model Framework. See Model Uploads and Registrations Supported Frameworks |

input_schema | pyarrow.lib.Schema (Required for non-native runtimes) | The input schema in Apache Arrow schema format. |

output_schema | pyarrow.lib.Schema (Required for non-native runtimes) | The output schema in Apache Arrow schema format. |

model = registry.upload_model(

name="verified-working",

path="https://sample.registry.service.azuredatabricks.net/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9168792a16cb40a88de6959ef31e42a2/models/√erified-working/model.pkl",

framework=Framework.SKLEARN,

input_schema=input_schema,

output_schema=output_schema)

model

| Name | verified-working |

| Version | cf194b65-65b2-4d42-a4e2-6ca6fa5bfc42 |

| File Name | model.pkl |

| SHA | 5f4c25b0b564ab9fe0ea437424323501a460aa74463e81645a6419be67933ca4 |

| Status | pending_conversion |

| Image Path | None |

| Updated At | 2023-17-Jul 17:57:23 |

Verify the Model Status

Once uploaded, the model will undergo conversion. The following will loop through the model status until it is ready. Once ready, it is available for deployment.

import time

while model.status() != "ready" and model.status() != "error":

print(model.status())

time.sleep(3)

print(model.status())

pending_conversion

pending_conversion

pending_conversion

pending_conversion

pending_conversion

pending_conversion

pending_conversion

pending_conversion

pending_conversion

pending_conversion

converting

converting

converting

converting

converting

converting

converting

converting

converting

converting

converting

converting

converting

converting

converting

ready

Model Runtime

Once uploaded and converted, the model runtime is derived. This determines whether to allocate resources to pipeline’s native runtime environment or containerized runtime environment. For more details, see the Wallaroo SDK Essentials Guide: Pipeline Deployment Configuration guide.

model.config().runtime()

'mlflow'

Deploy Pipeline

The model is uploaded and ready for use. We’ll add it as a step in our pipeline, then deploy the pipeline. For this example we’re allocated 0.5 cpu to the runtime environment and 1 CPU to the containerized runtime environment.

import os, json

from wallaroo.deployment_config import DeploymentConfigBuilder

deployment_config = DeploymentConfigBuilder().cpus(0.5).sidekick_cpus(model, 1).build()

pipeline = wl.build_pipeline("jefftest1")

pipeline = pipeline.add_model_step(model)

deployment = pipeline.deploy(deployment_config=deployment_config)

pipeline.status()

{'status': 'Running',

'details': [],

'engines': [{'ip': '10.244.3.148',

'name': 'engine-86c7fc5c95-8kwh5',

'status': 'Running',

'reason': None,

'details': [],

'pipeline_statuses': {'pipelines': [{'id': 'jefftest1',

'status': 'Running'}]},

'model_statuses': {'models': [{'name': 'verified-working',

'version': 'cf194b65-65b2-4d42-a4e2-6ca6fa5bfc42',

'sha': '5f4c25b0b564ab9fe0ea437424323501a460aa74463e81645a6419be67933ca4',

'status': 'Running'}]}}],

'engine_lbs': [{'ip': '10.244.4.203',

'name': 'engine-lb-584f54c899-tpv5b',

'status': 'Running',

'reason': None,

'details': []}],

'sidekicks': [{'ip': '10.244.0.225',

'name': 'engine-sidekick-verified-working-43-74f957566d-9zdfh',

'status': 'Running',

'reason': None,

'details': [],

'statuses': '\n'}]}

Run Inference

A sample inference will be run. First the pandas DataFrame used for the inference is created, then the inference run through the pipeline’s infer method.

import pandas as pd

from sklearn.datasets import load_iris

data = load_iris(as_frame=True)

X = data['data'].values

dataframe = pd.DataFrame({"inputs": data['data'][:2].values.tolist()})

dataframe

| inputs | |

|---|---|

| 0 | [5.1, 3.5, 1.4, 0.2] |

| 1 | [4.9, 3.0, 1.4, 0.2] |

deployment.infer(dataframe)

| time | in.inputs | out.predictions | out.probabilities | check_failures | |

|---|---|---|---|---|---|

| 0 | 2023-07-17 17:59:18.840 | [5.1, 3.5, 1.4, 0.2] | 0 | [0.981814913291491, 0.018185072312411506, 1.43... | 0 |

| 1 | 2023-07-17 17:59:18.840 | [4.9, 3.0, 1.4, 0.2] | 0 | [0.9717552971628304, 0.02824467272952288, 3.01... | 0 |

Undeploy Pipelines

With the tutorial complete, the pipeline is undeployed to return the resources back to the cluster.

pipeline.undeploy()

| name | jefftest1 |

|---|---|

| created | 2023-07-17 17:59:05.922172+00:00 |

| last_updated | 2023-07-17 17:59:06.684060+00:00 |

| deployed | False |

| tags | |

| versions | c2cca319-fcad-47b2-9de0-ad5b2852d1a2, f1e6d1b5-96ee-46a1-bfdf-174310ff4270 |

| steps | verified-working |

2.2 - Wallaroo SDK Upload Tutorials: Arbitrary Python

The following tutorials cover how to upload sample arbitrary python models into a Wallaroo instance.

| Parameter | Description |

|---|---|

| Web Site | https://www.python.org/ |

| Supported Libraries | python==3.8 |

| Framework | Framework.CUSTOM aka custom |

| Runtime | Containerized aka flight |

Arbitrary Python models, also known as Bring Your Own Predict (BYOP) allow for custom model deployments with supporting scripts and artifacts. These are used with pre-trained models (PyTorch, Tensorflow, etc) along with whatever supporting artifacts they require. Supporting artifacts can include other Python modules, model files, etc. These are zipped with all scripts, artifacts, and a requirements.txt file that indicates what other Python models need to be imported that are outside of the typical Wallaroo platform.

Contrast this with Wallaroo Python models - aka “Python steps”. These are standalone python scripts that use the python libraries natively supported by the Wallaroo platform. These are used for either simple model deployment (such as ARIMA Statsmodels), or data formatting such as the postprocessing steps. A Wallaroo Python model will be composed of one Python script that matches the Wallaroo requirements.

Arbitrary Python File Requirements

Arbitrary Python (BYOP) models are uploaded to Wallaroo via a ZIP file with the following components:

| Artifact | Type | Description |

|---|---|---|

Python scripts aka .py files with classes that extend mac.inference.Inference and mac.inference.creation.InferenceBuilder | Python Script | Extend the classes mac.inference.Inference and mac.inference.creation.InferenceBuilder. These are included with the Wallaroo SDK. Further details are in Arbitrary Python Script Requirements. Note that there is no specified naming requirements for the classes that extend mac.inference.Inference and mac.inference.creation.InferenceBuilder - any qualified class name is sufficient as long as these two classes are extended as defined below. |

requirements.txt | Python requirements file | This sets the Python libraries used for the arbitrary python model. These libraries should be targeted for Python 3.8 compliance. These requirements and the versions of libraries should be exactly the same between creating the model and deploying it in Wallaroo. This insures that the script and methods will function exactly the same as during the model creation process. |

| Other artifacts | Files | Other models, files, and other artifacts used in support of this model. |

For example, the if the arbitrary python model will be known as vgg_clustering, the contents may be in the following structure, with vgg_clustering as the storage directory:

vgg_clustering\

feature_extractor.h5

kmeans.pkl

custom_inference.py

requirements.txt

Note the inclusion of the custom_inference.py file. This file name is not required - any Python script or scripts that extend the classes listed above are sufficient. This Python script could have been named vgg_custom_model.py or any other name as long as it includes the extension of the classes listed above.

The sample arbitrary python model file is created with the command zip -r vgg_clustering.zip vgg_clustering/.

Wallaroo Arbitrary Python uses the Wallaroo SDK mac module, included in the Wallaroo SDK 2023.2.1 and above. See the Wallaroo SDK Install Guides for instructions on installing the Wallaroo SDK.

Arbitrary Python Script Requirements

The entry point of the arbitrary python model is any python script that extends the following classes. These are included with the Wallaroo SDK. The required methods that must be overridden are specified in each section below.

mac.inference.Inferenceinterface serves model inferences based on submitted input some input. Its purpose is to serve inferences for any supported arbitrary model framework (e.g.scikit,kerasetc.).classDiagram class Inference { <<Abstract>> +model Optional[Any] +expected_model_types()* Set +predict(input_data: InferenceData)* InferenceData -raise_error_if_model_is_not_assigned() None -raise_error_if_model_is_wrong_type() None }mac.inference.creation.InferenceBuilderbuilds a concreteInference, i.e. instantiates anInferenceobject, loads the appropriate model and assigns the model to to the Inference object.classDiagram class InferenceBuilder { +create(config InferenceConfig) * Inference -inference()* Any }

mac.inference.Inference

mac.inference.Inference Objects

| Object | Type | Description |

|---|---|---|

model (Required) | [Any] | One or more objects that match the expected_model_types. This can be a ML Model (for inference use), a string (for data conversion), etc. See Arbitrary Python Examples for examples. |

mac.inference.Inference Methods

| Method | Returns | Description |

|---|---|---|

expected_model_types (Required) | Set | Returns a Set of models expected for the inference as defined by the developer. Typically this is a set of one. Wallaroo checks the expected model types to verify that the model submitted through the InferenceBuilder method matches what this Inference class expects. |

_predict (input_data: mac.types.InferenceData) (Required) | mac.types.InferenceData | The entry point for the Wallaroo inference with the following input and output parameters that are defined when the model is updated.

InferenceDataValidationError exception is raised when the input data does not match mac.types.InferenceData. |

raise_error_if_model_is_not_assigned | N/A | Error when a model is not set to Inference. |

raise_error_if_model_is_wrong_type | N/A | Error when the model does not match the expected_model_types. |

IMPORTANT NOTE

Verify that the inputs and outputs match the InferenceData input and output types: a Dictionary of numpy arrays defined by the input_schema and output_schema parameters when uploading the model to the Wallaroo instance. The following code is an example of a Dictionary of numpy arrays.

preds = self.model.predict(data)

preds = preds.numpy()

rows, _ = preds.shape

preds = preds.reshape((rows,))

return {"prediction": preds} # a Dictionary of numpy arrays.

The example, the expected_model_types can be defined for the KMeans model.

from sklearn.cluster import KMeans

class SampleClass(mac.inference.Inference):

@property

def expected_model_types(self) -> Set[Any]:

return {KMeans}

mac.inference.creation.InferenceBuilder

InferenceBuilder builds a concrete Inference, i.e. instantiates an Inference object, loads the appropriate model and assigns the model to the Inference.

classDiagram

class InferenceBuilder {

+create(config InferenceConfig) * Inference

-inference()* Any

}Each model that is included requires its own InferenceBuilder. InferenceBuilder loads one model, then submits it to the Inference class when created. The Inference class checks this class against its expected_model_types() Set.

mac.inference.creation.InferenceBuilder Methods

| Method | Returns | Description |

|---|---|---|

create(config mac.config.inference.CustomInferenceConfig) (Required) | The custom Inference instance. | Creates an Inference subclass, then assigns a model and attributes. The CustomInferenceConfig is used to retrieve the config.model_path, which is a pathlib.Path object pointing to the folder where the model artifacts are saved. Every artifact loaded must be relative to config.model_path. This is set when the arbitrary python .zip file is uploaded and the environment for running it in Wallaroo is set. For example: loading the artifact vgg_clustering\feature_extractor.h5 would be set with config.model_path \ feature_extractor.h5. The model loaded must match an existing module. For our example, this is from sklearn.cluster import KMeans, and this must match the Inference expected_model_types. |

inference | custom Inference instance. | Returns the instantiated custom Inference object created from the create method. |

Arbitrary Python Runtime

Arbitrary Python always run in the containerized model runtime.

2.2.1 - Wallaroo SDK Upload Arbitrary Python Tutorial: Generate VGG16 Model

This tutorial can be downloaded as part of the Wallaroo Tutorials repository.

Wallaroo SDK Upload Arbitrary Python Tutorial: Generate Model

This tutorial demonstrates how to use arbitrary python as a ML Model in Wallaroo. Arbitrary Python allows organizations to use Python scripts that require specific libraries and artifacts as models in the Wallaroo engine. This allows for highly flexible use of ML models with supporting scripts.

Tutorial Goals

This tutorial is split into two parts:

- Wallaroo SDK Upload Arbitrary Python Tutorial: Generate Model: Train a dummy

KMeansmodel for clustering images using a pre-trainedVGG16model oncifar10as a feature extractor. The Python entry points used for Wallaroo deployment will be added and described.- A copy of the arbitrary Python model

models/model-auto-conversion-BYOP-vgg16-clustering.zipis included in this tutorial, so this step can be skipped.

- A copy of the arbitrary Python model

- Arbitrary Python Tutorial Deploy Model in Wallaroo Upload and Deploy: Deploys the

KMeansmodel in an arbitrary Python package in Wallaroo, and perform sample inferences. The filemodels/model-auto-conversion-BYOP-vgg16-clustering.zipis provided so users can go right to testing deployment.

Arbitrary Python Script Requirements

The entry point of the arbitrary python model is any python script that must include the following.