Wallaroo Air-Gapped IBM Cloud Installation Guide


This guide is for organizations with clusters that meet the Wallaroo OpenShift prerequisites in an “air-gapped” environment in IBM Cloud.

Some knowledge of the following will be useful in working with this guide:

- Working knowledge of OpenShift and IBM Cloud.

- Working knowledge of Kubernetes, mainly kubectl and kots.

For additional details, Contact Us.

Before starting, select which method to install Wallaroo.

- helm: Primarily a script and configuration file based installation method.

- kots: Primarily a GUI based installation process.

Wallaroo Image Storage

Wallaroo installation images are stored in the private image repository in the following format.

registry.wallaroo.ai:1234/wallaroo/conductor-wallsvc:2025.2.2-6555
------------------------- -------- ----------------- -------------
            |                 |            |               |
   Registry Host Name     Namespace   Repository          Tag

- Registry Host Name: The FQDN of the registry host. For example: registry.example.ai.

- Namespace: The registry namespace under which all Wallaroo images are stored.

- Repository: The specific image.

- Tag: The version of the image.
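As an illustration of the format above, the following sketch splits an image reference into its four parts using shell parameter expansion. The function name parse_image_ref is hypothetical and not part of any Wallaroo tooling, and the sketch assumes the reference always ends with a tag.

```shell
# parse_image_ref REF: split HOST[:PORT]/NAMESPACE/REPOSITORY:TAG into its parts.
# Hypothetical helper for illustration; assumes a tag is always present.
parse_image_ref() {
  local ref="$1"
  local tag="${ref##*:}"        # text after the last ':' is the tag
  local path="${ref%:*}"        # everything before the tag
  local host="${path%%/*}"      # up to the first '/', including any port
  local rest="${path#*/}"       # namespace/repository
  local namespace="${rest%%/*}"
  local repository="${rest#*/}"
  printf 'host=%s\nnamespace=%s\nrepository=%s\ntag=%s\n' \
    "$host" "$namespace" "$repository" "$tag"
}

parse_image_ref "registry.wallaroo.ai:1234/wallaroo/conductor-wallsvc:2025.2.2-6555"
```

Note that a port, when present, stays with the registry host name, which matches how the host is later combined with the namespace for the imageRegistry setting.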

IBM Cloud Registry

To create a registry in IBM Cloud, see Getting started with Container Registry. The credentials for pushing images to this registry are gathered by the command:

ibmcloud iam oauth-tokens | sed -ne '/IAM token/s/.* //p'

These credentials expire after 60 minutes.

The default registry username is iambearer.
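The sketch below shows what the sed filter in the command above does, using a made-up line of input rather than a live call to ibmcloud. The function name extract_iam_token, the sample line format, and the token value are assumptions for illustration only.

```shell
# extract_iam_token: hypothetical wrapper around the sed filter shown above.
# Reads `ibmcloud iam oauth-tokens` style output on stdin and prints only the token.
extract_iam_token() {
  # On lines containing "IAM token", delete everything up to the last space.
  sed -ne '/IAM token/s/.* //p'
}

# Illustrative input only: the line format is assumed and the token is fake.
printf 'IAM token:  Bearer abc.def.ghi\n' | extract_iam_token

# Possible use against a real registry (not run here; REGISTRY_HOST is a placeholder):
#   ibmcloud iam oauth-tokens | extract_iam_token | \
#     docker login -u iambearer --password-stdin "$REGISTRY_HOST"
```

Because the token expires, a login piped this way would need to be repeated for long-running image loads.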

Install Software Requirements

The following software or runtimes are required for Wallaroo 2025.2. Most are automatically available through the supported cloud providers.

| Software or Runtime | Description | Minimum Supported Version | Preferred Version(s) |

|---|---|---|---|

| OpenShift | Container Platform | 4.17 | 4.18 |

| Kubernetes | Cluster deployment management | 1.29 with Container Management set to containerd. | 1.31 |

| kubectl | Kubernetes administrative console application | 1.31 | 1.31 |

IBM Cloud based requirements:

| Software or Runtime | Description | Minimum Supported Version | Preferred Version(s) |

|---|---|---|---|

| IBM Cloud CLI | IBM Cloud administrative console application. | 2.32.2 | 2.32.2 |

| ks plug-in | KS aka Container Service Plugin for IBM Cloud. This plugin is required. | N/A | N/A |

With IBM Cloud CLI the IBM Cloud CLI Kubernetes Plugin is also required. This is installed with the following command:

ibmcloud plugin install kubernetes-service

After installing the Kubernetes service plugin, the ks plugin is installed with:

ibmcloud plugin install ks

Taints and Labels Requirements

Nodepools created for Wallaroo require the following taints and labels.

These taints and labels are applied when using the scripts provided in this guide. For custom taints and labels, see the Custom Taints and Labels Guide.

| Nodepool | Taints | Labels | Description |

|---|---|---|---|

| general | N/A | wallaroo.ai/node-purpose: general | For general Wallaroo services. No taints are applied to this nodepool to allow any process not assigned with a deployment label to run in this space. |

| persistent | wallaroo.ai/persistent=true:NoSchedule | wallaroo.ai/node-purpose: persistent | For Wallaroo services with persistentVolume settings, including JupyterHub, Minio, etc. |

| pipelines-x86 | wallaroo.ai/pipelines=true:NoSchedule | wallaroo.ai/node-purpose: pipelines | For deploying pipelines for default x86 architectures. The taints and label must be applied to any nodepool used for model deployments. |
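For scripting against the table above, the mapping can be expressed as two small lookup functions. This is a minimal sketch: pool_label and pool_taint are hypothetical helper names, and the values are copied from the table in the key=value form that kubectl expects.

```shell
# Hypothetical lookup helpers mirroring the taints and labels table.
pool_label() {
  case "$1" in
    general)       echo "wallaroo.ai/node-purpose=general" ;;
    persistent)    echo "wallaroo.ai/node-purpose=persistent" ;;
    pipelines-x86) echo "wallaroo.ai/node-purpose=pipelines" ;;
    *)             return 1 ;;   # unknown nodepool
  esac
}

pool_taint() {
  case "$1" in
    general)       echo "" ;;    # no taint on the general nodepool
    persistent)    echo "wallaroo.ai/persistent=true:NoSchedule" ;;
    pipelines-x86) echo "wallaroo.ai/pipelines=true:NoSchedule" ;;
    *)             return 1 ;;   # unknown nodepool
  esac
}

# Print the expected label and taint for each nodepool.
for pool in general persistent pipelines-x86; do
  taint="$(pool_taint "$pool")"
  printf '%-14s label=%s taint=%s\n' "$pool" "$(pool_label "$pool")" "${taint:-none}"
done
```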

OpenShift Cluster Preparation

The following steps are examples of setting up an OpenShift cluster in IBM Cloud that meets the Wallaroo installation requirements. Modify these steps as needed for your organization's needs.

Prerequisites

The following tools are required for these steps.

- kubectl

- jq version 1.7.1

Information Gathering

The following steps gather the information needed for the installation script variables. Update the script with the collected details.

- CLUSTER_NAME: The name of the cluster. For example: CLUSTER_NAME="samplecluster"

- OCP_VERSION: The OpenShift version. Retrieved with ibmcloud oc versions. For example: OCP_VERSION="4.17.40_openshift"

- VPC_ID: The ID of the IBM Cloud® Virtual Private Cloud. Retrieved with ibmcloud oc vpcs. For example: VPC_ID="abcdefg"

- COS_INSTANCE_CRN: The Cloud Resource Name (CRN) of the Cloud Object Storage instance. Retrieved with ibmcloud resource service-instances --long --service-name cloud-object-storage. For example: COS_INSTANCE_CRN="crn:abcdefg"

- Three zones. Note that the script demonstrated can be modified for single zone installations.

Retrieve the zones for the region with:

ibmcloud oc zones --provider vpc-gen2

Run the following to retrieve the subnet details for each selected zone:

ibmcloud oc subnets --provider vpc-gen2 --vpc-id <your-vpc-id> --zone <your-zone-name>

Set the following variables for each zone:

- ZONE_1
- SUBNET_ID_1
- ZONE_2
- SUBNET_ID_2
- ZONE_3
- SUBNET_ID_3

For example:

# Zone 1
ZONE_1="YOUR ZONE 1"
SUBNET_ID_1="YOUR SUBNET ID 1"
# Zone 2
ZONE_2="YOUR ZONE 2"
SUBNET_ID_2="YOUR SUBNET ID 2"
# Zone 3
ZONE_3="YOUR ZONE 3"
SUBNET_ID_3="YOUR SUBNET ID 3"
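Because the cluster creation takes a long time, it can help to confirm that none of the variables are still unset or left at their "YOUR ..." placeholder before running anything. The following is a minimal sketch of such a check; check_required_vars is a hypothetical helper and the sample values are made up.

```shell
# check_required_vars NAME...: fail if any named variable is empty or still a
# "YOUR ..." placeholder. Hypothetical helper; pass only trusted variable names,
# since eval is used for the indirect lookup.
check_required_vars() {
  local name value missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    case "$value" in
      ""|"YOUR "*)
        echo "Unset or placeholder value: $name" >&2
        missing=1
        ;;
    esac
  done
  return "$missing"
}

# Made-up sample values for illustration.
ZONE_1="us-south-1"
SUBNET_ID_1="example-subnet-id-1"

if check_required_vars ZONE_1 SUBNET_ID_1; then
  echo "Zone configuration looks complete."
fi
```

The same call could be extended with CLUSTER_NAME, OCP_VERSION, VPC_ID, COS_INSTANCE_CRN, and the remaining zone variables.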

Set Workers for Pools

The following values are used to set the workers per pool per zone.

- Set the workers per zone for the pipelines pool. The following are the minimum values:

PIPELINES_MIN_WORKERS=1
PIPELINES_MAX_WORKERS=3

- (OPTIONAL) Set the L4 GPU pools. To include them, set CREATE_L4_PIPELINES_POOL=true. If enabled, the following are the minimum values for GPU pools:

L4_POOL_MIN_WORKERS=1
L4_POOL_MAX_WORKERS=2
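The worker settings are later passed to the autoscaler as numbers, so a quick sanity check that each pair is numeric and that the minimum does not exceed the maximum can catch typos early. This is a sketch only; valid_worker_range is a hypothetical helper and the rule it enforces is an assumption, not a documented Wallaroo requirement.

```shell
# valid_worker_range MIN MAX: succeed when both values are non-negative
# integers and MIN <= MAX. Hypothetical helper for illustration.
valid_worker_range() {
  local min="$1" max="$2"
  case "$min" in ""|*[!0-9]*) return 1 ;; esac
  case "$max" in ""|*[!0-9]*) return 1 ;; esac
  [ "$min" -le "$max" ]
}

PIPELINES_MIN_WORKERS=1
PIPELINES_MAX_WORKERS=3

if valid_worker_range "$PIPELINES_MIN_WORKERS" "$PIPELINES_MAX_WORKERS"; then
  echo "pipelines pool range OK"
else
  echo "pipelines pool range invalid" >&2
fi
```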

Set Worker Node Flavors

Worker node flavors depend on the machine and GPU types available in the target zone. List the flavors available in a zone with the following command:

ibmcloud oc flavors --zone <your-zone-name> --provider vpc-gen2

The following are set in the script as recommended flavors. Update based on those available in your zone.

# Flavor for the initial default pool.

DEFAULT_POOL_FLAVOR="bx3d.4x20"

# Flavor for the general, persistent, and standard pipelines pools.

STANDARD_POOL_FLAVOR="cx2.8x16"

# Flavor for the optional L4 GPU pipelines pool.

L4_GPU_FLAVOR="gx3.16x80.l4"

Update the Execute Script

Update the variables in the following script, available for download here: create_openshift_cluster.sh.

Once configured, make the script executable (chmod +x create_openshift_cluster.sh) and run it (./create_openshift_cluster.sh).

#!/bin/bash

# This script provisions a multi-zone OpenShift cluster on IBM Cloud VPC

# with standard, persistent, and optional GPU worker pools.

#

# Prerequisites: ibmcloud CLI, kubernetes-service plugin, kubectl, and jq.

# Exit immediately if a command exits with a non-zero status.

set -e

# Print commands and their arguments as they are executed.

set -x

# --- CONFIGURATION ---

# A unique name for your cluster.

CLUSTER_NAME="samplecluster"

# The OpenShift version.

OCP_VERSION="4.17.40_openshift"

# The ID of your VPC.

VPC_ID="YOUR VPC ID"

# The CRN of your Cloud Object Storage instance.

COS_INSTANCE_CRN="YOUR COS INSTANCE CRN"

# --- Worker Node Flavors ---

# Flavor for the initial default pool.

DEFAULT_POOL_FLAVOR="bx3d.4x20"

# Flavor for the general, persistent, and standard pipelines pools.

STANDARD_POOL_FLAVOR="cx2.8x16"

# Flavor for the optional L4 GPU pipelines pool.

L4_GPU_FLAVOR="gx3.16x80.l4"

# --- Multi-Zone Configuration (3 Zones) ---

# Zone 1

ZONE_1="YOUR ZONE 1"

SUBNET_ID_1="YOUR SUBNET ID 1"

# Zone 2

ZONE_2="YOUR ZONE 2"

SUBNET_ID_2="YOUR SUBNET ID 2"

# Zone 3

ZONE_3="YOUR ZONE 3"

SUBNET_ID_3="YOUR SUBNET ID 3"

# --- Optional L4 GPU Pool ---

# Set to 'true' to create the pipelines-l4 pool, or 'false' to skip it.

CREATE_L4_PIPELINES_POOL=true

L4_POOL_MIN_WORKERS=1

L4_POOL_MAX_WORKERS=2

# Min and max workers *per zone* for the standard pipelines pool

PIPELINES_MIN_WORKERS=1

PIPELINES_MAX_WORKERS=3

# --- HELPER FUNCTION ---

# Waits for worker nodes in a specific pool to reach the 'normal' state.

function waitForWorkers() {
  local CLUSTER_NAME="$1"
  local POOL_NAME="$2"
  local EXPECTED_COUNT="$3"

  echo "--> Waiting for $EXPECTED_COUNT worker(s) in pool '$POOL_NAME' to become 'normal'..."

  while true; do
    local NORMAL_COUNT
    # Count the workers in the pool whose health message is "Ready".
    NORMAL_COUNT=$(ibmcloud oc worker ls --cluster "$CLUSTER_NAME" --worker-pool "$POOL_NAME" --output json | jq -r '[.[] | select(.health.message=="Ready")] | length')

    if [[ "$NORMAL_COUNT" -ge "$EXPECTED_COUNT" ]]; then
      echo "--> Success: $NORMAL_COUNT of $EXPECTED_COUNT worker(s) are 'normal' in pool '$POOL_NAME'."
      break
    else
      echo "--> $NORMAL_COUNT of $EXPECTED_COUNT workers are 'normal'. Checking again in 30 seconds..."
      sleep 30
    fi
  done
}

echo "Step 1: Starting OpenShift cluster creation for $CLUSTER_NAME..."

ibmcloud oc cluster create vpc-gen2 \

--name "$CLUSTER_NAME" \

--version "$OCP_VERSION" \

--vpc-id "$VPC_ID" \

--subnet-id "$SUBNET_ID_1" \

--flavor "$DEFAULT_POOL_FLAVOR" \

--workers 2 \

--zone "$ZONE_1" \

--cos-instance "$COS_INSTANCE_CRN"

echo "Step 2: Waiting for cluster to be in 'normal' state. This will take 40+ minutes..."

while [[ $(ibmcloud oc cluster get --cluster "$CLUSTER_NAME" --output json | jq -r .state) != "normal" ]]; do
  echo "Cluster is not ready yet. Current state: $(ibmcloud oc cluster get --cluster "$CLUSTER_NAME" --output json | jq -r .state). Checking again in 60 seconds."
  sleep 60
done

echo "Cluster is ready. Proceeding with worker pool configuration."

# --- Create General Worker Pool ---

echo "Step 3: Creating 'general' worker pool with flavor $STANDARD_POOL_FLAVOR..."

ibmcloud oc worker-pool create vpc-gen2 --cluster "$CLUSTER_NAME" --name general --flavor "$STANDARD_POOL_FLAVOR" --size-per-zone 1 --label wallaroo.ai/node-purpose=general

echo "Adding zones to 'general' pool..."

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_1" --subnet-id "$SUBNET_ID_1" --cluster "$CLUSTER_NAME" --worker-pool general

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_2" --subnet-id "$SUBNET_ID_2" --cluster "$CLUSTER_NAME" --worker-pool general

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_3" --subnet-id "$SUBNET_ID_3" --cluster "$CLUSTER_NAME" --worker-pool general

# --- Create Persistent Worker Pool (and apply taint) ---

echo "Step 4: Creating 'persistent' worker pool..."

ibmcloud oc worker-pool create vpc-gen2 --cluster "$CLUSTER_NAME" --name persistent --flavor "$STANDARD_POOL_FLAVOR" --size-per-zone 1 --label wallaroo.ai/node-purpose=persistent

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_1" --subnet-id "$SUBNET_ID_1" --cluster "$CLUSTER_NAME" --worker-pool persistent

# --- Create Standard Pipelines Worker Pool (and apply taint) ---

echo "Step 5: Creating 'pipelines' worker pool..."

ibmcloud oc worker-pool create vpc-gen2 --cluster "$CLUSTER_NAME" --name pipelines --flavor "$STANDARD_POOL_FLAVOR" --size-per-zone 1 --label wallaroo.ai/node-purpose=pipelines

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_1" --subnet-id "$SUBNET_ID_1" --cluster "$CLUSTER_NAME" --worker-pool pipelines

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_2" --subnet-id "$SUBNET_ID_2" --cluster "$CLUSTER_NAME" --worker-pool pipelines

ibmcloud oc zone add vpc-gen2 --zone "$ZONE_3" --subnet-id "$SUBNET_ID_3" --cluster "$CLUSTER_NAME" --worker-pool pipelines

# --- Create Optional L4 GPU Pipelines Worker Pool (and apply taints) ---

if [ "$CREATE_L4_PIPELINES_POOL" = true ]; then
  echo "Step 6: Creating optional 'pipelines-l4' GPU worker pool..."
  ibmcloud oc worker-pool create vpc-gen2 --cluster "$CLUSTER_NAME" --name pipelines-l4 --flavor "$L4_GPU_FLAVOR" --size-per-zone 1 \
    --label wallaroo.ai/node-purpose=pipelines \
    --label wallaroo.ai/accelerator=l4
  ibmcloud oc zone add vpc-gen2 --zone "$ZONE_1" --subnet-id "$SUBNET_ID_1" --cluster "$CLUSTER_NAME" --worker-pool pipelines-l4
fi

# --- Wait for all worker nodes to finish provisioning ---

echo "Step 7: Waiting for all worker pools to finish provisioning..."

waitForWorkers "$CLUSTER_NAME" "general" 3
waitForWorkers "$CLUSTER_NAME" "persistent" 1
waitForWorkers "$CLUSTER_NAME" "pipelines" 3

if [ "$CREATE_L4_PIPELINES_POOL" = true ]; then
  waitForWorkers "$CLUSTER_NAME" "pipelines-l4" 1
fi

ibmcloud oc cluster config --cluster "$CLUSTER_NAME" --admin

# --- Apply Taints using kubectl ---

echo "Step 8: Applying taints to worker pools..."

echo "Waiting for nodes in 'persistent' pool to be ready before tainting..."

kubectl wait --for=condition=Ready node -l ibm-cloud.kubernetes.io/worker-pool-name=persistent --timeout=10m

echo "Tainting 'persistent' pool nodes..."

kubectl taint nodes -l ibm-cloud.kubernetes.io/worker-pool-name=persistent wallaroo.ai/persistent=true:NoSchedule --overwrite

echo "Waiting for nodes in 'pipelines' pool to be ready before tainting..."

kubectl wait --for=condition=Ready node -l ibm-cloud.kubernetes.io/worker-pool-name=pipelines --timeout=10m

echo "Tainting 'pipelines' pool nodes..."

kubectl taint nodes -l ibm-cloud.kubernetes.io/worker-pool-name=pipelines wallaroo.ai/pipelines=true:NoSchedule --overwrite

if [ "$CREATE_L4_PIPELINES_POOL" = true ]; then
  echo "Waiting for nodes in 'pipelines-l4' pool to be ready before tainting..."
  kubectl wait --for=condition=Ready node -l ibm-cloud.kubernetes.io/worker-pool-name=pipelines-l4 --timeout=15m

  echo "Tainting 'pipelines-l4' pool nodes..."
  kubectl taint nodes -l ibm-cloud.kubernetes.io/worker-pool-name=pipelines-l4 wallaroo.ai/pipelines=true:NoSchedule --overwrite
  kubectl taint nodes -l ibm-cloud.kubernetes.io/worker-pool-name=pipelines-l4 nvidia.com/gpu=l4:NoSchedule --overwrite
fi

# --- Configure Autoscaling ---

echo "Step 9: Enabling and configuring cluster autoscaler..."

ibmcloud oc cluster addon enable cluster-autoscaler --cluster "$CLUSTER_NAME"

echo "Waiting for autoscaler addon to become active..."

while [[ $(ibmcloud oc cluster addon ls --cluster "$CLUSTER_NAME" --output json | jq -r '.[] | select(.name=="cluster-autoscaler") | .healthState') != "normal" ]]; do
  echo "Autoscaler addon is not active yet. Checking again in 20 seconds."
  sleep 20
done

echo "Building autoscaler configuration using jq..."

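JSON_CONFIG=$(jq -n \
  --argjson pipelines_min "$PIPELINES_MIN_WORKERS" \
  --argjson pipelines_max "$PIPELINES_MAX_WORKERS" \
  --arg create_l4 "$CREATE_L4_PIPELINES_POOL" \
  --argjson l4_min "$L4_POOL_MIN_WORKERS" \
  --argjson l4_max "$L4_POOL_MAX_WORKERS" \
  '
  # Start with the static pools
  [
    {"name":"general", "minSize":1, "maxSize":1, "enabled":false},
    {"name":"persistent", "minSize":1, "maxSize":1, "enabled":false},
    {"name":"pipelines", "minSize":$pipelines_min, "maxSize":$pipelines_max, "enabled":true}
  ]
  # Conditionally add the L4 pool
  | if $create_l4 == "true" then
      . + [{"name":"pipelines-l4", "minSize":$l4_min, "maxSize":$l4_max, "enabled":true}]
    else
      .
    end
  '
)

# Apply the configuration to the autoscaler add-on.
# ASSUMPTION: the add-on reads its worker pool settings from the 'workerPoolsConfig.json'
# key of the 'iks-ca-configmap' ConfigMap in the kube-system namespace. Verify this
# against your cluster before relying on it.
kubectl patch configmap iks-ca-configmap -n kube-system --type merge \
  -p "$(jq -n --arg cfg "$(jq -c . <<< "$JSON_CONFIG")" '{data: {"workerPoolsConfig.json": $cfg}}')"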

# --- Final Cleanup ---

echo "Step 10: Removing the temporary 'default' worker pool..."

waitForWorkers "$CLUSTER_NAME" "default" 2

ibmcloud oc worker-pool rm --cluster "$CLUSTER_NAME" --worker-pool default -f

echo "---"

echo "✅ SUCCESS: Cluster $CLUSTER_NAME is provisioned and configured."

echo "Run the following command to connect to your cluster:"

echo "ibmcloud oc cluster config --cluster $CLUSTER_NAME --admin"

echo "---"
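The polling loops in the script above repeat until the expected state is reached, with no upper bound on the number of attempts. As a sketch of one alternative, the helper below retries a command a limited number of times and then gives up. retry_until is a hypothetical function, not part of the downloadable script.

```shell
# retry_until MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, sleeping DELAY_SECONDS between attempts.
# Returns 1 if COMMAND still fails after MAX_ATTEMPTS tries.
retry_until() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  while ! "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "Gave up after $attempt attempt(s): $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Trivial demonstration with a command that succeeds immediately.
retry_until 3 0 true && echo "condition met"

# Possible use in the script (not run here), where cluster_is_normal would be a
# hypothetical function wrapping the `ibmcloud oc cluster get ... | jq` state check:
#   retry_until 120 60 cluster_is_normal
```

With set -e active, a failed retry_until call stops the script instead of leaving it waiting indefinitely.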

Create New Default Storage Class

Wallaroo requires a Storage Class with VolumeBindingMode set to WaitForFirstConsumer. The following creates a storage class by copying an existing one and prefixing its name with the letter a, so that it is selected first when setting up the cluster and is not overwritten by the IBM Cloud default. For this example, the Storage Class ibmc-vpc-block-metro-10iops-tier is used. Update as needed for your organization's requirements.

The following example is specific to IBM Cloud.

# create our new storageclass

kubectl get storageclass ibmc-vpc-block-metro-10iops-tier -o yaml | \

sed -e 's/name: ibmc-vpc-block-metro-10iops-tier/name: aibmc-vpc-block-metro-10iops-tier/' \

-e '/resourceVersion:/d' \

-e '/uid:/d' \

-e '/creationTimestamp:/d' | \

kubectl apply -f -

# change the default to one with WaitForFirstConsumer

kubectl get storageclass -o name | xargs -I {} kubectl patch {} -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}' && kubectl patch storageclass aibmc-vpc-block-metro-10iops-tier -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
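To see what the sed pipeline above does without touching a cluster, the same filter can be run against a sample manifest. In this sketch, copy_storage_class_manifest is a hypothetical helper and the manifest is a shortened, made-up example; real kubectl output contains more fields.

```shell
# copy_storage_class_manifest SRC DST: read a StorageClass manifest on stdin and
# print a copy renamed to DST with server-generated metadata removed.
copy_storage_class_manifest() {
  sed -e "s/name: $1\$/name: $2/" \
      -e '/resourceVersion:/d' \
      -e '/uid:/d' \
      -e '/creationTimestamp:/d'
}

# Shortened, illustrative manifest only.
sample='apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  creationTimestamp: "2025-01-01T00:00:00Z"
  name: ibmc-vpc-block-metro-10iops-tier
  resourceVersion: "12345"
  uid: 00000000-0000-0000-0000-000000000000
volumeBindingMode: WaitForFirstConsumer'

copy="$(printf '%s\n' "$sample" | copy_storage_class_manifest \
  ibmc-vpc-block-metro-10iops-tier aibmc-vpc-block-metro-10iops-tier)"
printf '%s\n' "$copy"

# Confirm the binding mode of the copy before applying it with kubectl.
if printf '%s\n' "$copy" | grep -q '^volumeBindingMode: WaitForFirstConsumer$'; then
  echo "Binding mode is WaitForFirstConsumer."
fi
```

The copy keeps whatever volumeBindingMode the source storage class has, so the source should already be set to WaitForFirstConsumer.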

Optional Step: Install NVIDIA Drivers

If using NVIDIA GPUs with the cluster, see the OpenShift NVIDIA GPU Operator guide for instructions on installing the necessary drivers.

Follow the instructions up to the step Create the cluster policy using the web console, then perform the following:

After the step:

select Operators > Installed Operators, and click NVIDIA GPU Operator

Add the following steps:

- Select ClusterPolicy.

- Select gpu-cluster-policy.

- Select YAML.

- Scroll to the key daemonsets and add the following section:

tolerations:
  - effect: NoSchedule
    key: nvidia.com/gpu
    operator: Exists
  - effect: NoSchedule
    key: wallaroo.ai/pipelines
    operator: Exists

For example:

daemonsets:
  rollingUpdate:
    maxUnavailable: '1'
  tolerations:
    - effect: NoSchedule
      key: nvidia.com/gpu
      operator: Exists
    - effect: NoSchedule
      key: wallaroo.ai/pipelines
      operator: Exists
  updateStrategy: RollingUpdate

- Select Save, then continue with the instructions provided in the OpenShift NVIDIA GPU Operator guide.

Install Wallaroo

Select from the following methods to install Wallaroo:

- helm

- kots

Install Wallaroo via Helm

Air-Gapped Installation Preparation for Helm

Before starting an air-gapped installation of Wallaroo, complete the following preparation steps.

The general process follows these steps:

- Pre Setup Checklist for Helm: The necessary installation files are available and values set for installation.

- Wallaroo Image Retrieval and Installation Preparation for Helm: Retrieve the installation images from Wallaroo and store them in a private container registry available from the Target Cluster.

Pre Setup Checklist for Helm

The following checklist ensures that required items are ready before starting the process.

- The installation environment meets the general Wallaroo Installation Prerequisites.

- The Domain Name for the Wallaroo instance is registered in a private DNS accessible from the air-gapped installation.

- TLS certificate and private key matching the Domain Name available for the Target Cluster. This can be registered to a private certificate service.

- Access to private image registry that hosts the Wallaroo install images with the following permissions:

- Read

- Write

- List

- The following Bash scripts:

  - load-images.bash: Loads the Wallaroo install images into the private image registry and generates the image-values.yaml file. Available here: load-images.bash

  - install-nvidia-driver.bash (Optional): Installs NVIDIA drivers for the Target Cluster. Available here: install-nvidia-driver.bash

- Wallaroo Image Download Details: These are provided by a Wallaroo Support Representative and are stored as the following environment variables for the installation scripts:

  - LICENSE_CHANNEL: The registry channel used based on the version of Wallaroo being installed, currently 2025-2.

  - VERSION: The Wallaroo version to be installed. For example: 2025.2.2-6555.

  - WALLAROO_LICENSE: The Wallaroo license identifier.

  - WALLAROO_LICENSE_USERNAME: The username associated with the Wallaroo license.

  - WALLAROO_LICENSE_PASSWORD: The password associated with the Wallaroo license.

- The following environment variables for connecting to the private image registry:

  - REGISTRY_HOST: The fully qualified domain name of the private image registry. For example: registry.wallaroo.ai.

  - REGISTRY_NAMESPACE: Namespace where the Wallaroo images are stored.

  - REGISTRY_USERNAME: Username for authentication to the registry.

  - REGISTRY_PASSWORD: Authentication credential for the private registry. Often this is either a password or a token.

- Administration Host Software Requirements: The administrative host that submits the installation commands to the target cluster requires the following software.

  - docker

  - curl

  - jq version 1.7.1

  - helm

  - kubectl version 1.31

Note that the convenience variables are used for helm based installations of Wallaroo.
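As a quick way to confirm the administration host has the tools from the checklist above, the following sketch reports any that are not on the PATH. missing_tools is a hypothetical helper, and it only checks for presence, not for the versions listed.

```shell
# missing_tools TOOL...: print the name of each tool that is not found on PATH.
# Hypothetical helper; does not verify versions.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

missing="$(missing_tools docker curl jq helm kubectl)"
if [ -n "$missing" ]; then
  echo "Missing tools:"
  echo "$missing"
else
  echo "All required tools found."
fi
```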

Wallaroo Image Retrieval and Installation Preparation for Helm

For air-gapped installations, the Wallaroo installation images are downloaded and stored in a private registry through the following process.

The image load script below expects root-level access to the private registry and adds the wallaroo/ prefix to all Wallaroo images. Access to the registry must include the following permissions:

- Read

- List

- Write

From a terminal with access to the Kubernetes cluster hosting the Wallaroo instance and read/write access to the private image registry, use the following procedure.

- Set the following convenience variables.

REGISTRY_HOST=YOUR PRIVATE IMAGE REGISTRY URL
REGISTRY_NAMESPACE=YOUR WALLAROO CONTAINER NAMESPACE
LICENSE_CHANNEL=YOUR LICENSE CHANNEL
VERSION=YOUR VERSION
WALLAROO_LICENSE=YOUR WALLAROO LICENSE ID
WALLAROO_LICENSE_USERNAME=YOUR WALLAROO INSTALL USER NAME
WALLAROO_LICENSE_PASSWORD=YOUR WALLAROO INSTALL USER PASSWORD
REGISTRY_USERNAME=YOUR REGISTRY AUTHENTICATION USER NAME
REGISTRY_PASSWORD=YOUR REGISTRY AUTHENTICATION CREDENTIAL

For example:

REGISTRY_HOST=registry.wallaroo.ai
REGISTRY_NAMESPACE=wallaroo
LICENSE_CHANNEL=2025-2
VERSION=2025.2.2-6555
WALLAROO_LICENSE=99999xxxyyyzzz0000
WALLAROO_LICENSE_USERNAME=abcdefg
WALLAROO_LICENSE_PASSWORD=12345679
REGISTRY_USERNAME=ABC123
REGISTRY_PASSWORD=ZYX987

- Log in to the private image registry via docker using the following command:

echo $REGISTRY_PASSWORD | docker login -u $REGISTRY_USERNAME --password-stdin $REGISTRY_HOST

Upon successful login, the following is returned.

Login Succeeded

- Load images to the private registry using the load-images.bash script. When complete, this outputs the file image-values.yaml with the relevant installation data.

bash load-images.bash \
  --wallaroo-version $VERSION \
  --wallaroo-license $WALLAROO_LICENSE \
  --wallaroo-username $WALLAROO_LICENSE_USERNAME \
  --registry-namespace $REGISTRY_NAMESPACE \
  --registry-host $REGISTRY_HOST

Save the file image-values.yaml and use it for the step Install Wallaroo.

Once complete, the Wallaroo images are stored and ready for installation.

Installation Client Helm Requirements

The following software is required for the client with administrative access to the Kubernetes cluster that will host Wallaroo via Helm.

- For Helm installs:

  - helm: Install Helm. Minimum supported version: Helm 3.11.2

  - krew: Install Krew

  - krew preflight and krew support-bundle. Install with the following commands:

kubectl krew install support-bundle
kubectl krew install preflight

The following details how to install Wallaroo via helm. Note that these procedures require the Air-Gapped Installation Preparation be completed.

Registration Login

The first step in the Wallaroo installation process via Helm is to connect to the Kubernetes environment that will host the Wallaroo Enterprise instance and log in to the Wallaroo container registry through the command provided by the Wallaroo support staff. The command takes the following format, replacing $WALLAROO_LICENSE_USERNAME and $WALLAROO_LICENSE_PASSWORD with the respective username and password provided.

helm registry login registry.replicated.com --username $WALLAROO_LICENSE_USERNAME --password $WALLAROO_LICENSE_PASSWORD

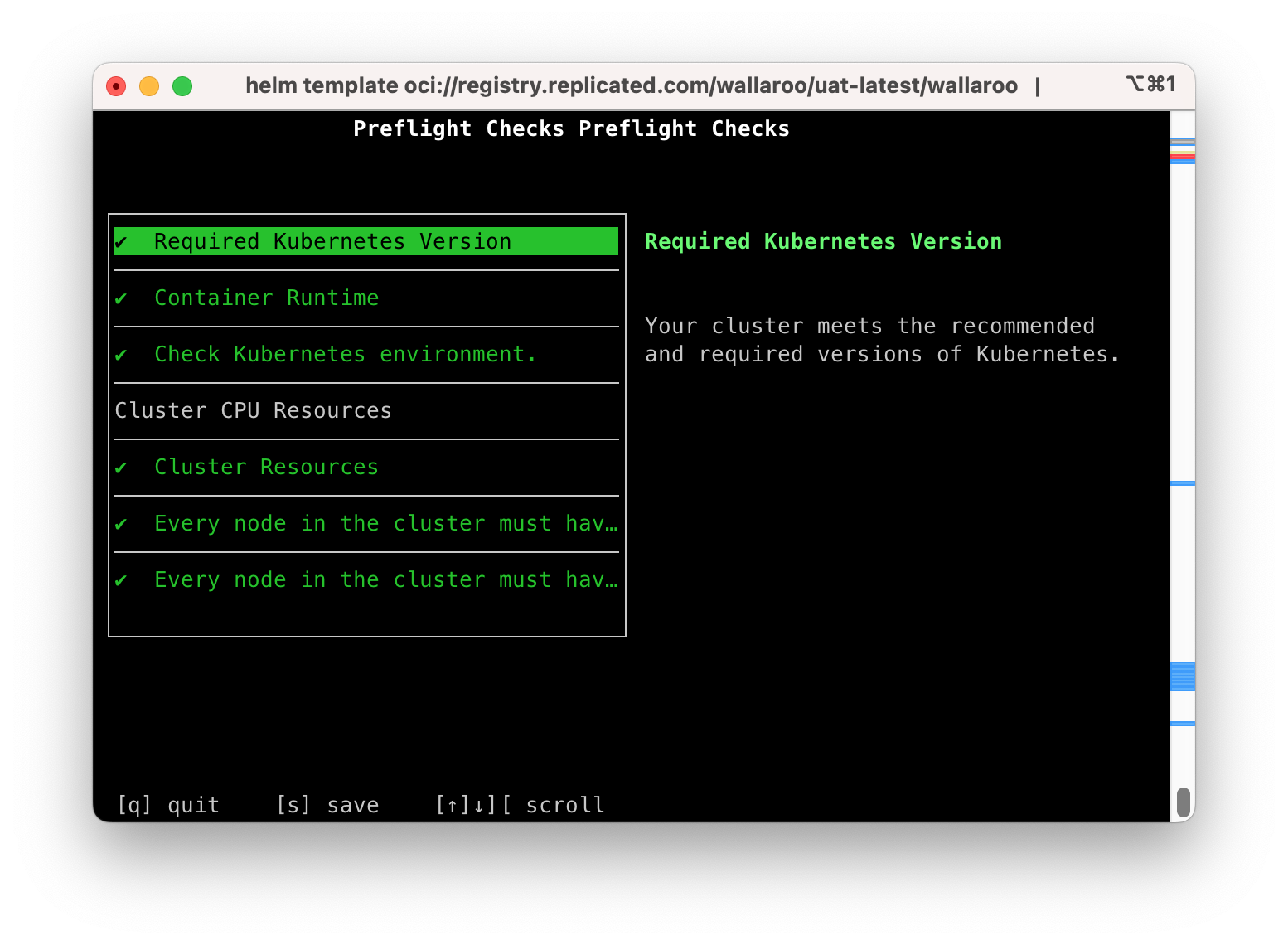

Preflight Verification

IMPORTANT NOTE

The preflight test is not programmatically enforced during installation via Helm and should be performed manually before installation. If the Kubernetes environment does not meet the requirements, the Wallaroo installation may fail or perform erratically. Please verify that all preflight tests run successfully before proceeding to install Wallaroo.

Preflight verification is performed with the following command format. The variables LICENSE_CHANNEL and VERSION are supplied by your Wallaroo support representative.

helm template oci://registry.replicated.com/wallaroo/$LICENSE_CHANNEL/wallaroo --version $VERSION | kubectl preflight -

For example, with LICENSE_CHANNEL=2025-2 and VERSION=2025.2.2-6555:

helm template oci://registry.replicated.com/wallaroo/2025-2/wallaroo --version 2025.2.2-6555 | kubectl preflight -

This displays the Preflight Checks report.

The following commands are available:

- s: Save the report to a text file named preflight-checks-results-DATETIME.txt. For example: preflight-checks-results-2024-03-19T13_30_41.txt.

- q: Exit the preflight report.

- Up Arrow or Down Arrow: Scroll through the preflight elements and view the report details.

The following example shows a successful preflight test.

Preflight Checks Preflight Checks

Check PASS

Title: Required Kubernetes Version

Message: Your cluster meets the recommended and required versions of Kubernetes.

------------

Check PASS

Title: Container Runtime

Message: Containerd container runtime was found.

------------

Check PASS

Title: Check Kubernetes environment.

Message: GKE is a supported distribution

------------

Title: Cluster CPU Resources

Message:

------------

Check PASS

Title: Cluster Resources

Message: Cluster resources are satisfactory

------------

Check PASS

Title: Every node in the cluster must have at least 12Gi of memory

Message: All nodes have at least 12 GB of memory capacity

------------

Check PASS

Title: Every node in the cluster must have at least 8 cpus allocatable.

Message: All nodes have at least 8 CPU capacity

------------

Prepare Helm Installation

The following instructions detail how to install Wallaroo Enterprise via Helm for Kubernetes cloud environments such as Microsoft Azure, Amazon Web Services, and Google Cloud Platform.

Helm Network Configuration

apilb.serviceType settings have the following effects.

| Setting | Cloud Kubernetes |
|---|---|
| Internal Only Connections | ClusterIP |
| External Connections | LoadBalancer |

Refer to the instructions for the environment host for details on IP address allocation and support.

With the preflight checks and prerequisites met, Wallaroo can be installed via Helm through the following process:

- Create the namespace. By default, the namespace wallaroo is used:

kubectl create namespace wallaroo

- Set the new namespace as the current namespace:

kubectl config set-context --current --namespace wallaroo

- Set the TLS certificate secret in the Kubernetes environment:

  - Create the certificate and private key. It is recommended to name it after the domain name of your Wallaroo instance. For example: wallaroo.example.com. For production environments, organizations are recommended to use certificates from their certificate authority. Note that the Wallaroo SDK will not connect from an external connection without valid certificates. For more information on using DNS settings and certificates, see the Wallaroo DNS Integration Guide.

  - Convert the files to base64 and store the results. For example:

    The cert file:

    base64 -i cert_file.pem

    The key file:

    base64 -i key_file.pem

  - Set the base64 encoded files into the helm values file with the following settings:

    - deploymentStage: cust: This value must be cust.

    - custTlsCert: The base64 encoded certificate chain.

    - custTlsKey: The base64 encoded key.

    For example:

#> To provide TLS certificates, (1) set deploymentStage to "cust", then (2) provide base64 encoded secrets
#> in custTlsCert and custTlsKey.
deploymentStage: cust # Deployment stage, must be set to "cust" when deployed
custTlsCert: "abcdefg" # Customer provided certificate chain when deploymentStage is "cust".
custTlsKey: "zyxwvu" # Customer provided private key when deploymentStage is "cust".

- Create the image pull secret for the private image registry. IMPORTANT NOTE: This assumes that the docker login command was run earlier in the step Wallaroo Image Retrieval and Installation Preparation for Helm.

kubectl create secret docker-registry regcred --from-file=$HOME/.docker/config.json

- (Optional) If using NVIDIA drivers as per the step Optional Step: Install NVIDIA Drivers, create a secret in the kube-system namespace with the following command:

kubectl create secret docker-registry regcred --from-file=$HOME/.docker/config.json --namespace kube-system
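For the TLS step above, note that some base64 implementations wrap their output across several lines (GNU coreutils wraps at 76 characters by default), while custTlsCert and custTlsKey are shown as single quoted strings. The following sketch produces single-line output on either kind of system. encode_single_line is a hypothetical helper, and the temporary file stands in for a real certificate or key file.

```shell
# encode_single_line FILE: base64-encode FILE and strip any line wrapping so the
# result can be pasted into a single YAML string. Hypothetical helper.
encode_single_line() {
  base64 < "$1" | tr -d '\n'
}

# Stand-in file for illustration; use cert_file.pem or key_file.pem in practice.
tmp="$(mktemp)"
printf 'example certificate data\n' > "$tmp"
encode_single_line "$tmp"
echo
rm -f "$tmp"
```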

Default Helm Installation Settings

A default Helm install of Wallaroo contains various default settings. The local values file overwrites values based on the organization's needs. The following represents the minimum mandatory values for a Wallaroo installation using certificates and the default LoadBalancer for a cloud Kubernetes cluster. The configuration details below are saved as values.yaml for these examples.

Note the following required settings:

- kubernetes_distribution: Must be set to openShift for OpenShift based installations.

- wallarooDomain: Used to set the DNS domain name for the Wallaroo instance. For more information, see the Wallaroo DNS Integration Guide.

- deploymentStage: cust: This value must be cust.

- custTlsCert: The base64 encoded certificate chain.

- custTlsKey: The base64 encoded key.

- ingress_mode: How the Wallaroo instance is reached through the Kubernetes network settings.

  If the environment has a load balancer controller, select external. If the environment does not have a load balancer controller, configure the load balancer as required to point at a K8s or OpenShift service, then select none as the networking setting in configuration. For any questions or other conditions not listed, contact the Wallaroo support representative.

  Options include:

  - internal (Default): An internal cloud load balancer and associated resources are created. Network users outside the Kubernetes cluster, but on the same internal network, can connect directly using DNS names, and do not need to use port forward or related configurations.

  - external: An external, Internet-facing cloud load balancer, public IP, and associated resources are created. This is highly discouraged. Public DNS is also required. This is the default for Wallaroo Community Edition.

  - none: Services are local to the Kubernetes cluster. kubectl port-forward or some other means is required to access them. If all work will be done in-cluster, select this option.

- dashboard: The name displayed when users log in to the Wallaroo Ops center Dashboard. For example, “My Company” or “Sales Division”, etc.

- imageRegistry: This is required for air-gapped installations and must match the REGISTRY_HOST and REGISTRY_NAMESPACE of the private container registry where the Wallaroo install images are installed as per the step Wallaroo Image Retrieval and Installation Preparation. For example, if REGISTRY_HOST=registry.wallaroo.ai and REGISTRY_NAMESPACE=wallaroo, then the imageRegistry value is registry.wallaroo.ai/wallaroo.

- privatePypi (Optional): Enables private Python repositories for custom libraries and sources. For more details, see Python Private Repositories.
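The imageRegistry value described above is the registry host and namespace joined by a single slash. As a small sketch, the helper below builds it from the convenience variables and tolerates stray slashes. image_registry_value is a hypothetical name used only for this illustration.

```shell
# image_registry_value HOST NAMESPACE: print HOST/NAMESPACE, trimming a trailing
# slash on the host and leading or trailing slashes on the namespace.
image_registry_value() {
  local host="${1%/}" namespace="${2#/}"
  printf '%s/%s\n' "$host" "${namespace%/}"
}

REGISTRY_HOST="registry.wallaroo.ai"
REGISTRY_NAMESPACE="wallaroo"
echo "imageRegistry: $(image_registry_value "$REGISTRY_HOST" "$REGISTRY_NAMESPACE")"
```

If the registry listens on a non-default port, the port belongs in REGISTRY_HOST, for example registry.wallaroo.ai:1234.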

The following example shows the minimum required options with uncommented keys for the minimum required settings and additional commented optional settings. For full details on helm values for Wallaroo installations, see the Wallaroo Helm Reference Guides.

wallarooDomain: "wallaroo.example.com" # change to match the actual domain name

custTlsSecretName: cust-cert-secret

ingress_mode: internal # internal (Default), external, or none

dashboard:
  clientName: "Wallaroo Helm Example" # Insert the name displayed in the Wallaroo Dashboard

kubernetes_distribution: openShift

# Must be a full registry address calculated by:

# $REGISTRY_HOST/$REGISTRY_NAMESPACE

# For example:

imageRegistry: registry.wallaroo.ai:1234/wallaroo

# Enable Wallaroo assays. Select **one** of the following: `v1` or `v2`, or leave commented out to disable assays.
# v2 is enabled by default.
#assays:
#  enabled: true
#  v1: false
#  v2: true

# Enable edge deployment
#ociRegistry:
#  enabled: true # true enables the Edge Server registry information, false disables it.
#  registry: "" # The registry url. For example: reg.big.corp:3579.
#  repository: "" # The repository within the registry. This may include the cloud account, or the full path where the Wallaroo published pipelines should be kept. For example: account123/wallaroo/pipelines.
#  email: "" # Optional field to track the email address of the registry credential.
#  username: "" # The username to the registry. This may vary based on the provider. For example, GCP Artifact Registry with service accounts uses the username _json_key_base64 with the password as a base64 processed token of the credential information.
#  password: "" # The password or token for the registry service.

# Enable edge deployment observability
# edgelb:
#   enabled: true

# Private PyPI repository configuration for installing Python packages from a private index.

# When enabled, this configures access to a private PyPI repository for Python package installation in BYOP and Workload Orchestrations.

# privatePypi:

# enabled: false # If true, configure access to a private PyPI repository

# secretName: private-pypi-secret

# url: "" # Private PyPI repository URL, eg "https://pypi.example.com/simple/"

# username: "" # Username for private PyPI authentication

# password: "" # Password for private PyPI authentication

# privateOnly: false # If true, only use private PyPI repository (no fallback to public PyPI)

# The nodeSelector and tolerations for all components
# This does not apply to nats, fluent-bit, or minio so needs to be applied separately
# nodeSelector:
#   wallaroo.ai/reserved: true
# tolerations:
#   - key: "wallaroo.ai/reserved"
#     operator: "Exists"
#     effect: "NoSchedule"

# To change the pipeline taint or nodeSelector,
# best practice is to change engine, enginelb, and engineAux
# together unless they will be in different pools.
# engine:
#   nodeSelector:
#     wallaroo.ai/node-purpose: pipelines
#   tolerations:
#     - key: "wallaroo.ai/pipelines"
#       operator: "Exists"
#       effect: "NoSchedule"

# enginelb:
#   nodeSelector:
#     wallaroo.ai/node-purpose: pipelines
#   tolerations:
#     - key: "wallaroo.ai/pipelines"
#       operator: "Exists"
#       effect: "NoSchedule"

# engineAux:
#   nodeSelector:
#     wallaroo.ai/node-purpose: pipelines
#   tolerations:
#     - key: "wallaroo.ai/pipelines"
#       operator: "Exists"
#       effect: "NoSchedule"

# For each service below, adjust the disk size and resources as required.
# If the nodeSelector or tolerations are changed for one service,
# the other services nodeSelector and tolerations **must** be changed to match
#
#
# plateau:
#   diskSize: 100Gi
#   resources:
#     limits:
#       memory: 4Gi
#       cpu: 1000m
#     requests:
#       memory: 128Mi
#       cpu: 100m
#   nodeSelector:
#     wallaroo.ai/node-purpose: persistent
#   tolerations:
#     - key: "wallaroo.ai/persistent"
#       operator: "Exists"
#       effect: "NoSchedule"

# Jupyter has both hub and lab nodeSelectors and tolerations
# They default to the same persistent pool, but can be assigned to different ones
# jupyter:
#   nodeSelector: # Node placement for Hub administrative pods
#     wallaroo.ai/node-purpose: persistent
#   tolerations:
#     - key: "wallaroo.ai/persistent"
#       operator: "Exists"
#       effect: "NoSchedule"
#   labNodeSelector: # Node placement for Hub-spawned jupyterlab pods
#     wallaroo.ai/node-purpose: persistent
#   labTolerations:
#     - key: "wallaroo.ai/persistent"
#       operator: "Exists"
#       effect: "NoSchedule"
#   memory:
#     limit: "4" # Each Lab - memory limit in GB
#     guarantee: "2" # Each Lab - memory guarantee in GB
#   cpu:
#     limit: "2.0" # Each Lab - fractional CPU limit
#     guarantee: "1.0" # Each Lab - fractional CPU guarantee
#   storage:
#     capacity: "50" # Each Lab - disk storage capacity in GB

# minio:
#   persistence:
#     size: 100Gi
#   nodeSelector:
#     wallaroo.ai/node-purpose: persistent
#   tolerations:
#     - key: wallaroo.ai/persistent
#       operator: "Exists"
#       effect: "NoSchedule"
#   resources:
#     requests:
#       memory: 1Gi

# postgres:
#   diskSize: 100Gi
#   nodeSelector:
#     wallaroo.ai/node-purpose: persistent
#   tolerations:
#     - key: "wallaroo.ai/persistent"
#       operator: "Exists"
#       effect: "NoSchedule"
#   resources:
#     limits:
#       memory: 2Gi
#       cpu: 500m
#     requests:
#       memory: 512Mi
#       cpu: 100m

#> Prometheus Metrics. Data will be retained until either retention size or retention time is
#> exceeded, whichever comes first. It's a little difficult to predict which, because pipelines are
#> transient and the rate they generate metrics is variable. Scrape interval is fixed at 5s.
# prometheus:
#   server:
#     global:
#       scrape_interval: 5s # How frequently to scrape targets by default
#       evaluation_interval: 5s # How frequently to evaluate rules
#       scrape_timeout: 4s # How long until a scrape request times out
#     resources:
#       limits:
#         memory: 6Gi
#         cpu: 2000m
#       requests:
#         memory: 512Mi
#         cpu: 100m
#     retention: 90d
#     retentionSize: 80GB # This should be 80% of the persistentVolume.size.
#     nodeSelector:
#       wallaroo.ai/node-purpose: persistent
#     tolerations:
#       - key: "wallaroo.ai/persistent"
#         operator: "Exists"
#         effect: "NoSchedule"
#     persistentVolume:
#       size: 100Gi # When updating this value make sure to also update the 'retentionSize' value above to be 80% of this value.

# nats:
#   podTemplate:
#     merge:
#       spec:
#         nodeSelector:
#           wallaroo.ai/node-purpose: persistent
#         tolerations:
#           - key: "wallaroo.ai/persistent"
#             operator: "Exists"
#             effect: NoSchedule

# wallsvc:
#   nodeSelector:
#     wallaroo.ai/node-purpose: persistent
#   tolerations:
#     - key: "wallaroo.ai/persistent"
#       operator: "Exists"
#       effect: "NoSchedule"
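The Prometheus comments in the sample values state that retentionSize should be 80% of persistentVolume.size. The following is a small sketch of that arithmetic, assuming the volume size is a whole number of Gi and that the result is written with the GB suffix used in the sample; retention_size is a hypothetical helper name, not part of Wallaroo or Helm.

```shell
#!/bin/sh
# retention_size SIZE: print 80% of a whole-number volume size,
# formatted with the GB suffix used in the sample values file.
# Hypothetical helper for illustration only. Uses integer arithmetic,
# so any fractional result is truncated.
retention_size() {
  echo "$(( $1 * 80 / 100 ))GB"
}

retention_size 100   # prints 80GB, matching the sample values
retention_size 250   # prints 200GB
```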

persistentVolume Settings

Wallaroo services that have a persistentVolume have the following default nodeSelector label and tolerations:

- nodeSelector

  - Label: wallaroo.ai/node-purpose

  - Value: persistent

- tolerations

  - Key: wallaroo.ai/persistent

  - Operator: “Exists”

  - Effect: “NoSchedule”

For example:

nodeSelector:
  wallaroo.ai/node-purpose: persistent
tolerations:
  - key: wallaroo.ai/persistent
    operator: "Exists"
    effect: "NoSchedule"

If the nodeSelector or tolerations are changed for any service with a persistentVolume, all other services must be edited to match.

For additional information on taints and tolerations settings, see the Taints and Labels Guide.

Install Wallaroo with Helm

Install Wallaroo: The Wallaroo support representative provides the Helm installation command that uses the Wallaroo container registry. This assumes that the preflight checks were successful and that the helper variables from the previous procedure Air-Gapped Installation Preparation are set.

- $RELEASE: The name of the Helm release. By default, wallaroo.

- $REGISTRY_URI: The URL for the Wallaroo container registry service.

- $VERSION: The version of Wallaroo to install. For this example, 2025.2.2-6555.

- $LOCALVALUES: The .yaml file containing the local values overrides. For this example, values.yaml.

- image-values.yaml: The file generated in the step Wallaroo Image Retrieval and Installation Preparation.

helm install wallaroo \
  oci://registry.replicated.com/wallaroo/2025-2/wallaroo \
  --version 2025.2.2-6555 \
  --values values.yaml \
  --values image-values.yaml \
  --timeout 10m \
  --wait \
  --wait-for-jobs
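The install command can also be assembled from the helper variables so the same script works across releases and versions. The following is a sketch under stated assumptions: CHART and helm_install_cmd are hypothetical names introduced here, and the defaults are the example values from this guide. The command provided by the Wallaroo support representative takes precedence over this sketch.

```shell
#!/bin/sh
# Defaults mirror the example values in this guide; override them in the environment.
RELEASE="${RELEASE:-wallaroo}"
VERSION="${VERSION:-2025.2.2-6555}"
LOCALVALUES="${LOCALVALUES:-values.yaml}"
# CHART is a hypothetical variable name holding the chart address shown in this guide.
CHART="${CHART:-oci://registry.replicated.com/wallaroo/2025-2/wallaroo}"

# helm_install_cmd: print the assembled command so it can be reviewed before it is run.
helm_install_cmd() {
  echo "helm install ${RELEASE} ${CHART} --version ${VERSION} --values ${LOCALVALUES} --values image-values.yaml --timeout 10m --wait --wait-for-jobs"
}

helm_install_cmd
# After review, the command can be executed with: eval "$(helm_install_cmd)"
```

Printing the command first is a deliberate choice: it gives a chance to confirm the release name, version, and values files before anything is applied to the cluster.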

Verify Installation

If any required elements are missing from the values.yaml file, an error is displayed. For example, leaving out the kubernetes_distribution field returns the following:

Warning - kubernetes_distribution must be set in user provided values.yaml

Upon successful installation, notes are published indicating the installed version, where to find documentation, etc.:

NOTES:

.

Welcome to Wallaroo 2025.1.0

1. Deployment Information:

Name: 2025.1.0

Release notes: https://docs.wallaroo.ai/wallaroo-release-notes/wallaroo-release-202501

Version: v2025.1.0-5187

2. Accessing Wallaroo

Documentation: https://docs.wallaroo.ai

Dashboard: https://sample.wallaroocommunity.ninja

3. Useful Commands:

- Helm tests are available by using: `helm test wallaroo`.

- External load balancer hostname can be found by using:

kubectl get svc api-lb-ext -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'

- List Wallaroo namespaces, including pipeline deployments, but not including the main `wallaroo` namespace:

kubectl get namespaces -l wallaroo-managed=true

- In order to change any helm values:

helm upgrade --install wallaroo oci://registry.replicated.com/wallaroo/uat-latest/wallaroo --version v2025.1.0-5187 --values $LOCALVALUES_YAML --timeout 10m --wait --wait-for-jobs

4. Uninstall:

1. To uninstall/delete the Wallaroo deployment, run:

kubectl delete ns wallaroo && kubectl delete \

all,secret,configmap,clusterroles,clusterrolebindings,storageclass,crd \

--selector app.kubernetes.io/part-of=wallaroo --selector kots.io/app-slug=wallaroo

2. To delete all pipelines, run:

kubectl delete ns -l wallaroo-managed=true

.

Once the installation is complete, verify the installation with the helm test $RELEASE command. A condensed display uses egrep to show only the test suite and phase status as follows. Replace wallaroo with the name of the helm release used.

helm test wallaroo | egrep 'SUITE:|Phase:'

A successful result shows the following:

TEST SUITE: wallaroo-fluent-bit-test-connection
Phase: Succeeded
TEST SUITE: nats-test-request-reply
Phase: Succeeded
TEST SUITE: wallaroo-wallaroo-test-connections-hook
Phase: Succeeded
TEST SUITE: wallaroo-test-objects-hook
Phase: Succeeded

The following command shows the full helm test output with notes.

helm test wallaroo

This displays the following:

NAME: wallaroo
LAST DEPLOYED: Fri May 17 14:00:04 2024
NAMESPACE: wallaroo
STATUS: deployed
REVISION: 2
TEST SUITE: wallaroo-fluent-bit-test-connection
Last Started: Fri May 17 14:04:48 2024
Last Completed: Fri May 17 14:04:51 2024
Phase: Succeeded
TEST SUITE: nats-test-request-reply
Last Started: Fri May 17 14:04:43 2024
Last Completed: Fri May 17 14:04:48 2024
Phase: Succeeded
TEST SUITE: sample-wallaroo-test-connections-hook
Last Started: Fri May 17 14:04:24 2024
Last Completed: Fri May 17 14:04:31 2024
Phase: Succeeded
TEST SUITE: sample-wallaroo-test-objects-hook
Last Started: Fri May 17 14:04:31 2024
Last Completed: Fri May 17 14:04:43 2024
Phase: Succeeded
NOTES:
.
Welcome to Wallaroo 2025.1.0
1. Deployment Information:
Name: 2025.1.0
Release notes: https://docs.wallaroo.ai/wallaroo-release-notes/wallaroo-release-202501
Version: v2025.1.0-5187
2. Accessing Wallaroo
Documentation: https://docs.wallaroo.ai
Dashboard: https://sample.wallaroocommunity.ninja
3. Useful Commands:
- Helm tests are available by using: `helm test wallaroo`.
- External load balancer hostname can be found by using:
kubectl get svc api-lb-ext -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
- List Wallaroo namespaces, including pipeline deployments, but not including the main `wallaroo` namespace:
kubectl get namespaces -l wallaroo-managed=true
- In order to change any helm values:
helm upgrade --install wallaroo oci://registry.replicated.com/wallaroo/2025-2/wallaroo --version 2025.2.2-6555 --values $LOCALVALUES_YAML --timeout 10m --wait --wait-for-jobs
4. Uninstall:
1. To uninstall/delete the Wallaroo deployment, run:
kubectl delete ns wallaroo && kubectl delete \
all,secret,configmap,clusterroles,clusterrolebindings,storageclass,crd \
--selector app.kubernetes.io/part-of=wallaroo --selector kots.io/app-slug=wallaroo
2. To delete all pipelines, run:
kubectl delete ns -l wallaroo-managed=true
.
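For scripted installs, the phase lines in the helm test output can be checked automatically rather than read by eye. The following is a minimal sketch, assuming the output format shown above where each test suite reports a line containing Phase: followed by its status; count_failed_phases is a hypothetical helper name.

```shell
#!/bin/sh
# count_failed_phases: read `helm test` output on stdin and print how many
# "Phase:" lines report a status other than "Succeeded".
# Hypothetical helper for illustration only.
count_failed_phases() {
  awk '/Phase:/ && $NF != "Succeeded" { n++ } END { print n + 0 }'
}

# Usage against a live release (requires helm and cluster access):
#   helm test wallaroo | count_failed_phases

# Local demonstration using two lines in the format shown in this guide.
printf 'TEST SUITE: nats-test-request-reply\nPhase: Succeeded\n' | count_failed_phases
```

A result of 0 indicates every reported phase succeeded; any other value means at least one test suite needs attention.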

At this point, the installation is complete and can be accessed through the fully qualified domain names set in the installation process above.

To add the initial users if they were not set up through Helm values, see the Wallaroo Enterprise User Management guide.

Install Wallaroo via Kots

Before starting an air-gapped installation of Wallaroo using Kots, complete the following preparation steps.

The general process follows these steps:

- Pre Setup Checklist for Kots: The necessary installation files are available and values set for installation.

- Wallaroo Image Retrieval and Installation Preparation for Kots: Retrieve the installation images from Wallaroo and store them in a private container registry available from the Target Cluster.

Pre Setup Checklist for Kots

The following checklist ensures that required items are ready before starting the process.

- The installation environment meets the general Wallaroo Installation Prerequisites.

- The Domain Name for the Wallaroo instance is registered in a private DNS accessible from the air-gapped installation.

- TLS certificate and private key matching the Domain Name available for the Target Cluster. This can be registered to a private certificate service.

- Access to private image registry that hosts the Wallaroo install images with the following permissions:

- Read

- Write

- List

- The following Bash scripts:

  - install-nvidia-driver.bash (Optional): Installs Nvidia drivers for the Target Cluster. Available here: install-nvidia-driver.bash

- Wallaroo Image Download Details: These are provided by a Wallaroo Support Representative and are stored as the following environment variables:

  - LICENSE_CHANNEL: The registry channel used based on the version of Wallaroo being installed, currently 2025-2.

  - VERSION: The Wallaroo version to be installed. For example: 2025.2.2-6555.

  - KOTS_CLIENT_URL: The URL for downloading the Kots client file kots_linux_amd64.tar.gz with the kots version used for the Wallaroo air-gapped installation.

  - KOTS_ADMIN_URL: The URL for downloading the Kots admin bundle kotsadm.tar.gz with the kots version used for the Wallaroo air-gapped installation.

  - WALLAROO_BUNDLE_URL: The customer specific URL for downloading the Wallaroo installation package. IMPORTANT NOTE: This URL has an expiration time of around 60 minutes, and the final downloaded file is 30 GB in size.

- The following artifacts provided by the Wallaroo Support representative:

  - License File: This is a yaml file that contains the Wallaroo license information for your organization. For this procedure, it is referred to as license.yaml.

- The following environment variables for connecting to the private image registry:

  - REGISTRY_ADDRESS: The fully qualified domain name of the private image registry with the registry namespace used to install Wallaroo. For example: registry.wallaroo.ai/wallaroo. The wallaroo in the URL is the namespace.

  - REGISTRY_USERNAME: Username for authentication to the registry.

  - REGISTRY_PASSWORD: Authentication credential for the private registry. Often this is either a password or a token.

- Administration Host Software Requirements: The administrative host that submits the installation commands to the target cluster requires the following software.

  - docker

  - curl

  - jq version 1.7.1

  - kubectl version 1.31
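The software requirements in the checklist above can be confirmed on the administrative host before starting. The following is a minimal sketch that only checks whether each command is found on the PATH; it does not verify the jq or kubectl versions. missing_tools is a hypothetical helper name.

```shell
#!/bin/sh
# missing_tools TOOL...: print the name of each command that is not found on PATH.
# Hypothetical helper for illustration only.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Tools listed in the checklist. No output means all four were found.
missing_tools docker curl jq kubectl
```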

Wallaroo Image Retrieval and Installation Preparation for Kots

For air-gapped installations, the Wallaroo installation images are downloaded and stored in a private registry through the following process.

The image load script below expects root level access and adds the wallaroo/ prefix to all the Wallaroo images. Access to the registry must include the following permissions:

- Read

- List

- Write

From a terminal with access to the Kubernetes cluster hosting the Wallaroo instance and read/write access to the private image registry, use the following procedure.

This installation assumes the user will ssh into the target installation system with port forwarding enabled. For example, ssh -L 8800:localhost:8800 hostname. This allows the Kots Administrative Dashboard to be accessed later in the process through the URL http://localhost:8800.

Set the following convenience variables.

REGISTRY_ADDRESS=YOUR PRIVATE IMAGE REGISTRY URL AND NAMESPACE
LICENSE_CHANNEL=YOUR LICENSE CHANNEL
REGISTRY_USERNAME=YOUR REGISTRY AUTHENTICATION USER NAME
REGISTRY_PASSWORD=YOUR REGISTRY AUTHENTICATION CREDENTIAL
KOTS_CLIENT_URL=YOUR KOTS CLIENT URL
KOTS_ADMIN_URL=YOUR KOTS ADMIN URL
WALLAROO_BUNDLE_URL=YOUR WALLAROO BUNDLE URL
WALLAROO_DOWNLOAD_AUTHORIZATION=YOUR AUTHORIZATION CODE

If using the IBM Cloud Registry service, the credentials are retrieved with the command ibmcloud iam oauth-tokens | sed -ne '/IAM token/s/.* //p', and the username is iambearer. Note that these credentials typically expire within 60 minutes.

For example:

REGISTRY_ADDRESS=registry.wallaroo.ai/wallaroo
LICENSE_CHANNEL=2025-2
REGISTRY_USERNAME=iambearer
REGISTRY_PASSWORD=ZYX987
KOTS_CLIENT_URL="https://github.com/replicatedhq/kots/releases/download/v1.124.18/kots_linux_amd64.tar.gz"
KOTS_ADMIN_URL="https://github.com/replicatedhq/kots/releases/download/v1.124.18/kotsadm.tar.gz"
WALLAROO_BUNDLE_URL="https://s3.amazonaws.com/airgap.replicated.com/abcdefg/2.airgap?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIA3UMYHRA5LHP4KTHN%2F20250708%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20250708T160517Z&X-Amz-Expires=3600&X-Amz-Security-Token=abcdefg"

Download the following files with curl:

- Kots CLI: curl -LO $KOTS_CLIENT_URL. This downloads the file kots_linux_amd64.tar.gz.

- Kots admin bundle: curl -LO $KOTS_ADMIN_URL. This downloads the file kotsadm.tar.gz.

- Wallaroo Installation Bundle: curl -Lo wallaroo.airgap $WALLAROO_BUNDLE_URL. This saves the file as wallaroo.airgap. IMPORTANT NOTE: This URL has an expiration time of around 60 minutes, and the final downloaded file is 30 GB in size.
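Because the registry credentials and the bundle URL expire after about 60 minutes, it can help to confirm that every convenience variable is populated before moving on to the install steps. The following is a minimal sketch; unset_vars is a hypothetical helper name, and it only checks that each variable is non-empty, not that its value is still valid.

```shell
#!/bin/sh
# unset_vars NAME...: print each variable name whose value is empty or unset.
# Hypothetical helper for illustration only. The names passed in are fixed
# strings from this guide, so the eval below does not process untrusted input.
unset_vars() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || echo "$name"
  done
}

# No output means every variable listed in this guide has a value.
unset_vars REGISTRY_ADDRESS LICENSE_CHANNEL REGISTRY_USERNAME REGISTRY_PASSWORD \
  KOTS_CLIENT_URL KOTS_ADMIN_URL WALLAROO_BUNDLE_URL
```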

Install the Kots client via the following commands:

tar zxf kots_linux_amd64.tar.gz
sudo mv kots /usr/local/bin/kubectl-kots
sudo chmod a+x /usr/local/bin/kubectl-kots

Push the Kots administrative bundle to the private registry via the following command:

kubectl kots admin-console \
  push-images kotsadm.tar.gz \
  $REGISTRY_ADDRESS \
  --registry-username $REGISTRY_USERNAME \
  --registry-password $REGISTRY_PASSWORD

Install Wallaroo via the following command. This takes approximately 30 minutes, and will pause as each image is loaded. The following parameters are manually set:

- --shared-password: The password to the Kots Administrative Dashboard after installation is complete. For example, --shared-password wallaroo

- --license-file: The file name of the license file. For example: --license-file license.yaml

kubectl kots install $CHANNEL \
  --namespace wallaroo --shared-password wallaroo \
  --airgap-bundle wallaroo.airgap --license-file license.yaml \
  --kotsadm-registry $REGISTRY_ADDRESS \
  --registry-username $REGISTRY_USERNAME \
  --registry-password $REGISTRY_PASSWORD \
  --disable-image-push

Once complete, a prompt displays the URL of the Kots Administrative Dashboard. Use this URL in a browser to proceed to the next stage in the installation process.

• Deploying Admin Console
• Creating namespace ✓
• Waiting for datastore to be ready ✓
Enter a new password to be used for the Admin Console: •••••••••••••
• Waiting for Admin Console to be ready ✓
• Press Ctrl+C to exit
• Go to http://localhost:8800 to access the Admin Console

Initial Wallaroo Config

Once Wallaroo has been installed via kots for the first time, we can perform initial configuration.

If the Kots Administrative Console has not started, launch it with the following command:

❯ kubectl kots admin-console --namespace wallaroo
• Press Ctrl+C to exit
• Go to http://localhost:8800 to access the Admin Console

Enter the Kots Administrative Console address into a browser. You will be prompted for the default password as set in the step above. Enter it and select Log in.

Upload your license file. If the license file was already selected from the Automated installation, this step is skipped.

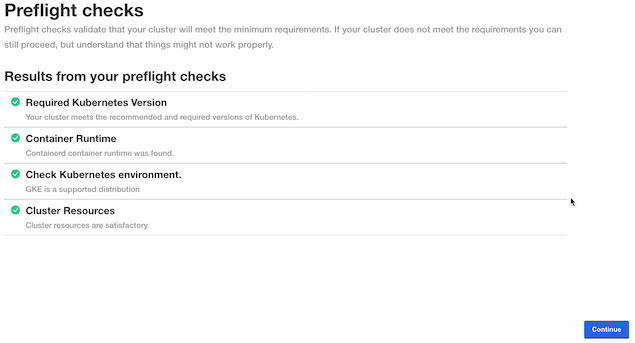

The Kots Administrative Console will run the preflight checks to verify that all of the minimum requirements are met. This may take a few minutes. If there are any issues, Wallaroo can still be launched but may not function properly. When ready, select Continue.

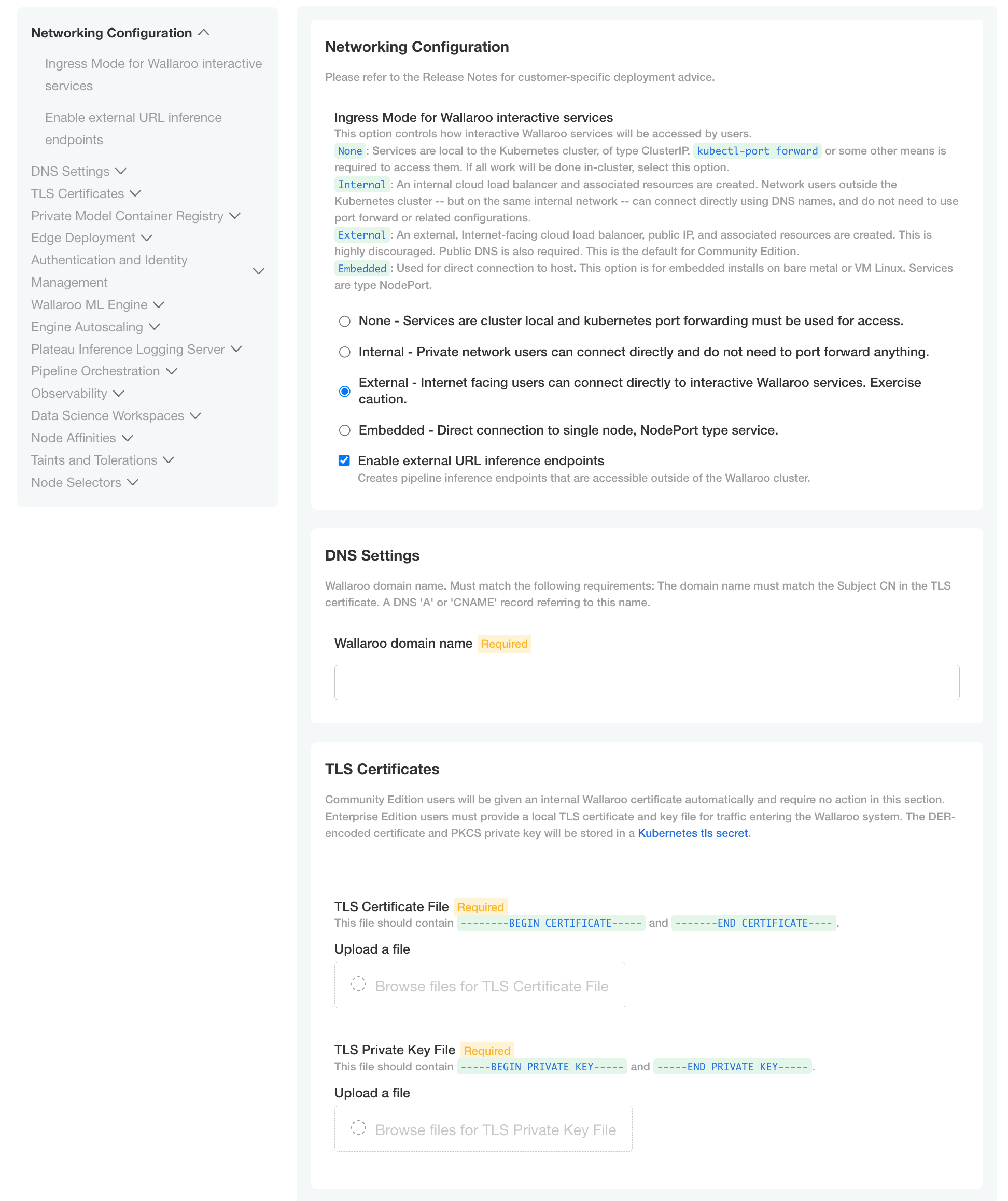

The Configure Wallaroo page will be displayed which allows you to customize your Wallaroo environment. The following are the minimum required settings.

Networking Configuration: Set whether the Wallaroo instance is available from outside the Kubernetes cluster, or only from within it.

- Ingress Mode for Wallaroo Endpoints: If a load balancer controller is used, select External. If there is no load balancer controller configured, the load balancer must be configured to point at a K8s or OpenShift service, then select None as the networking setting in configuration. For any questions or other conditions not listed, contact the Wallaroo support representative.

  - None: Services are local to the Kubernetes cluster. kubectl port-forward or some other means is required to access them. If all work will be done in-cluster, select this option.

  - Internal: An internal cloud load balancer and associated resources are created. Network users outside the Kubernetes cluster – but on the same internal network – can connect directly using DNS names, and do not need to use port forward or related configurations.

  - External: An external, Internet-facing cloud load balancer, public IP, and associated resources are created. This is highly discouraged. Public DNS is also required. This is the default for Wallaroo Community Edition.

  - Enable external URL inference endpoints: Creates pipeline inference endpoints. For more information, see Model Endpoints Guide.

From the Wallaroo Dashboard, select Config and set the following:

DNS Settings

- Wallaroo domain name (Required): The domain for the Wallaroo instance.

TLS Certificates

- Use custom TLS Certs: Checked

- TLS Certificate: Enter your TLS Certificate (.crt file).

- TLS Private Key: Enter your TLS private key (.key file).

Once complete, scroll to the bottom of the Config page and select Deploy.

At this point, continue to Required Installation Configurations for the required configuration settings.

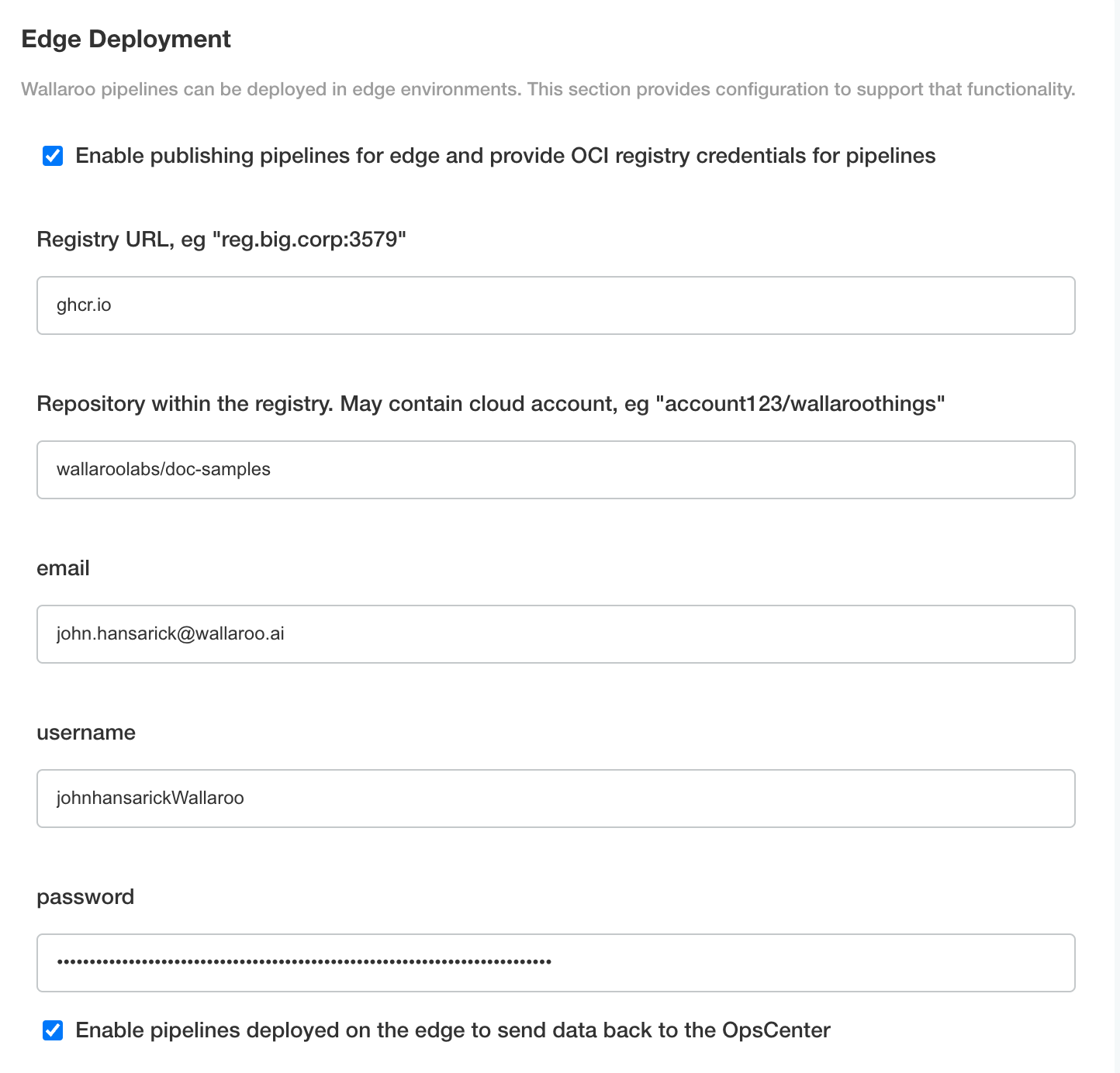

Edge Deployment Configuration

The following optional configurations enable OCI Registry integration with Wallaroo for deploying models in edge and multi-cloud environments. For more details, see Inference Anywhere.

To set the Edge Registry Settings through the Kots Administrative Dashboard:

Launch the Kots Administrative Dashboard using the following command, replacing the --namespace parameter with the Kubernetes namespace for the Wallaroo instance:

kubectl kots admin-console --namespace wallaroo

Open a browser at the URL detailed in the step above and authenticate using the console password set as detailed in the Wallaroo Install Guides.

From the top menu, select Config then scroll to Edge Deployment.

Enable Provide OCI registry credentials for pipelines.

Enter the following:

- Registry URL: The address of the registry service. For example: us-west1-docker.pkg.dev.

- email: The email address of the user account used to authenticate to the service.

- username: The account used to authenticate to the registry service.

- password: The password or token used to authenticate to the registry service.

- To enable edge observability, enable Enable pipelines deployed on the edge to send data back to the OpsCenter.

Save the updated configuration, then deploy it. Once complete, the edge registry settings will be available.

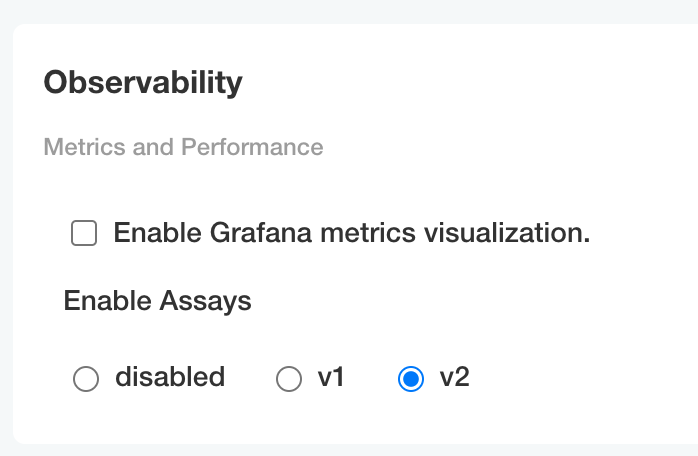

Set Assay Version

To enable Wallaroo assays via kots:

From the Config page, scroll to Config -> Observability -> Enable Assays.

Select from one of the following options:

- Disable: Disable assays.

- v1: Enable Wallaroo Assays V1.

- v2: Enable Wallaroo Assays V2 (Default).

Complete the deployment as needed.

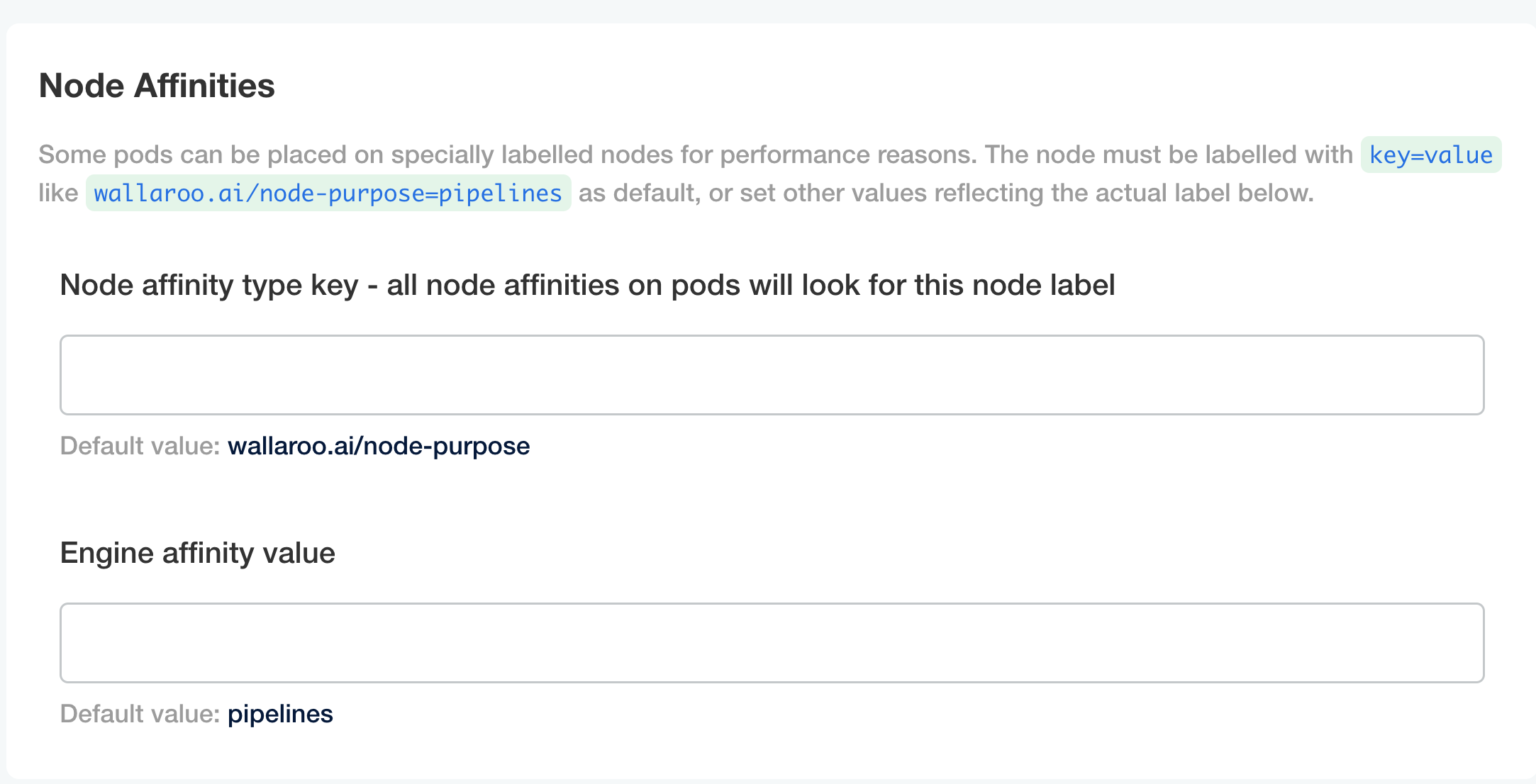

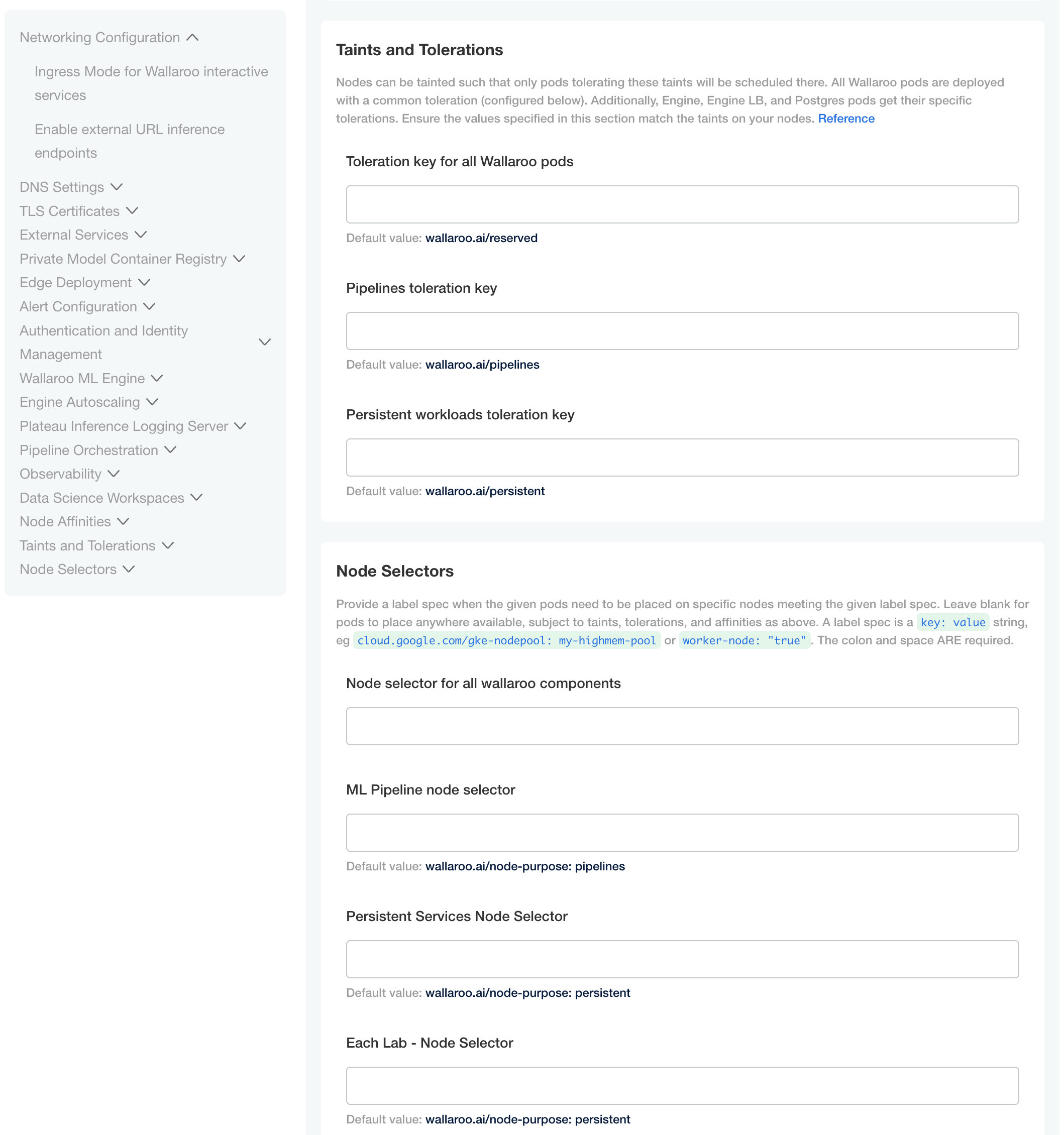

Custom Tolerations and Node Selectors Configuration

By default, the following taints and labels are applied to nodepools used for Wallaroo installations.

Nodepools created in Wallaroo require the following taints and labels.

These taints and labels are applied if using the scripts provided in this guide.

For custom taints and labels, see the Custom Taints and Labels Guide.

| Nodepool | Taints | Labels | Description |
|---|---|---|---|
| general | N/A | wallaroo.ai/node-purpose: general | For general Wallaroo services. No taints are applied to this nodepool to allow any process not assigned with a deployment label to run in this space. |
| persistent | wallaroo.ai/persistent=true:NoSchedule | wallaroo.ai/node-purpose: persistent | For Wallaroo services with persistentVolume settings, including JupyterHub, Minio, etc. |
| pipelines-x86 | wallaroo.ai/pipelines=true:NoSchedule | wallaroo.ai/node-purpose: pipelines | For deploying pipelines for default x86 architectures. The taints and label must be applied to any nodepool used for model deployments. |

For organizations that use custom Kubernetes taints and labels for their nodepools, the tolerations and node selectors for Wallaroo services will have to be modified to match. For full details, see the Custom Taints and Labels Guide.

To modify the tolerations and node selector labels to match the taints and labels set for the nodepools:

- From the Kots Administrative Dashboard, select Config.

- Update each of the following as needed:

Node Affinities:

Node affinity type key: Verify that the node affinity key matches the label for the nodepools.

Engine affinity value: Set the engine affinity - the affinity used for pipeline deployment - to match the label.

Taints and Tolerations. Set the custom tolerations to match the taints applied to the nodepools, and the node selectors to match the labels used for the nodepools.

Node Selectors: Update the node selector to match the custom nodepools labels for each service.

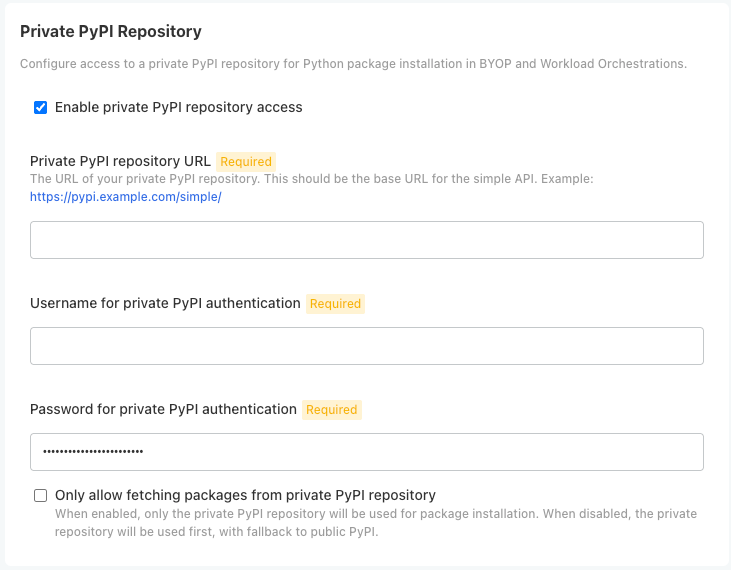

Private Python Repository via Kots

Administrators can configure Wallaroo to use a private Python package repository (for example, Nexus, Github) from within the same local network. This is useful for providing access to custom Python libraries for BYOP and Workload Orchestrations.

To enable private Python repositories via kots, enable the following settings:

From the Kots Administrative Dashboard, select Config.

From the section Private PyPi Repository, set the following:

- Enable private PyPi repository access: Enables the private Python repository. If not enabled, the following settings are ignored.

- Private PyPI repository URL: The URL for the private repository in the format https://{HOST_NAME}.

- Username for private PyPI authentication: Sets the username authentication credential to the private repository.

- Password for private PyPI authentication: Sets the password authentication credential to the private repository.

- Only allow fetching packages from private PyPI repository: If enabled, only uses the defined private PyPi repository; any public PyPI links are ignored.

Required Installation Configurations

Once installed, the following actions are required to complete the setup process.

DNS Services Integration

DNS services integration is required for Wallaroo Enterprise to provide access to the various supporting services that are part of the Wallaroo instance. These include:

- Simplified user authentication and management.

- Centralized services for accessing the Wallaroo Dashboard, Wallaroo SDK and Authentication.

- Collaboration features allowing teams to work together.

- Managed security, auditing and traceability.

The following guide is for standard DNS services.

Once integrated, users can access the following services directly from a URL starting with the Wallaroo Domain - this is the domain name the DNS entry is set to. For example, if the Wallaroo Domain is wallaroo.example.com, then the Wallaroo Ops instance is accessed from https://wallaroo.example.com/{service}.

Note that even when accessing specific Wallaroo services directly, the user must authenticate through Wallaroo or another authorized authentication service.

| Service | Path | Description |
|---|---|---|
| Wallaroo Dashboard | wallaroo domain | Provides access to a user interface for updating workspaces, pipelines, and models. Also provides access to the integrated JupyterHub service. |
| API | v1/api | Provides access to the Wallaroo API. For example, wallaroo.example.com/v1/api. |
| Authentication | auth | Access to authentication management including SSO configurations. For example: wallaroo.example.com/auth. For details on user management, see Wallaroo User Management. For details on user management services, see Wallaroo Authentication Configuration Guides. |
| Edge Observability | edge | Optional service for edge and multicloud deployments to report inference results back to the Wallaroo Ops instance. For example: wallaroo.example.com/edge. |

Prerequisites

- Install Wallaroo Enterprise into a qualified environment. For more details, see the Wallaroo Install Guides and the Wallaroo Enterprise Install Guides.

- Generate or update the SSL Certificates.

- Have access to internal corporate DNS configurations that can be updated. A subdomain for the Wallaroo instance will be created through this process.

- Have the IP address for the Wallaroo instance.

- Install kubectl into the Kubernetes cluster administrative node.

Wallaroo IP Address Retrieval Methods

The first step is to retrieve the IP address that is set to the DNS services.

Retrieve LoadBalancer IP with kubectl

For most organizations that install Wallaroo into a cloud based Kubernetes cluster such as Microsoft Azure, AWS, etc., the external IP address or hostname is tied to the Wallaroo LoadBalancer service.

Retrieve the external address for your Wallaroo instance LoadBalancer. For example, this can be performed through the following kubectl command:

kubectl get svc api-lb-ext -n wallaroo -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'

b9b4fd63-us-south.lb.appdomain.cloud

Other Methods

Organizations that install Wallaroo through other methods may find that the kubectl get svc api-lb-ext command only returns the internal IP address.

Depending on the instance, there are different methods of acquiring that IP address. The links below reference different sources.

- AWS: How do I get the ID and IP address of an Amazon EC2 instance for an AWS Batch job?

- GCP: Locating IP addresses for an instance

- Azure: IP addresses in Azure Functions

Refer to your Wallaroo support representative if further assistance is needed.

DNS Entries

Create the following DNS entries based on the list above for the Wallaroo instance’s IP address. Select the cloud environment used for DNS services.

- For AWS Clusters:

  - Wallaroo domain: CN (CNAME) record referring to the AWS EKS cluster endpoint. For example: wallaroo.example.com.

- For all other cloud services:

  - Wallaroo domain: A record, NS (Name Server) record, SOA (Start Of Authority) record. For example: wallaroo.example.com

Edge Observability Enablement

For organizations that deploy Wallaroo pipelines on edge devices as Wallaroo Servers, see the DNS settings from the Edge Deployment Registry Guide.

Setup Users

User management is managed through the Wallaroo Dashboard, via the Platform Admin Dashboard page. See the Wallaroo User Management for full guides on setting up users, identity providers, and other user configuration options.

The following is an abbreviated guide on setting up new Wallaroo users.

IMPORTANT NOTE

At least one user must be created before using Wallaroo. The process includes the following steps:

- Obtain the User Admin Credentials

- Create a New User with the Admin Role

Obtain the User Admin Credentials

Obtaining the admin User Credentials

The standard Wallaroo installation creates the user admin by default and assigns it a randomly generated password. The admin user credentials may be obtained directly from Kubernetes with the following commands, assuming the Wallaroo instance namespace is wallaroo.

Retrieve Admin Username

kubectl -n wallaroo \
  get secret keycloak-admin-secret \
  -o go-template='{{.data.KEYCLOAK_ADMIN_USER | base64decode }}'

Retrieve Admin Password

kubectl -n wallaroo \
  get secret keycloak-admin-secret \
  -o go-template='{{.data.KEYCLOAK_ADMIN_PASSWORD | base64decode }}'
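When the credentials are needed in a script, they can be captured in shell variables rather than printed to the terminal. The following is a minimal sketch using jsonpath output and an external base64 decoder in place of the go-template form above. It assumes the secret name keycloak-admin-secret from this guide and a base64 utility that accepts -d (for example, GNU coreutils). The function name wallaroo_admin_credential is hypothetical.

```shell
# Hypothetical helper: print one decoded field of the keycloak-admin-secret.
# Arguments: the secret key name, then an optional namespace (default: wallaroo).
wallaroo_admin_credential() {
  local key="$1" ns="${2:-wallaroo}"
  # Secret values are stored base64-encoded, so decode the selected field.
  kubectl -n "$ns" get secret keycloak-admin-secret \
    -o jsonpath="{.data.${key}}" | base64 -d
}
```

For example, admin_password="$(wallaroo_admin_credential KEYCLOAK_ADMIN_PASSWORD)" stores the password in a variable without echoing it to the terminal.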

Create a New User with the Admin Role

Creating users is managed through the Platform Admin Dashboard. The following steps are used to create an initial user with the role admin.

- Access the Wallaroo Dashboard through the DNS name set up in the DNS Services Integration step. For example, if the DNS name of the Wallaroo Ops center is wallaroo.example.com, the Wallaroo Dashboard is available at https://wallaroo.example.com.

- Log in with the username admin and the password retrieved in the step Obtaining the admin User Credentials.

- Select Create Users and enter the following:

  - User Email: The email address for the user. This must be in the valid email address format.

  - Assign Type: Select Admin.

  - Password: Enter the user’s password. This password must be sent to the new user.

  - Temporary or Permanent:

    - Temporary: The user will be forced to change their login password upon their next login (Recommended).

    - Permanent: The user will keep their password.

- Create any additional users as needed. When finished, select the Wallaroo icon drop down and select Logout.

At this point, users can log in to the Wallaroo Dashboard with their provided identities. For guides on setting up Single Sign-On (SSO), identity providers, and other user configuration options, see Wallaroo User Management.

Optional: Load NVIDIA GPU Drivers

To install NVIDIA GPU drivers in an OpenShift environment, see Introduction to NVIDIA GPU Operator on OpenShift.

Uninstall

To uninstall Wallaroo from an air-gapped environment, see How to Uninstall Wallaroo from a Cluster.