LLM Monitoring Listeners

LLM Monitoring Listeners are offline monitors that leverage Wallaroo Inference Automation. LLM Listeners perform offline scoring of LLM inferences and generated text with a set of standard metrics/scores such as:

- Toxicity

- Sentiment

- Profanity

- Hate

- Etc

Users can also create custom LLM Listeners to score LLMs offline against custom metrics. LLM Monitoring Listeners are composed of models trained to evaluate LLM outputs, so they can be updated or refined according to the organization’s needs.

These evaluations are monitored with Wallaroo assays, which analyze the scores each validation step produces and publish assay analyses based on established criteria.

LLM Listeners are executed either as:

- Run Once: The LLM Listener runs once and evaluates a set of LLM outputs.

- Run Scheduled: The LLM Listener is executed on a user-defined schedule (once an hour, several times a day, etc).

- Based on events: Feature coming soon!

The results of these evaluations are stored and retrieved as Inference Results, the same way LLM inference results are, so each LLM Listener evaluation result can be used to detect LLM model drift or other issues.

LLM Listener Examples

The following condensed example shows the process of deploying an LLM Listener as a Workload Orchestration in Wallaroo. The LLM Listener monitors a Llama v3 Instruct model named llama3-instruct. For a full example, see LLM Listener Monitoring Example or download the sample Jupyter Notebooks from the Wallaroo Tutorials repository.

This LLM has the following outputs.

| Field | Type | Description |

|---|---|---|

| generated_text | String | The text output of the Llama v3 inference. |

The following example shows an inference from this deployed LLM. The input is a pandas DataFrame.

data = pd.DataFrame({'text': ['What is Wallaroo.AI?']})

result = llm_pipeline.infer(data)

result['out.generated_text'][0]

'Wallaroo.AI is an AI platform that enables developers to build, deploy, and manage AI and machine learning models at scale. It provides a cloud-based infrastructure for building, training, and deploying AI models, as well as a set of tools and APIs for integrating AI into various applications.\n\nWallaroo.AI is designed to make it easy for developers to build and deploy AI models, regardless of their level of expertise in machine learning. It provides a range of features, including support for popular machine learning frameworks such as TensorFlow and PyTorch, as well as a set of pre-built AI models and APIs for common use cases such as image and speech recognition, natural language processing, and predictive analytics.\n\nWallaroo.AI is particularly well-suited for developers who are looking to build AI-powered applications, but may not have extensive expertise in machine learning or AI development. It provides a range of tools and resources to help developers get started with building AI-powered applications, including a cloud-based development environment, a set of pre-built AI models and APIs, and a range of tutorials and documentation.'

The LLM Listener tracks the LLM’s inferences and scores the outputs on a variety of different criteria.

The LLM Listener is composed of two models:

- toxic_bert: A Hugging Face Text Classification model that evaluates LLM outputs and outputs an array of scores.

- postprocess: A Python model that takes the toxic_bert outputs and converts them into the following field outputs, scored from 0 to 1, with 1 being the worst:

  - identity_hate

  - insult

  - obscene

  - severe_toxic

  - threat

  - toxic
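As a rough illustration of the postprocess step, the sketch below assumes that toxic_bert returns, for each text, a list of label and score entries (the usual shape of a Hugging Face text classification result when all labels are requested). The function name `to_score_fields`, the input shape, and the choice to report missing labels as 0.0 are assumptions for illustration, not the model shipped with the tutorial.

```python
# Field names listed above for the LLM Listener outputs.
TOX_FIELDS = ["identity_hate", "insult", "obscene", "severe_toxic", "threat", "toxic"]


def to_score_fields(label_scores):
    """Convert one text's label/score entries into a dict of named score fields.

    `label_scores` is assumed to look like
    [{"label": "toxic", "score": 0.91}, {"label": "insult", "score": 0.40}, ...].
    Labels missing from the input are reported as 0.0 (an assumption of this sketch).
    """
    by_label = {entry["label"]: float(entry["score"]) for entry in label_scores}
    unknown = set(by_label) - set(TOX_FIELDS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    return {field: by_label.get(field, 0.0) for field in TOX_FIELDS}


# Made-up scores, for illustration only.
sample = [
    {"label": "toxic", "score": 0.0007},
    {"label": "insult", "score": 0.0002},
]
fields = to_score_fields(sample)
print(fields["toxic"], fields["threat"])
```

Returning the fields in a fixed order keeps the output schema stable regardless of the order in which the classifier reports its labels.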

The LLM Listener is deployed as a Workload Orchestration that performs the following:

- Accept the following arguments to determine which LLM to evaluate:

  - llm_workspace: The name of the workspace the LLM is deployed from.

  - llm_pipeline: The pipeline the LLM is deployed from.

  - llm_output_field: The LLM’s text output field.

  - monitor_workspace: The workspace the LLM Listener models are deployed from.

  - monitor_pipeline: The pipeline the LLM Listener models are deployed from.

  - window_length: The amount of time to evaluate from when the task is executed, in hours. For example, 1 would evaluate the past hour. Use -1 for no limits. This will gather the standard inference results window.

  - n_toxlabels: The number of toxic labels. For our toxic_bert LLM Listener, the number of fields is 6.

- Deploy LLM Listener models.

- Gather the llama3-instruct LLM’s Inference Results and process the out.generated_text field through the LLM Listener models.

  - These are either the default inference result outputs, or the inference results from a specified date range.

- Score the LLM’s outputs and provide the scores listed above. These scores can be retrieved at any time as the LLM Listener’s own Inference Results.
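The argument handling in the steps above can be sketched as follows. This is a minimal illustration under stated assumptions: the helper name `evaluation_window` is hypothetical, and the actual orchestration may compute its window differently. It checks that every listed argument is present and turns window_length into a start and end time, with -1 meaning no limit.

```python
from datetime import datetime, timedelta, timezone

REQUIRED_ARGS = (
    "llm_workspace", "llm_pipeline", "llm_output_field",
    "monitor_workspace", "monitor_pipeline", "window_length", "n_toxlabels",
)


def evaluation_window(args, now=None):
    """Return (start, end) datetimes for the log query, or None for no limit."""
    missing = [name for name in REQUIRED_ARGS if name not in args]
    if missing:
        raise KeyError(f"missing arguments: {missing}")
    hours = args["window_length"]
    if hours == -1:
        return None  # no limit: use the standard inference results window
    if hours <= 0:
        raise ValueError("window_length must be a positive number of hours or -1")
    end = now or datetime.now(timezone.utc)
    return end - timedelta(hours=hours), end


args = {
    "llm_workspace": "llm-models",
    "llm_pipeline": "llamav3-instruct",
    "llm_output_field": "out.generated_text",
    "monitor_workspace": "llm-models",
    "monitor_pipeline": "full-toxmonitor-pipeline",
    "window_length": 1,  # evaluate the past hour
    "n_toxlabels": 6,
}
# A fixed "now" keeps the example reproducible.
fixed_now = datetime(2024, 5, 2, 23, 0, tzinfo=timezone.utc)
start, end = evaluation_window(args, now=fixed_now)
print(start.isoformat(), end.isoformat())
```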

As a Workload Orchestration, the LLM Listener is executed either as a Run Once task, which executes once, reports its results, then stops, or as a Run Scheduled task, which is executed on a set schedule (every 5 minutes, every hour, etc).
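Schedules like these are commonly written as cron expressions. The sketch below assumes the scheduler accepts standard five-field cron strings; the helper names are hypothetical and only illustrate the two schedules mentioned above.

```python
def every_n_minutes(n):
    """Five-field cron expression that fires on every minute divisible by `n`."""
    if not 1 <= n <= 59:
        raise ValueError("n must be between 1 and 59")
    return f"*/{n} * * * *"


def hourly(minute=0):
    """Five-field cron expression that fires once an hour at the given minute."""
    if not 0 <= minute <= 59:
        raise ValueError("minute must be between 0 and 59")
    return f"{minute} * * * *"


print(every_n_minutes(5))  # every 5 minutes
print(hourly())            # at the top of every hour
```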

Orchestrate LLM Monitoring Listener via the Wallaroo SDK

The following shows running the LLM Listener as a Run Once task via the Wallaroo SDK that evaluates the llama3-instruct LLM. The LLM Listener arguments can be modified to evaluate any other deployed LLMs with their own text output fields.

This assumes that the LLM Listener was already uploaded and is ready to accept new tasks, and we have saved it to the variable llm_listener.

Here we create the Run Once task for the LLM Listener and provide it the deployed LLM’s workspace and pipeline, and the LLM Listener models’ workspace and pipeline. We give the task the name sample_monitor.

args = {

'llm_workspace': 'llm-models',

'llm_pipeline': 'llamav3-instruct',

'llm_output_field': 'out.generated_text',

'monitor_workspace': 'llm-models',

'monitor_pipeline': 'full-toxmonitor-pipeline',

'window_length': -1, # in hours. If -1, no limit (for testing)

'n_toxlabels': 6,

}

task = llm_listener.run_once(name="sample_monitor", json_args=args, timeout=1000)

We can wait for the task to finish. Here we’ll list out the tasks from a Wallaroo client saved to wl, and verify that the task finished with Success.

wl.list_tasks()

| id | name | last run status | type | active | schedule | created at | updated at |

|---|---|---|---|---|---|---|---|

| 2b939bc4-f6d7-4467-a8b9-016841de1bc0 | monitor-initial-test | success | Temporary Run | True | - | 2024-02-May 23:37:49 | 2024-02-May 23:38:05 |
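Waiting for the task can also be scripted with a small polling loop. The sketch below is generic: `get_status` is a hypothetical callable that returns the task’s latest run status as a string (how that status is read from the SDK is left out), and the "failure" status name is an assumption. The loop stops on a finished status or raises on timeout.

```python
import time


def wait_for_status(get_status, done=("success", "failure"), timeout=60.0, interval=1.0):
    """Poll `get_status()` until it returns one of `done` or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status in done:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task still '{status}' after {timeout} seconds")
        time.sleep(interval)


# Stand-in for a real status lookup: reports "running" twice, then "success".
_statuses = iter(["running", "running", "success"])
final = wait_for_status(lambda: next(_statuses), interval=0.01)
print(final)
```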

With the task completed, we check the LLM Listener logs and use the evaluation fields to determine if there are any toxicity issues, etc.

llm_evaluation_results = llm_listener_pipeline.logs()

display(llm_evaluation_results)

|  | time | in.inputs | in.top_k | out.identity_hate | out.insult | out.obscene | out.severe_toxic | out.threat | out.toxic | anomaly.count |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2024-05-02 23:38:11.716 | Wallaroo.AI is an AI platform that enables developers to build, deploy, and manage AI and machine learning models at scale. It provides a cloud-based infrastructure for building, training, and deploying AI models, as well as a set of tools and APIs for integrating AI into various applications.\n\nWallaroo.AI is designed to make it easy for developers to build and deploy AI models, regardless of their level of expertise in machine learning. It provides a range of features, including support for popular machine learning frameworks such as TensorFlow and PyTorch, as well as a set of pre-built AI models and APIs for common use cases such as image and speech recognition, natural language processing, and predictive analytics.\n\nWallaroo.AI is particularly well-suited for developers who are looking to build AI-powered applications, but may not have extensive expertise in machine learning or AI development. It provides a range of tools and resources to help developers get started with building AI-powered applications, including a cloud-based development environment, a set of pre-built AI models and APIs, and a range of tutorials and documentation. | 6 | [0.00014974642544984818] | [0.00017831822333391756] | [0.00018145183275919408] | [0.00012232053268235177] | [0.00013229982869233936] | [0.0006922021857462823] |
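One way to act on these scores is to flag any log entry whose score exceeds a threshold. The sketch below works on plain dictionaries, such as the rows of the logs DataFrame converted with `to_dict('records')`. The 0.5 threshold, the helper name `flag_toxic`, and the sample rows are assumptions for illustration. Each score is assumed to arrive as a single-element list, as in the table above.

```python
SCORE_FIELDS = [
    "out.identity_hate", "out.insult", "out.obscene",
    "out.severe_toxic", "out.threat", "out.toxic",
]


def flag_toxic(rows, threshold=0.5):
    """Return (row index, field, score) for every score above `threshold`."""
    flagged = []
    for index, row in enumerate(rows):
        for field in SCORE_FIELDS:
            score = row[field][0]  # each cell holds a one-element list
            if score > threshold:
                flagged.append((index, field, score))
    return flagged


# Made-up rows: the first is clean, the second has a high out.toxic score.
rows = [
    {field: [0.0002] for field in SCORE_FIELDS},
    {**{field: [0.01] for field in SCORE_FIELDS}, "out.toxic": [0.93]},
]
print(flag_toxic(rows))
```

A fixed threshold is the simplest check; for tracking changes in the score distribution over time, the assays mentioned earlier are the better fit.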

If this task is scheduled to run regularly, each log entry will represent when the LLM Listener task was executed.

Orchestrate the LLM Monitoring Listeners via the Wallaroo UI

LLM Monitoring Listeners are orchestrated through the Wallaroo Dashboard. The general steps are:

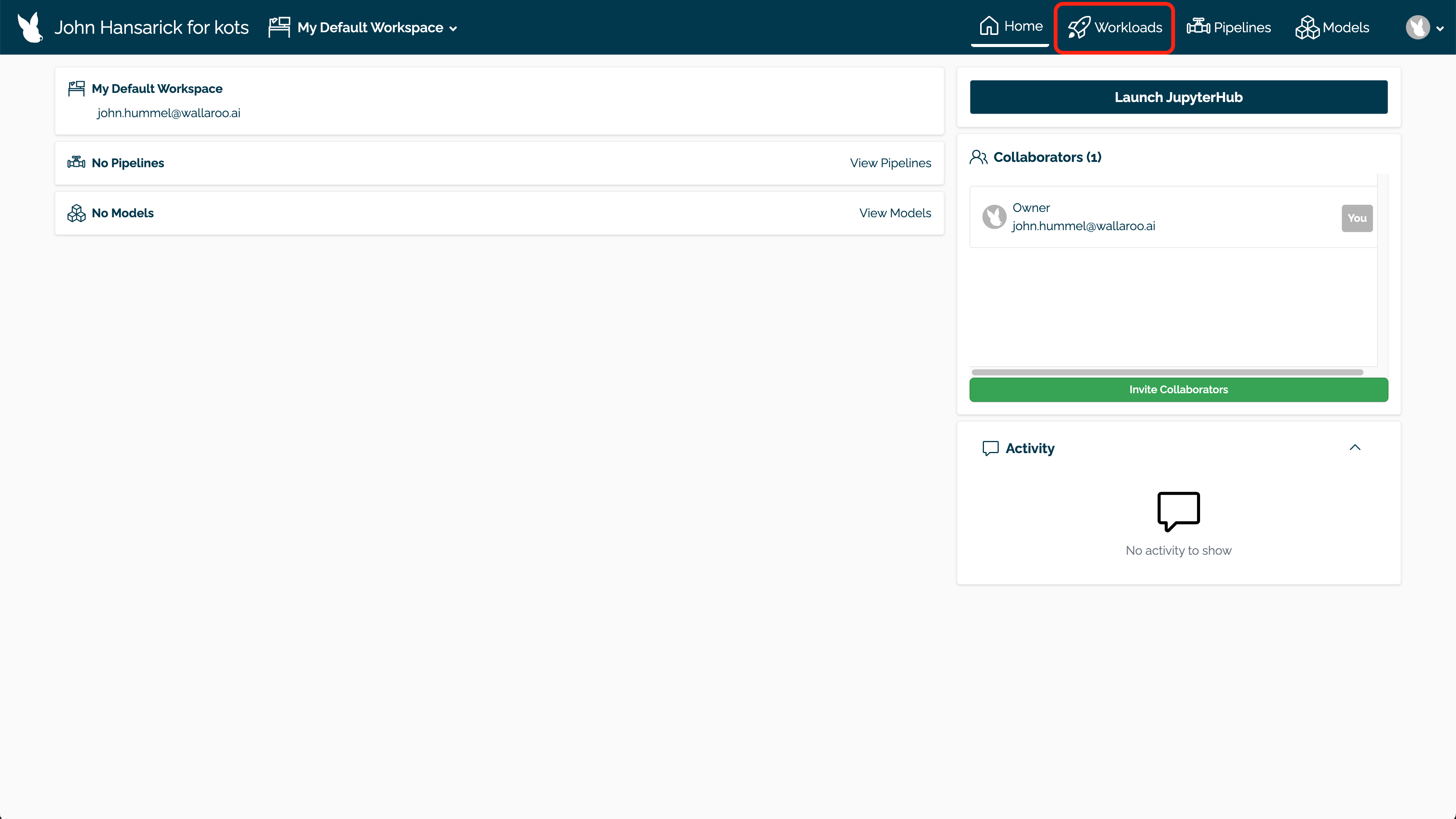

From the Wallaroo Dashboard, select the workspace where the workloads were uploaded to.

From the upper right corner, select Workloads.

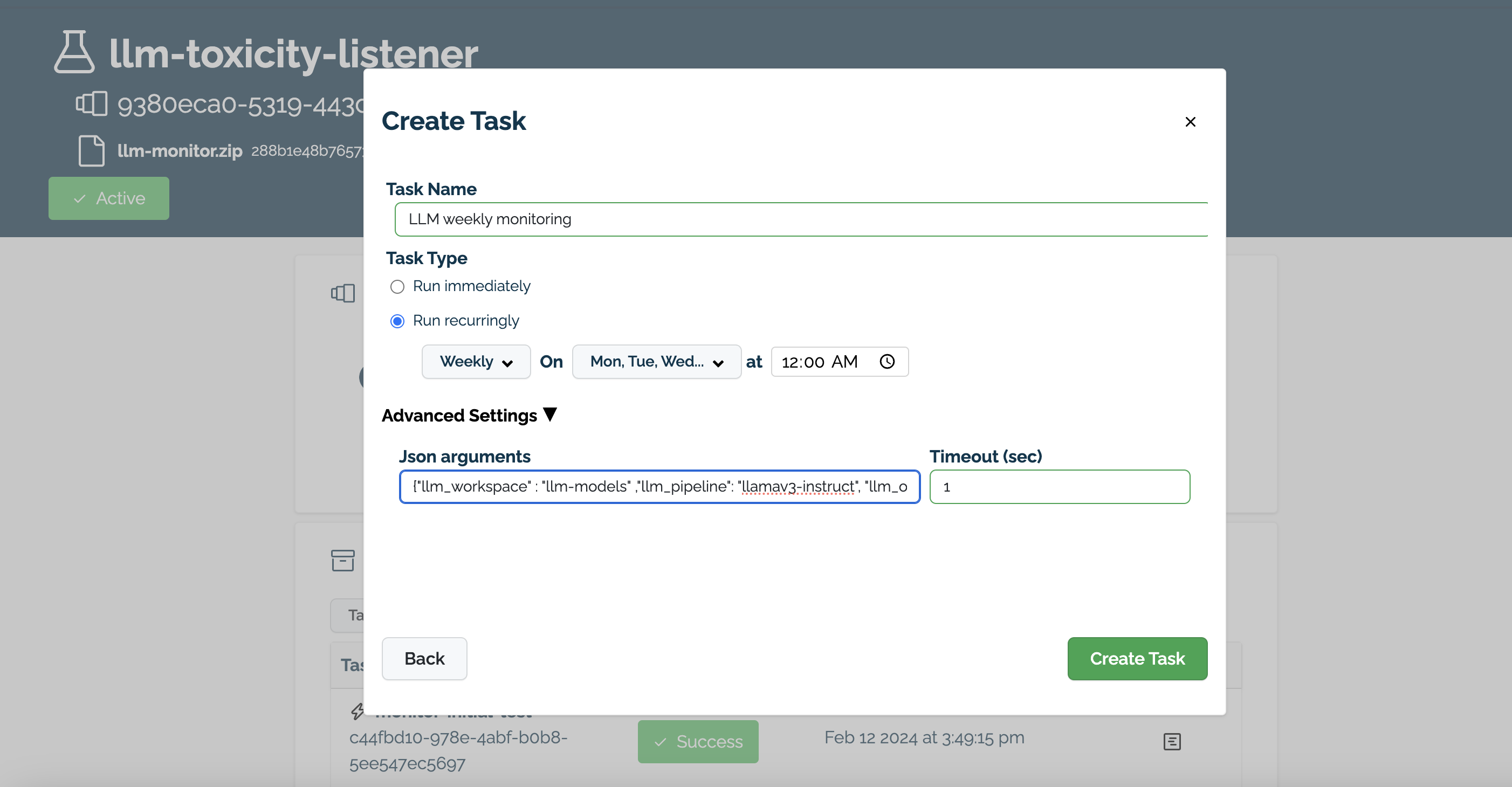

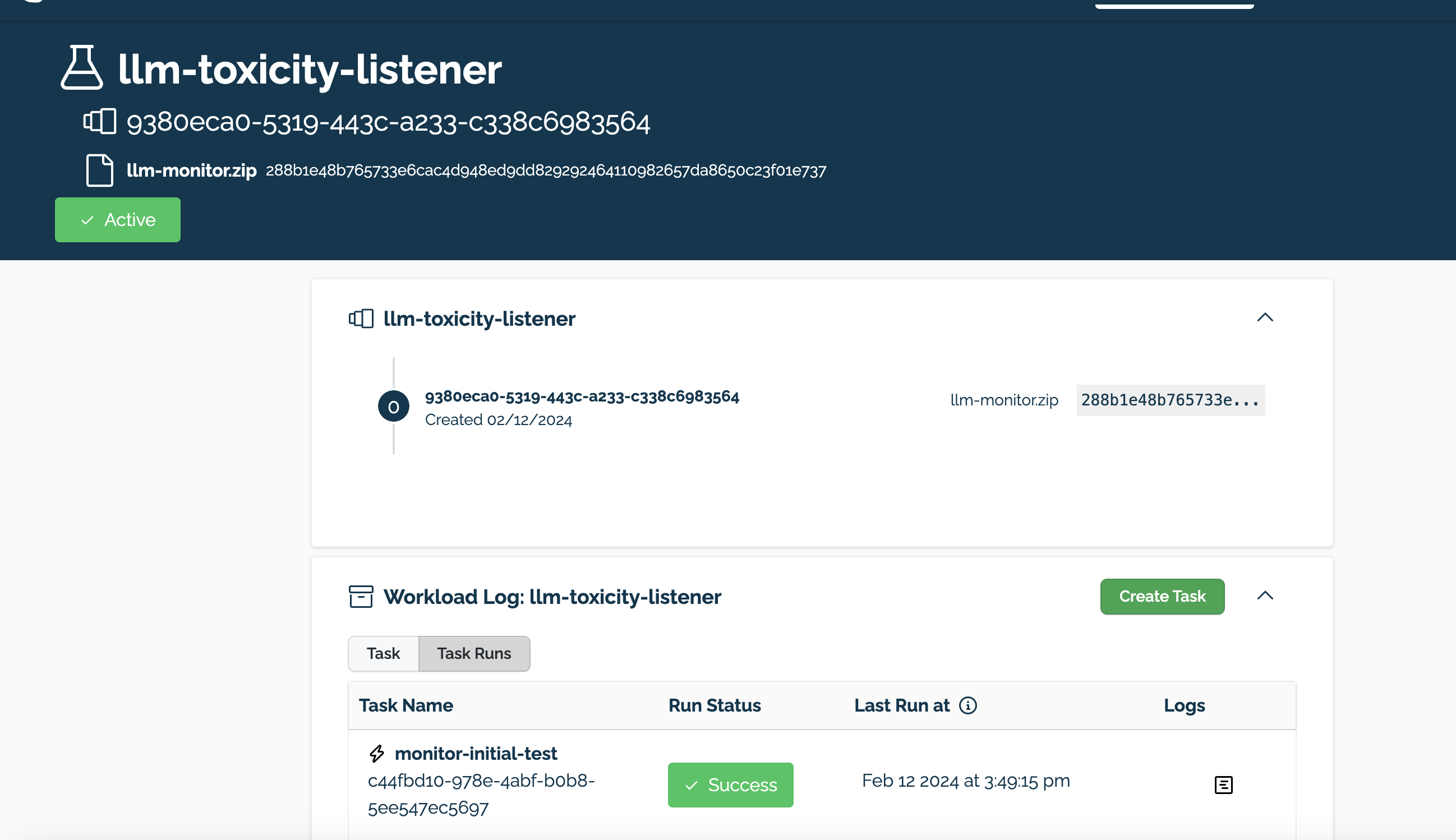

Select the LLM Listener and create either a Run Once task or a Run Scheduled task that runs on a set schedule, then provide the required arguments.

Once a task is completed, the results are available. The Listener’s inference logs are available for monitoring through Wallaroo assays.

For access to these sample models and a demonstration on using LLMs with Wallaroo:

- Contact your Wallaroo Support Representative OR

- Schedule Your Wallaroo.AI Demo Today