Wallaroo AI Starter Kit Virtual Assistant Tutorial

This tutorial and the assets can be downloaded as part of the Wallaroo Tutorials repository.

The following is a demonstration of downloading, deploying, and inferring from a RAG-based virtual assistant from the Wallaroo AI Starter Kit for IBM Power.

Wallaroo AI Starter Kit for IBM: Virtual Assistant Deployment Guide

This tutorial functions as a practical example of using the Wallaroo AI Starter Kit for IBM Power to deploy the Virtual Assistant model in an IBM Logical Partition (LPAR).

Prerequisites

Before starting, verify that the Wallaroo AI Starter Kit LPAR (Logical Partition) Prerequisites are complete.

Procedure

Retrieve the Deployment Command

Proceed to the Wallaroo AI Starter Kit URL.

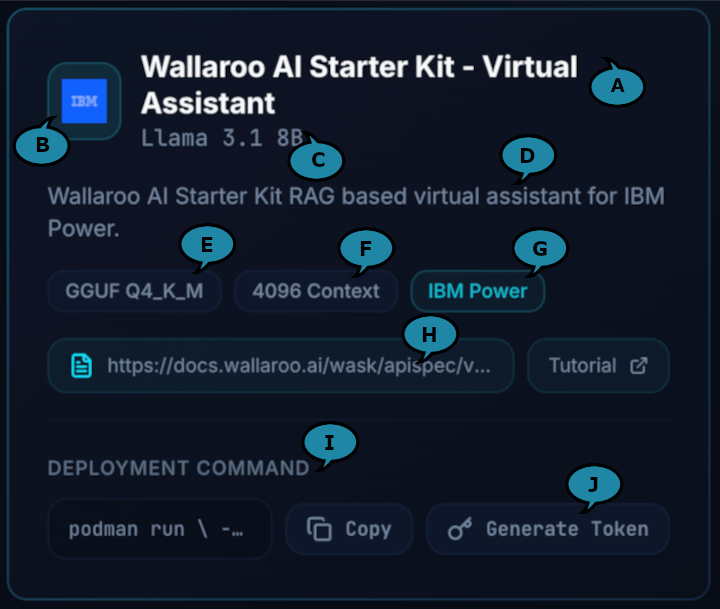

Select the Model Card for the Wallaroo AI Starter Kit - Virtual Assistant and copy the Deployment Command.

The following shows an example of this command.

    podman run \
      -p $EDGE_PORT:8080 \
      -e OCI_USERNAME="$USERNAME" \
      -e OCI_PASSWORD="$GENERATED_TOKEN" \
      -e SYSTEM_PROMPT="$SYSTEM_PROMPT" \
      -e MODEL_CONTEXT_FILENAME="/$(basename $MODEL_CONTEXT_FILENAME)" \
      -v $MODEL_CONTEXT_FILENAME:/$(basename $MODEL_CONTEXT_FILENAME) \
      -e PIPELINE_URL=quay.io/wallarooai/wask/wask-va-pipeline:bfb42a37-45ea-4d99-8ebf-c37a1de7dd70 \
      -e CONFIG_CPUS=1.0 --cpus=15.0 --memory=20g \
      quay.io/wallarooai/wask/fitzroy-mini-ppc64le:v2025.2.0-6480

Retrieve the Generated Token by selecting Generate Token next to the Deploy Command. If this token is lost, return to the Wallaroo AI Starter Kit page and generate a new token.

Set the Deployment Command

Log in to the LPAR through a terminal shell, for example via ssh.

Set the following variables:

EDGE_PORT: The external IP port used to make inference requests. Verify this port is open and accessible from the requesting systems.

SYSTEM_PROMPT: This is a system prompt, for example: “Answer the question in 10 words or less using the following context”.

MODEL_CONTEXT_FILENAME: This is a reference to a JSON file.

Create the MODEL_CONTEXT_FILENAME file in the same path specified (for example: context.json). This file is in the following format:

    {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "question": {
            "type": "string",
            "description": "The question being asked"
          },
          "answer": {
            "type": "string",
            "description": "The answer to the question"
          }
        },
        "required": ["question", "answer"]
      }
    }

The following is an example of this file's contents:

    [
      {
        "question": "How do I launch DX+",
        "answer": "You can launch DX+ by opening the DX+ application from your desktop or web portal and signing in using your credentials."
      },
      {
        "question": "What credentials do I use to sign into DX+",
        "answer": "Use your assigned company username and password to sign into DX+."
      },
      {
        "question": "What should I do if I forget my DX+ password",
        "answer": "Click the 'Forgot Password' link on the login screen or contact your system administrator to reset it."
      }
    ]
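The context file can also be generated and checked programmatically before it is mounted into the container. The following is a minimal sketch, assuming Python 3 is available on the system where the file is prepared; the helper names `validate_context` and `write_context` are hypothetical and are not part of the Wallaroo AI Starter Kit. It checks only what the format above requires: an array of objects that each carry a string `question` and a string `answer`.

```python
import json
from pathlib import Path

# Entries copied from the tutorial's sample context file.
CONTEXT = [
    {
        "question": "How do I launch DX+",
        "answer": "You can launch DX+ by opening the DX+ application from "
                  "your desktop or web portal and signing in using your credentials.",
    },
    {
        "question": "What credentials do I use to sign into DX+",
        "answer": "Use your assigned company username and password to sign into DX+.",
    },
]


def validate_context(entries):
    """Raise ValueError unless entries is a list of {question, answer} string pairs."""
    if not isinstance(entries, list):
        raise ValueError("context must be a JSON array")
    for i, item in enumerate(entries):
        if not isinstance(item, dict):
            raise ValueError(f"entry {i} must be a JSON object")
        for key in ("question", "answer"):
            if not isinstance(item.get(key), str):
                raise ValueError(f"entry {i} needs a string '{key}'")
    return entries


def write_context(path, entries):
    """Validate entries, then write them to path as indented JSON."""
    validate_context(entries)
    target = Path(path)
    target.write_text(json.dumps(entries, indent=2), encoding="utf-8")
    return target
```

Calling `write_context("context.json", CONTEXT)` would produce a file in the current directory that matches the format described above.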

The following shows an example of these values declared in the command:

    podman run \
      -p 3030:8080 \
      -e OCI_USERNAME="$USERNAME" \
      -e OCI_PASSWORD="$GENERATED_TOKEN" \
      -e SYSTEM_PROMPT="Answer the question in 10 words or less using the following context" \
      -e MODEL_CONTEXT_FILENAME="/context.json" \
      -v ./context.json:/context.json \
      -e PIPELINE_URL=quay.io/wallarooai/wask/wask-va-pipeline:bfb42a37-45ea-4d99-8ebf-c37a1de7dd70 \
      -e CONFIG_CPUS=1.0 --cpus=15.0 --memory=20g \
      quay.io/wallarooai/wask/fitzroy-mini-ppc64le:v2025.2.0-6480

Once ready, deploy the model by running the updated Deploy Command for your environment.
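To make the variable substitution explicit, the sketch below assembles the same podman arguments in Python. It is an illustration only, not the kit's own tooling: the function `build_deploy_command` and its parameters are hypothetical, and the pipeline URL and image tag are copied from the example command in this tutorial and may differ for your Model Card. The point to notice is how `/$(basename $MODEL_CONTEXT_FILENAME)` resolves: the host file is mounted at the container root under its base name.

```python
import os
import shlex

# Values copied from the tutorial's example command; yours may differ.
PIPELINE_URL = "quay.io/wallarooai/wask/wask-va-pipeline:bfb42a37-45ea-4d99-8ebf-c37a1de7dd70"
IMAGE = "quay.io/wallarooai/wask/fitzroy-mini-ppc64le:v2025.2.0-6480"


def build_deploy_command(edge_port, system_prompt, context_path, username, token):
    """Return the podman argument list with the tutorial's variables filled in."""
    # Equivalent of /$(basename $MODEL_CONTEXT_FILENAME) in the shell template.
    container_path = "/" + os.path.basename(context_path)
    return [
        "podman", "run",
        "-p", f"{edge_port}:8080",
        "-e", f"OCI_USERNAME={username}",
        "-e", f"OCI_PASSWORD={token}",
        "-e", f"SYSTEM_PROMPT={system_prompt}",
        "-e", f"MODEL_CONTEXT_FILENAME={container_path}",
        "-v", f"{context_path}:{container_path}",
        "-e", f"PIPELINE_URL={PIPELINE_URL}",
        "-e", "CONFIG_CPUS=1.0",
        "--cpus=15.0",
        "--memory=20g",
        IMAGE,
    ]
```

`shlex.join(build_deploy_command(3030, "Answer the question in 10 words or less using the following context", "./context.json", "<username>", "<token>"))` returns a single shell-quoted string that can be reviewed before running. Because the string contains the generated token, avoid writing it to shared logs.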

Inference

Inference requests are made by submitting Apache Arrow tables or pandas DataFrames in record format as JSON.
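In record format, the request body is a JSON array with one object per row, keyed by column name. The sketch below builds such a body using only the Python standard library; the helper `to_records` is hypothetical, and the single `query` column is taken from the curl example in this tutorial. With pandas installed, `DataFrame.to_json(orient="records")` produces the same layout.

```python
import json


def to_records(columns):
    """Convert column-oriented data {name: [values]} into a list of row dicts."""
    names = list(columns)
    if len({len(columns[name]) for name in names}) > 1:
        raise ValueError("all columns must have the same length")
    # zip(*values) walks the columns in parallel, yielding one tuple per row.
    return [dict(zip(names, row)) for row in zip(*columns.values())]


# One row with a single "query" column, as used by the Virtual Assistant.
payload = json.dumps(to_records({"query": ["How do I launch DX+?"]}))
```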

The Inference URL is in the format:

$HOSTNAME:$PORT/infer

For example, if the hostname is localhost and the port is 3030, the Inference URL is:

localhost:3030/infer

The following shows an example of performing the inference request on the deployed Virtual Assistant via the curl command.

Note that this command is run within a Jupyter Notebook for the tutorial; in a terminal shell, replace !curl with curl.

    !curl -X POST localhost:3030/infer \
      -H "Content-Type: application/json" \
      -v --data '[{"query":"How do I launch DX+?"}]'

    [{"time":1768252715611,"in":{"query":"How do I launch DX+?"},"out":{"generated_text":"Open the DX+ application from your desktop or web portal and sign in using your credentials."},"anomaly":{"count":0},"metadata":{"last_model":"{\"model_name\":\"wask-qa-bot-byop-model\",\"model_sha\":\"175e19b1cabd8a05c7888c43f4bff90b4442be407d89364826505726d53d347b\"}","pipeline_version":"bfb42a37-45ea-4d99-8ebf-c37a1de7dd70","elapsed":[6309508,41709268743],"dropped":[],"partition":"b6578b9c5d36"}}]
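The same request can be sent from Python instead of curl. The following is a minimal sketch using only the standard library; the function names are hypothetical, the `http://` scheme matches what curl uses when no scheme is given, and the 120-second timeout is an arbitrary assumption to allow for slow generation. The field names (`in.query`, `out.generated_text`, `metadata.last_model`) are taken from the sample response above, and treating `last_model` as a JSON-encoded string is an assumption based on that sample.

```python
import json
import urllib.request


def build_infer_request(host, port, queries):
    """Build a POST request for /infer with one record per query."""
    body = json.dumps([{"query": q} for q in queries]).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/infer",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_answers(records):
    """Return (query, generated_text) pairs from a parsed inference response."""
    return [(r["in"]["query"], r["out"]["generated_text"]) for r in records]


def last_model(record):
    """Decode metadata.last_model, assumed to be a JSON-encoded string."""
    return json.loads(record["metadata"]["last_model"])


def ask(host, port, queries, timeout=120):
    """Send the queries to the deployed assistant and return the answers."""
    request = build_infer_request(host, port, queries)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_answers(json.load(response))
```

With the deployment from this tutorial running, `ask("localhost", 3030, ["How do I launch DX+?"])` would return a list containing the query paired with the generated answer.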