Wallaroo SDK Essentials Guide: Model Uploads and Registrations: Model Registry Services

Wallaroo users can register their trained machine learning models from a model registry into their Wallaroo instance and perform inferences with them through a Wallaroo pipeline.

This guide details how to add ML Models from a model registry service into a Wallaroo instance.

Artifact Requirements

Models are uploaded to the Wallaroo instance as a specific artifact: the “file” or other data that represents the model itself. The artifact must comply with the Wallaroo model requirements for framework and version, or it will not be deployed. Models that fall outside of the supported model types can be registered to a Wallaroo workspace as MLFlow 1.30.0 containerized models.

Registry Services Roles

Registry service use in Wallaroo typically falls under the following roles.

| Role | Recommended Actions | Description |
|---|---|---|
| DevOps Engineer | Create Model Registry | Create the model (AKA artifact) registry service. |
| | Retrieve Model Registry Tokens | Generate the model registry service credentials. |
| MLOps Engineer | Connect Model Registry to Wallaroo | Add the Registry Service URL and credentials into a Wallaroo instance for use by other users and scripts. |
| | Add Wallaroo Registry Service to Workspace | Add the registry service configuration to a Wallaroo workspace for use by workspace users. |
| Data Scientist | List Registries in a Workspace | List registries available from a workspace. |
| | List Models in Registry | List available models in a model registry. |
| | List Model Versions of Registered Model | List versions of a registry stored model. |
| | List Model Version Artifacts | Retrieve the artifacts (usually files) for a model stored in a model registry. |
| | Upload Model from Registry | Upload a model and artifacts stored in a model registry into a Wallaroo workspace. |

Model Registry Operations

The following sections link to guides and information on setting up a model registry (also known as an artifact registry).

Create Model Registry

See Model serving with Azure Databricks for setting up a model registry service using Azure Databricks.

The following steps create an Access Token used to authenticate to an Azure Databricks Model Registry.

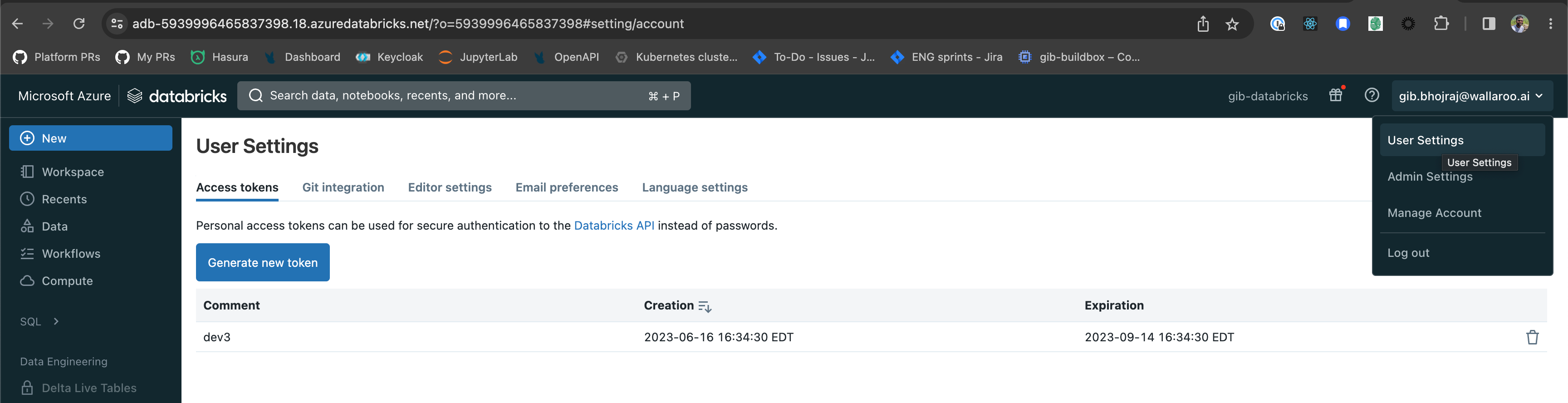

- Log into the Azure Databricks workspace.

- From the upper right corner access the User Settings.

- From the Access tokens, select Generate new token.

- Specify any token description and lifetime. Once complete, select Generate.

- Copy the token and store it in a secure place. Once the Generate New Token dialog is closed, the token will not be retrievable.

The MLflow Model Registry provides a method of setting up a model registry service. Full details can be found at the MLflow Registry Guides.

A generic MLFlow model registry requires no token.

Wallaroo Registry Operations

- Connect Model Registry to Wallaroo: This details the link and connection information to an existing MLFlow registry service. Note that this does not create an MLFlow registry service, but adds the connection and credentials to Wallaroo so that the MLFlow registry service can be used by other entities in the Wallaroo instance.

- Add a Registry to a Workspace: Add the created Wallaroo Model Registry to a workspace to make it available to other workspace members.

- Remove a Registry from a Workspace: Remove the link between a Wallaroo Model Registry and a Wallaroo workspace.

Connect Model Registry to Wallaroo

MLFlow Registry connection information is added to a Wallaroo instance through the Wallaroo.Client.create_model_registry method.

Connect Model Registry to Wallaroo Parameters

| Parameter | Type | Description |
|---|---|---|
| name | string (Required) | The name of the MLFlow Registry service. |
| token | string (Required) | The authentication token used to authenticate to the MLFlow Registry. |
| url | string (Required) | The URL of the MLFlow registry service. |

Connect Model Registry to Wallaroo Return

The following is returned when an MLFlow Registry is successfully created.

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the MLFlow Registry service. |
| URL | String | The URL for connecting to the service. |
| Workspaces | List[String] | The names of all workspaces this registry was added to. |
| Created At | DateTime | When the registry was added to the Wallaroo instance. |
| Updated At | DateTime | When the registry was last updated. |

Note that the token is not displayed for security reasons.

Connect Model Registry to Wallaroo Example

The following example creates a Wallaroo MLFlow Registry with the name ExampleNotebook stored in a sample Azure Databricks environment.

wl.create_model_registry(name="ExampleNotebook",
                         token="abcdefg-3",
                         url="https://abcd-123489.456.azuredatabricks.net")

| Field | Value |
|---|---|
| Name | ExampleNotebook |
| URL | https://abcd-123489.456.azuredatabricks.net |
| Workspaces | sample.user@wallaroo.ai - Default Workspace |
| Created At | 2023-27-Jun 13:57:26 |
| Updated At | 2023-27-Jun 13:57:26 |
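The example above hard-codes the token for illustration. As a minimal sketch, the token could instead be read from an environment variable so that it never appears in a notebook or script. The helper name `connect_registry_from_env` and the variable name `REGISTRY_TOKEN` are hypothetical, and `wl` is assumed to be a connected Wallaroo client exposing `create_model_registry` with the parameters listed above.

```python
import os


def connect_registry_from_env(wl, name, url, token_env="REGISTRY_TOKEN"):
    """Create a registry connection using a token taken from the environment.

    `wl` is assumed to be a connected Wallaroo client; `token_env` is a
    hypothetical environment variable name chosen for this sketch.
    """
    token = os.environ.get(token_env)
    if not token:
        # Fail early rather than sending an empty token to the registry.
        raise RuntimeError(f"Environment variable {token_env} is not set")
    return wl.create_model_registry(name=name, token=token, url=url)
```

A call such as `connect_registry_from_env(wl, "ExampleNotebook", "https://abcd-123489.456.azuredatabricks.net")` would then behave like the example above, provided the variable is set before the call.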

Add Registry to Workspace

Registries are assigned to a Wallaroo workspace with the Wallaroo.Registry.add_registry_to_workspace method. This allows members of the workspace to access the registry connection. A registry can be associated with one or more workspaces.

Add Registry to Workspace Parameters

| Parameter | Type | Description |
|---|---|---|
| workspace_id | Integer (Required) | The numerical identifier of the workspace. |

Add Registry to Workspace Returns

The following is returned when an MLFlow Registry is successfully added to a workspace.

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the MLFlow Registry service. |
| URL | String | The URL for connecting to the service. |
| Workspaces | List[String] | The names of all workspaces this registry was added to. |
| Created At | DateTime | When the registry was added to the Wallaroo instance. |
| Updated At | DateTime | When the registry was last updated. |

Add Registry to Workspace Example

registry.add_registry_to_workspace(workspace_id=workspace_id)

| Field | Value |
|---|---|
| Name | ExampleNotebook |
| URL | https://abcd-123489.456.azuredatabricks.net |
| Workspaces | sample.user@wallaroo.ai - Default Workspace |
| Created At | 2023-27-Jun 13:57:26 |
| Updated At | 2023-27-Jun 13:57:26 |
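Because a registry can be associated with more than one workspace, the same call can be repeated per workspace. The following is a small sketch under that assumption; the helper name `add_registry_to_workspaces` is hypothetical, and `registry` is assumed to be a registry object exposing `add_registry_to_workspace(workspace_id=...)` as shown above.

```python
def add_registry_to_workspaces(registry, workspace_ids):
    """Add one registry connection to each workspace in `workspace_ids`.

    Returns the value of each add_registry_to_workspace call, in order.
    """
    results = []
    for workspace_id in workspace_ids:
        # One call per workspace; each call uses the documented keyword.
        results.append(registry.add_registry_to_workspace(workspace_id=workspace_id))
    return results
```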

Remove Registry from Workspace

Registries are removed from a Wallaroo workspace with the Registry remove_registry_from_workspace method.

Remove Registry from Workspace Parameters

| Parameter | Type | Description |
|---|---|---|
| workspace_id | Integer (Required) | The numerical identifier of the workspace. |

Remove Registry from Workspace Return

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the MLFlow Registry service. |
| URL | String | The URL for connecting to the service. |
| Workspaces | List[String] | A list of workspaces by name that still contain the registry. |
| Created At | DateTime | When the registry was added to the Wallaroo instance. |
| Updated At | DateTime | When the registry was last updated. |

Remove Registry from Workspace Example

registry.remove_registry_from_workspace(workspace_id=workspace_id)

| Field | Value |
|---|---|
| Name | JeffRegistry45 |
| URL | https://sample.registry.azuredatabricks.net |
| Workspaces | john.hummel@wallaroo.ai - Default Workspace |
| Created At | 2023-17-Jul 17:56:52 |
| Updated At | 2023-17-Jul 17:56:52 |

Wallaroo Registry Model Operations

- List Registries in a Workspace: List the available registries in the current workspace.

- List Models: List the models in a registry.

- Upload Model: Upload a version of an ML Model from the Registry to a Wallaroo workspace.

- List Model Versions: List the versions of a particular model.

- Remove Registry from Workspace: Remove a specific Registry configuration from a specific workspace.

List Registries in a Workspace

Registries associated with a workspace are listed with the Wallaroo.Client.list_model_registries() method. This lists all registries associated with the current workspace.

List Registries in a Workspace Parameters

None

List Registries in a Workspace Returns

A List of Registries with the following fields.

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the MLFlow Registry service. |
| URL | String | The URL for connecting to the service. |
| Created At | DateTime | When the registry was added to the Wallaroo instance. |
| Updated At | DateTime | When the registry was last updated. |

List Registries in a Workspace Example

wl.list_model_registries()

| name | registry url | created at | updated at |
|---|---|---|---|
| gib | https://sampleregistry.wallaroo.ai | 2023-27-Jun 03:22:46 | 2023-27-Jun 03:22:46 |
| ExampleNotebook | https://sampleregistry.wallaroo.ai | 2023-27-Jun 13:57:26 | 2023-27-Jun 13:57:26 |

List Models in a Registry

The models available to the Wallaroo instance through the MLFlow Registry are listed with the Wallaroo.Registry.list_models() method.

List Models in a Registry Parameters

None

List Models in a Registry Returns

A List of models with the following fields.

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the model. |
| Registry User | String | The user account that is tied to the registry service for this model. |
| Versions | int | The number of versions for the model, starting at 0. |
| Created At | DateTime | When the model was added to the registry. |
| Updated At | DateTime | When the model was last updated. |

List Models in a Registry Example

registry.list_models()

| Name | Registry User | Versions | Created At | Updated At |
|---|---|---|---|---|
| testmodel | sample.user@wallaroo.ai | 0 | 2023-16-Jun 14:38:42 | 2023-16-Jun 14:38:42 |
| testmodel2 | sample.user@wallaroo.ai | 0 | 2023-16-Jun 14:41:04 | 2023-16-Jun 14:41:04 |
| wine_quality | sample.user@wallaroo.ai | 2 | 2023-16-Jun 15:05:53 | 2023-16-Jun 15:09:57 |

Retrieve Specific Model Details from the Registry

Details for a specific model are retrieved by calling Wallaroo.Registry.list_models() and indexing into the returned list, which saves the selected element as a Registered Model object.

The following returns the most recent model added to the MLFlow Registry service.

mlflow_model = registry.list_models()[-1]

mlflow_model

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the model. |
| Registry User | String | The user account that is tied to the registry service for this model. |
| Versions | int | The number of versions for the model, starting at 0. |
| Created At | DateTime | When the model was added to the registry. |
| Updated At | DateTime | When the model was last updated. |

List Model Versions of Registered Model

MLFlow registries can contain multiple versions of an ML Model. These are listed with the Registered Model versions attribute. The versions are listed in reverse order of insertion, with the most recent model version in position 0.

List Model Versions of Registered Model Parameters

None

List Model Versions of Registered Model Returns

A List of the Registered Model Versions with the following fields.

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the model. |
| Version | int | The version number. The higher numbers are the most recent. |
| Description | String | The registered model’s description from the MLFlow Registry service. |

List Model Versions of Registered Model Example

The following will return the most recent model added to the MLFlow Registry service and list its versions.

mlflow_model = registry.list_models()[-1]

mlflow_model.versions

| Name | Version | Description |
|---|---|---|
| wine_quality | 2 | None |
| wine_quality | 1 | None |
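Since the most recent version sits in position 0, and a registered model can report zero versions (as `testmodel` does in the listing earlier), selecting the latest version benefits from a guard. The following is a minimal sketch using only the accesses shown in this guide (`list_models()`, list indexing, and the `versions` attribute); the helper name `latest_model_version` is hypothetical.

```python
def latest_model_version(registry):
    """Return the newest version of the most recently added registry model.

    Assumes `registry.list_models()` returns a list and that each model's
    `versions` attribute is a list with the newest version at index 0.
    """
    models = registry.list_models()
    if len(models) == 0:
        raise LookupError("The registry contains no models")
    mlflow_model = models[-1]  # most recently added model
    versions = mlflow_model.versions
    if len(versions) == 0:
        raise LookupError("The selected model has no versions")
    return versions[0]  # position 0 holds the most recent version
```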

List Model Version Artifacts

Artifacts belonging to an MLFlow registry model are listed with the Model Version list_artifacts() method. This returns all artifacts for the model.

List Model Version Artifacts Parameters

None

List Model Version Artifacts Returns

A List of artifacts with the following fields.

| Field | Type | Description |
|---|---|---|
| file_name | String | The name assigned to the artifact. |
| file_size | String | The size of the artifact in bytes. |
| full_path | String | The path of the artifact. This will be used to upload the artifact to Wallaroo. |

List Model Version Artifacts Example

The following will list the artifacts in a single registry model.

single_registry_model.versions[0].list_artifacts()

Upload a Model from a Registry

Models are uploaded from an MLFlow Registry to the Wallaroo workspace with the Wallaroo.Registry.upload_model method.

Upload a Model from a Registry Parameters

| Parameter | Type | Description |
|---|---|---|
| name | string (Required) | The name to assign the model once uploaded. Model names are unique within a workspace. Models assigned the same name as an existing model will be uploaded as a new model version. |
| path | string (Required) | The full path to the model artifact in the registry. |
| framework | string (Required) | The Wallaroo model Framework. See Model Uploads and Registrations Supported Frameworks. |
| input_schema | pyarrow.lib.Schema (Required for non-native runtimes) | The input schema in Apache Arrow schema format. |
| output_schema | pyarrow.lib.Schema (Required for non-native runtimes) | The output schema in Apache Arrow schema format. |

Upload a Model from a Registry Returns

The registry model details as follows.

| Field | Type | Description |
|---|---|---|
| Name | String | The name of the model. |
| Version | String | The version registered in the Wallaroo instance in UUID format. |
| File Name | String | The file name associated with the ML Model in the Wallaroo instance. |
| SHA | String | The model's hash value. |
| Status | String | The status of the model, for example pending_conversion, converting, ready, or error. |
| Image Path | String | The image used for the containerization of the model. |
| Updated At | DateTime | When the model was last updated. |

Upload a Model from a Registry Example

The following uploads the model artifact at the given registry path into the current Wallaroo workspace with the SKLEARN framework.

import pyarrow as pa
from wallaroo.framework import Framework

# Input: a fixed-size list of 4 float32 values per inference.
input_schema = pa.schema([
    pa.field('inputs', pa.list_(pa.float32(), list_size=4))
])

# Output: a single int32 prediction.
output_schema = pa.schema([
    pa.field('predictions', pa.int32())
])

model = registry.upload_model(
    name="sklearnonnx",
    path="https://sampleregistry.wallaroo.ai/api/2.0/dbfs/read?path=/databricks/mlflow-registry/9f38797c1dbf4e7eb229c4011f0f1f18/models/testmodel2/model.pkl",
    framework=Framework.SKLEARN,
    input_schema=input_schema,
    output_schema=output_schema)

| Field | Value |
|---|---|
| Name | sklearnonnx |
| Version | 63bd932d-320d-4084-b972-0cfe1a943f5a |
| File Name | model.pkl |
| SHA | 970da8c178e85dfcbb69fab7bad0fb58cd0c2378d27b0b12cc03a288655aa28d |
| Status | pending_conversion |
| Image Path | None |
| Updated At | 2023-05-Jul 19:14:49 |

Retrieve Model Status

The model status is retrieved with the Model status() method.

Retrieve Model Status Parameters

None

Retrieve Model Status Returns

| Field | Type | Description |
|---|---|---|
| status | String | The current status of the uploaded model, for example converting, ready, or error. |

Retrieve Model Status Example

The following demonstrates checking the status in a while loop until the model shows either ready or error.

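import time

# Poll every 3 seconds until the model reaches a terminal status.
while model.status() != "ready" and model.status() != "error":
    print(model.status())
    time.sleep(3)

# Print the final status once the loop exits.
print(model.status())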

converting

converting

ready
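The loop above runs indefinitely if the model never reaches ready or error. As a hedged sketch, the same polling can be wrapped in a helper with a timeout. The helper name `wait_for_model` and its default values are hypothetical; the only assumption about `model` is that it exposes the `status()` method described above.

```python
import time


def wait_for_model(model, timeout_s=600.0, poll_s=3.0):
    """Poll model.status() until it is "ready" or "error", or time out.

    Returns the terminal status string. Raises TimeoutError if neither
    terminal status is seen within `timeout_s` seconds.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        status = model.status()  # call once per iteration
        if status in ("ready", "error"):
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Model still in status {status!r} after {timeout_s} seconds")
        time.sleep(poll_s)
```

Calling `status()` once per iteration also avoids the two back-to-back status requests made by the loop condition in the example above.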