Wallaroo MLOps API Essentials Guide: Pipeline Edge Publishing Management

Wallaroo pipelines can be published to an Edge Open Container Initiative (OCI) Registry Service, known here as the Edge Registry Service, as container images. This allows Wallaroo pipelines to be deployed in other environments, such as Docker or Kubernetes, with all of the pipeline's models. When deployed, these pipelines can perform inferences from the ML models exactly as if they were deployed as part of a Wallaroo instance.

When a pipeline is updated with new model steps or deployment configurations, the updated pipeline is republished to the Edge Registry as a new repo and version. This allows DevOps engineers to update a Wallaroo pipeline in any container-supporting environment with the new versions of the pipeline.

Pipeline Publishing Flow

A typical ML Model and Pipeline deployment to Wallaroo Ops and to remote locations as a Wallaroo Inference server is as follows:

- Components:

- Wallaroo Ops: The Wallaroo Ops provides the backbone services for ML Model deployment. This is where ML models are uploaded, pipelines created and deployed for inferencing, pipelines published to OCI compliant registries, and other functions.

- Wallaroo Inference Server: A remote deployment of a published Wallaroo pipeline with the Wallaroo Inference Engine outside the Wallaroo Ops instance. When the edge name is added to a Wallaroo publish, the Wallaroo Inference Server’s inference logs are submitted to the Wallaroo Ops instance. These inference logs are stored as part of the Wallaroo pipeline the remote deployment is published from.

- DevOps:

- Add Edge Publishing and Edge Observability to the Wallaroo Ops center. See Edge Deployment Registry Guide for details on updating the Wallaroo instance with Edge Publishing and Edge Observability.

- Data Scientists:

- Develop and train models.

- Test their deployments in Wallaroo Ops Center as Pipelines with:

- Pipeline Steps: The models that are part of the inference flow.

- Pipeline Deployment Configurations: CPUs, RAM, GPU, and Architecture settings to run the pipeline.

- Publish the Pipeline from the Wallaroo Ops to an OCI Registry: Store an image version of the Pipeline with models and pipeline configuration into the OCI Registry set by the DevOps engineers as the Wallaroo Edge Registry Service.

- DevOps:

- Retrieve the new or updated Wallaroo published pipeline from the Wallaroo Edge Registry Service.

- (Optional): Add an edge to the Wallaroo publish. This provides the EDGE_BUNDLE with the credentials for the Wallaroo Inference Server to transmit its inference result logs back to the Wallaroo Ops instance. These inference logs are added to the originating Wallaroo pipeline, labeled with the metadata.partition being the name of the edge deployed Wallaroo Inference Server. For more details, see Wallaroo SDK Essentials Guide: Pipeline Edge Publication: Edge Observability.

- Deploy the Pipeline as a Wallaroo Inference Server as a Docker or Kubernetes container, updating the resource allocations as part of the Helm chart, Docker Compose file, etc.

Enable Wallaroo Edge Registry

Set Edge Registry Service

Wallaroo pipeline publishes, also known as Wallaroo Servers, are automatically routed to the Edge Open Container Initiative (OCI) Registry Service registered in the Wallaroo instance. This is enabled either through the Kots Administrative Dashboard or through a helm chart setting. From here on, it is referred to as the Edge Registry Service.

Set Edge Registry Service through Kots

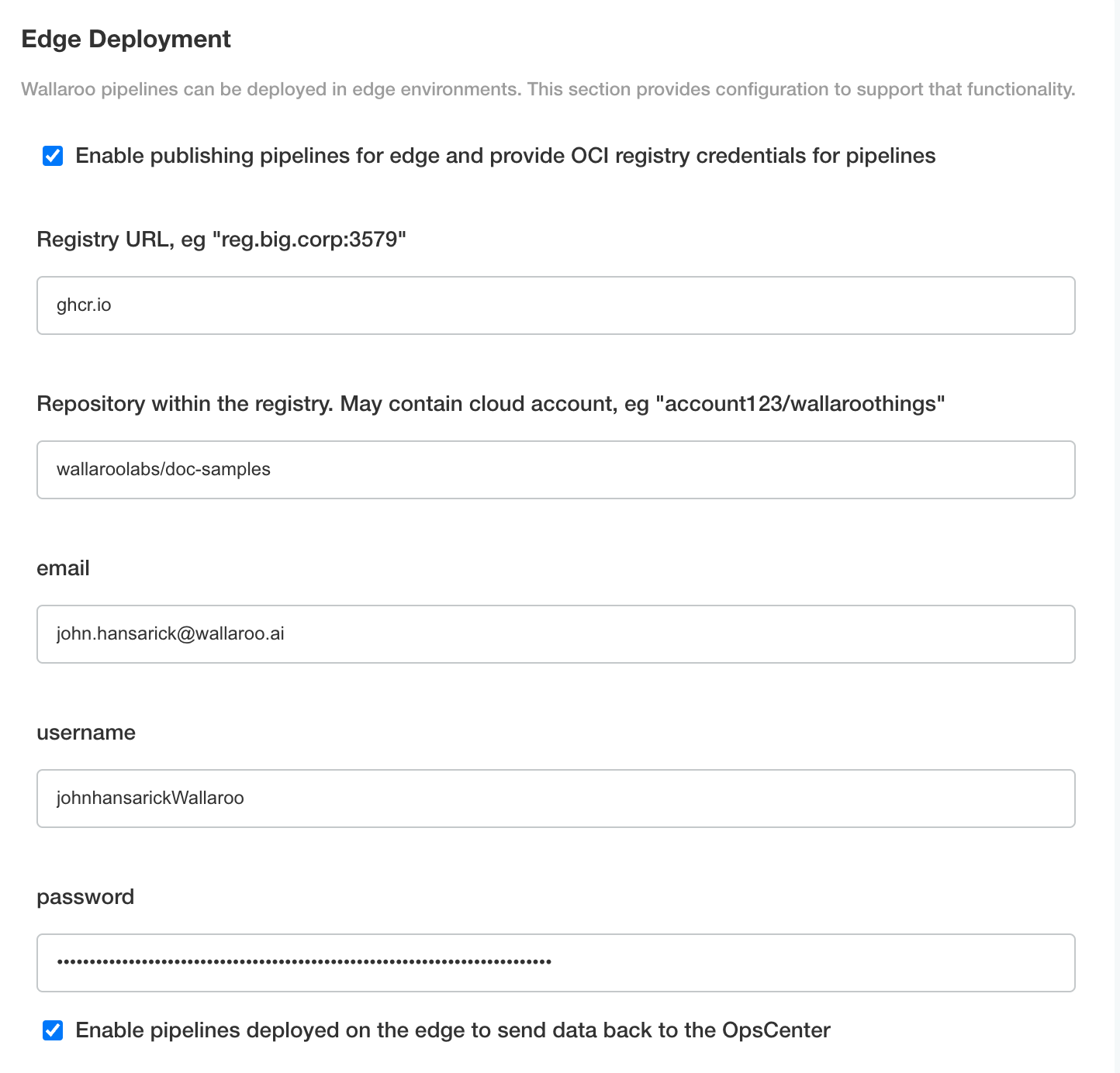

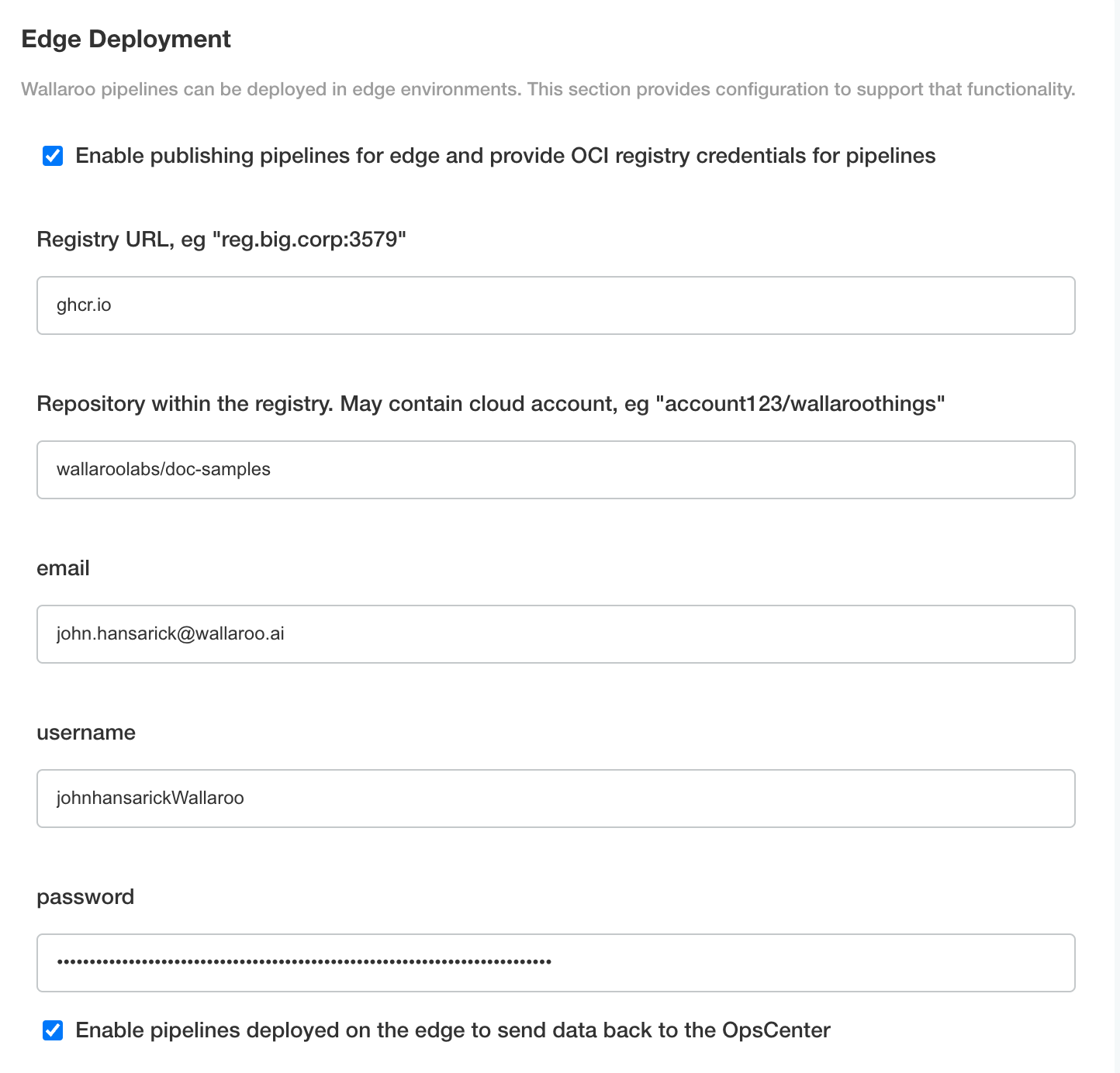

To set the Edge Registry Settings through the Kots Administrative Dashboard:

Launch the Kots Administrative Dashboard using the following command, replacing the --namespace parameter with the Kubernetes namespace for the Wallaroo instance:

kubectl kots admin-console --namespace wallaroo

Open a browser at the URL detailed in the step above and authenticate using the console password set as detailed in the Wallaroo Install Guides.

From the top menu, select Config then scroll to Edge Deployment.

Enable Provide OCI registry credentials for pipelines.

Enter the following:

- Registry URL: The address of the registry service. For example: us-west1-docker.pkg.dev.

- email: The email address of the user account used to authenticate to the service.

- username: The account used to authenticate to the registry service.

- password: The password or token used to authenticate to the registry service.

- To enable edge observability, enable Enable pipelines deployed on the edge to send data back to the OpsCenter.

Save the updated configuration, then deploy it. Once complete, the edge registry settings will be available.

Set Edge Registry Service through Helm

The helm settings for adding the Edge Server configuration details are set through the ociRegistry element, with the following settings.

- ociRegistry: Sets the Edge Server registry information.

  - enabled: true enables the Edge Server registry information, false disables it.

  - registry: The registry url. For example: reg.big.corp:3579.

  - repository: The repository within the registry. This may include the cloud account, or the full path where the Wallaroo published pipelines should be kept. For example: account123/wallaroo/pipelines.

  - email: Optional field to track the email address of the registry credential.

  - username: The username to the registry. This may vary based on the provider. For example, GCP Artifact Registry with service accounts uses the username _json_key_base64 with the password as a base64 processed token of the credential information.

  - password: The password or token for the registry service.
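The settings above can be assembled programmatically before they are handed to Helm. The following is a minimal sketch, not an official Wallaroo tool: the function name oci_registry_values is hypothetical, the credentials are placeholders, and it assumes ociRegistry sits at the top level of the values file. Because JSON is valid YAML, the printed text can be saved and used as a values file.

```python
import json


def oci_registry_values(registry, repository, username, password,
                        email=None, enabled=True):
    """Build the ociRegistry settings as a plain dict.

    Hypothetical helper; assumes ociRegistry is a top-level values key.
    """
    settings = {
        "enabled": enabled,
        "registry": registry,
        "repository": repository,
        "username": username,
        "password": password,
    }
    # email is optional, so it is only written when provided.
    if email is not None:
        settings["email"] = email
    return {"ociRegistry": settings}


# Placeholder credentials; the registry and repository reuse the examples above.
values = oci_registry_values(
    registry="reg.big.corp:3579",
    repository="account123/wallaroo/pipelines",
    username="registry-user",
    password="registry-token",
)

# JSON is a subset of YAML, so this output is usable as a Helm values file.
print(json.dumps(values, indent=2))
```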

Set Edge Observability Service

Edge Observability allows published Wallaroo Servers to communicate with the Wallaroo Ops center to update their associated Wallaroo Pipeline with inference results, visible in the Pipeline logs.

The edge sites will report their inference results to the address $WALLAROODOMAIN/edge. For example: If the Wallaroo Domain Name is wallaroo.example.com, then the Edge Observability Service address is wallaroo.example.com/edge.
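The address rule above is simple enough to express in one line. This is a small sketch with a hypothetical function name, shown only to make the rule concrete:

```python
def edge_observability_address(wallaroo_domain):
    """Return the address edge sites report to: the Wallaroo domain plus /edge."""
    # Trim whitespace and any trailing slash so the result has exactly one separator.
    return f"{wallaroo_domain.strip().rstrip('/')}/edge"


address = edge_observability_address("wallaroo.example.com")
print(address)
```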

Set Edge Observability Service through Kots

To enable Edge Observability using the Kots Administrative Dashboard for kots installed instances of Wallaroo Ops:

Launch the Kots Administrative Dashboard using the following command, replacing the --namespace parameter with the Kubernetes namespace for the Wallaroo instance:

kubectl kots admin-console --namespace wallaroo

Open a browser at the URL detailed in the step above and authenticate using the console password set as detailed in the Wallaroo Install Guides.

Access Config, scroll to Edge Deployment, and enable the option Enable pipelines deployed on the edge to send data back to the OpsCenter.

Save the updated configuration, then deploy it. Once complete, the edge observability service is available.

Set Edge Observability Service through Helm

To enable the Edge Observability Service for Wallaroo Ops Helm based installation, include the following variables for the helm settings. For these instructions they are stored in local-values.yaml:

edgelb:
  enabled: true

pipelines:
  enabled: true

Update the Wallaroo Helm installation using the same version and channel as the Wallaroo Ops instance. For example, if updating Wallaroo Enterprise server, use the following:

helm upgrade wallaroo oci://registry.replicated.com/wallaroo/2025-2/wallaroo --version 2025.2.3-6588 --values local-values.yaml --timeout 10m --wait --wait-for-jobs

This process will take 5-15 minutes depending on other configuration options.

Registry Setup Guides

The following are short guides for setting up the credentials for different registry services. Refer to the registry documentation for full details.

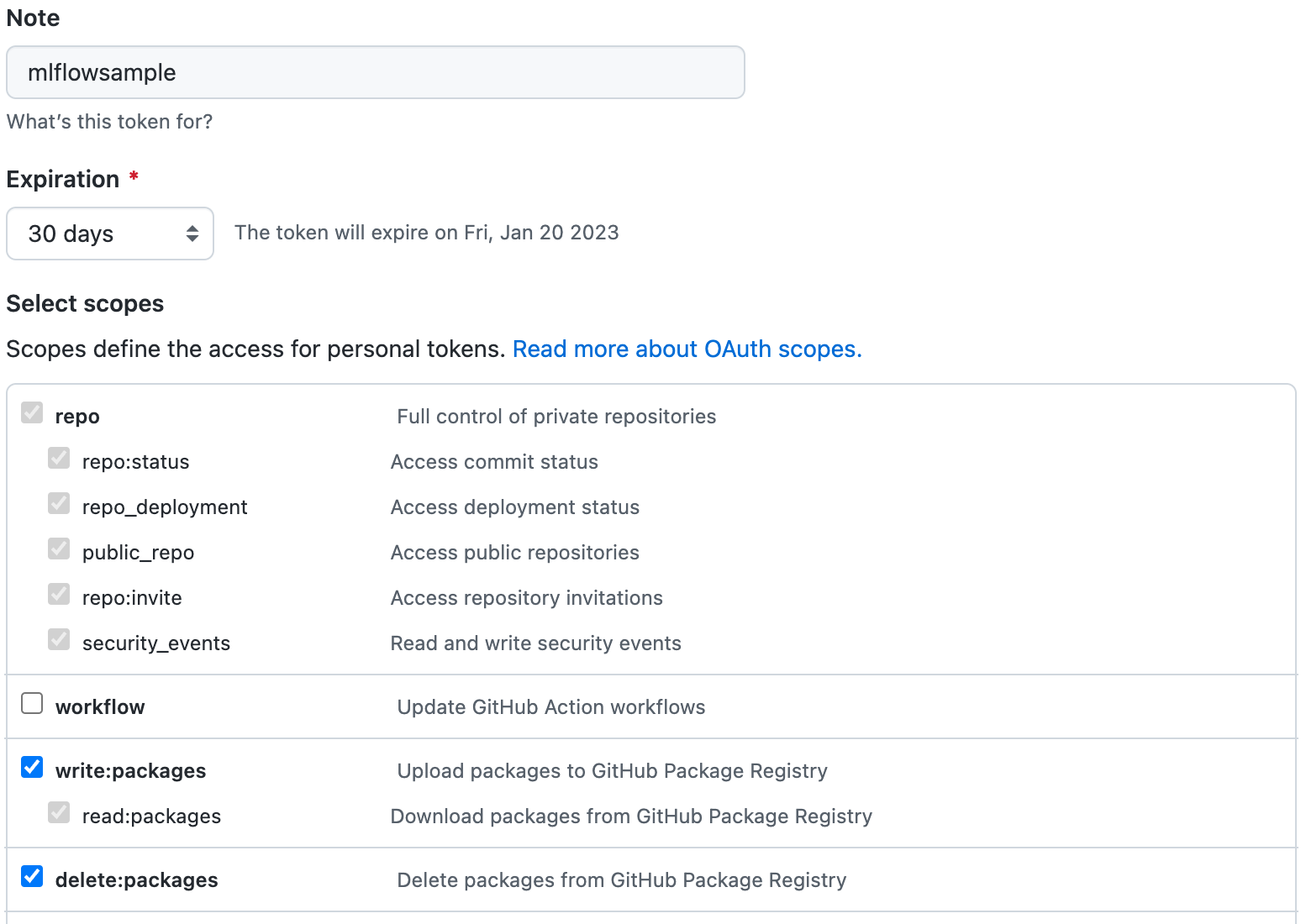

The following process is used with a GitHub Container Registry to create the authentication tokens for use with a Wallaroo instance’s Private Model Registry configuration.

See the GitHub Working with the Container registry for full details.

The following process is used to register a GitHub Container Registry with Wallaroo.

Create a new token as per the instructions from the Creating a personal access token (classic) guide. Note that a classic token is recommended for this process. Store this token in a secure location as it will not be able to be retrieved later from GitHub. Verify the following permissions are set:

- Select the write:packages scope to download and upload container images and read and write their metadata.

- Select the read:packages scope to download container images and read their metadata (selected by default when write:packages is selected).

- Select the delete:packages scope to delete container images.

Store the token in a secure location.

This can be tested with docker by logging into the specified registry. For example:

docker login -u {Github Username} --password {Your Token} ghcr.io/{Your Github Username or Organization}

The following process is an example of setting up an Artifact Registry Service with Google Cloud Platform (GCP) that is used to store containerized model images and retrieve them for use with Wallaroo.

Uploading and downloading containerized models to a Google Cloud Platform Registry follows these general steps.

Create the GCP registry.

Create a Service Account that will manage the registry service requests.

Assign appropriate Artifact Registry role to the Service Account

Retrieve the Service Account credentials.

Using either a specific user, or the Service Account credentials, upload the containerized model to the registry service.

Add the service account credentials to the Wallaroo instance’s containerized model private registry configuration.

Prerequisites

The commands below use the Google gcloud command line tool, and expect that a Google Cloud Platform account is created and the gcloud application is associated with the GCP Project for the organization.

For full details on the process and other methods, see the Google GCP documentation.

- Create the Registry

The following is based on the Create a repository using the Google Cloud CLI.

The following information is needed up front:

- $REPOSITORY_NAME: What to call the registry.

- $LOCATION: Where the repository will be located. GCP locations are derived through the gcloud artifacts locations list command.

- $DESCRIPTION: Any details to be displayed. Sensitive data should not be included.

The following example script will create a GCP registry with the minimum requirements.

REPOSITORY_NAME="YOUR NAME"
LOCATION="us-west1"
DESCRIPTION="My amazing registry."

gcloud artifacts repositories create "$REPOSITORY_NAME" \
    --repository-format=docker \
    --location="$LOCATION" \
    --description="$DESCRIPTION" \
    --async

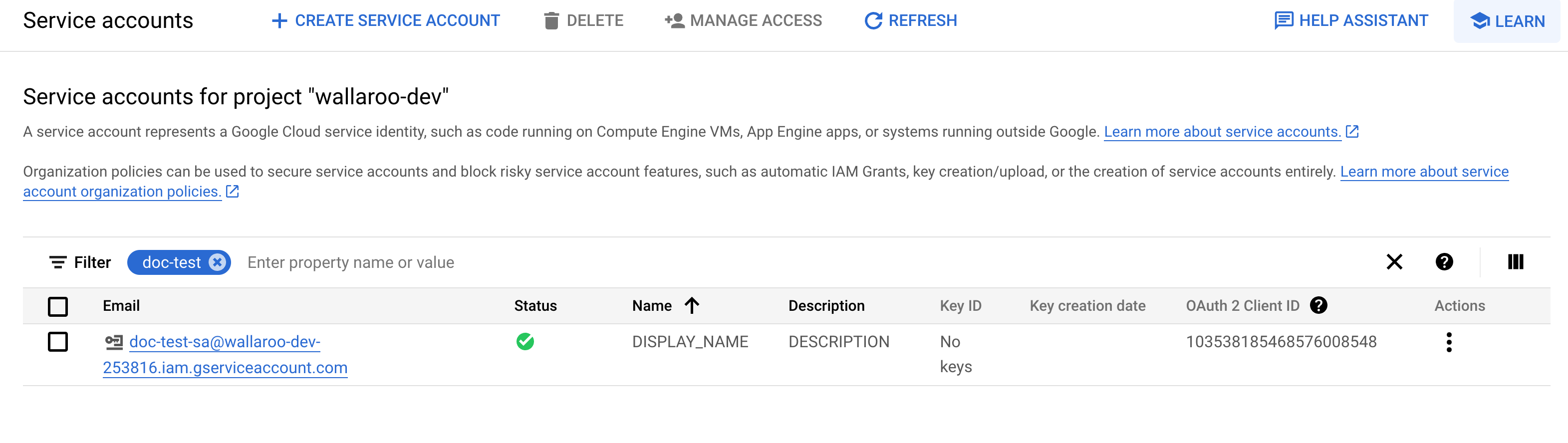

- Create a GCP Registry Service Account

The GCP Registry Service Account is used to manage the GCP registry service. The steps are detailed in the Google Create a service account guide.

The gcloud process for these steps is:

Connect the gcloud application to the organization's project.

PROJECT_ID="YOUR PROJECT ID"
gcloud config set project $PROJECT_ID

Create the service account with the following:

- The name of the service account.

- A description of its purpose.

- The name to show when displayed.

SA_NAME="YOUR SERVICE ACCOUNT NAME"
DESCRIPTION="Wallaroo container registry SA"
DISPLAY_NAME="Wallaroo the Roo"

gcloud iam service-accounts create $SA_NAME \
    --description="$DESCRIPTION" \
    --display-name="$DISPLAY_NAME"

- Assign Artifact Registry Role

Assign one or more of the following roles to the new service account based on the following criteria, as detailed in the Google GCP Repository Roles and Permissions Guide.

- For pkg.dev domains.

| Role | Description |
|---|---|
| Artifact Registry Reader (roles/artifactregistry.reader) | View and get artifacts, view repository metadata. |
| Artifact Registry Writer (roles/artifactregistry.writer) | Read and write artifacts. |
| Artifact Registry Repository Administrator (roles/artifactregistry.repoAdmin) | Read, write, and delete artifacts. |
| Artifact Registry Administrator (roles/artifactregistry.admin) | Create and manage repositories and artifacts. |

- For gcr.io repositories.

| Role | Description |
|---|---|
| Artifact Registry Create-on-push Writer (roles/artifactregistry.createOnPushWriter) | Read and write artifacts. Create gcr.io repositories. |
| Artifact Registry Create-on-push Repository Administrator (roles/artifactregistry.createOnPushRepoAdmin) | Read, write, and delete artifacts. Create gcr.io repositories. |

For this example, we will add the Artifact Registry Writer role to the Service Account created in the previous step.

Add the role to the service account, specifying the member as the new service account, and the role as the selected role. For this example, a pkg.dev repository is assumed for the Artifact Registry type.

# for pkg.dev
ROLE="roles/artifactregistry.writer"

# for gcr.io
#ROLE="roles/artifactregistry.createOnPushWriter"

gcloud projects add-iam-policy-binding \
    $PROJECT_ID \
    --member="serviceAccount:$SA_NAME@$PROJECT_ID.iam.gserviceaccount.com" \
    --role=$ROLE

- Authenticate to Repository

To push and pull images from the new registry, we'll use our new service account and authenticate through the local Docker application. See the GCP Push and pull images guide for details on using Docker and other methods to add artifacts to the GCP artifact registry.

- Set up Service Account Key

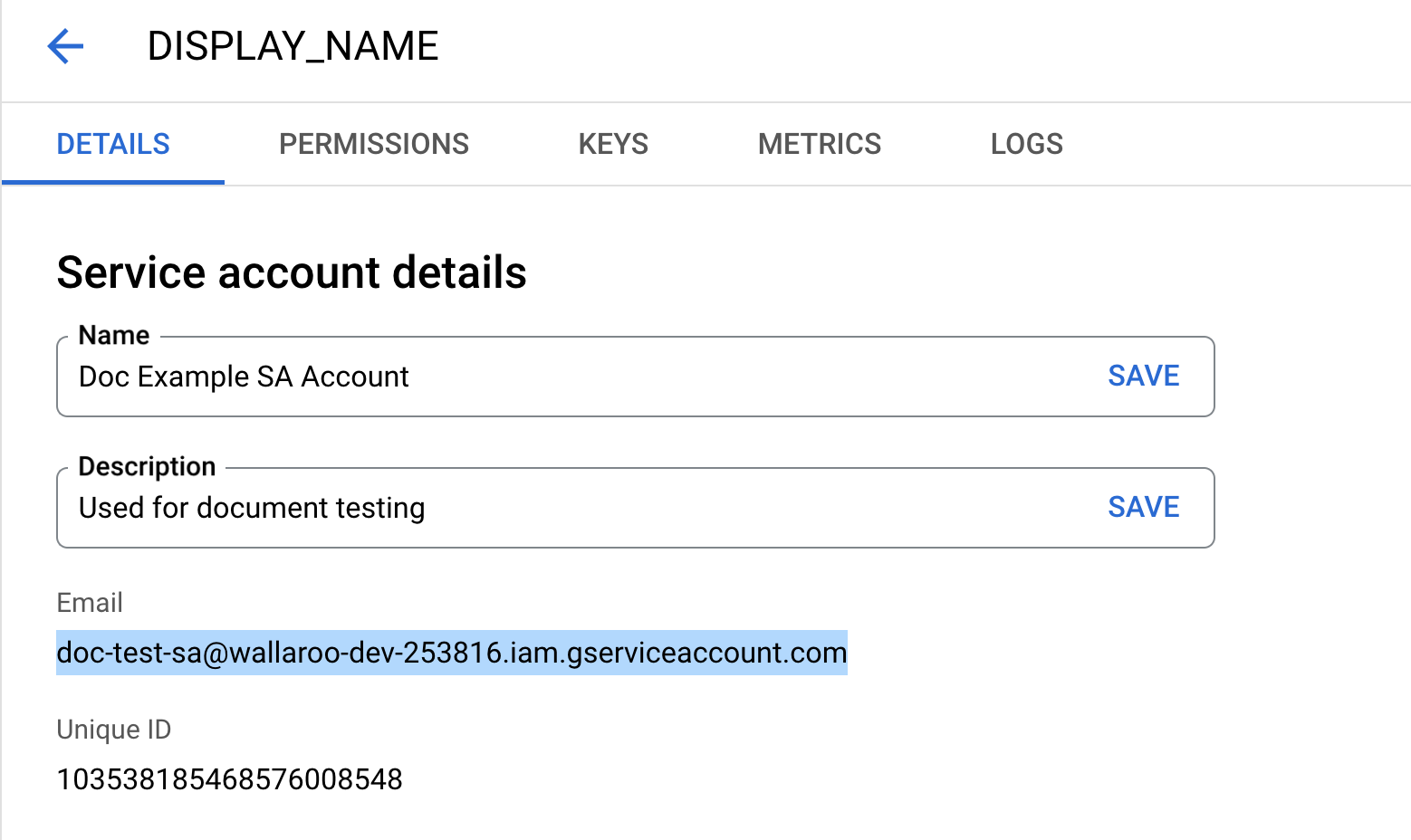

To set up the Service Account key, we’ll use the Google Console IAM & ADMIN dashboard based on the Set up authentication for Docker, using the JSON key approach.

From the GCP console, search for IAM & Admin.

Select Service Accounts.

Select the service account to generate keys for.

Select the Email address listed and store this for later steps with the key generated through this process.

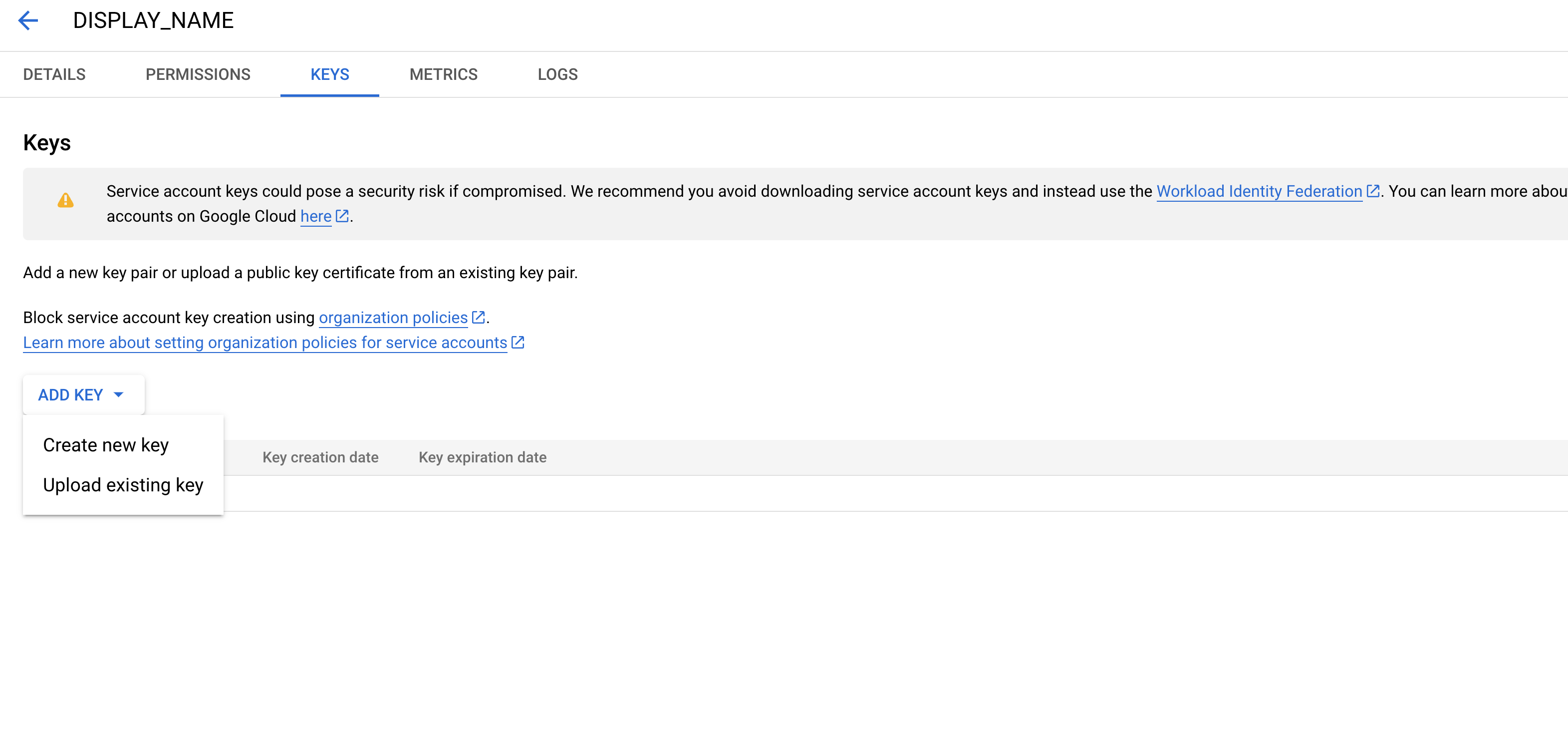

Select Keys, then Add Key, then Create new key.

Select JSON, then Create.

Store the key in a safe location.

- Convert SA Key to Base64

The key file downloaded in Set up Service Account Key needs to be converted to base64 with the following command, replacing the locations of KEY_FILE and KEYFILEBASE64:

KEY_FILE=~/.gcp-sa-registry-keyfile.json
KEYFILEBASE64=~/.gcp-sa-registry-keyfile-b64.json

base64 -i $KEY_FILE -o $KEYFILEBASE64

This base64 key is then used as the authentication token, with the username _json_key_base64.
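Where the base64 command line tool is unavailable or its flags differ by platform, the same conversion can be done with the Python standard library. The following is a minimal sketch under that assumption: the function name encode_key_file is hypothetical, and the demonstration uses a placeholder key file in a temporary directory rather than a real GCP key.

```python
import base64
import tempfile
from pathlib import Path


def encode_key_file(key_file, output_file):
    """Base64-encode a service account key file and save the encoded text."""
    encoded = base64.b64encode(Path(key_file).read_bytes()).decode("ascii")
    Path(output_file).write_text(encoded)
    return encoded


# Demonstration with a placeholder key; a real key is downloaded from the GCP console.
with tempfile.TemporaryDirectory() as tmp:
    key_file = Path(tmp) / "keyfile.json"
    key_file.write_text('{"type": "service_account"}')
    token = encode_key_file(key_file, Path(tmp) / "keyfile-b64.json")

print(token)
```

The returned string plays the same role as the contents of the KEYFILEBASE64 file above.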

This can be tested with docker by logging into the specified registry. For example:

token=$(cat $KEYFILEBASE64)
echo "$token" | docker login -u _json_key_base64 --password-stdin https://{GCP artifact registry region}.pkg.dev

Pipeline Publish Endpoints

Publish a Pipeline

Pipelines are published as images to the edge registry set in the Enable Wallaroo Edge Registry through the following endpoint.

- Endpoint:

/v1/api/pipelines/publish

- Parameters

- pipeline_id (Integer Required): The numerical id of the pipeline to publish to the edge registry.

- pipeline_version_id (Integer Required): The numerical id of the pipeline’s version to publish to the edge registry.

- model_config_ids (List Optional): The list of model config ids to include.

- Returns

- id (Integer): Numerical Wallaroo id of the published pipeline.

- pipeline_version_id (Integer): Numerical Wallaroo id of the pipeline version published.

- status: The status of the pipeline publish. Values include:

  - PendingPublish: The pipeline publication is about to be uploaded or is in the process of being uploaded.

  - Published: The pipeline is published and ready for use.

- created_at (String): When the published pipeline was created.

- updated_at (String): When the published pipeline was updated.

- created_by (String): The email address of the Wallaroo user that created the pipeline publish.

- pipeline_url (String): The URL of the published pipeline in the edge registry. May be null until the status is Published.

- engine_url (String): The URL of the published pipeline engine in the edge registry. May be null until the status is Published.

- helm: The Helm chart information including the following fields:

  - reference (String): The Helm reference.

  - chart (String): The Helm chart URL.

  - version (String): The Helm chart version.

- engine_config (wallaroo.deployment_config.DeploymentConfig): The pipeline configuration included with the published pipeline.

Publish a Pipeline Example

The following example shows how to publish a pipeline to the edge registry service associated with the Wallaroo instance.

import requests

# get the pipeline version
pipeline_version_id = pipeline.versions()[-1].id()
pipeline_id = pipeline.id()

headers = {
    "Authorization": wl.auth._bearer_token_str(),
    "Content-Type": "application/json",
}

url = f"{wl.api_endpoint}/v1/api/pipelines/publish"

# publish the selected pipeline version to the edge registry
response = requests.post(
    url,
    headers=headers,
    json={
        "pipeline_id": pipeline_id,
        "pipeline_version_id": pipeline_version_id,
        "model_config_ids": [],
    },
)
display(response.json())

{'id': 10,

'pipeline_version_id': 30,

'pipeline_version_name': 'ab7ab48f-9aab-4c3d-9f66-097477bd34bf',

'status': 'PendingPublish',

'created_at': '2023-08-28T14:54:54.14098+00:00',

'updated_at': '2023-08-28T14:54:54.14098+00:00',

'created_by': 'db364f8c-b866-4865-96b7-0b65662cb384',

'pipeline_url': None,

'engine_url': None,

'helm': None,

'engine_config': {'engine': {'resources': {'limits': {'cpu': 4.0,

'memory': '3Gi'},

'requests': {'cpu': 4.0, 'memory': '3Gi'}}},

'enginelb': {'resources': {'limits': {'cpu': 1.0, 'memory': '512Mi'},

'requests': {'cpu': 0.2, 'memory': '512Mi'}}},

'engineAux': {}}}
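The request in the example above can also be assembled by a small helper before it is sent. The following is a minimal sketch, not part of the Wallaroo SDK: the function name build_publish_request is hypothetical, and the endpoint and token values are placeholders. It only builds the URL, headers, and JSON body from the parameters listed for this endpoint.

```python
def build_publish_request(api_endpoint, bearer_token, pipeline_id,
                          pipeline_version_id, model_config_ids=None):
    """Assemble keyword arguments for a POST to the publish endpoint.

    Hypothetical helper for illustration; it sends nothing by itself.
    """
    return {
        "url": f"{api_endpoint.rstrip('/')}/v1/api/pipelines/publish",
        "headers": {
            "Authorization": bearer_token,
            "Content-Type": "application/json",
        },
        "json": {
            "pipeline_id": pipeline_id,
            "pipeline_version_id": pipeline_version_id,
            # Optional parameter; an empty list matches the example above.
            "model_config_ids": list(model_config_ids or []),
        },
    }


# Placeholder values; in a notebook these would come from wl and pipeline.
request_kwargs = build_publish_request(
    api_endpoint="https://wallaroo.example.com",
    bearer_token="Bearer EXAMPLE_TOKEN",
    pipeline_id=1,
    pipeline_version_id=30,
)
print(request_kwargs["url"])
```

With the requests library installed, the result can be sent with requests.post(**request_kwargs).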

Get Publish Status

The status of a created Wallaroo pipeline publish is available through the following endpoint.

- Endpoint:

/v1/api/pipelines/get_publish_status

- Parameters

- publish_id (Integer Required): The numerical id of the pipeline publish to retrieve.

- Returns

- id (Integer): Numerical Wallaroo id of the published pipeline.

- pipeline_version_id (Integer): Numerical Wallaroo id of the pipeline version published.

- status: The status of the pipeline publish. Values include:

  - PendingPublish: The pipeline publication is about to be uploaded or is in the process of being uploaded.

  - Published: The pipeline is published and ready for use.

- created_at (String): When the published pipeline was created.

- updated_at (String): When the published pipeline was updated.

- created_by (String): The email address of the Wallaroo user that created the pipeline publish.

- pipeline_url (String): The URL of the published pipeline in the edge registry. May be null until the status is Published.

- engine_url (String): The URL of the published pipeline engine in the edge registry. May be null until the status is Published.

- helm: The Helm chart information including the following fields:

  - reference (String): The Helm reference.

  - chart (String): The Helm chart URL.

  - version (String): The Helm chart version.

- engine_config (wallaroo.deployment_config.DeploymentConfig): The pipeline configuration included with the published pipeline.

Get Publish Status Example

The following example shows retrieving the status of a recently created pipeline publish.

# Publish Status
headers = {
    "Authorization": wl.auth._bearer_token_str(),
    "Content-Type": "application/json",
}

url = f"{wl.api_endpoint}/v1/api/pipelines/get_publish_status"

response = requests.post(
    url,
    headers=headers,
    json={"id": publish_id},
)
display(response.json())

{'id': 10,

'pipeline_version_id': 30,

'pipeline_version_name': 'ab7ab48f-9aab-4c3d-9f66-097477bd34bf',

'status': 'Published',

'created_at': '2023-08-28T14:54:54.14098+00:00',

'updated_at': '2023-08-28T14:54:54.14098+00:00',

'created_by': 'db364f8c-b866-4865-96b7-0b65662cb384',

'pipeline_url': 'ghcr.io/wallaroolabs/doc-samples/pipelines/edge-pipeline:ab7ab48f-9aab-4c3d-9f66-097477bd34bf',

'engine_url': 'ghcr.io/wallaroolabs/doc-samples/engine:v2023.3.0-main-3731',

'helm': {'reference': 'ghcr.io/wallaroolabs/doc-samples/charts@sha256:dbb46f807dbd75840cf1433daee51f4cc272791da5836366bfb8b2b863abf63b',

'chart': 'ghcr.io/wallaroolabs/doc-samples/charts/edge-pipeline',

'version': '0.0.1-ab7ab48f-9aab-4c3d-9f66-097477bd34bf'},

'engine_config': {'engine': {'resources': {'limits': {'cpu': 4.0,

'memory': '3Gi'},

'requests': {'cpu': 4.0, 'memory': '3Gi'}}},

'enginelb': {'resources': {'limits': {'cpu': 1.0, 'memory': '512Mi'},

'requests': {'cpu': 0.2, 'memory': '512Mi'}}},

'engineAux': {}}}
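Because a publish starts as PendingPublish and later becomes Published, a caller typically polls the status endpoint until the status changes. The following is a minimal sketch of such a loop, not a Wallaroo SDK feature: wait_for_publish and its parameters are hypothetical, and fetch_status stands in for any function that calls get_publish_status and returns the decoded JSON. The loop returns as soon as the status is anything other than PendingPublish, so the caller should still check the returned status.

```python
import time


def wait_for_publish(fetch_status, publish_id, timeout_s=600.0,
                     interval_s=5.0, sleep=time.sleep):
    """Poll fetch_status(publish_id) until the publish leaves PendingPublish."""
    deadline = time.monotonic() + timeout_s
    while True:
        publish = fetch_status(publish_id)
        if publish.get("status") != "PendingPublish":
            return publish
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"publish {publish_id} still pending after {timeout_s} seconds"
            )
        sleep(interval_s)


# Simulated responses so the sketch runs without a Wallaroo instance.
simulated = iter([
    {"id": 10, "status": "PendingPublish"},
    {"id": 10, "status": "PendingPublish"},
    {"id": 10, "status": "Published"},
])
result = wait_for_publish(lambda _id: next(simulated), 10, sleep=lambda s: None)
print(result["status"])
```

Passing the sleep function as a parameter keeps the loop testable; in real use the default time.sleep applies.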

List Publishes for a Pipeline

A list of publishes created for a specific pipeline is retrieved through the following endpoint.

- Endpoint

/v1/api/pipelines/list_publishes_for_pipeline

- Parameters

- pipeline_id (Integer Required): The numerical id of the pipeline to retrieve all publishes for.

- Returns a List of pipeline publishes with the following fields:

- id (Integer): Numerical Wallaroo id of the published pipeline.

- pipeline_version_id (Integer): Numerical Wallaroo id of the pipeline version published.

- status: The status of the pipeline publish. Values include:

  - PendingPublish: The pipeline publication is about to be uploaded or is in the process of being uploaded.

  - Published: The pipeline is published and ready for use.

- created_at (String): When the published pipeline was created.

- updated_at (String): When the published pipeline was updated.

- created_by (String): The email address of the Wallaroo user that created the pipeline publish.

- pipeline_url (String): The URL of the published pipeline in the edge registry. May be null until the status is Published.

- engine_url (String): The URL of the published pipeline engine in the edge registry. May be null until the status is Published.

- helm: The Helm chart information including the following fields:

  - reference (String): The Helm reference.

  - chart (String): The Helm chart URL.

  - version (String): The Helm chart version.

- engine_config (wallaroo.deployment_config.DeploymentConfig): The pipeline configuration included with the published pipeline.

List Publishes for a Pipeline Example

The following retrieves a list of all publishes for a specific pipeline.

headers = {
    "Authorization": wl.auth._bearer_token_str(),
    "Content-Type": "application/json",
}

url = f"{wl.api_endpoint}/v1/api/pipelines/list_publishes_for_pipeline"

response = requests.post(
    url,
    headers=headers,
    json={"pipeline_id": pipeline_id},
)
display(response.json())

{'pipelines': [{'id': 10,

'pipeline_version_id': 30,

'pipeline_version_name': 'ab7ab48f-9aab-4c3d-9f66-097477bd34bf',

'status': 'Published',

'created_at': '2023-08-28T14:54:54.14098+00:00',

'updated_at': '2023-08-28T14:54:54.14098+00:00',

'created_by': 'db364f8c-b866-4865-96b7-0b65662cb384',

'pipeline_url': 'ghcr.io/wallaroolabs/doc-samples/pipelines/edge-pipeline:ab7ab48f-9aab-4c3d-9f66-097477bd34bf',

'engine_url': 'ghcr.io/wallaroolabs/doc-samples/engine:v2023.3.0-main-3731',

'helm': {'reference': 'ghcr.io/wallaroolabs/doc-samples/charts@sha256:dbb46f807dbd75840cf1433daee51f4cc272791da5836366bfb8b2b863abf63b',

'chart': 'ghcr.io/wallaroolabs/doc-samples/charts/edge-pipeline',

'version': '0.0.1-ab7ab48f-9aab-4c3d-9f66-097477bd34bf'},

'engine_config': {'engine': {'resources': {'limits': {'cpu': 4.0,

'memory': '3Gi'},

'requests': {'cpu': 4.0, 'memory': '3Gi'}}},

'enginelb': {'resources': {'limits': {'cpu': 1.0, 'memory': '512Mi'},

'requests': {'cpu': 0.2, 'memory': '512Mi'}}},

'engineAux': {}}}]}
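The response above wraps the publishes in a pipelines key, and entries that are still pending may have no pipeline_url yet. The following sketch, with a hypothetical function name and made-up sample data, picks out the registry URLs of the publishes that are ready for use:

```python
def published_pipeline_urls(list_response):
    """Return pipeline_url for every entry whose status is Published."""
    return [
        publish["pipeline_url"]
        for publish in list_response.get("pipelines", [])
        if publish.get("status") == "Published" and publish.get("pipeline_url")
    ]


# Made-up sample in the same shape as the response shown above.
sample_response = {
    "pipelines": [
        {"id": 10, "status": "Published",
         "pipeline_url": "registry.example.com/pipelines/edge-pipeline:v1"},
        {"id": 11, "status": "PendingPublish", "pipeline_url": None},
    ]
}
urls = published_pipeline_urls(sample_response)
print(urls)
```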

Edge Observability

Edge Observability allows edge deployments of Wallaroo Server to transmit inference results back to the Wallaroo Ops center and become part of the pipeline’s logs. This is valuable for data scientists and MLOps engineers to retrieve edge deployment logs for use in model observability, drift, and other use cases.

Before starting, the Edge Observability Service must be enabled in the Wallaroo Ops center. See the Edge Deployment Registry Guide for details on enabling the Wallaroo Edge Deployment service.

Wallaroo Server edge observability is enabled when a new edge location is added to the pipeline publish. Each location has its own EDGE_BUNDLE settings, a Base64 encoded set of instructions informing the edge deployed Wallaroo Server on how to communicate with Wallaroo Ops center.

Add Publish Edge

Edges are added to an existing pipeline publish with the following endpoint.

- Endpoint

/v1/api/pipelines/add_edge_to_publish

- Parameters

- name (String Required): The name of the edge. This must be a unique value across all edges in the Wallaroo instance.

- pipeline_publish_id (Integer Required): The numerical identifier of the pipeline publish to add this edge to.

- tags (List[String] Optional): A list of optional tags.

- Returns

- id (Integer): The integer ID of the pipeline publish.

- created_at (String): The DateTime of the pipeline publish.

- docker_run_variables (String): The Docker variables in base64 encoded format that include the following: the BUNDLE_VERSION, EDGE_NAME, JOIN_TOKEN, OPSCENTER_HOST, PIPELINE_URL, and WORKSPACE_ID.

- engine_config (String): The Wallaroo wallaroo.deployment_config.DeploymentConfig for the pipeline.

- pipeline_version_id (Integer): The integer identifier of the pipeline version published.

- status (String): The status of the publish. Published is a successful publish.

- updated_at (DateTime): The DateTime when the pipeline publish was updated.

- user_images (List[String]): User images used in the pipeline publish.

- created_by (String): The UUID of the Wallaroo user that created the pipeline publish.

- engine_url (String): The URL for the published pipeline’s Wallaroo engine in the OCI registry.

- error (String): Any errors logged.

- helm (String): The helm chart, helm reference and helm version.

- pipeline_url (String): The URL for the published pipeline’s container in the OCI registry.

- pipeline_version_name (String): The UUID identifier of the pipeline version published.

- additional_properties (String): Any additional properties.

Add Publish Edge Example

headers = {
    "Authorization": wl.auth._bearer_token_str(),
    "Content-Type": "application/json",
}

edge_name = f"ccfraud-observability-api{suffix_random}"

url = f"{wl.api_endpoint}/v1/api/pipelines/add_edge_to_publish"

response = requests.post(
    url,
    headers=headers,
    json={
        "pipeline_publish_id": publish_id,
        "name": edge_name,
        "tags": [],
    },
)
display(response.json())

{'id': 9,

'pipeline_version_id': 17,

'pipeline_version_name': 'cd70ed84-2db7-4fc5-a766-482686ca3fcc',

'status': 'Published',

'created_at': '2023-10-30T19:04:16.758117+00:00',

'updated_at': '2023-10-30T19:04:16.758117+00:00',

'created_by': '1b26fc10-d0f9-4f92-a1a2-ab342b3d069e',

'pipeline_url': 'ghcr.io/wallaroolabs/doc-samples/pipelines/edge-pipeline:cd70ed84-2db7-4fc5-a766-482686ca3fcc',

'engine_url': 'ghcr.io/wallaroolabs/doc-samples/engines/proxy/wallaroo/ghcr.io/wallaroolabs/standalone-mini:v2023.4.0-main-4079',

'helm': {'reference': 'ghcr.io/wallaroolabs/doc-samples/charts@sha256:6f557685fb6e7509dcee4e09af1ece94df1dcc9886ef19a9adc6c015e9a7279b',

'chart': 'ghcr.io/wallaroolabs/doc-samples/charts/edge-pipeline',

'version': '0.0.1-cd70ed84-2db7-4fc5-a766-482686ca3fcc',

'values': {'edgeBundle': 'ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT1jY2ZyYXVkLW9ic2VydmFiaWxpdHktYXBpZnpkdgpleHBvcnQgSk9JTl9UT0tFTj1kYTM2Njk3Zi1iYWIyLTQyYTktYmNmNy03MTk5YWU0YWVkZjkKZXhwb3J0IE9QU0NFTlRFUl9IT1NUPWRvYy10ZXN0LmVkZ2Uud2FsbGFyb29jb21tdW5pdHkubmluamEKZXhwb3J0IFBJUEVMSU5FX1VSTD1naGNyLmlvL3dhbGxhcm9vbGFicy9kb2Mtc2FtcGxlcy9waXBlbGluZXMvZWRnZS1waXBlbGluZTpjZDcwZWQ4NC0yZGI3LTRmYzUtYTc2Ni00ODI2ODZjYTNmY2MKZXhwb3J0IFdPUktTUEFDRV9JRD05'}},

'engine_config': {'engine': {'resources': {'limits': {'cpu': 4.0,

'memory': '3Gi'},

'requests': {'cpu': 4.0, 'memory': '3Gi'}}},

'enginelb': {'resources': {'limits': {'cpu': 1.0, 'memory': '512Mi'},

'requests': {'cpu': 0.2, 'memory': '512Mi'}}},

'engineAux': {}},

'error': None,

'user_images': [],

'docker_run_variables': {'EDGE_BUNDLE': 'ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT1jY2ZyYXVkLW9ic2VydmFiaWxpdHktYXBpZnpkdgpleHBvcnQgSk9JTl9UT0tFTj1kYTM2Njk3Zi1iYWIyLTQyYTktYmNmNy03MTk5YWU0YWVkZjkKZXhwb3J0IE9QU0NFTlRFUl9IT1NUPWRvYy10ZXN0LmVkZ2Uud2FsbGFyb29jb21tdW5pdHkubmluamEKZXhwb3J0IFBJUEVMSU5FX1VSTD1naGNyLmlvL3dhbGxhcm9vbGFicy9kb2Mtc2FtcGxlcy9waXBlbGluZXMvZWRnZS1waXBlbGluZTpjZDcwZWQ4NC0yZGI3LTRmYzUtYTc2Ni00ODI2ODZjYTNmY2MKZXhwb3J0IFdPUktTUEFDRV9JRD05'}}
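The docker_run_variables field in the response above is a mapping of environment variable names to values, which suits Docker's -e KEY=VALUE option. The following is a minimal sketch with a hypothetical function name and a shortened placeholder bundle. It produces only the environment arguments; a complete docker run command needs further arguments, such as the image, that are not covered here.

```python
def docker_env_args(publish_response):
    """Turn docker_run_variables into a flat list of -e KEY=VALUE arguments."""
    args = []
    variables = publish_response.get("docker_run_variables") or {}
    for key, value in sorted(variables.items()):
        args.extend(["-e", f"{key}={value}"])
    return args


# Shortened placeholder response; a real EDGE_BUNDLE is much longer.
sample_response = {
    "id": 9,
    "status": "Published",
    "docker_run_variables": {"EDGE_BUNDLE": "ZXhhbXBsZQ=="},
}
env_args = docker_env_args(sample_response)
print(env_args)
```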

Remove Publish Edge

Edges are removed from an existing pipeline publish with the following endpoint.

- Endpoint

/v1/api/pipelines/remove_edge_from_publish

- Parameters

- name (String Required): The name of the edge. This must be a unique value across all edges in the Wallaroo instance.

- Returns

- null

Remove Publish Edge Example

The following example will add an edge to a pipeline publish, then remove the same edge.

# adding another edge to remove
headers = {
    "Authorization": wl.auth._bearer_token_str(),
    "Content-Type": "application/json",
}

edge_name2 = f"ccfraud-observability-api2{suffix_random}"

url = f"{wl.api_endpoint}/v1/api/pipelines/add_edge_to_publish"

response = requests.post(
    url,
    headers=headers,
    json={
        "pipeline_publish_id": publish_id,
        "name": edge_name2,
        "tags": [],
    },
)
display(response.json())

{'id': 10,

'pipeline_version_id': 17,

'pipeline_version_name': 'cd70ed84-2db7-4fc5-a766-482686ca3fcc',

'status': 'Published',

'created_at': '2023-10-30T19:50:32.164154+00:00',

'updated_at': '2023-10-30T19:50:32.164154+00:00',

'created_by': '1b26fc10-d0f9-4f92-a1a2-ab342b3d069e',

'pipeline_url': 'ghcr.io/wallaroolabs/doc-samples/pipelines/edge-pipeline:cd70ed84-2db7-4fc5-a766-482686ca3fcc',

'engine_url': 'ghcr.io/wallaroolabs/doc-samples/engines/proxy/wallaroo/ghcr.io/wallaroolabs/standalone-mini:v2023.4.0-main-4079',

'helm': {'reference': 'ghcr.io/wallaroolabs/doc-samples/charts@sha256:cfd0e77347ec46b6d3dec2c2cc83733c353c5b583428b5c6f4fca11277a2c43e',

'chart': 'ghcr.io/wallaroolabs/doc-samples/charts/edge-pipeline',

'version': '0.0.1-cd70ed84-2db7-4fc5-a766-482686ca3fcc',

'values': {'edgeBundle': 'ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT1jY2ZyYXVkLW9ic2VydmFiaWxpdHktYXBpMmtwd2wKZXhwb3J0IEpPSU5fVE9LRU49NWVmZmIyYzYtZTNmNi00MmY1LWFhYjAtMWE5YTNiN2YwMTIyCmV4cG9ydCBPUFNDRU5URVJfSE9TVD1kb2MtdGVzdC5lZGdlLndhbGxhcm9vY29tbXVuaXR5Lm5pbmphCmV4cG9ydCBQSVBFTElORV9VUkw9Z2hjci5pby93YWxsYXJvb2xhYnMvZG9jLXNhbXBsZXMvcGlwZWxpbmVzL2VkZ2UtcGlwZWxpbmU6Y2Q3MGVkODQtMmRiNy00ZmM1LWE3NjYtNDgyNjg2Y2EzZmNjCmV4cG9ydCBXT1JLU1BBQ0VfSUQ9OQ=='}},

'engine_config': {'engine': {'resources': {'limits': {'cpu': 4.0,

'memory': '3Gi'},

'requests': {'cpu': 4.0, 'memory': '3Gi'}}},

'enginelb': {'resources': {'limits': {'cpu': 1.0, 'memory': '512Mi'},

'requests': {'cpu': 0.2, 'memory': '512Mi'}}},

'engineAux': {}},

'error': None,

'user_images': [],

'docker_run_variables': {'EDGE_BUNDLE': 'ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT1jY2ZyYXVkLW9ic2VydmFiaWxpdHktYXBpMmtwd2wKZXhwb3J0IEpPSU5fVE9LRU49NWVmZmIyYzYtZTNmNi00MmY1LWFhYjAtMWE5YTNiN2YwMTIyCmV4cG9ydCBPUFNDRU5URVJfSE9TVD1kb2MtdGVzdC5lZGdlLndhbGxhcm9vY29tbXVuaXR5Lm5pbmphCmV4cG9ydCBQSVBFTElORV9VUkw9Z2hjci5pby93YWxsYXJvb2xhYnMvZG9jLXNhbXBsZXMvcGlwZWxpbmVzL2VkZ2UtcGlwZWxpbmU6Y2Q3MGVkODQtMmRiNy00ZmM1LWE3NjYtNDgyNjg2Y2EzZmNjCmV4cG9ydCBXT1JLU1BBQ0VfSUQ9OQ=='}}

# remove the edge
headers = {
    "Authorization": wl.auth._bearer_token_str(),
    "Content-Type": "application/json",
}

edge_name2 = f"ccfraud-observability-api2{suffix_random}"

url = f"{wl.api_endpoint}/v1/api/pipelines/remove_edge_from_publish"

response = requests.post(
    url,
    headers=headers,
    json={
        "name": edge_name2,
    },
)
display(response.json())

null

Edge Bundle Token TTL

When an edge is added to a pipeline publish, the field docker_run_variables contains a JSON value for edge devices to connect to the Wallaroo Ops instance. The settings are stored in the key EDGE_BUNDLE as a base64-encoded value that includes the following:

- BUNDLE_VERSION: The current version of the bundled Wallaroo pipeline.

- EDGE_NAME: The edge name as defined when created and added to the pipeline publish.

- JOIN_TOKEN: The one-time authentication token for authenticating to the Wallaroo Ops instance.

- OPSCENTER_HOST: The hostname of the Wallaroo Ops edge service. See Edge Deployment Registry Guide for full details on enabling pipeline publishing and edge observability to Wallaroo.

- PIPELINE_URL

- WORKSPACE_ID

For example:

{'edgeBundle': 'ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT14Z2ItY2NmcmF1ZC1lZGdlLXRlc3QKZXhwb3J0IEpPSU5fVE9LRU49MzE0OGFkYTUtMjg1YS00ZmNhLWIzYjgtYjUwYTQ4ZDc1MTFiCmV4cG9ydCBPUFNDRU5URVJfSE9TVD1kb2MtdGVzdC5lZGdlLndhbGxhcm9vY29tbXVuaXR5Lm5pbmphCmV4cG9ydCBQSVBFTElORV9VUkw9Z2hjci5pby93YWxsYXJvb2xhYnMvZG9jLXNhbXBsZXMvcGlwZWxpbmVzL2VkZ2UtcGlwZWxpbmU6ZjM4OGMxMDktOGQ1Ny00ZWQyLTk4MDYtYWExM2Y4NTQ1NzZiCmV4cG9ydCBXT1JLU1BBQ0VfSUQ9NQ=='}

base64 -D

ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT14Z2ItY2NmcmF1ZC1lZGdlLXRlc3QKZXhwb3J0IEpPSU5fVE9LRU49MzE0OGFkYTUtMjg1YS00ZmNhLWIzYjgtYjUwYTQ4ZDc1MTFiCmV4cG9ydCBPUFNDRU5URVJfSE9TVD1kb2MtdGVzdC5lZGdlLndhbGxhcm9vY29tbXVuaXR5Lm5pbmphCmV4cG9ydCBQSVBFTElORV9VUkw9Z2hjci5pby93YWxsYXJvb2xhYnMvZG9jLXNhbXBsZXMvcGlwZWxpbmVzL2VkZ2UtcGlwZWxpbmU6ZjM4OGMxMDktOGQ1Ny00ZWQyLTk4MDYtYWExM2Y4NTQ1NzZiCmV4cG9ydCBXT1JLU1BBQ0VfSUQ9NQ==^D

export BUNDLE_VERSION=1

export EDGE_NAME=xgb-ccfraud-edge-test

export JOIN_TOKEN=3148ada5-285a-4fca-b3b8-b50a48d7511b

export OPSCENTER_HOST=doc-test.edge.wallaroocommunity.ninja

export PIPELINE_URL=ghcr.io/wallaroolabs/doc-samples/pipelines/edge-pipeline:f388c109-8d57-4ed2-9806-aa13f854576b

export WORKSPACE_ID=5
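The same decoding can be done in Python. The following is a minimal sketch, assuming the bundle is a base64-encoded series of `export KEY=VALUE` lines as in the decoded sample above. The helper name `decode_edge_bundle` and the sample values are hypothetical and are not part of the Wallaroo SDK.

```python
import base64


def decode_edge_bundle(bundle: str) -> dict:
    """Decode a base64 edge bundle of `export KEY=VALUE` lines into a dict."""
    text = base64.b64decode(bundle).decode("utf-8")
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("export "):
            continue  # skip blank or unexpected lines
        key, _, value = line[len("export "):].partition("=")
        settings[key] = value
    return settings


# Hypothetical sample values, for illustration only.
sample_text = "\n".join([
    "export BUNDLE_VERSION=1",
    "export EDGE_NAME=sample-edge",
    "export JOIN_TOKEN=00000000-0000-0000-0000-000000000000",
    "export OPSCENTER_HOST=ops.example.com",
    "export PIPELINE_URL=registry.example.com/pipelines/sample:abc",
    "export WORKSPACE_ID=5",
])
sample_bundle = base64.b64encode(sample_text.encode("utf-8")).decode("ascii")

decoded = decode_edge_bundle(sample_bundle)
print(decoded["EDGE_NAME"])
```

Because the bundle carries the JOIN_TOKEN, a decoded bundle should be handled with the same care as any other credential.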

The JOIN_TOKEN is a one-time access token. Once used, a JOIN_TOKEN expires. The authentication session data is stored in persistent volumes. Persistent volumes must be specified for docker and docker compose based deployments of Wallaroo pipelines; helm based deployments automatically provide persistent volumes to store authentication credentials.

The JOIN_TOKEN has the following time to live (TTL) parameters.

- Once created, the JOIN_TOKEN is valid for 24 hours. After it expires, the edge will not be allowed to contact the OpsCenter for the first time and a new edge bundle will have to be created.

- After an edge joins Wallaroo Ops for the first time with persistent storage, the edge must contact the Wallaroo Ops instance at least once every 7 days.

- If this period is exceeded, the authentication credentials will expire and a new edge bundle must be created with a new and valid JOIN_TOKEN.
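The two TTL rules can be expressed as a small check. This is a sketch only, assuming the caller tracks the timestamps itself. The function name `needs_new_bundle` and its parameters are hypothetical; Wallaroo enforces these limits on the server side.

```python
from datetime import datetime, timedelta, timezone

JOIN_TOKEN_TTL = timedelta(hours=24)  # the token is valid for 24 hours
CONTACT_WINDOW = timedelta(days=7)    # the edge must check in every 7 days


def needs_new_bundle(created_at, first_joined_at, last_contact_at, now):
    """Return True when a new edge bundle must be created."""
    if first_joined_at is None:
        # Never joined: the one-time token must still be in its 24-hour window.
        return now - created_at > JOIN_TOKEN_TTL
    # Already joined: credentials lapse if the edge has been silent too long.
    last_seen = last_contact_at or first_joined_at
    return now - last_seen > CONTACT_WINDOW


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
print(needs_new_bundle(now - timedelta(hours=25), None, None, now))
```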

Wallaroo edges require unique names. To create a new edge bundle with the same name:

- Use Remove Edge to remove the edge by name.

- Use Add Edge to add the edge with the same name. A new EDGE_BUNDLE is generated with a new JOIN_TOKEN.
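The remove-then-add sequence might be scripted as follows. This is a hedged sketch: the remove endpoint is the one used in the remove request earlier in this guide, while the add endpoint path and its body fields (`pipeline_publish_id`, `location_json`) are assumptions here and should be checked against the Add Edge request. The helper `recreate_edge` and the injected `post` callable are hypothetical.

```python
from typing import Any, Callable, Dict

# Signature of the injected sender: (url, json_body) -> parsed JSON response.
PostFn = Callable[[str, Dict[str, Any]], Any]


def recreate_edge(api_endpoint: str, post: PostFn, edge_name: str,
                  publish_id: int) -> Any:
    """Remove an edge by name, then add it again to get a fresh bundle."""
    base = f"{api_endpoint}/v1/api/pipelines"
    # Step 1: remove the existing edge by name.
    post(f"{base}/remove_edge_from_publish", {"name": edge_name})
    # Step 2: add the edge again under the same name.
    # ASSUMPTION: endpoint path and body fields mirror the Add Edge request.
    return post(
        f"{base}/add_edge_to_publish",
        {"name": edge_name, "pipeline_publish_id": publish_id,
         "location_json": {}},
    )


# Example wiring with requests (not executed here):
# post = lambda url, body: requests.post(url, headers=headers, json=body).json()
```

Passing the sender in as a parameter keeps the sequence easy to dry-run without contacting a Wallaroo Ops instance.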

Pipeline Edge Deployment

Once a pipeline is published to the Edge Registry service, it can be deployed in environments such as Docker, Kubernetes, or similar container running services by a DevOps engineer. Before starting, verify the pipeline is published as per the Edge and Multi-cloud Pipeline Publish guide.

Docker Deployment

Before starting, verify that the Docker environment is able to connect to the artifact registry service.

For more details, check with the documentation on the artifact registry service. The following are provided for the three major cloud services:

- Set up authentication for Docker

- Authenticate with an Azure container registry

- Authenticating Amazon ECR Repositories for Docker CLI with Credential Helper

For the deployment, the engine URL is specified with the following environmental variables:

- DEBUG (true|false): Whether to include debug output.

- OCI_REGISTRY: The URL of the registry service.

- CONFIG_CPUS: The number of CPUs to use. This applies to the inference engine only.

The following options apply to the inference pipeline and the models assigned as pipeline steps.

- gpus: Whether to allocate available GPUs to the deployment. If no GPUs are to be allocated, omit this option. For more details on how to specify GPU resources based on the edge hardware configuration, see Docker Engine: Containers: Access an NVIDIA GPU. For example, to allocate GPUs to the inference pipeline: --gpus all

- cpus: The fractional number of CPUs to apply. For example: --cpus=1.25 or --cpus=2.0

- memory: The amount of RAM to allocate in unit values of k (kilobyte), m (megabyte), or g (gigabyte). For example: --memory=1536m or --memory=512k

- PIPELINE_URL: The published pipeline URL.

- EDGE_BUNDLE (Optional): The base64 encoded edge token and other values to connect to the Wallaroo Ops instance. This is used for edge management and transmitting inference results for observability. IMPORTANT NOTE: The token for EDGE_BUNDLE is valid for one deployment. Best practice is to use the PERSISTENT_VOLUME_DIR to store the authentication credentials between deployments. For subsequent deployments, generate a new edge location with its own EDGE_BUNDLE.

- LOCAL_INFERENCE_STORAGE (Optional): Sets the amount of storage to allocate for the edge deployment's inference log storage capacity. This is in the format {size as number}{unit value}. The values are similar to the Kubernetes memory resource units format. If used, must be used with PLATEAU_PAGE_SIZE. The accepted unit values are Ki (for KiloBytes), Mi (for MegaBytes), Gi (for GigaBytes), and Ti (for TeraBytes).

- PLATEAU_PAGE_SIZE (Optional): How many inference log rows to upload from the edge deployment at a time. Must be used with LOCAL_INFERENCE_STORAGE.

The following variables must be set by the user.

- OCI_USERNAME: The edge registry username.

- OCI_PASSWORD: The edge registry password or token.

- EDGE_PORT: The external port used to connect to the edge endpoints.

- PERSISTENT_VOLUME_DIR (Only applies to edge deployments with edge locations): The location for the persistent volume used by the edge location to store session information, logs, etc.
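The LOCAL_INFERENCE_STORAGE format and its pairing with PLATEAU_PAGE_SIZE can be checked before launching a container. The following is a sketch under stated assumptions: the units are treated as binary multiples (Ki = 1024 bytes), as in Kubernetes memory units, and the helper names `parse_storage` and `check_storage_settings` are hypothetical.

```python
import re

# ASSUMPTION: binary multipliers, following Kubernetes memory resource units.
UNIT_BYTES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4}


def parse_storage(value: str) -> int:
    """Convert a value such as '2Gi' into a byte count."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(Ki|Mi|Gi|Ti)", value)
    if match is None:
        raise ValueError(f"expected {{number}}{{Ki|Mi|Gi|Ti}}, got {value!r}")
    number, unit = match.groups()
    return int(float(number) * UNIT_BYTES[unit])


def check_storage_settings(env: dict) -> None:
    """Raise if only one of the two paired settings is present."""
    has_storage = "LOCAL_INFERENCE_STORAGE" in env
    has_page = "PLATEAU_PAGE_SIZE" in env
    if has_storage != has_page:
        raise ValueError(
            "LOCAL_INFERENCE_STORAGE and PLATEAU_PAGE_SIZE must be set together"
        )
    if has_storage:
        parse_storage(env["LOCAL_INFERENCE_STORAGE"])  # validates the format


print(parse_storage("2Gi"))
```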

The following example shows deploying models in an edge environment with the following resources allocated:

- Wallaroo inference engine:

- cpus: 1

- Inference Pipeline:

- cpus: 1.25

- memory: 1536m

- gpus: true

docker run \

-p $EDGE_PORT:8080 \

-e OCI_USERNAME=$OCI_USERNAME \

-e OCI_PASSWORD=$OCI_PASSWORD \

-e PIPELINE_URL=sample-pipeline-url \

-e CONFIG_CPUS=1.0 --gpus all --cpus=1.25 --memory=1536m \

sample-engine-url
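The docker run example above can also be assembled programmatically, which helps when the same deployment is repeated across edge hosts. This is a minimal sketch: the function `docker_run_args` is hypothetical, and it only builds the argument list from the options described in this section rather than running anything.

```python
import shlex


def docker_run_args(engine_url, pipeline_url, edge_port, oci_username,
                    oci_password, config_cpus=1.0, cpus=None, memory=None,
                    gpus=False):
    """Build the argument list for a docker run like the example above."""
    args = ["docker", "run", "-p", f"{edge_port}:8080",
            "-e", f"OCI_USERNAME={oci_username}",
            "-e", f"OCI_PASSWORD={oci_password}",
            "-e", f"PIPELINE_URL={pipeline_url}",
            "-e", f"CONFIG_CPUS={config_cpus}"]
    if gpus:
        args += ["--gpus", "all"]
    if cpus is not None:
        args.append(f"--cpus={cpus}")
    if memory is not None:
        args.append(f"--memory={memory}")
    args.append(engine_url)  # the engine image comes last
    return args


args = docker_run_args("sample-engine-url", "sample-pipeline-url", 8080,
                       "user", "secret", cpus=1.25, memory="1536m", gpus=True)
print(shlex.join(args))
```

The returned list can be passed to `subprocess.run(args)`; keeping it as a list avoids shell quoting problems with passwords or tokens.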

Docker Deployment Example

Using our sample environment, here’s a sample deployment using Docker with a computer vision ML model, the same used in the Wallaroo Use Case Tutorials Computer Vision: Retail tutorials.

- Log in through docker to confirm access to the registry service. First, docker login. For example, logging into the artifact registry with the token stored in the variable tok:

echo "$tok" | docker login -u _json_key_base64 --password-stdin https://sample-registry.com

- Then deploy the Wallaroo published pipeline with an edge added to the pipeline publish through docker run.

IMPORTANT NOTE: Edge deployments with Edge Observability enabled with the EDGE_BUNDLE option include an authentication token that only authenticates once. To store the token long term, include the persistent volume flag -v {path to storage} setting.

Deployment with EDGE_BUNDLE for observability.

docker run -p 8080:8080 \
    -v ./data:/persist \
    -e DEBUG=true \
    -e OCI_REGISTRY=$REGISTRYURL \
    -e EDGE_BUNDLE=ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT1lZGdlLWNjZnJhdWQtb2JzZXJ2YWJpbGl0eXlhaWcKZXhwb3J0IEpPSU5fVE9LRU49MjZmYzFjYjgtMjUxMi00YmU3LTk0ZGUtNjQ2NGI1MGQ2MzhiCmV4cG9ydCBPUFNDRU5URVJfSE9TVD1kb2MtdGVzdC5lZGdlLndhbGxhcm9vY29tbXVuaXR5Lm5pbmphCmV4cG9ydCBQSVBFTElORV9VUkw9Z2hjci5pby93YWxsYXJvb2xhYnMvZG9jLXNhbXBsZXMvcGlwZWxpbmVzL2VkZ2Utb2JzZXJ2YWJpbGl0eS1waXBlbGluZTozYjQ5ZmJhOC05NGQ4LTRmY2EtYWVjYy1jNzUyNTdmZDE2YzYKZXhwb3J0IFdPUktTUEFDRV9JRD03 \
    -e CONFIG_CPUS=1 \
    -e OCI_USERNAME=$REGISTRYUSERNAME \
    -e OCI_PASSWORD=$REGISTRYPASSWORD \
    -e PIPELINE_URL=ghcr.io/wallaroolabs/doc-samples/pipelines/edge-observability-pipeline:3b49fba8-94d8-4fca-aecc-c75257fd16c6 \
    ghcr.io/wallaroolabs/doc-samples/engines/proxy/wallaroo/ghcr.io/wallaroolabs/standalone-mini:v2023.4.0-main-4079

Connection to the Wallaroo Ops instance from an edge deployment with EDGE_BUNDLE is verified with the log entry Node attestation was successful.

Deployment without observability.

docker run -p 8080:8080 \
    -e DEBUG=true \
    -e OCI_REGISTRY=$REGISTRYURL \
    -e CONFIG_CPUS=1 \
    -e OCI_USERNAME=$REGISTRYUSERNAME \
    -e OCI_PASSWORD=$REGISTRYPASSWORD \
    -e PIPELINE_URL=ghcr.io/wallaroolabs/doc-samples/pipelines/edge-observability-pipeline:3b49fba8-94d8-4fca-aecc-c75257fd16c6 \
    ghcr.io/wallaroolabs/doc-samples/engines/proxy/wallaroo/ghcr.io/wallaroolabs/standalo

Docker Compose Deployment

For users who prefer to use docker compose, the following sample compose.yaml file is used to launch the Wallaroo Edge pipeline. This is the same used in the Wallaroo Use Case Tutorials Computer Vision: Retail tutorials. The volumes tag is used to preserve the login session from the one-time token generated as part of the EDGE_BUNDLE.

EDGE_BUNDLE is only required when adding an edge to a Wallaroo publish for observability. The following is deployed without observability.

services:
  engine:
    image: {Your Engine URL}
    ports:
      - 8080:8080
    environment:
      PIPELINE_URL: {Your Pipeline URL}
      OCI_REGISTRY: {Your Edge Registry URL}
      OCI_USERNAME: {Your Registry Username}
      OCI_PASSWORD: {Your Token or Password}
      CONFIG_CPUS: 4

The procedure is:

- Log in through docker to confirm access to the registry service. First, docker login. For example, logging into the artifact registry sample-registry.com with the token stored in the variable tok:

echo "$tok" | docker login -u _json_key_base64 --password-stdin https://sample-registry.com

- Set up the compose.yaml file.

IMPORTANT NOTE: Edge deployments with Edge Observability enabled with the EDGE_BUNDLE option include an authentication token that only authenticates once. To store the token long term, include the persistent volume with the volumes: tag.

services:
  engine:
    image: sample-registry.com/engine:v2023.3.0-main-3707
    ports:
      - 8080:8080
    volumes:
      - ./data:/persist
    environment:
      PIPELINE_URL: sample-registry.com/pipelines/edge-cv-retail:bf70eaf7-8c11-4b46-b751-916a43b1a555
      EDGE_BUNDLE: ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IEVER0VfTkFNRT1lZGdlLWNjZnJhdWQtb2JzZXJ2YWJpbGl0eXlhaWcKZXhwb3J0IEpPSU5fVE9LRU49MjZmYzFjYjgtMjUxMi00YmU3LTk0ZGUtNjQ2NGI1MGQ2MzhiCmV4cG9ydCBPUFNDRU5URVJfSE9TVD1kb2MtdGVzdC5lZGdlLndhbGxhcm9vY29tbXVuaXR5Lm5pbmphCmV4cG9ydCBQSVBFTElORV9VUkw9Z2hjci5pby93YWxsYXJvb2xhYnMvZG9jLXNhbXBsZXMvcGlwZWxpbmVzL2VkZ2Utb2JzZXJ2YWJpbGl0eS1waXBlbGluZTozYjQ5ZmJhOC05NGQ4LTRmY2EtYWVjYy1jNzUyNTdmZDE2YzYKZXhwb3J0IFdPUktTUEFDRV9JRD03
      OCI_REGISTRY: sample-registry.com
      OCI_USERNAME: _json_key_base64
      OCI_PASSWORD: abc123
      CONFIG_CPUS: 4

- Then deploy with docker compose up.
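Because the EDGE_BUNDLE token authenticates only once, a compose file that sets EDGE_BUNDLE without a volumes entry will lose its session when the container is recreated. The following sketch flags that case. It assumes the compose file has already been parsed into a Python dict (for example with a YAML parser), and the function name `missing_persistence` is hypothetical.

```python
def missing_persistence(compose: dict) -> list:
    """Return names of services that set EDGE_BUNDLE but mount no volume."""
    flagged = []
    for name, service in compose.get("services", {}).items():
        env = service.get("environment") or {}
        # Compose accepts environment as a mapping or a list of KEY=VALUE.
        if isinstance(env, list):
            keys = {item.split("=", 1)[0] for item in env}
        else:
            keys = set(env)
        if "EDGE_BUNDLE" in keys and not service.get("volumes"):
            flagged.append(name)
    return flagged


# Hypothetical parsed compose file with the volume left out by mistake.
compose = {
    "services": {
        "engine": {
            "image": "sample-registry.com/engine",
            "environment": {"EDGE_BUNDLE": "abc", "CONFIG_CPUS": 4},
        }
    }
}
print(missing_persistence(compose))
```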

Docker Compose Deployment Example

The deployment and undeployment is then just a simple docker compose up and docker compose down. The following shows an example of deploying the Wallaroo edge pipeline using docker compose.

docker compose up

[+] Running 1/1

✔ Container cv_data-engine-1 Recreated 0.5s

Attaching to cv_data-engine-1

cv_data-engine-1 | Wallaroo Engine - Standalone mode

cv_data-engine-1 | Login Succeeded

cv_data-engine-1 | Fetching manifest and config for pipeline: sample-registry.com/pipelines/edge-cv-retail:bf70eaf7-8c11-4b46-b751-916a43b1a555

cv_data-engine-1 | Fetching model layers

cv_data-engine-1 | digest: sha256:c6c8869645962e7711132a7e17aced2ac0f60dcdc2c7faa79b2de73847a87984

cv_data-engine-1 | filename: c6c8869645962e7711132a7e17aced2ac0f60dcdc2c7faa79b2de73847a87984

cv_data-engine-1 | name: resnet-50

cv_data-engine-1 | type: model

cv_data-engine-1 | runtime: onnx

cv_data-engine-1 | version: 693e19b5-0dc7-4afb-9922-e3f7feefe66d

cv_data-engine-1 |

cv_data-engine-1 | Fetched

cv_data-engine-1 | Starting engine

cv_data-engine-1 | Looking for preexisting `yaml` files in //modelconfigs

cv_data-engine-1 | Looking for preexisting `yaml` files in //pipelines

Podman Deployment

Wallaroo edge deployments can be made using Podman.

For the deployment, the engine URL is specified with the following environmental variables:

- DEBUG (true|false): Whether to include debug output.

- OCI_REGISTRY: The URL of the registry service.

- CONFIG_CPUS: The number of CPUs to use. This applies to the inference engine only.

The following options apply to the inference pipeline and the models assigned as pipeline steps.

- gpus: Whether to allocate available GPUs to the deployment. If no GPUs are to be allocated, omit this option. For more details on how to specify GPU resources based on the edge hardware configuration, see Docker Engine: Containers: Access an NVIDIA GPU. For example, to allocate GPUs to the inference pipeline: --gpus all

- cpus: The fractional number of CPUs to apply. For example: --cpus=1.25 or --cpus=2.0

- memory: The amount of RAM to allocate in unit values of k (kilobyte), m (megabyte), or g (gigabyte). For example: --memory=1536m or --memory=512k

- PIPELINE_URL: The published pipeline URL.

- EDGE_BUNDLE (Optional): The base64 encoded edge token and other values to connect to the Wallaroo Ops instance. This is used for edge management and transmitting inference results for observability. IMPORTANT NOTE: The token for EDGE_BUNDLE is valid for one deployment. For subsequent deployments, generate a new edge location with its own EDGE_BUNDLE.

- LOCAL_INFERENCE_STORAGE (Optional): Sets the amount of storage to allocate for the edge deployment's inference log storage capacity. This is in the format {size as number}{unit value}. The values are similar to the Kubernetes memory resource units format. If used, must be used with PLATEAU_PAGE_SIZE. The accepted unit values are Ki (for KiloBytes), Mi (for MegaBytes), Gi (for GigaBytes), and Ti (for TeraBytes).

- PLATEAU_PAGE_SIZE (Optional): How many inference log rows to upload from the edge deployment at a time. Must be used with LOCAL_INFERENCE_STORAGE.

The following variables must be set by the user.

- OCI_USERNAME: The edge registry username.

- OCI_PASSWORD: The edge registry password or token.

- EDGE_PORT: The external port used to connect to the edge endpoints.

- PERSISTENT_VOLUME_DIR (Only applies to edge deployments with edge locations): The location for the persistent volume used by the edge location to store session information, logs, etc.

Podman Deployment Example

Using our sample environment, here’s a sample deployment using Podman with a linear regression ML model, the same used in the Wallaroo Edge Observability with Wallaroo Assays tutorials.

- Best practice is to log in as the root user before running podman. For example: sudo su -

- Deploy the Wallaroo published pipeline with an edge added to the pipeline publish through podman run.

IMPORTANT NOTE: Edge deployments with Edge Observability enabled with the EDGE_BUNDLE option include an authentication token that only authenticates once. To store the token long term, include the persistent volume flag -v {path to storage} setting.

Deployment with EDGE_BUNDLE for observability. This is for edge deployments with specific edge locations defined for observability. For more details, see Edge Observability.

podman run -v $PERSISTENT_VOLUME_DIR:/persist \
    -p $EDGE_PORT:8080 \
    -e OCI_USERNAME=$OCI_USERNAME \
    -e OCI_PASSWORD=$OCI_PASSWORD \
    -e PIPELINE_URL=ghcr.io/wallaroolabs/doc-samples/pipelines/assay-demonstration-tutorial:1ff19772-f41f-42fb-b0d1-f82130bf5801 \
    -e EDGE_BUNDLE=ZXhwb3J0IEJVTkRMRV9WRVJTSU9OPTEKZXhwb3J0IENPTkZJR19DUFVTPTQKZXhwb3J0IEVER0VfTkFNRT1ob3VzZXByaWNlLWVkZ2UtZGVtb25zdHJhdGlvbi0wMgpleHBvcnQgT1BTQ0VOVEVSX0hPU1Q9ZG9jLXRlc3Qud2FsbGFyb29jb21tdW5pdHkubmluamEKZXhwb3J0IFBJUEVMSU5FX1VSTD1naGNyLmlvL3dhbGxhcm9vbGFicy9kb2Mtc2FtcGxlcy9waXBlbGluZXMvYXNzYXktZGVtb25zdHJhdGlvbi10dXRvcmlhbDoxZmYxOTc3Mi1mNDFmLTQyZmItYjBkMS1mODIxMzBiZjU4MDEKZXhwb3J0IEpPSU5fVE9LRU49YTQ0OGIyZjItNjgwYi00Y2ZiLThiMjItY2ZjNTI5MTk5ZjY5CmV4cG9ydCBPQ0lfUkVHSVNUUlk9Z2hjci5pbw== \
    -e CONFIG_CPUS=4 --cpus=4.0 --memory=3g \
    ghcr.io/wallaroolabs/doc-samples/engines/proxy/wallaroo/ghcr.io/wallaroolabs/fitzroy-mini:v2025.1.0-6250

Deployment without observability. This is for publishes that do not have a specific edge location defined.

podman run \
    -p $EDGE_PORT:8080 \
    -e OCI_USERNAME=$OCI_USERNAME \
    -e OCI_PASSWORD=$OCI_PASSWORD \
    -e PIPELINE_URL=ghcr.io/wallaroolabs/doc-samples/pipelines/assay-demonstration-tutorial:1ff19772-f41f-42fb-b0d1-f82130bf5801 \
    -e CONFIG_CPUS=4 --cpus=4.0 --memory=3g \
    ghcr.io/wallaroolabs/doc-samples/engines/proxy/wallaroo/ghcr.io/wallaroolabs/fitzroy-mini:v2025.1.0-6250

Helm Deployment

Published pipelines can be deployed through the use of helm charts.

Helm deployments take up to two steps. The first step is retrieving the required values.yaml and making updates to override the default values; the second is installing the chart with those values.

IMPORTANT NOTE: Edge deployments with Edge Observability enabled with the EDGE_BUNDLE option include an authentication token that only authenticates once. Helm chart installations automatically add a persistent volume during deployment to store the authentication session data for future deployments.

- Log in to the registry service with helm registry login. For example, if the token is stored in the variable tok:

helm registry login sample-registry.com --username _json_key_base64 --password $tok

- Pull the helm charts from the published pipeline. The two fields required are the Helm Chart URL and the Helm Chart version. This typically takes the format of:

helm pull oci://{published.helm_chart_url} --version {published.helm_chart_version}

- Extract the tgz file and copy the values.yaml values used to edit engine allocations, etc. The following are required for the deployment to run:

ociRegistry:
  registry: {your registry service}
  username: {registry username here}
  password: {registry token here}

For Wallaroo Server deployments with an edge location set, the values include edgeBundle as generated when the edge was added to the pipeline publish.

ociRegistry:
  registry: {your registry service}
  username: {registry username here}
  password: {registry token here}
edgeBundle: abcdefg

Store this into another file, such as local-values.yaml.

- Create the namespace to deploy the pipeline to. For example, the namespace wallaroo-edge-pipeline would be:

kubectl create namespace wallaroo-edge-pipeline

- Deploy the helm installation with helm install through one of the following options:

  - Specify the tgz file that was downloaded and the local values file. For example:

helm install --namespace {namespace} --values {local values file} {helm install name} {tgz path} --timeout 10m --wait --wait-for-jobs

  - Specify the expanded directory from the downloaded tgz file.

helm install --namespace {namespace} --values {local values file} {helm install name} {helm directory path} --timeout 10m --wait --wait-for-jobs

  - Specify the Pipeline Helm Chart and the Pipeline Helm Version.

helm install --namespace {namespace} --values {local values file} {helm install name} oci://{published.helm_chart_url} --version {published.helm_chart_version} --timeout 10m --wait --wait-for-jobs
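The local values file and the helm install command can be generated from a few inputs. The sketch below is illustrative only: the helpers `local_values_yaml` and `helm_install_args` are hypothetical, edgeBundle is assumed to be a top-level key (matching the helm values shown in the publish response), and values are written as JSON-style double-quoted strings so that special characters in tokens do not break the YAML.

```python
import json


def local_values_yaml(registry, username, password, edge_bundle=None):
    """Render the override values described above as YAML text."""
    lines = [
        "ociRegistry:",
        f"  registry: {json.dumps(registry)}",
        f"  username: {json.dumps(username)}",
        f"  password: {json.dumps(password)}",
    ]
    if edge_bundle is not None:
        # ASSUMPTION: edgeBundle sits at the top level of the values file.
        lines.append(f"edgeBundle: {json.dumps(edge_bundle)}")
    return "\n".join(lines) + "\n"


def helm_install_args(namespace, release, chart, values_file, version=None):
    """Build a helm install argument list matching the options above."""
    args = ["helm", "install", "--namespace", namespace,
            "--values", values_file, release, chart]
    if version is not None:
        # Only needed when installing straight from the OCI registry.
        args += ["--version", version]
    args += ["--timeout", "10m", "--wait", "--wait-for-jobs"]
    return args


print(local_values_yaml("sample-registry.com", "user", "token", "abcdefg"))
print(helm_install_args("wallaroo-edge-pipeline", "edge-demo",
                        "./edge-pipeline.tgz", "local-values.yaml"))
```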

Once deployed, the DevOps engineer will have to forward the appropriate ports to the svc/engine-svc service in the specific pipeline. For example, using kubectl port-forward to the namespace ccfraud01 that would be:

kubectl port-forward svc/engine-svc -n ccfraud01 8080 --address 0.0.0.0