Model Data Schema Definitions


Models uploaded and deployed to Wallaroo pipelines require that the input data matches the model’s data schema. Pipeline steps chained in Wallaroo pipelines accept the outputs of the previous pipeline step, which may or may not be a model. Any sequence of steps requires the previous step’s outputs to match the next step’s inputs.

This is where tools such as BYOP (Bring Your Own Predict, also known as Arbitrary Python) models and Python Models become essential: they reshape data submitted to a Wallaroo pipeline, or data passed between models deployed in a Wallaroo pipeline.

This guide demonstrates how Wallaroo handles data types for models, and how to shape data for different models deployed in Wallaroo.

Data Types

Data types for inputs and outputs to inference requests through Wallaroo are based on the Apache Arrow Data Types, with the following exceptions:

- null: Not allowed. All fields must have submitted values that match their data type. For example, if the schema expects a float value, then some value of type float such as 0.0 must be submitted and cannot be None or Null. If a schema expects a string value, then some value of type string must be submitted, etc. The exception is BYOP models, which can accept optional inputs.

- time32 and time64: Datetime data types must be converted to string.
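As a minimal sketch of these two rules, the snippet below prepares a plain Python record before submission: a missing value is replaced with a default of the expected type, and a time value is converted to a string. The field names, the `DEFAULTS` mapping, and the `prepare_row` helper are hypothetical and are not part of the Wallaroo SDK.

```python
import datetime

# Hypothetical per-field defaults used when a value is missing.
DEFAULTS = {"price": 0.0, "label": ""}

def prepare_row(row):
    """Return a copy of row with no None values and no date/time objects."""
    prepared = {}
    for name, value in row.items():
        if value is None:
            # Nulls are not allowed, so substitute a default of the expected type.
            value = DEFAULTS[name]
        elif isinstance(value, (datetime.datetime, datetime.date, datetime.time)):
            # Date and time values are submitted as strings.
            value = value.isoformat()
        prepared[name] = value
    return prepared

row = {"price": None, "label": "Bob", "opened": datetime.time(9, 30)}
print(prepare_row(row))  # {'price': 0.0, 'label': 'Bob', 'opened': '09:30:00'}
```

A field with no entry in `DEFAULTS` raises a `KeyError` here, which makes a missing value visible instead of silently passing it through.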

Data Constraints

Data submitted to Wallaroo for inference requests have the following data constraints.

- Equal rows constraint: The number of input rows and output rows must match.

- Data Type Consistency: Data types within each tensor are of the same type.

Equal Rows

Each input row of an inference request corresponds directly to one row of the inference output.

For example, the input and output tables below have five rows each, with each input row directly corresponding to an output row.

Equal Rows Input Example

| | tensor |

|---|---|

| 0 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 1 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 2 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 3 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 4 | [0.5817662108, 0.09788155100000001, 0.1546819424, 0.4754101949, -0.19788623060000002, -0.45043448540000003, 0.016654044700000002, -0.0256070551, 0.0920561602, -0.2783917153, 0.059329944100000004, -0.0196585416, -0.4225083157, -0.12175388770000001, 1.5473094894000001, 0.2391622864, 0.3553974881, -0.7685165301, -0.7000849355000001, -0.1190043285, -0.3450517133, -1.1065114108, 0.2523411195, 0.0209441826, 0.2199267436, 0.2540689265, -0.0450225094, 0.10867738980000001, 0.2547179311] |

Equal Rows Output Example

| | time | in.tensor | out.dense_1 | anomaly.count |

|---|---|---|---|---|

| 0 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 1 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 2 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 3 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 4 | 2023-11-17 20:34:17.005 | [0.5817662108, 0.097881551, 0.1546819424, 0.4754101949, -0.1978862306, -0.4504344854, 0.0166540447, -0.0256070551, 0.0920561602, -0.2783917153, 0.0593299441, -0.0196585416, -0.4225083157, -0.1217538877, 1.5473094894, 0.2391622864, 0.3553974881, -0.7685165301, -0.7000849355, -0.1190043285, -0.3450517133, -1.1065114108, 0.2523411195, 0.0209441826, 0.2199267436, 0.2540689265, -0.0450225094, 0.1086773898, 0.2547179311] | [0.0010916889] | 0 |
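The equal rows constraint can be checked with a simple length comparison. The following is a small sketch, not a Wallaroo API: `rows_match` is a hypothetical helper, and the values are shortened stand-ins for the rows in the tables above.

```python
def rows_match(inputs, outputs):
    """True when the number of input rows equals the number of output rows."""
    return len(inputs) == len(outputs)

# Shortened, illustrative rows: three inputs and one output per input.
inputs = [[-1.06, 2.35], [-1.06, 2.35], [0.58, 0.10]]
outputs = [[0.993], [0.993], [0.001]]

print(rows_match(inputs, outputs))      # True
print(rows_match(inputs, outputs[:2]))  # False
```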

Data Type Consistency

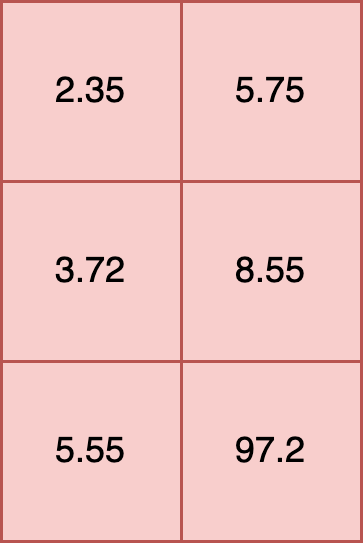

Each element must have the same internal data type. For example, the following is valid because all of the data types within each element are float32.

t = [

[2.35, 5.75],

[3.72, 8.55],

[5.55, 97.2]

]

The following is invalid, as it mixes floats and strings in each element:

t = [

[2.35, "Bob"],

[3.72, "Nancy"],

[5.55, "Wani"]

]

The following inputs are valid, as each data type is consistent within the elements.

import pandas as pd

df = pd.DataFrame({

"t": [

[2.35, 5.75, 19.2],

[5.55, 7.2, 15.7],

],

"s": [

["Bob", "Nancy", "Wani"],

["Jason", "Rita", "Phoebe"]

]

})

df

| | t | s |

|---|---|---|

| 0 | [2.35, 5.75, 19.2] | [Bob, Nancy, Wani] |

| 1 | [5.55, 7.2, 15.7] | [Jason, Rita, Phoebe] |
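One way to test a field for data type consistency before submitting it is sketched below. `is_type_consistent` is a hypothetical helper that compares Python types only, so it also treats a mix of `int` and `float` values as inconsistent; that strictness is an assumption of this sketch, not a statement about how Wallaroo validates data.

```python
def is_type_consistent(column):
    """True when every value in every element of the column has the same Python type."""
    types = {type(value) for element in column for value in element}
    return len(types) <= 1

valid_t = [[2.35, 5.75, 19.2], [5.55, 7.2, 15.7]]
valid_s = [["Bob", "Nancy", "Wani"], ["Jason", "Rita", "Phoebe"]]
invalid = [[2.35, "Bob"], [3.72, "Nancy"], [5.55, "Wani"]]

print(is_type_consistent(valid_t))  # True
print(is_type_consistent(valid_s))  # True
print(is_type_consistent(invalid))  # False
```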

Data Requirements for ONNX Models

ONNX models used for inference requests in Wallaroo have an additional requirement.

- The shape of each element within a field is the same.

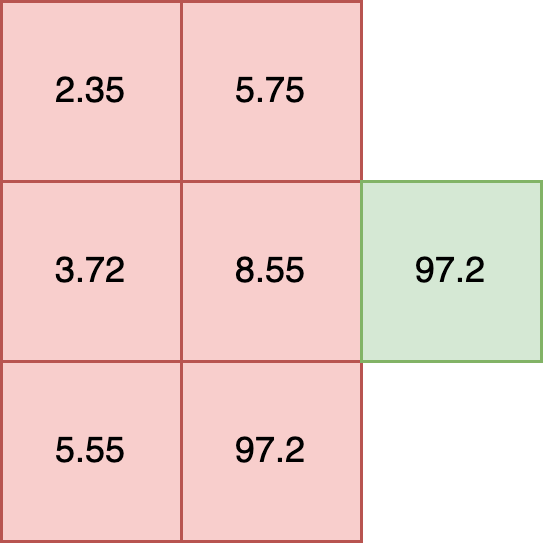

For ONNX models, all inputs must be tensors. This requires that the shape of each element is the same. For example, the following is a proper input:

t = [

[2.35, 5.75],

[3.72, 8.55],

[5.55, 97.2]

]

Another example is a tensor of shape (2, 2, 3): the outer dimension holds 2 elements, each element holds 2 rows, and each row has a shape of (3,).

t = [

[

[2.35, 5.75, 19.2],

[3.72, 8.55, 10.5]

],

[

[5.55, 7.2, 15.7],

[9.6, 8.2, 2.3]

]

]

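In this example each outer element has a shape of (2, 3), and each innermost row has a shape of (3,). Tensors with elements of different shapes, known as ragged tensors, are not supported for batch inputs. For example, the following mixes rows of shape (2,) and (3,):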

t = [

[2.35, 5.75],

[3.72, 8.55, 10.5],

[5.55, 97.2]

]

**INVALID SHAPE**

For this situation, the rows must be broken up to avoid the ragged tensors, or submitted as single row inputs. For example:

t1 = [[2.35, 5.75]]

t2 = [[3.72, 8.55, 10.5]]

t3 = [[5.55, 97.2]]
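The shape rule can be illustrated with a short pure-Python sketch. `shape_of` and `split_rows` are hypothetical helpers written for this example: the first computes the shape of a nested list and raises an error for a ragged tensor, the second wraps each row as its own single row input.

```python
def shape_of(value):
    """Return the shape of a nested list, or raise ValueError if it is ragged."""
    if not isinstance(value, list):
        return ()
    child_shapes = {shape_of(child) for child in value}
    if len(child_shapes) > 1:
        raise ValueError(f"ragged tensor: element shapes {sorted(child_shapes)}")
    inner = child_shapes.pop() if child_shapes else ()
    return (len(value),) + inner

def split_rows(rows):
    """Wrap each row in its own single-row batch."""
    return [[row] for row in rows]

regular = [[2.35, 5.75], [3.72, 8.55], [5.55, 97.2]]
ragged = [[2.35, 5.75], [3.72, 8.55, 10.5], [5.55, 97.2]]

print(shape_of(regular))  # (3, 2)

try:
    shape_of(ragged)
except ValueError as err:
    print(err)            # reports the mixed element shapes

# Each row on its own is a well-formed tensor.
print([shape_of(single) for single in split_rows(ragged)])  # [(1, 2), (1, 3), (1, 2)]
```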

Batch vs Single Row Data Requirements for ONNX models

Data is submitted to ONNX models deployed in Wallaroo as either batch data or single row input data, depending on the model configuration, which is typically set at model upload.

ONNX models with the following data input conditions must be set as single row inputs:

- The inputs do not have an outer dimension.

- The output has an outer dimension of 1.

The simple rule of thumb is that for batch inputs, the data meets the following requirements.

- Equal rows constraint: The number of input rows and output rows must match.

- Data Type Consistency: Data types within each tensor are of the same type.

- Data Shape Consistency: The shape of each element within a field is the same.

For single row inputs, the data meets the following requirements.

- Equal rows constraint: The number of input rows and output rows must match.

- Data Type Consistency: Data types within each tensor are of the same type.

Images are a common case for single row inputs: the image data does not have an outer dimension, and the shape of the data changes with the size of the image.

For example, the following images each have data type consistency when converted from an image to tensors, but the shape of each tensor differs with the size of the image.

# a 640x480 image converted to tensors

df_image, resizedImage = utils.loadImageAndConvertToDataframe('./data/images/input/example/dairy_bottles.png', width, height)

# flatten the multi-dimensional array for inferencing through a Wallaroo deployed model

df_for_inference = pd.DataFrame(wallaroo.utils.flatten_np_array_columns(df_image, 'tensor'))

# get the shape - from a 640x480 image with 3 color values per pixel this is 921600 elements

print(df_for_inference['tensor'][0].shape)

(921600,)

| | tensor |

|---|---|

| 0 | [0.9372549, 0.9529412, 0.9490196, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.9490196, 0.9490196, 0.9529412, 0.9529412, 0.9490196, 0.9607843, 0.96862745, 0.9647059, 0.96862745, 0.9647059, 0.95686275, 0.9607843, 0.9647059, 0.9647059, 0.9607843, 0.9647059, 0.972549, 0.95686275, 0.9607843, 0.91764706, 0.95686275, 0.91764706, 0.8784314, 0.89411765, 0.84313726, 0.8784314, 0.8627451, 0.8509804, 0.9254902, 0.84705883, 0.96862745, 0.89411765, 0.81960785, 0.8509804, 0.92941177, 0.8666667, 0.8784314, 0.8666667, 0.9647059, 0.9764706, 0.98039216, 0.9764706, 0.972549, 0.972549, 0.972549, 0.972549, 0.972549, 0.972549, 0.98039216, 0.89411765, 0.48235294, 0.4627451, 0.43137255, 0.27058825, 0.25882354, 0.29411766, 0.34509805, 0.36862746, 0.4117647, 0.45490196, 0.4862745, 0.5254902, 0.56078434, 0.6039216, 0.64705884, 0.6862745, 0.72156864, 0.74509805, 0.7490196, 0.7882353, 0.8666667, 0.98039216, 0.9882353, 0.96862745, 0.9647059, 0.96862745, 0.972549, 0.9647059, 0.9607843, 0.9607843, 0.9607843, 0.9607843, …] |

# a 1920x1080 image converted to tensors

df_image, resizedImage = utils.loadImageAndConvertToDataframe('./data/images/input/example/crowd_of_people.png', width, height)

# flatten the multi-dimensional array for inferencing through a Wallaroo deployed model

df_for_inference = pd.DataFrame(wallaroo.utils.flatten_np_array_columns(df_image, 'tensor'))

# get the shape - from a 1920x1080 image with 3 color values per pixel this is 6220800 elements

df_for_inference['tensor'][0].shape

(6220800,)

display(df_for_inference)

| | tensor |

|---|---|

| 0 | [0.9098039, 0.9372549, 0.9490196, 0.9529412, 0.9529412, 0.9529412, 0.9490196, 0.9490196, 0.9490196, 0.9490196, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.94509804, 0.9490196, 0.9490196, 0.9490196, 0.9490196, 0.9490196, 0.9529412, 0.9529412, 0.9529412, 0.9529412, 0.9529412, 0.9529412, 0.9529412, 0.9490196, 0.9490196, 0.9529412, 0.95686275, 0.9607843, 0.9647059, 0.96862745, 0.96862745, 0.972549, 0.96862745, 0.9647059, 0.9647059, 0.9647059, 0.9647059, 0.9647059, 0.9647059, 0.9607843, 0.9607843, 0.9607843, 0.95686275, 0.95686275, 0.95686275, 0.9607843, 0.9607843, 0.9647059, 0.9647059, 0.972549, 0.96862745, 0.9647059, 0.9607843, 0.9607843, 0.9607843, 0.9607843, 0.9607843, …] |
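The two shapes printed above follow from the image dimensions: a flattened image has width × height × channels elements. A short worked check, using a hypothetical `flattened_length` helper and assuming 3 color values per pixel as stated in the comments above:

```python
def flattened_length(width, height, channels=3):
    """Number of elements in a flattened image tensor."""
    return width * height * channels

print(flattened_length(640, 480))    # 921600
print(flattened_length(1920, 1080))  # 6220800
```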

For each input, the shape of the input field tensor depends on the size of the image, and there is no outer dimension. In these instances, where single row inputs are required because of the data schema, the ONNX model’s configuration must be set to batch_config="single".

mobilenet_model = wl.upload_model("mobilenet-demo",

'./models/mobilenet.pt.onnx',

framework=Framework.ONNX).configure(batch_config="single")

Each inference request submitted must be a single row pandas DataFrame or Apache Arrow table when the model is configured as batch_config="single".

Setting Model Data Schemas

ML models uploaded to Wallaroo, except Wallaroo Native Runtimes, require their fields and data types to be specified as an Apache Arrow pyarrow.Schema. For full details on specific ML models and their data requirements, see Wallaroo SDK Essentials Guide: Model Uploads and Registrations.

Each field is defined by its data type. For example, the following input and output schemas are used for a PyTorch single input and output model, which receives a list of float values and returns a list of float values.

import pyarrow as pa

input_schema = pa.schema([

pa.field('input', pa.list_(pa.float32(), list_size=10))

])

output_schema = pa.schema([

pa.field('output', pa.list_(pa.float32(), list_size=1))

])

Additional Examples

Other examples come from the Wallaroo Tutorials as listed below.

- Computer Vision: Yolov8n Demonstration

- Computer Vision Tutorials

- Wallaroo Edge Computer Vision Yolov8n Demonstration

- ARM Computer Vision Retail Yolov8 Demonstration

- Wallaroo Workshop: Edge Deployment: Computer Vision Yolov8n

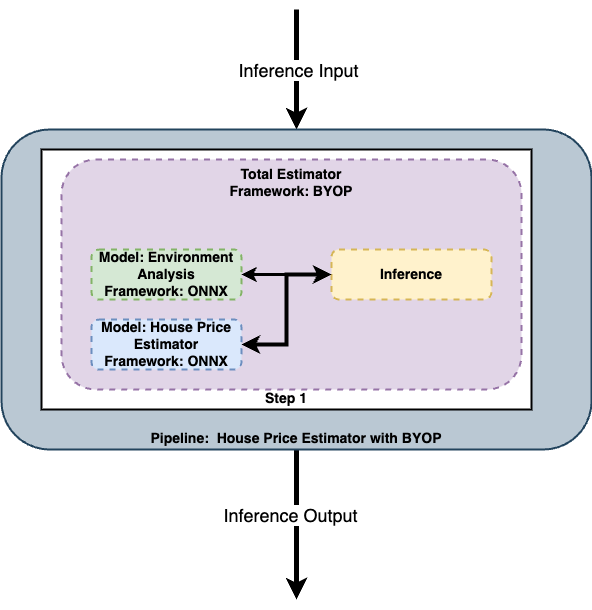

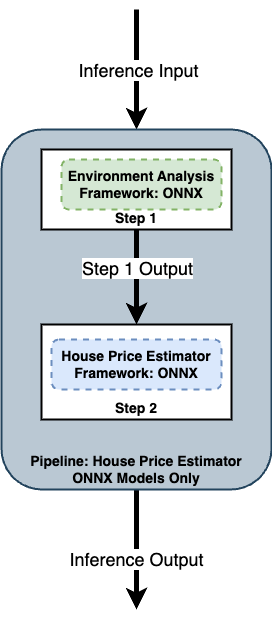

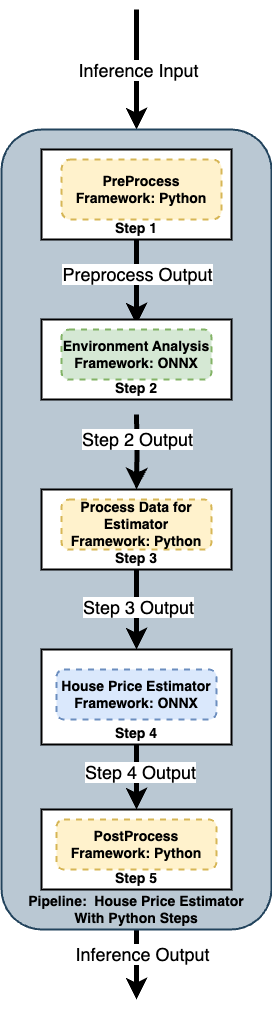

Multiple Step Pipelines

Pipelines with multiple models as pipeline steps still have the same input and output data requirements. The inference input is fed to the pipeline, which submits it to the first model. Each model’s output becomes the input for the next model, and the output of the last model is returned by the pipeline as the final inference result.

There are different ways of handling data in this scenario.

- Set each model’s inputs and outputs to match. This requires tailoring each model so that its outputs match the next model’s inputs, including the data types.

- Use Python models, where a Python script is deployed as a model to transform the data outputs from one model to match the data inputs of the next one. See Wallaroo SDK Essentials Guide: Model Uploads and Registrations: Python Models for full details and requirements.

- Use BYOP (Bring Your Own Predict) aka Arbitrary Python models, which combine models and Python scripts as one cohesive model. See Wallaroo SDK Essentials Guide: Model Uploads and Registrations: Arbitrary Python for full details and requirements.
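The idea of a reshaping step between two models can be sketched in plain Python. Everything below is hypothetical: the functions are stand-ins rather than Wallaroo's Python model or BYOP interfaces, and the field names `tensor`, `dense_1`, `score`, and `flagged` are chosen only for illustration.

```python
def first_model(rows):
    """Stand-in for a model that emits one 'dense_1' list per input row."""
    return [{"dense_1": [sum(row["tensor"]) / len(row["tensor"])]} for row in rows]

def reshape_step(rows):
    """Rename and reshape the first model's output into the second model's input."""
    return [{"score": row["dense_1"][0]} for row in rows]

def second_model(rows):
    """Stand-in for a model that expects a scalar 'score' field per row."""
    return [{"flagged": row["score"] > 0.5} for row in rows]

def run_pipeline(rows, steps):
    """Feed each step's output into the next step."""
    for step in steps:
        rows = step(rows)
    return rows

inputs = [{"tensor": [0.9, 0.8, 1.0]}, {"tensor": [0.1, 0.2, 0.0]}]
results = run_pipeline(inputs, [first_model, reshape_step, second_model])
print(results)  # [{'flagged': True}, {'flagged': False}]
```

Each step returns one row per input row, so the equal rows constraint holds across the whole chain.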