Wallaroo SDK Essentials Guide: Model Uploads and Registrations: ONNX

How to upload and use ONNX ML Models with Wallaroo

Models are uploaded or registered to a Wallaroo workspace depending on the model framework and version.

Pipeline deployment configurations provide two runtimes to run models in the Wallaroo engine:

Native Runtimes: Models that are deployed “as is” with the Wallaroo engine. In the runtime table below, these are tensorflow, onnx, and python.

Containerized Runtimes: Containerized models such as MLFlow or Arbitrary Python. These are run in the Wallaroo engine in their containerized form.

Non-Native Runtimes: Models that, when uploaded, are either converted to a native Wallaroo runtime or containerized so they can run in the Wallaroo engine. On upload, Wallaroo attempts to convert the model to a native runtime. If it cannot be converted, it is packed into a Wallaroo containerized model based on its framework type.

Pipeline configurations are dependent on whether the model is converted to the Native Runtime space, or Containerized Model Runtime space.

This model will always run in the native runtime space.

The following configuration allocates 0.25 CPU and 1 Gi RAM to the native runtime models for a pipeline.

deployment_config = (
    DeploymentConfigBuilder()
    .cpus(0.25)
    .memory('1Gi')
    .build()
)
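To make the two values above concrete, here is a minimal sketch that converts them to base units. It assumes the memory string follows Kubernetes-style binary suffixes (Ki, Mi, Gi, Ti) and that fractional CPUs can be read as millicores; the source does not state either convention, and the helper names `memory_to_bytes` and `cpus_to_millicores` are hypothetical, not part of the Wallaroo SDK.

```python
# Binary (power-of-two) suffixes. ASSUMPTION: Kubernetes-style quantities.
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def memory_to_bytes(quantity: str) -> int:
    """Convert a quantity such as '1Gi' into a byte count."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    # No recognized suffix: treat the value as a plain byte count.
    return int(quantity)


def cpus_to_millicores(cpus: float) -> int:
    """Express a fractional CPU allocation in thousandths of a core."""
    return round(cpus * 1000)


print(memory_to_bytes("1Gi"))
print(cpus_to_millicores(0.25))
```

Under these assumptions, the configuration above asks for a quarter of one core and 2**30 bytes of RAM.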

The following frameworks are supported. Frameworks fall under either Native or Containerized runtimes in the Wallaroo engine. For details on which runtime a specific model framework runs in, see the section for that framework.

| Runtime Display | Model Runtime Space | Pipeline Configuration |

|---|---|---|

| tensorflow | Native | Native Runtime Configuration Methods |

| onnx | Native | Native Runtime Configuration Methods |

| python | Native | Native Runtime Configuration Methods |

| mlflow | Containerized | Containerized Runtime Deployment |
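The table above can be read as a simple lookup from runtime display name to runtime space. The sketch below encodes only the four rows shown; the names `RUNTIME_SPACE` and `runtime_space` are hypothetical helpers for illustration, not part of the Wallaroo SDK.

```python
# Rows copied from the table above: runtime display -> model runtime space.
RUNTIME_SPACE = {
    "tensorflow": "Native",
    "onnx": "Native",
    "python": "Native",
    "mlflow": "Containerized",
}


def runtime_space(runtime_display: str) -> str:
    """Return 'Native' or 'Containerized' for a runtime display name."""
    try:
        return RUNTIME_SPACE[runtime_display.lower()]
    except KeyError:
        raise ValueError(f"unknown runtime display: {runtime_display!r}") from None


print(runtime_space("onnx"))
print(runtime_space("mlflow"))
```

Knowing the runtime space tells you which set of pipeline configuration options applies to the deployed model.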

Please note the following.

Wallaroo natively supports Open Neural Network Exchange (ONNX) models in the Wallaroo engine.

| Parameter | Description |

|---|---|

| Web Site | https://onnx.ai/ |

| Supported Libraries | See table below. |

| Framework | Framework.ONNX aka onnx |

| Runtime | Native aka onnx |

The following ONNX model versions are supported:

| Wallaroo Version | ONNX Version | ONNX IR Version | ONNX OPset Version | ONNX ML Opset Version |

|---|---|---|---|---|

| 2023.2.1 (July 2023) | 1.12.1 | 8 | 17 | 3 |

| 2023.2 (May 2023) | 1.12.1 | 8 | 17 | 3 |

| 2023.1 (March 2023) | 1.12.1 | 8 | 17 | 3 |

| 2022.4 (December 2022) | 1.12.1 | 8 | 17 | 3 |

| After April 2022 until release 2022.4 (December 2022) | 1.10.* | 7 | 15 | 2 |

| Before April 2022 | 1.6.* | 7 | 13 | 2 |

For the most recent release of Wallaroo 2023.2.1, the following native runtimes are supported:

DEFAULT_OPSET_NUMBER is 17. Using different versions or settings outside of these specifications may result in inference issues and other unexpected behavior.
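As a rough illustration, the version table above can be encoded as a lookup and compared against a model's own IR and opset numbers before upload. This sketch covers only the named releases from the table; `OnnxSupport`, `SUPPORTED`, and `version_differences` are hypothetical names. It reports any difference from the listed values, although the source does not say whether older values are also accepted, so a reported difference is a prompt to check, not proof of failure.

```python
from typing import Dict, List, NamedTuple


class OnnxSupport(NamedTuple):
    onnx_version: str
    ir_version: int
    opset: int
    ml_opset: int


# Rows copied from the table above, keyed by Wallaroo release.
SUPPORTED: Dict[str, OnnxSupport] = {
    "2023.2.1": OnnxSupport("1.12.1", 8, 17, 3),
    "2023.2": OnnxSupport("1.12.1", 8, 17, 3),
    "2023.1": OnnxSupport("1.12.1", 8, 17, 3),
    "2022.4": OnnxSupport("1.12.1", 8, 17, 3),
}


def version_differences(wallaroo_version: str, ir_version: int, opset: int) -> List[str]:
    """List how a model's IR version and opset differ from the listed values."""
    listed = SUPPORTED[wallaroo_version]
    differences = []
    if ir_version != listed.ir_version:
        differences.append(
            f"IR version {ir_version} differs from listed {listed.ir_version}"
        )
    if opset != listed.opset:
        differences.append(f"opset {opset} differs from listed {listed.opset}")
    return differences


print(version_differences("2023.2.1", ir_version=8, opset=17))
print(version_differences("2023.2.1", ir_version=7, opset=15))
```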

ONNX models always run in the native runtime space.

ONNX models deployed to Wallaroo have the following data requirements.

Inferences performed through ONNX models are assumed to be in batch format, where each input row corresponds to an output row. This is reflected in the in.{variable} fields returned for an inference. In the following example, each input row for an inference maps directly to an output row.

import pandas as pd

df = pd.read_json('./data/cc_data_1k.df.json')

display(df.head())

result = ccfraud_pipeline.infer(df.head())

display(result)

INPUT

| | tensor |

|---|---|

| 0 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 1 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 2 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 3 | [-1.0603297501, 2.3544967095000002, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192000001, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526000001, 1.9870535692, 0.7005485718000001, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] |

| 4 | [0.5817662108, 0.09788155100000001, 0.1546819424, 0.4754101949, -0.19788623060000002, -0.45043448540000003, 0.016654044700000002, -0.0256070551, 0.0920561602, -0.2783917153, 0.059329944100000004, -0.0196585416, -0.4225083157, -0.12175388770000001, 1.5473094894000001, 0.2391622864, 0.3553974881, -0.7685165301, -0.7000849355000001, -0.1190043285, -0.3450517133, -1.1065114108, 0.2523411195, 0.0209441826, 0.2199267436, 0.2540689265, -0.0450225094, 0.10867738980000001, 0.2547179311] |

OUTPUT

| | time | in.tensor | out.dense_1 | check_failures |

|---|---|---|---|---|

| 0 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 1 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 2 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 3 | 2023-11-17 20:34:17.005 | [-1.0603297501, 2.3544967095, -3.5638788326, 5.1387348926, -1.2308457019, -0.7687824608, -3.5881228109, 1.8880837663, -3.2789674274, -3.9563254554, 4.0993439118, -5.6539176395, -0.8775733373, -9.131571192, -0.6093537873, -3.7480276773, -5.0309125017, -0.8748149526, 1.9870535692, 0.7005485718, 0.9204422758, -0.1041491809, 0.3229564351, -0.7418141657, 0.0384120159, 1.0993439146, 1.2603409756, -0.1466244739, -1.4463212439] | [0.99300325] | 0 |

| 4 | 2023-11-17 20:34:17.005 | [0.5817662108, 0.097881551, 0.1546819424, 0.4754101949, -0.1978862306, -0.4504344854, 0.0166540447, -0.0256070551, 0.0920561602, -0.2783917153, 0.0593299441, -0.0196585416, -0.4225083157, -0.1217538877, 1.5473094894, 0.2391622864, 0.3553974881, -0.7685165301, -0.7000849355, -0.1190043285, -0.3450517133, -1.1065114108, 0.2523411195, 0.0209441826, 0.2199267436, 0.2540689265, -0.0450225094, 0.1086773898, 0.2547179311] | [0.0010916889] | 0 |

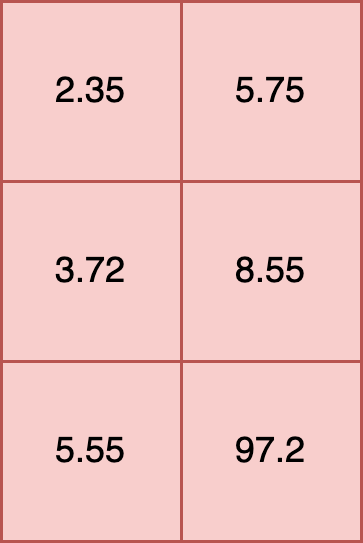

All inputs into an ONNX model must be tensors. This requires that the shape of each element is the same. For example, the following is a proper input:

t = [

[2.35, 5.75],

[3.72, 8.55],

[5.55, 97.2]

]

Another example is a tensor of shape (2, 2, 3): it has 2 elements, each element has 2 rows, and each row has a shape of (3,).

t = [
    [
        [2.35, 5.75, 19.2],
        [3.72, 8.55, 10.5]
    ],
    [
        [5.55, 7.2, 15.7],
        [9.6, 8.2, 2.3]
    ]
]

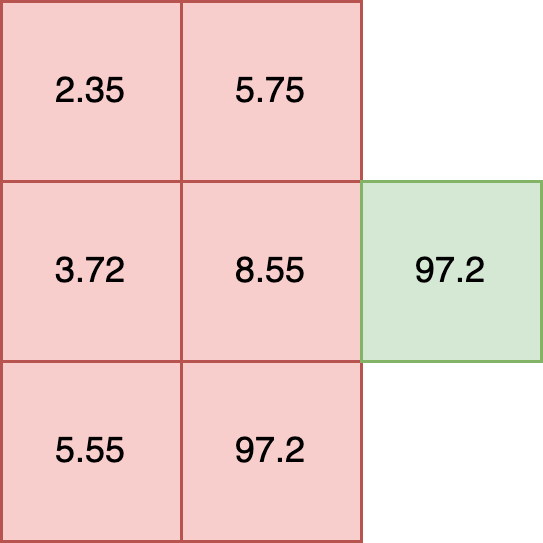

In the first example above, each element has a shape of (2,). Tensors with elements of different shapes, known as ragged tensors, are not supported. For example:

t = [

[2.35, 5.75],

[3.72, 8.55, 10.5],

[5.55, 97.2]

]

**INVALID SHAPE**

For models that require ragged tensor or other shapes, see other data formatting options such as Bring Your Own Predict models.
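The shape rule above can be checked before submitting data. The following is a minimal sketch in plain Python (no Wallaroo calls) that computes the shape of a nested list and raises an error when the elements are ragged; `tensor_shape` is a hypothetical helper name.

```python
from typing import Any, Tuple


def tensor_shape(value: Any) -> Tuple[int, ...]:
    """Return the shape of a nested list, raising ValueError if it is ragged."""
    if not isinstance(value, (list, tuple)):
        return ()  # a scalar has an empty shape
    if len(value) == 0:
        return (0,)
    # Every element at this level must have the same shape.
    child_shapes = {tensor_shape(item) for item in value}
    if len(child_shapes) != 1:
        raise ValueError(f"ragged tensor: element shapes {sorted(child_shapes)}")
    return (len(value),) + child_shapes.pop()


valid = [[2.35, 5.75], [3.72, 8.55], [5.55, 97.2]]
ragged = [[2.35, 5.75], [3.72, 8.55, 10.5], [5.55, 97.2]]

print(tensor_shape(valid))
try:
    tensor_shape(ragged)
except ValueError as error:
    print(error)
```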

All inputs into an ONNX model must have the same internal data type. For example, the following is valid because all of the data types within each element are float32.

t = [

[2.35, 5.75],

[3.72, 8.55],

[5.55, 97.2]

]

The following is invalid, as it mixes floats and strings in each element:

t = [

[2.35, "Bob"],

[3.72, "Nancy"],

[5.55, "Wani"]

]

The following inputs are valid, as each data type is consistent within the elements.

df = pd.DataFrame({

"t": [

[2.35, 5.75, 19.2],

[5.55, 7.2, 15.7],

],

"s": [

["Bob", "Nancy", "Wani"],

["Jason", "Rita", "Phoebe"]

]

})

df

| | t | s |

|---|---|---|

| 0 | [2.35, 5.75, 19.2] | [Bob, Nancy, Wani] |

| 1 | [5.55, 7.2, 15.7] | [Jason, Rita, Phoebe] |
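The data type rule can be sketched in the same spirit: collect the Python types found at the leaves of each input and confirm there is only one. This is an illustration in plain Python, with hypothetical helper names `leaf_types` and `has_single_type`. It is stricter than a real tensor conversion might be, since it treats int and float as different types.

```python
from typing import Any, Set


def leaf_types(value: Any) -> Set[str]:
    """Collect the type names of every scalar inside a nested list."""
    if isinstance(value, (list, tuple)):
        found: Set[str] = set()
        for item in value:
            found |= leaf_types(item)
        return found
    return {type(value).__name__}


def has_single_type(column: Any) -> bool:
    """True when every scalar in the column shares one Python type."""
    return len(leaf_types(column)) <= 1


floats_only = [[2.35, 5.75], [3.72, 8.55], [5.55, 97.2]]
mixed = [[2.35, "Bob"], [3.72, "Nancy"], [5.55, "Wani"]]

print(has_single_type(floats_only))
print(has_single_type(mixed))
print(sorted(leaf_types(mixed)))
```

Applied per column, the two-column DataFrame above passes because `t` holds only floats and `s` holds only strings.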

| Parameter | Description |

|---|---|

| Web Site | https://www.tensorflow.org/ |

| Supported Libraries | tensorflow==2.9.1 |

| Framework | Framework.TENSORFLOW aka tensorflow |

| Runtime | Native aka tensorflow |

| Supported File Types | SavedModel format as .zip file |

These requirements are **not** for TensorFlow Keras models, only for non-Keras TensorFlow models in the SavedModel format. For TensorFlow Keras deployment in Wallaroo, see the TensorFlow Keras requirements.

TensorFlow models are uploaded as a .zip file of the SavedModel format. For example, the Aloha sample TensorFlow model is stored in the directory alohacnnlstm:

├── saved_model.pb
└── variables
    ├── variables.data-00000-of-00002
    ├── variables.data-00001-of-00002
    └── variables.index

This is compressed into the .zip file alohacnnlstm.zip with the following command:

zip -r alohacnnlstm.zip alohacnnlstm/
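Where the `zip` command is not available, the same archive layout can be produced from Python's standard library. The sketch below is one possible equivalent, not a Wallaroo requirement; `zip_saved_model` is a hypothetical helper name. It keeps the model directory as the top-level entry inside the archive, as `zip -r` does.

```python
import shutil
from pathlib import Path


def zip_saved_model(model_dir: str) -> str:
    """Create <model_dir>.zip beside the directory and return the archive path."""
    path = Path(model_dir).resolve()
    return shutil.make_archive(
        base_name=str(path),         # archive path without the .zip extension
        format="zip",
        root_dir=str(path.parent),   # archive member paths are relative to this
        base_dir=path.name,          # only this directory is archived
    )


# Usage, assuming the directory from the example above exists:
# archive_path = zip_saved_model("alohacnnlstm")
```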

ML models that meet the Tensorflow and SavedModel format will run as Wallaroo Native runtimes by default.

See the SavedModel guide for full details.

| Parameter | Description |

|---|---|

| Web Site | https://www.python.org/ |

| Supported Libraries | python==3.8 |

| Framework | Framework.PYTHON aka python |

| Runtime | Native aka python |

Python models uploaded to Wallaroo are executed as a native runtime.

Note that Python models, also known as “Python steps”, are standalone Python scripts that use the Python libraries natively supported by the Wallaroo platform. These are used for either simple model deployment (such as ARIMA Statsmodels) or data formatting, such as postprocessing steps. A Wallaroo Python model is composed of one Python script that matches the Wallaroo requirements.

This contrasts with Arbitrary Python models, also known as Bring Your Own Predict (BYOP), which allow for custom model deployments with supporting scripts and artifacts. These are used with pre-trained models (PyTorch, TensorFlow, etc.) along with whatever supporting artifacts they require. Supporting artifacts can include other Python modules, model files, etc. These are zipped with all scripts, artifacts, and a requirements.txt file that indicates which other Python libraries need to be imported that are outside of the typical Wallaroo platform.

Python models uploaded to Wallaroo are Python scripts that must include the wallaroo_json method as the entry point for the Wallaroo engine to use it as a Pipeline step.

This method receives the results of the previous Pipeline step, and its return value will be used in the next Pipeline step.

If the Python model is the first step in the pipeline, then it will be receiving the inference request data (for example: a preprocessing step). If it is the last step in the pipeline, then it will be the data returned from the inference request.
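The step chaining described above amounts to function composition: each step receives the previous step's return value. The sketch below illustrates that idea in plain Python with stand-in steps. It is not how the Wallaroo engine is implemented, and `run_steps` and the three step functions are hypothetical.

```python
from typing import Any, Callable, Sequence


def run_steps(steps: Sequence[Callable[[Any], Any]], request_data: Any) -> Any:
    """Feed the request to the first step, then pass each result to the next step."""
    data = request_data
    for step in steps:
        data = step(data)
    return data  # the last step's return value is the inference result


# Stand-in steps for illustration only.
def preprocess(data):
    return [value / 10 for value in data]


def model(data):
    return [value * 2 for value in data]


def postprocess(data):
    return {"output": max(data)}


print(run_steps([preprocess, model, postprocess], [10, 20, 30]))
```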

In the example below, the Python model is used as a postprocessing step for another ML model. The Python model expects to receive data from an ML model whose output is a DataFrame with the column dense_2. It then extracts the values of that column as a list, selects the first element, and returns that element as the value of the column output.

import pandas as pd

def wallaroo_json(data: pd.DataFrame):
    # Log the data received from the previous pipeline step.
    print(data)
    # Take the first value of the first dense_2 row and return it as the output column.
    return [{"output": [data["dense_2"].to_list()[0][0]]}]

In line with other Wallaroo inference results, the outputs of a Python step that returns a pandas DataFrame or Arrow Table are listed in the out. metadata, with all inference outputs listed as out.{variable 1}, out.{variable 2}, etc. In the example above, the output field appears as the out.output field in the Wallaroo inference result.

| | time | in.tensor | out.output | check_failures |

|---|---|---|---|---|

| 0 | 2023-06-20 20:23:28.395 | [0.6878518042, 0.1760734021, -0.869514083, 0.3.. | [12.886651039123535] | 0 |
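The naming pattern in the result table can be summarized in a few lines: input fields gain an `in.` prefix, output fields gain an `out.` prefix, and `time` and `check_failures` bracket them. The helper below is a hypothetical illustration of that pattern based only on the tables shown in this guide, not an SDK function.

```python
from typing import List, Sequence


def result_columns(input_fields: Sequence[str], output_fields: Sequence[str]) -> List[str]:
    """Predict the column names of an inference result from the field names."""
    return (
        ["time"]
        + [f"in.{name}" for name in input_fields]
        + [f"out.{name}" for name in output_fields]
        + ["check_failures"]
    )


print(result_columns(["tensor"], ["output"]))
```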

| Parameter | Description |

|---|---|

| Web Site | https://huggingface.co/models |

| Supported Libraries | |

| Frameworks | The following Hugging Face pipelines are supported by Wallaroo. |

| Runtime | Containerized aka tensorflow / mlflow |

Input and output schemas for each Hugging Face pipeline are defined below. Note that adding additional inputs not specified below will raise errors, except for the following:

Framework.HUGGING-FACE-IMAGE-TO-TEXT

Framework.HUGGING-FACE-TEXT-CLASSIFICATION

Framework.HUGGING-FACE-SUMMARIZATION

Framework.HUGGING-FACE-TRANSLATION

Additional inputs added to these Hugging Face pipelines will be added as key/value pair arguments to the model’s generate method. If the argument is not required, then the model will default to the values coded in the original Hugging Face model’s source code.

See the Hugging Face Pipeline documentation for more details on each pipeline and framework.
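The forwarding behavior described above can be pictured as splitting an input record into the fields a pipeline requires and the extra fields that become keyword arguments. The sketch below only illustrates that idea. It is not Wallaroo's implementation, and `split_inputs` and the field names in the usage example are assumptions for demonstration.

```python
from typing import Any, Dict, Set, Tuple


def split_inputs(
    record: Dict[str, Any], required: Set[str]
) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Separate required pipeline inputs from extra key/value pairs."""
    missing = required - set(record)
    if missing:
        raise KeyError(f"missing required inputs: {sorted(missing)}")
    pipeline_inputs = {key: value for key, value in record.items() if key in required}
    # Everything else would be forwarded as keyword arguments.
    extra_kwargs = {key: value for key, value in record.items() if key not in required}
    return pipeline_inputs, extra_kwargs


inputs, extras = split_inputs(
    {"inputs": "A long article to summarize.", "max_new_tokens": 20},
    required={"inputs"},
)
print(inputs)
print(extras)
```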

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-FEATURE-EXTRACTION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string())

])

output_schema = pa.schema([

pa.field('output', pa.list_(

pa.list_(

pa.float64(),

list_size=128

),

))

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-IMAGE-CLASSIFICATION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('top_k', pa.int64()),

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64(), list_size=2)),

pa.field('label', pa.list_(pa.string(), list_size=2)),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-IMAGE-SEGMENTATION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('threshold', pa.float64()),

pa.field('mask_threshold', pa.float64()),

pa.field('overlap_mask_area_threshold', pa.float64()),

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64())),

pa.field('label', pa.list_(pa.string())),

pa.field('mask',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=100

),

list_size=100

),

)),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-IMAGE-TO-TEXT | |

Any parameter that is not part of the required inputs list will be forwarded as a key/value pair to the underlying model’s generate method. If the additional input is not supported by the model, an error will be returned.

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.list_( #required

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

# pa.field('max_new_tokens', pa.int64()), # optional

])

output_schema = pa.schema([

pa.field('generated_text', pa.list_(pa.string())),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-OBJECT-DETECTION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('threshold', pa.float64()),

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64())),

pa.field('label', pa.list_(pa.string())),

pa.field('box',

pa.list_( # dynamic output, i.e. dynamic number of boxes per input image, each sublist contains the 4 box coordinates

pa.list_(

pa.int64(),

list_size=4

),

),

),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-QUESTION-ANSWERING | |

Schemas:

input_schema = pa.schema([

pa.field('question', pa.string()),

pa.field('context', pa.string()),

pa.field('top_k', pa.int64()),

pa.field('doc_stride', pa.int64()),

pa.field('max_answer_len', pa.int64()),

pa.field('max_seq_len', pa.int64()),

pa.field('max_question_len', pa.int64()),

pa.field('handle_impossible_answer', pa.bool_()),

pa.field('align_to_words', pa.bool_()),

])

output_schema = pa.schema([

pa.field('score', pa.float64()),

pa.field('start', pa.int64()),

pa.field('end', pa.int64()),

pa.field('answer', pa.string()),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-STABLE-DIFFUSION-TEXT-2-IMG | |

Schemas:

input_schema = pa.schema([

pa.field('prompt', pa.string()),

pa.field('height', pa.int64()),

pa.field('width', pa.int64()),

pa.field('num_inference_steps', pa.int64()), # optional

pa.field('guidance_scale', pa.float64()), # optional

pa.field('negative_prompt', pa.string()), # optional

pa.field('num_images_per_prompt', pa.string()), # optional

pa.field('eta', pa.float64()) # optional

])

output_schema = pa.schema([

pa.field('images', pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=128

),

list_size=128

)),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-SUMMARIZATION | |

Any parameter that is not part of the required inputs list will be forwarded as a key/value pair to the underlying model’s generate method. If the additional input is not supported by the model, an error will be returned.

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()),

pa.field('return_text', pa.bool_()),

pa.field('return_tensors', pa.bool_()),

pa.field('clean_up_tokenization_spaces', pa.bool_()),

# pa.field('extra_field', pa.int64()), # every extra field you specify will be forwarded as a key/value pair

])

output_schema = pa.schema([

pa.field('summary_text', pa.string()),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-TEXT-CLASSIFICATION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()), # required

pa.field('top_k', pa.int64()), # optional

pa.field('function_to_apply', pa.string()), # optional

])

output_schema = pa.schema([

pa.field('label', pa.list_(pa.string(), list_size=2)), # list with the same number of items as top_k; list_size can be skipped but may lead to worse performance

pa.field('score', pa.list_(pa.float64(), list_size=2)), # list with the same number of items as top_k; list_size can be skipped but may lead to worse performance

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-TRANSLATION | |

Any parameter that is not part of the required inputs list will be forwarded as a key/value pair to the underlying model’s generate method. If the additional input is not supported by the model, an error will be returned.

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()), # required

pa.field('return_tensors', pa.bool_()), # optional

pa.field('return_text', pa.bool_()), # optional

pa.field('clean_up_tokenization_spaces', pa.bool_()), # optional

pa.field('src_lang', pa.string()), # optional

pa.field('tgt_lang', pa.string()), # optional

# pa.field('extra_field', pa.int64()), # every extra field you specify will be forwarded as a key/value pair

])

output_schema = pa.schema([

pa.field('translation_text', pa.string()),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-ZERO-SHOT-CLASSIFICATION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs', pa.string()), # required

pa.field('candidate_labels', pa.list_(pa.string(), list_size=2)), # required

pa.field('hypothesis_template', pa.string()), # optional

pa.field('multi_label', pa.bool_()), # optional

])

output_schema = pa.schema([

pa.field('sequence', pa.string()),

pa.field('scores', pa.list_(pa.float64(), list_size=2)), # same as number of candidate labels; list_size can be skipped but may result in slightly worse performance

pa.field('labels', pa.list_(pa.string(), list_size=2)), # same as number of candidate labels; list_size can be skipped but may result in slightly worse performance

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-ZERO-SHOT-IMAGE-CLASSIFICATION | |

Schemas:

input_schema = pa.schema([

pa.field('inputs', # required

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=100

),

list_size=100

)),

pa.field('candidate_labels', pa.list_(pa.string(), list_size=2)), # required

pa.field('hypothesis_template', pa.string()), # optional

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64(), list_size=2)), # same as number of candidate labels

pa.field('label', pa.list_(pa.string(), list_size=2)), # same as number of candidate labels

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-ZERO-SHOT-OBJECT-DETECTION | |

Schemas:

input_schema = pa.schema([

pa.field('images',

pa.list_(

pa.list_(

pa.list_(

pa.int64(),

list_size=3

),

list_size=640

),

list_size=480

)),

pa.field('candidate_labels', pa.list_(pa.string(), list_size=3)),

pa.field('threshold', pa.float64()),

# pa.field('top_k', pa.int64()), # we want the model to return exactly the number of predictions, we shouldn't specify this

])

output_schema = pa.schema([

pa.field('score', pa.list_(pa.float64())), # variable output, depending on detected objects

pa.field('label', pa.list_(pa.string())), # variable output, depending on detected objects

pa.field('box',

pa.list_( # dynamic output, i.e. dynamic number of boxes per input image, each sublist contains the 4 box coordinates

pa.list_(

pa.int64(),

list_size=4

),

),

),

])

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-SENTIMENT-ANALYSIS | Hugging Face Sentiment Analysis |

| Wallaroo Framework | Reference |

|---|---|

| Framework.HUGGING-FACE-TEXT-GENERATION | |

Any parameter that is not part of the required inputs list will be forwarded as a key/value pair to the underlying model’s generate method. If the additional input is not supported by the model, an error will be returned.

input_schema = pa.schema([

pa.field('inputs', pa.string()),

pa.field('return_tensors', pa.bool_()), # optional

pa.field('return_text', pa.bool_()), # optional

pa.field('return_full_text', pa.bool_()), # optional

pa.field('clean_up_tokenization_spaces', pa.bool_()), # optional

pa.field('prefix', pa.string()), # optional

pa.field('handle_long_generation', pa.string()), # optional

# pa.field('extra_field', pa.int64()), # every extra field you specify will be forwarded as a key/value pair

])

output_schema = pa.schema([

pa.field('generated_text', pa.list_(pa.string(), list_size=1))

])

| Parameter | Description |

|---|---|

| Web Site | https://pytorch.org/ |

| Supported Libraries | |

| Framework | Framework.PYTORCH aka pytorch |

| Supported File Types | pt or pth in TorchScript format |

| Runtime | Containerized aka mlflow |

Sci-kit Learn aka SKLearn.

| Parameter | Description |

|---|---|

| Web Site | https://scikit-learn.org/stable/index.html |

| Supported Libraries | |

| Framework | Framework.SKLEARN aka sklearn |

| Runtime | Containerized aka tensorflow / mlflow |

SKLearn schema follows a different format than other models. To prevent inputs from being out of order, the inputs should be submitted in a single row in the order the model is trained to accept, with all of the data types being the same. For example, the following DataFrame has 4 columns, each column a float.

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

For submission to an SKLearn model, the data input schema will be a single array with 4 float values.

input_schema = pa.schema([

pa.field('inputs', pa.list_(pa.float64(), list_size=4))

])

When submitting as an inference, the DataFrame is converted to rows with the column data expressed as a single array. The data must be in the same order as the model expects, which is why the data is submitted as a single array rather than JSON labeled columns: this ensures that the data is submitted in the exact order the model is trained to accept.

Original DataFrame:

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

Converted DataFrame:

| | inputs |

|---|---|

| 0 | [5.1, 3.5, 1.4, 0.2] |

| 1 | [4.9, 3.0, 1.4, 0.2] |
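The conversion from labeled columns to a single ordered array can be sketched as follows. To stay dependency-free, plain dictionaries stand in for DataFrame rows; `to_inputs_rows` is a hypothetical helper name, and the explicit `column_order` argument is the point of the exercise, since it fixes the order the model was trained on.

```python
from typing import Any, Dict, List, Sequence


def to_inputs_rows(
    records: Sequence[Dict[str, Any]], column_order: Sequence[str]
) -> List[Dict[str, List[float]]]:
    """Pack each record's values into one 'inputs' array in a fixed column order."""
    return [
        {"inputs": [float(record[column]) for column in column_order]}
        for record in records
    ]


column_order = [
    "sepal length (cm)",
    "sepal width (cm)",
    "petal length (cm)",
    "petal width (cm)",
]
records = [
    {"sepal length (cm)": 5.1, "sepal width (cm)": 3.5, "petal length (cm)": 1.4, "petal width (cm)": 0.2},
    # Key order differs here on purpose; column_order still decides the array order.
    {"petal width (cm)": 0.2, "petal length (cm)": 1.4, "sepal width (cm)": 3.0, "sepal length (cm)": 4.9},
]

for row in to_inputs_rows(records, column_order):
    print(row)
```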

SKLearn outputs that represent predictions or probabilities are labeled in the output schema set for the model when it is uploaded to Wallaroo. For example, a model that outputs either 1 or 0 would have the following output schema:

output_schema = pa.schema([

pa.field('predictions', pa.int32())

])

When used in Wallaroo, the inference result is contained in the out metadata as out.predictions.

pipeline.infer(dataframe)

| | time | in.inputs | out.predictions | check_failures |

|---|---|---|---|---|

| 0 | 2023-07-05 15:11:29.776 | [5.1, 3.5, 1.4, 0.2] | 0 | 0 |

| 1 | 2023-07-05 15:11:29.776 | [4.9, 3.0, 1.4, 0.2] | 0 | 0 |

| Parameter | Description |

|---|---|

| Web Site | https://www.tensorflow.org/api_docs/python/tf/keras/Model |

| Supported Libraries | |

| Framework | Framework.KERAS aka keras |

| Supported File Types | SavedModel format as .zip file and HDF5 format |

| Runtime | Containerized aka mlflow |

TensorFlow Keras SavedModel models are uploaded as a .zip file of the SavedModel format. For example, the Aloha sample TensorFlow model is stored in the directory alohacnnlstm:

├── saved_model.pb
└── variables
    ├── variables.data-00000-of-00002
    ├── variables.data-00001-of-00002
    └── variables.index

This is compressed into the .zip file alohacnnlstm.zip with the following command:

zip -r alohacnnlstm.zip alohacnnlstm/

See the SavedModel guide for full details.

Wallaroo supports the H5 (HDF5) format for TensorFlow Keras models.

| Parameter | Description |

|---|---|

| Web Site | https://xgboost.ai/ |

| Supported Libraries | xgboost==1.7.4 |

| Framework | Framework.XGBOOST aka xgboost |

| Supported File Types | pickle (XGB files are not supported.) |

| Runtime | Containerized aka tensorflow / mlflow |

XGBoost schema follows a different format than other models. To prevent inputs from being out of order, the inputs should be submitted in a single row in the order the model is trained to accept, with all of the data types being the same. If a model is originally trained to accept inputs of different data types, it will need to be retrained to only accept one data type for each column - typically pa.float64() is a good choice.

For example, the following DataFrame has 4 columns, each column a float.

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

For submission to an XGBoost model, the data input schema will be a single array with 4 float values.

input_schema = pa.schema([

pa.field('inputs', pa.list_(pa.float64(), list_size=4))

])

When submitting as an inference, the DataFrame is converted to rows with the column data expressed as a single array. The data must be in the same order as the model expects, which is why the data is submitted as a single array rather than JSON labeled columns: this ensures that the data is submitted in the exact order the model is trained to accept.

Original DataFrame:

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

Converted DataFrame:

| | inputs |

|---|---|

| 0 | [5.1, 3.5, 1.4, 0.2] |

| 1 | [4.9, 3.0, 1.4, 0.2] |

Outputs for XGBoost are labeled based on the trained model outputs. For this example, the output is simply a single output listed as output. In the Wallaroo inference result, it is grouped with the metadata out as out.output.

output_schema = pa.schema([

pa.field('output', pa.int32())

])

pipeline.infer(dataframe)

| | time | in.inputs | out.output | check_failures |

|---|---|---|---|---|

| 0 | 2023-07-05 15:11:29.776 | [5.1, 3.5, 1.4, 0.2] | 0 | 0 |

| 1 | 2023-07-05 15:11:29.776 | [4.9, 3.0, 1.4, 0.2] | 0 | 0 |

| Parameter | Description |

|---|---|

| Web Site | https://www.python.org/ |

| Supported Libraries | python==3.8 |

| Framework | Framework.CUSTOM aka custom |

| Runtime | Containerized aka mlflow |

Arbitrary Python models, also known as Bring Your Own Predict (BYOP), allow for custom model deployments with supporting scripts and artifacts. These are used with pre-trained models (PyTorch, TensorFlow, etc.) along with whatever supporting artifacts they require. Supporting artifacts can include other Python modules, model files, etc. These are zipped with all scripts, artifacts, and a requirements.txt file that indicates which other Python libraries need to be imported that are outside of the typical Wallaroo platform.

Contrast this with Wallaroo Python models - aka “Python steps”. These are standalone python scripts that use the python libraries natively supported by the Wallaroo platform. These are used for either simple model deployment (such as ARIMA Statsmodels), or data formatting such as the postprocessing steps. A Wallaroo Python model will be composed of one Python script that matches the Wallaroo requirements.

Arbitrary Python (BYOP) models are uploaded to Wallaroo via a ZIP file with the following components:

| Artifact | Type | Description |

|---|---|---|

| Python scripts aka .py files with classes that extend mac.inference.Inference and mac.inference.creation.InferenceBuilder | Python Script | Extend the classes mac.inference.Inference and mac.inference.creation.InferenceBuilder. These are included with the Wallaroo SDK. Further details are in Arbitrary Python Script Requirements. Note that there are no specified naming requirements for the classes that extend mac.inference.Inference and mac.inference.creation.InferenceBuilder: any qualified class name is sufficient as long as these two classes are extended as defined below. |

| requirements.txt | Python requirements file | This sets the Python libraries used for the arbitrary Python model. These libraries should be targeted for Python 3.8 compliance. These requirements and the versions of libraries should be exactly the same between creating the model and deploying it in Wallaroo. This ensures that the script and methods will function exactly the same as during the model creation process. |

| Other artifacts | Files | Other models, files, and other artifacts used in support of this model. |

For example, if the arbitrary Python model is named vgg_clustering, the contents may have the following structure, with vgg_clustering as the storage directory:

vgg_clustering\

feature_extractor.h5

kmeans.pkl

custom_inference.py

requirements.txt

Note the inclusion of the custom_inference.py file. This file name is not required - any Python script or scripts that extend the classes listed above are sufficient. This Python script could have been named vgg_custom_model.py or any other name as long as it includes the extension of the classes listed above.

The sample arbitrary python model file is created with the command zip -r vgg_clustering.zip vgg_clustering/.
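Where the zip command-line tool is unavailable, the same archive could be produced from Python. The following is a minimal sketch using only the standard library; the helper name zip_model_directory is hypothetical and not part of the Wallaroo SDK. It keeps the top-level directory name in each archive entry, which is what zip -r does in the command above.

```python
import zipfile
from pathlib import Path
from typing import List


def zip_model_directory(model_dir: Path, zip_path: Path) -> List[str]:
    """Zip every file under model_dir, keeping the directory name as a prefix.

    Hypothetical helper for illustration; returns the archive entry names.
    """
    model_dir = Path(model_dir)
    names: List[str] = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(model_dir.rglob("*")):
            if path.is_file():
                # Entries are stored as "vgg_clustering/<file>", like `zip -r`.
                arcname = path.relative_to(model_dir.parent).as_posix()
                archive.write(path, arcname)
                names.append(arcname)
    return names


# Example call, assuming the vgg_clustering directory exists:
# zip_model_directory(Path("vgg_clustering"), Path("vgg_clustering.zip"))
```

The output file should be written outside the model directory so that the archive does not try to include itself.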

Wallaroo Arbitrary Python uses the Wallaroo SDK mac module, included in the Wallaroo SDK 2023.2.1 and above. See the Wallaroo SDK Install Guides for instructions on installing the Wallaroo SDK.

The entry point of the arbitrary python model is any python script that extends the following classes. These are included with the Wallaroo SDK. The required methods that must be overridden are specified in each section below.

The mac.inference.Inference interface serves model inferences based on submitted input. Its purpose is to serve inferences for any supported arbitrary model framework (e.g. scikit, keras, etc.).

classDiagram

class Inference {

<<Abstract>>

+model Optional[Any]

+expected_model_types()* Set

+predict(input_data: InferenceData)* InferenceData

-raise_error_if_model_is_not_assigned() None

-raise_error_if_model_is_wrong_type() None

}

mac.inference.creation.InferenceBuilder builds a concrete Inference, i.e. instantiates an Inference object, loads the appropriate model, and assigns the model to the Inference object.

classDiagram

class InferenceBuilder {

+create(config InferenceConfig) * Inference

-inference()* Any

}

| Object | Type | Description |
|---|---|---|
| model | Optional[Any] | An optional list of models that match the supported frameworks from wallaroo.framework.Framework included in the arbitrary Python script. Note that this is optional: no models are actually required. A BYOP model can refer to one or more specific models, be used for data processing and reshaping for later pipeline steps, or serve other needs. |

| Method | Returns | Description |
|---|---|---|
| expected_model_types (Required) | Set | Returns a Set of models expected for the inference as defined by the developer. Typically this is a set of one. Wallaroo checks the expected model types to verify that the model submitted through the InferenceBuilder method matches what this Inference class expects. |
| _predict (input_data: mac.types.InferenceData) (Required) | mac.types.InferenceData | The entry point for the Wallaroo inference, with input and output parameters that are defined when the model is updated. An InferenceDataValidationError exception is raised when the input data does not match mac.types.InferenceData. |
| raise_error_if_model_is_not_assigned | N/A | Error when expected_model_types is not set. |
| raise_error_if_model_is_wrong_type | N/A | Error when the model does not match the expected_model_types. |
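The pattern described in the table above can be sketched as follows. This sketch does not import the real mac module: the Inference base class below is a local stand-in written from the class diagram, a plain dict stands in for mac.types.InferenceData, and ThresholdModel and ThresholdInference are hypothetical names used only for illustration. The behavior of the real SDK classes may differ in detail.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Optional, Set

InferenceData = Dict[str, Any]  # stand-in for mac.types.InferenceData


class Inference(ABC):
    """Local stand-in mirroring the class diagram; NOT the real mac class."""

    def __init__(self) -> None:
        self._model: Optional[Any] = None

    @property
    @abstractmethod
    def expected_model_types(self) -> Set[type]:
        """Model types this Inference accepts."""

    @property
    def model(self) -> Optional[Any]:
        return self._model

    @model.setter
    def model(self, model: Any) -> None:
        # Reject models that are not one of the expected types.
        if not isinstance(model, tuple(self.expected_model_types)):
            raise TypeError(f"Unexpected model type: {type(model).__name__}")
        self._model = model

    def predict(self, input_data: InferenceData) -> InferenceData:
        # Assumed check: fail early if no model has been assigned yet.
        if self._model is None:
            raise ValueError("No model has been assigned.")
        return self._predict(input_data)

    @abstractmethod
    def _predict(self, input_data: InferenceData) -> InferenceData:
        """Subclass-specific inference logic."""


class ThresholdModel:
    """Hypothetical toy model used in place of a trained model."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def classify(self, values):
        return [int(v > self.threshold) for v in values]


class ThresholdInference(Inference):
    """Concrete Inference: overrides the two required members."""

    @property
    def expected_model_types(self) -> Set[type]:
        return {ThresholdModel}

    def _predict(self, input_data: InferenceData) -> InferenceData:
        return {"labels": self.model.classify(input_data["values"])}
```

In this sketch the type check lives in the model setter, so an unexpected model is rejected at assignment time rather than at the first inference request.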

InferenceBuilder builds a concrete Inference, i.e. instantiates an Inference object, loads the appropriate model and assigns the model to the Inference.

classDiagram

class InferenceBuilder {

+create(config InferenceConfig) * Inference

-inference()* Any

}

Each model that is included requires its own InferenceBuilder. InferenceBuilder loads one model, then submits it to the Inference class when created. The Inference class checks this class against its expected_model_types() Set.

| Method | Returns | Description |
|---|---|---|
| create(config mac.config.inference.CustomInferenceConfig) (Required) | The custom Inference instance. | Creates an Inference subclass, then assigns a model and attributes. The CustomInferenceConfig is used to retrieve config.model_path, a pathlib.Path object pointing to the folder where the model artifacts are saved. Every artifact loaded must be relative to config.model_path. This is set when the arbitrary Python .zip file is uploaded and the environment for running it in Wallaroo is set. For example, the artifact vgg_clustering\feature_extractor.h5 would be loaded from config.model_path / "feature_extractor.h5". The model loaded must match an existing module. For our example, this is from sklearn.cluster import KMeans, and this must match the Inference expected_model_types. |
| inference | The custom Inference instance. | Returns the instantiated custom Inference object created from the create method. |
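The builder pattern from the table above can be sketched as follows. As an illustration only, this sketch avoids importing the real mac module: StandInConfig, StandInInference, and StandInInferenceBuilder are hypothetical stand-ins, and only the model_path attribute of the configuration is modeled. It shows an artifact being resolved relative to model_path with the pathlib "/" operator and then attached to the Inference object.

```python
import pickle
from dataclasses import dataclass
from pathlib import Path
from typing import Any, Optional


@dataclass
class StandInConfig:
    """Hypothetical stand-in for CustomInferenceConfig; only model_path is modeled."""

    model_path: Path


class StandInInference:
    """Hypothetical stand-in for an Inference subclass that holds one model."""

    def __init__(self) -> None:
        self.model: Optional[Any] = None


class StandInInferenceBuilder:
    """Sketch of the builder: load one artifact and attach it to an Inference."""

    @property
    def inference(self) -> StandInInference:
        # Returns a fresh, empty Inference object.
        return StandInInference()

    def create(self, config: StandInConfig) -> StandInInference:
        inference = self.inference
        inference.model = self._load_model(config.model_path)
        return inference

    @staticmethod
    def _load_model(model_path: Path) -> Any:
        # Artifacts are resolved relative to model_path with pathlib's "/" operator.
        with open(model_path / "kmeans.pkl", "rb") as handle:
            return pickle.load(handle)
```

The artifact name kmeans.pkl is taken from the sample directory listing earlier in this guide; any other artifact would be resolved the same way.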

Arbitrary Python models always run in the containerized model runtime.

| Parameter | Description |

|---|---|

| Web Site | https://mlflow.org |

| Supported Libraries | mlflow==1.30.0 |

| Runtime | Containerized aka mlflow |

For models that do not fall under the supported model frameworks, organizations can use containerized MLFlow ML Models.

This guide details how to add ML Models from a model registry service into Wallaroo.

Wallaroo supports both public and private containerized model registries. See the Wallaroo Private Containerized Model Container Registry Guide for details on how to configure a Wallaroo instance with a private model registry.

Wallaroo users can register their trained MLFlow ML Models from a containerized model container registry into their Wallaroo instance and perform inferences with it through a Wallaroo pipeline.

As of this time, Wallaroo only supports MLFlow 1.30.0 containerized models. For information on how to containerize an MLFlow model, see the MLFlow Documentation.

Wallaroo frameworks are listed in the wallaroo.framework.Framework class. The following demonstrates listing all supported frameworks.

from wallaroo.framework import Framework

[e.value for e in Framework]

['onnx',

'tensorflow',

'python',

'keras',

'sklearn',

'pytorch',

'xgboost',

'hugging-face-feature-extraction',

'hugging-face-image-classification',

'hugging-face-image-segmentation',

'hugging-face-image-to-text',

'hugging-face-object-detection',

'hugging-face-question-answering',

'hugging-face-stable-diffusion-text-2-img',

'hugging-face-summarization',

'hugging-face-text-classification',

'hugging-face-translation',

'hugging-face-zero-shot-classification',

'hugging-face-zero-shot-image-classification',

'hugging-face-zero-shot-object-detection',

'hugging-face-sentiment-analysis',

'hugging-face-text-generation']

How to upload and use ONNX ML Models with Wallaroo

How to upload and use Containerized MLFlow with Wallaroo

How to upload and use Registry ML Models with Wallaroo

How to upload and use Python Models as Wallaroo Pipeline Steps

How to upload and use PyTorch ML Models with Wallaroo

How to upload and use SKLearn ML Models with Wallaroo

How to upload and use Hugging Face ML Models with Wallaroo

How to upload and use TensorFlow ML Models with Wallaroo

How to upload and use TensorFlow Keras ML Models with Wallaroo

How to upload and use XGBoost ML Models with Wallaroo